Original link: http://gaocegege.com/Blog/vector

With the rise of LLMs, vector databases have become a hot topic. With just a few lines of Python, a vector database can give your LLM a cheap but extremely effective “external brain”. But do we really need a (specialized) vector database?

Why do LLMs need vector search?

First, a brief introduction to why LLMs need vector search. Vector search is a very old problem: given an object, find the objects most similar to it in a set. Content such as text or images can be converted into vector representations, which turns the question of how similar two texts or images are into the question of how similar two vectors are.

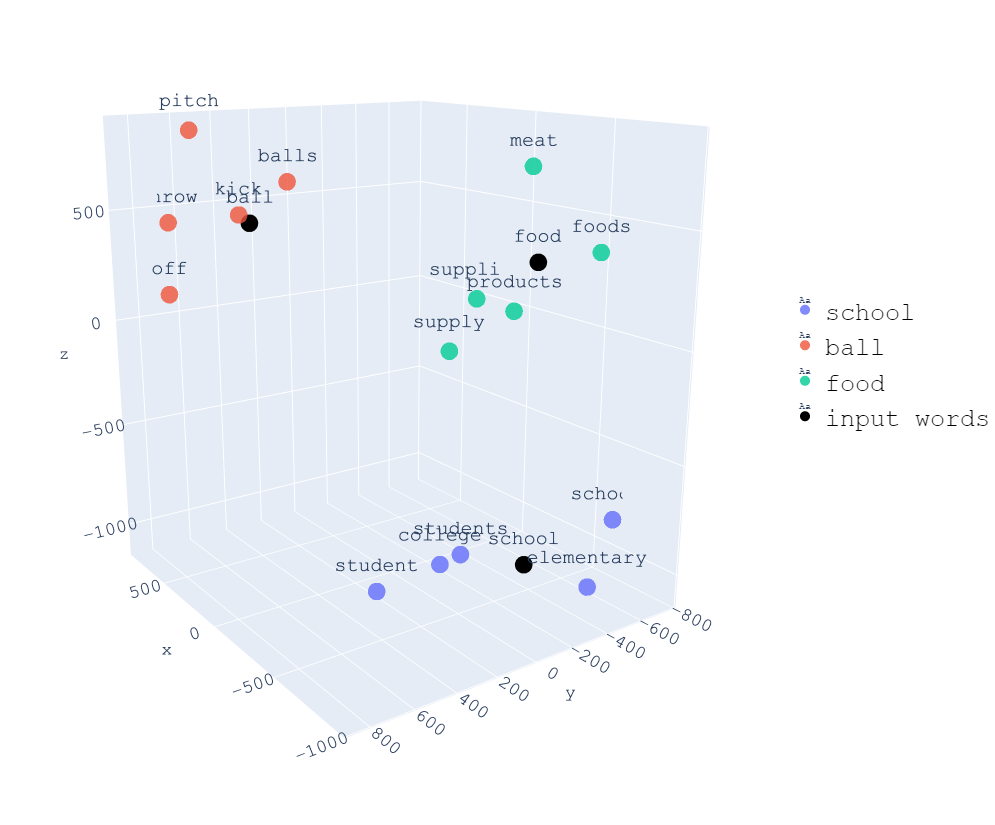

Text search (credit: https://ift.tt/qsQ8356)

In the example above, the words are converted into 3D vectors, so the similarity between words can be shown intuitively in 3D space. For example, student is more similar to school than to food.
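
To make "similarity between vectors" concrete, here is a minimal sketch using cosine similarity, one common similarity measure. The three 3D vectors are made up for illustration and are not the values from the figure.

```python
import math

# Hypothetical 3D embeddings, invented for illustration only.
WORDS = {
    "student": (0.9, 0.8, 0.1),
    "school": (0.8, 0.9, 0.2),
    "food": (0.1, 0.2, 0.9),
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


student_school = cosine_similarity(WORDS["student"], WORDS["school"])
student_food = cosine_similarity(WORDS["student"], WORDS["food"])
print(f"student vs school: {student_school:.3f}")
print(f"student vs food:   {student_food:.3f}")
```

With these invented values, student scores higher against school than against food, which matches the intuition in the figure.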

Back to LLMs: the limit on context length is a headache. For example, the context length of ChatGPT (GPT-3.5) is limited to 4k tokens. One of the most impressive capabilities of LLMs is in-context learning, so this limit hurts the experience of using the model. Vector search works around the problem neatly:

- Split text that exceeds the context length limit into shorter chunks, and convert each chunk into a vector (embedding).

- Convert the prompt into a vector (embedding) as well.

- Use the prompt vector to search for the most similar chunk vectors.

- Concatenate the text of the most similar chunks with the prompt and feed the result to the LLM.
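
The steps above can be sketched in a few lines of Python. This is only a toy: `embed` is a bag-of-words stand-in for a real embedding model, chunks are single sentences, and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def split_into_chunks(text):
    """Split a long text into sentence-sized chunks."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def build_llm_input(document, prompt, top_k=1):
    chunks = split_into_chunks(document)
    chunk_vectors = [embed(chunk) for chunk in chunks]  # step 1: embed chunks
    prompt_vector = embed(prompt)                       # step 2: embed prompt
    ranked = sorted(                                    # step 3: vector search
        range(len(chunks)),
        key=lambda i: cosine_similarity(prompt_vector, chunk_vectors[i]),
        reverse=True,
    )
    context = "\n".join(chunks[i] for i in ranked[:top_k])
    return f"Context:\n{context}\n\nQuestion: {prompt}"  # step 4: concatenate text


document = (
    "pgvector is a Postgres extension that adds a vector data type. "
    "Hnswlib builds an approximate nearest neighbor index in memory. "
    "Bananas are rich in potassium and are usually yellow when ripe."
)
prompt = "Which fruit is rich in potassium?"
llm_input = build_llm_input(document, prompt)
print(llm_input)
```

Note that what goes into the LLM is text (the retrieved chunks plus the prompt); the vectors are only used to decide which chunks to retrieve.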

This is equivalent to giving the LLM an external memory from which it can retrieve the most relevant information. Vector search is what makes this memory possible. For more details, you can read this article and this article, which explain it more clearly.

Why are vector databases so popular?

One of the most important reasons vector databases have become an essential part of the LLM stack is ease of use. Combined with an OpenAI embedding model (such as text-embedding-ada-002), it takes only about ten lines of code to convert the prompt into a vector and run a vector search:

    # Assumes `openai` is imported and that `client` (the vector database
    # client) and `EMBEDDING_MODEL` are defined elsewhere.
    def query(query, collection_name, top_k=20):
        # Creates embedding vector from user query
        embedded_query = openai.Embedding.create(
            input=query,
            model=EMBEDDING_MODEL,
        )["data"][0]["embedding"]

        near_vector = {"vector": embedded_query}

        # Queries input schema with vectorized user query
        query_result = (
            client.query
            .get(collection_name)
            .with_near_vector(near_vector)
            .with_limit(top_k)
            .do()
        )

        return query_result

Vector search mainly plays the role of recall. In layman’s terms, recall means finding the closest objects in a candidate set. For LLMs, the candidate set is all the chunks, and the closest objects are the chunks most similar to the prompt. Vector search is the main way recall is implemented in LLM applications. It is simple to implement, and the hardest part, converting text to vectors, is handled by the OpenAI embedding model. What remains is a clean, self-contained vector search problem, which current vector databases handle very well, so the whole process is smooth.

A vector database, as the name suggests, is a database designed specifically for the vector data type. Exact similarity search is expensive: each query has to be compared against every vector in the set, which is O(n) distance computations per query, and O(n^2) if all vectors are compared pairwise. The industry therefore came up with Approximate Nearest Neighbor (ANN) algorithms. A vector database uses an ANN algorithm to precompute a vector index, trading space (and some accuracy) for time, which greatly speeds up similarity search. This is similar to an index in a traditional database.

So vector databases are both fast and easy to use, a perfect match for LLMs! (?)

Maybe a general-purpose database would be better?

We have covered the advantages of vector databases, so what are their problems? A blog post from SingleStore gives a good answer:

Vectors and vector search are a data type and query processing approach, not a foundation for a new way of processing data. Using a specialty vector database (SVDB) will lead to the usual problems we see (and solve) again and again with our customers who use multiple specialty systems: redundant data, excessive data movement, lack of agreement on data values among distributed components, extra labor expense for specialized skills, extra licensing costs, limited query language power, programmability and extensibility, limited tool integration, and poor data integrity and availability compared with a true DBMS.

Two of these issues seem most important to me. The first is data consistency. In the prototyping stage, vector databases are a great fit, and ease of use trumps everything. But a vector database is an independent system, completely decoupled from other data stores (such as OLTP databases and OLAP data lakes). Data therefore has to be synchronized, transferred, and processed across multiple systems.

Imagine your data is already stored in an OLTP database such as PostgreSQL. To run vector search with an independent vector database, you first have to pull the data out of the database, convert each record into a vector with a service such as OpenAI Embedding, and then sync the vectors into the dedicated vector database. This adds a lot of complexity. Worse, if a user deletes a row in PostgreSQL but the deletion is not applied in the vector database, the data becomes inconsistent. This can be a serious problem in a production environment.

    -- Update the embedding column for the documents table
    UPDATE documents SET embedding = openai_embedding(content) WHERE length(embedding) = 0;

    -- Create an index on the embedding column
    CREATE INDEX ON documents USING ivfflat (embedding vector_l2_ops) WITH (lists = 100);

    -- Query the similar embeddings
    SELECT * FROM documents ORDER BY embedding <-> openai_embedding('hello world') LIMIT 5;

If all of this is done in a general-purpose database, as in the SQL above (which assumes a function such as openai_embedding is available in the database), the workflow can be even simpler than with an independent vector database. Vectors are just one more data type, not a separate system, so data consistency is no longer an issue.
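
The consistency argument can be illustrated with a small sketch. It uses SQLite from the Python standard library as a stand-in for Postgres, a plain dict as a stand-in for a specialized vector database, and a hypothetical `embed` function in place of a real embedding service.

```python
import sqlite3


def embed(text):
    """Hypothetical stand-in for an embedding service (counts of a, b, c)."""
    return ",".join(str(text.count(ch)) for ch in "abc")


db = sqlite3.connect(":memory:")
rows = [(1, "abc"), (2, "aab")]

# Setup A: rows live in the database, vectors live in a separate system.
db.execute("CREATE TABLE docs_a (id INTEGER PRIMARY KEY, content TEXT)")
separate_vector_store = {}  # plays the role of a specialized vector database
for doc_id, content in rows:
    db.execute("INSERT INTO docs_a VALUES (?, ?)", (doc_id, content))
    separate_vector_store[doc_id] = embed(content)  # second write, second system

db.execute("DELETE FROM docs_a WHERE id = 2")  # the vector store is never told
live_ids = {row[0] for row in db.execute("SELECT id FROM docs_a")}
orphaned_vectors = set(separate_vector_store) - live_ids

# Setup B: the embedding is just another column of the same row.
db.execute(
    "CREATE TABLE docs_b (id INTEGER PRIMARY KEY, content TEXT, embedding TEXT)"
)
for doc_id, content in rows:
    db.execute(
        "INSERT INTO docs_b VALUES (?, ?, ?)", (doc_id, content, embed(content))
    )

db.execute("DELETE FROM docs_b WHERE id = 2")  # row and vector go together
remaining_embeddings = db.execute("SELECT id, embedding FROM docs_b").fetchall()

print("orphaned vectors in setup A:", orphaned_vectors)
print("embeddings left in setup B:", remaining_embeddings)
```

In setup A the deleted document's vector is still searchable unless extra synchronization code is written; in setup B there is nothing to synchronize.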

The second is the query language. The query languages of vector databases are usually designed specifically for vector search, so other kinds of queries are often restricted. Take metadata filtering, where users need to filter on certain metadata fields: some vector databases support only a limited set of filter operators.

In addition, the data types supported for metadata are very limited, usually only strings, numbers, lists of strings, and booleans. This makes complex metadata queries awkward.

If a traditional database supports the vector data type, none of these problems arise. Data consistency comes for free, since the OLTP or OLAP database in production is existing infrastructure. The query language problem disappears as well: the vector is just another data type in the database, so vector queries can use the database’s native query language, such as SQL.

A more detailed explanation

However, it would be unfair to look only at the shortcomings of vector databases. I can easily list some counterarguments:

- Vector databases are very easy to use, and users don’t need to care about the underlying implementation details.

- The performance of vector databases is unmatched by traditional databases. The whole database is designed for vector search, so it has a natural performance advantage.

- Although metadata filtering is limited, the capabilities vector databases provide already meet most business scenarios.

How should we respond to these points? I will share my views by answering them one by one.

Q: Vector databases are very easy to use, and users don’t need to care about the underlying implementation details.

The answer is yes. Vector databases are indeed easy to use, and users don’t need to care about the underlying implementation details. But compared with traditional databases, this ease of use comes mainly from domain-specific abstraction. Because a vector database only has to handle the vector scenario, it can design concepts specifically for it, and it can optimize for and support Python, the most commonly used programming language in machine learning.

These benefits come from focusing on the vector scenario, not from being a vector database. A traditional database that supports the vector data type can offer similar ease of use. In addition to its standard interfaces, it can provide a Python SDK similar to those of vector databases, as well as other tool integrations, to cover most scenarios. On top of that, it can serve more complex queries through the standard SQL interface.

Another part of the ease of use comes from distribution. Most vector databases are designed to scale out horizontally to meet the user’s data volume and QPS requirements. However, this is not unique to vector databases either: traditional databases can also be distributed. The more important question is how many users actually have enough data and QPS to need distributed capabilities. Put another way, if the number of vectors is not large, why introduce a new database?

Q: The performance of vector databases is unmatched by traditional databases. The whole database is designed for vector search, so it has a natural performance advantage.

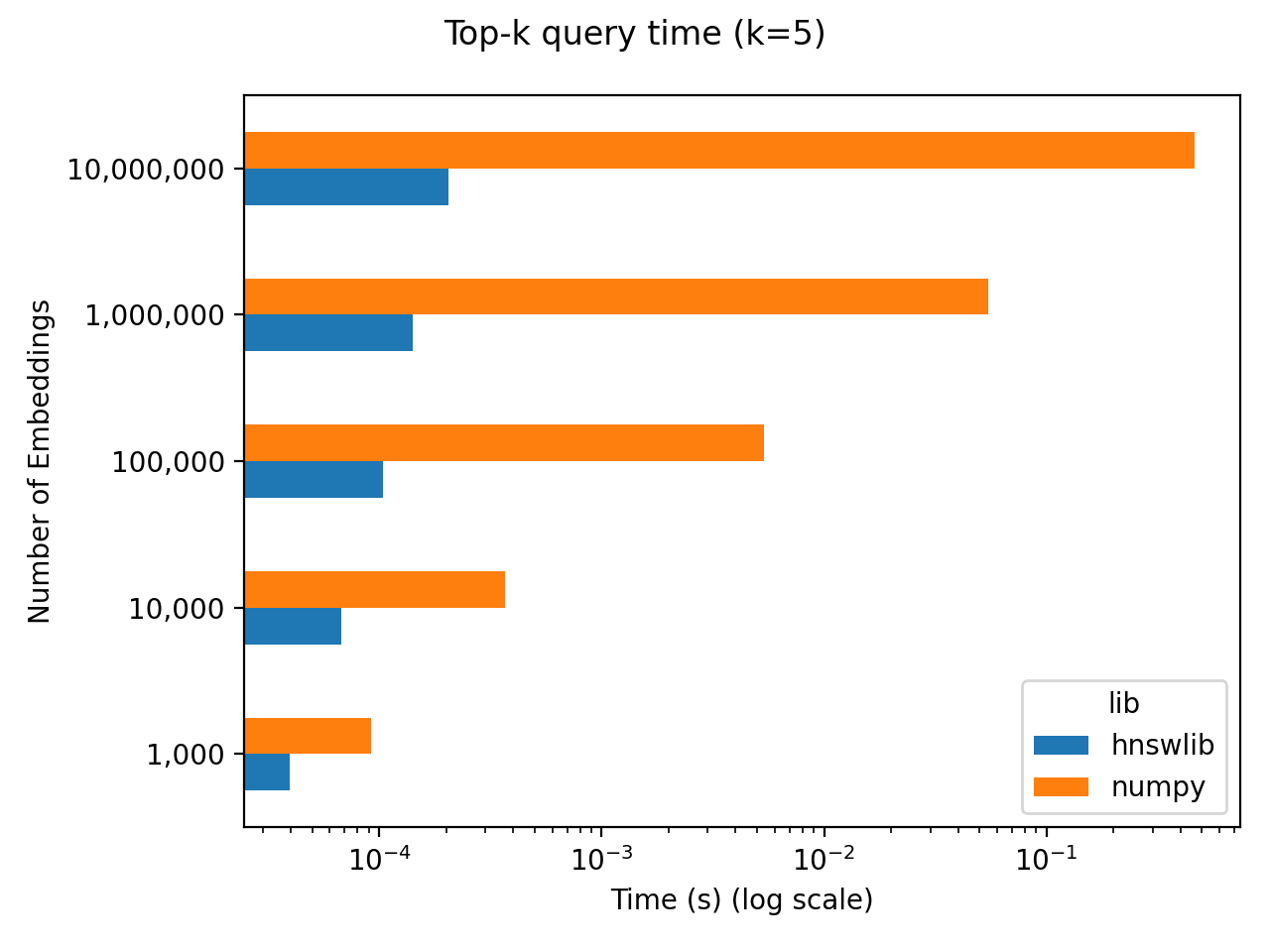

Regarding performance, first let’s analyze where the performance bottleneck is in the LLM scenario. The figure below is a naive benchmark for vector retrieval. In this benchmark, 256-dimensional N vectors are randomly initialized, and then the query time of top-5 nearest neighbors is counted under different scales of N. And tested in two different ways:

- Numpy does calculations “on the fly”, performing fully accurate , non-ahead nearest neighbor calculations.

- Hnswlib Use Hnswlib to precompute approximate nearest neighbors.

Benchmark (credit: https://ift.tt/2E6FU0e)
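
For reference, the exact "on the fly" approach amounts to a linear scan. The sketch below uses only the Python standard library rather than NumPy, and a much smaller N than the benchmark, so it shows the technique and not the benchmark's timings.

```python
import heapq
import random


def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def exact_top_k(query, vectors, k=5):
    """Indices of the k vectors closest to query: scans everything, no index."""
    return heapq.nsmallest(
        k, range(len(vectors)), key=lambda i: squared_l2(query, vectors[i])
    )


rng = random.Random(0)
dim, n = 256, 1000  # 256 dimensions as in the benchmark; n kept small here
vectors = [[rng.random() for _ in range(dim)] for _ in range(n)]

query = vectors[42]  # a stored vector is its own nearest neighbor
top5 = exact_top_k(query, vectors)
print(top5)
```

Every query touches all n vectors, which is exactly the cost an ANN index such as HNSW tries to avoid.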

At a scale of 1M vectors, the latency of exact on-the-fly computation with NumPy is about 50 ms. Let’s use this as a baseline and compare it with the time taken by the LLM inference that follows the vector search. A 7B model takes about 10 s to run inference on 300 Chinese characters on an Nvidia A100 (40GB). So even an exact NumPy search over 1M vectors accounts for only about 0.5% of the end-to-end latency. In terms of latency, then, the gains a vector database brings are dwarfed by the latency of the LLM itself. What about throughput? The throughput of an LLM is far lower than that of a vector database, so I don’t think vector database throughput is the core issue in this scenario either.

So if performance is not the deciding factor, what determines the user’s choice? I think it is ease of use in a broad sense. This includes not only ease of use in the narrow sense, but also ease of operation and maintenance, and solutions to classic database problems such as consistency. Traditional databases already have very mature solutions to these problems, while vector databases are still in their infancy.
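
The arithmetic behind the 0.5% figure, using the two rough numbers quoted above (about 50 ms for the search, about 10 s for inference), can be written out as a quick check:

```python
vector_search_s = 0.050  # ~50 ms: exact search over 1M vectors (benchmark above)
llm_inference_s = 10.0   # ~10 s: 7B model inference on an A100 (quoted above)

# Search time relative to inference time, and as a share of the whole request.
share_of_inference = vector_search_s / llm_inference_s
share_of_total = vector_search_s / (vector_search_s + llm_inference_s)

# Best case for an ANN index: the search becomes free.
best_case_speedup = (vector_search_s + llm_inference_s) / llm_inference_s

print(f"search / inference:     {share_of_inference:.2%}")
print(f"search / end-to-end:    {share_of_total:.2%}")
print(f"best-case speedup:      {best_case_speedup:.3f}x")
```

Even if an index made the search instantaneous, the end-to-end request would get well under 1% faster with these numbers.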

Q: Although metadata filtering is limited, the capabilities vector databases provide already meet most business scenarios.

Metadata filtering is not only a matter of how many operators are supported. More importantly, it is a matter of data consistency. The metadata attached to a vector is data from the traditional database, and the vector itself is an index over that data. That being the case, why not store both the vectors and the metadata in the traditional database?

Vector support in traditional databases

Since we think a vector is just a new data type for traditional databases, let’s look at how a traditional database can support it, taking Postgres as an example. pgvector is an open source Postgres extension that adds a vector data type. By default pgvector performs exact nearest neighbor search, but it also supports building an IVFFlat index, which precomputes a structure for ANN search and trades some accuracy for performance.
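
To show the idea behind an IVFFlat-style index, here is a toy sketch. It is not pgvector's implementation: real implementations train the centroids with k-means, while this sketch simply samples random vectors as centroids, and the class and parameter names are hypothetical.

```python
import random


def squared_l2(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


class IVFFlatSketch:
    """Toy inverted-file index: vectors are grouped by their nearest centroid."""

    def __init__(self, vectors, n_lists, seed=0):
        self.vectors = vectors
        # Simplification: random vectors as centroids instead of k-means.
        self.centroids = random.Random(seed).sample(vectors, n_lists)
        self.lists = [[] for _ in range(n_lists)]
        for idx, vec in enumerate(vectors):
            self.lists[self._nearest_centroids(vec, 1)[0]].append(idx)

    def _nearest_centroids(self, vec, n):
        order = sorted(
            range(len(self.centroids)),
            key=lambda c: squared_l2(vec, self.centroids[c]),
        )
        return order[:n]

    def search(self, query, k, n_probe):
        """Scan only the n_probe lists whose centroids are closest to query."""
        candidates = [
            idx
            for c in self._nearest_centroids(query, n_probe)
            for idx in self.lists[c]
        ]
        candidates.sort(key=lambda i: squared_l2(query, self.vectors[i]))
        return candidates[:k]


rng = random.Random(1)
vectors = [[rng.random() for _ in range(8)] for _ in range(500)]
index = IVFFlatSketch(vectors, n_lists=10)
query = [rng.random() for _ in range(8)]

approx = index.search(query, k=5, n_probe=2)   # fast, may miss true neighbors
exact = index.search(query, k=5, n_probe=10)   # probes every list: exact
print("approximate:", approx)
print("exact:      ", exact)
```

Probing fewer lists means fewer distance computations but a chance of missing a true neighbor that landed in an unprobed list; probing all lists degenerates to exact search.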

pgvector already supports vectors well and is used by products such as Supabase. However, the indexing algorithms it supports are limited: at the time of writing, only the simplest one, IVFFlat, with no quantization or storage optimization. Moreover, pgvector’s index is designed for in-memory use and is not disk-friendly. In the traditional database ecosystem, vector indexing algorithms designed for disk, such as DiskANN, are also very valuable.

Extending pgvector is also difficult. pgvector is implemented in C, and although it has been open source for two years, it currently has only 3 contributors. The implementation is not complicated, so why not rewrite it in Rust?

Rewriting pgvector in Rust allows the code to be organized in a more modern way and makes it easier to extend. The Rust ecosystem is also rich: faiss and other libraries already have Rust bindings.

pgvecto.rs

Thus pgvecto.rs was born. pgvecto.rs currently supports exact vector queries and three distance operators. Index support is being designed and implemented. In addition to IVFFlat, we hope to support more indexing algorithms such as DiskANN, SPTAG, and ScaNN. Contributions and feedback are welcome!

    -- call the distance function through operators

    -- square Euclidean distance
    SELECT array[1, 2, 3] <-> array[3, 2, 1];
    -- dot product distance
    SELECT array[1, 2, 3] <#> array[3, 2, 1];
    -- cosine distance
    SELECT array[1, 2, 3] <=> array[3, 2, 1];

    -- create table
    CREATE TABLE items (id bigserial PRIMARY KEY, emb numeric[]);
    -- insert values
    INSERT INTO items (emb) VALUES (ARRAY[1, 2, 3]), (ARRAY[4, 5, 6]);
    -- query the similar embeddings
    SELECT * FROM items ORDER BY emb <-> ARRAY[3, 2, 1] LIMIT 5;
    -- query the neighbors within a certain distance
    SELECT * FROM items WHERE emb <-> ARRAY[3, 2, 1] < 5;
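
For readers who want to check the three distances by hand, here is a small Python sketch of the underlying math for the same two vectors. The sign conventions (negated dot product, and one minus cosine similarity) are an assumption borrowed from pgvector's conventions; the text above only names the operators.

```python
import math


def squared_euclidean(a, b):
    """Sum of squared coordinate differences (no square root taken)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def negative_dot_product(a, b):
    """Assumed convention: negated so that smaller means more similar."""
    return -sum(x * y for x, y in zip(a, b))


def cosine_distance(a, b):
    """Assumed convention: 1 - cosine similarity."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


a, b = [1, 2, 3], [3, 2, 1]
print("squared Euclidean:", squared_euclidean(a, b))
print("negative dot:     ", negative_dot_product(a, b))
print("cosine distance:  ", round(cosine_distance(a, b), 4))
```

All three are arranged so that a smaller value means "closer", which is what lets the same `ORDER BY ... LIMIT` pattern work with any of the operators.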

Future

As LLMs gradually move into production, the demands on infrastructure keep growing. Vector databases are an important addition to that infrastructure. We do not think vector databases and traditional databases will replace each other; each will play to its strengths in different scenarios. The emergence of vector databases will also push traditional databases to support vector data types. We hope pgvecto.rs can become an important part of the Postgres ecosystem and provide better vector support for Postgres.

License

- This article is licensed under CC BY-NC-SA 3.0.

- Please contact me for commercial use.
