If any single technology has transformed people’s lives more than any other in recent years, it is cloud computing.

As early as 1961, computer pioneer John McCarthy predicted that computing resources would one day be consumed like public utilities such as water and electricity. [1]

Good soup takes slow cooking. As cluster computing, utility computing, grid computing, service computing and other technologies have kept developing and converging [2] and have been assembled into a complete system, the industry hopes to create a cloud that descends from the sky and lets every user tap its power on demand.

Because cloud computing brings together state-of-the-art technologies, change comes often here too. In the era of the digital and intelligent economy, cloud infrastructure has become part of society’s infrastructure. Alibaba, a leading cloud service provider, and Intel, a leading infrastructure provider, hope to jointly drive the rapid development of the cloud computing industry through end-to-end cooperation across the entire chain.

Everything is for a better life

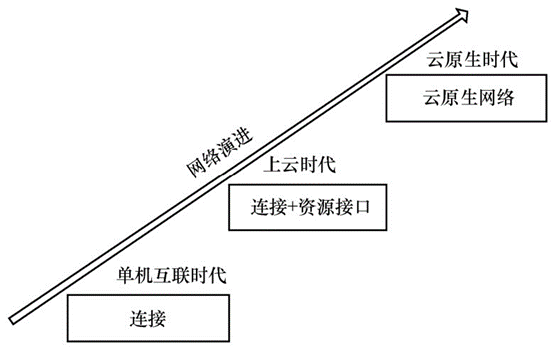

Cloud computing has been iterating rapidly for a long time. With the birth of the cloud, the industry moved from networked standalone machines into the cloud era. As the technology moves into deeper waters, the next era is cloud native.

Cloud native will revolutionize our lives. In the cloud-native era, thanks to computing-power networks spanning cloud, edge and device, the network is no longer just a channel for data transmission but an information system that integrates communication, computing and storage, giving us a seamless application experience.

Evolution of the network. Image source: “Telecommunications Science” [3]

Cloud native, as the name suggests, means born in the cloud and grown in the cloud. From the very start of architecture design, the target is deployment on the cloud: development and subsequent operation and maintenance fully account for the cloud’s native characteristics, producing platforms and applications that are easier to build and deploy, more elastic, more agile and more fault-tolerant, rather than simply migrating traditional applications to the cloud. [4]

For all its benefits, cloud native can seem far removed from everyday life.

Truly excellent technology grows out of the needs of everyday life and returns to serve it. Cloud native is no exception: everything about it exists to change lives.

Take medicine as an example. The rise of digital medicine in recent years bears on the health of every one of us, and digital medicine cannot do without computing power. If cloud-native capabilities fall short, research is hamstrung, and innovative drugs that benefit humanity arrive later.

Now, with Distributed Asynchronous Object Storage (DAOS), Intel’s innovative storage technology built on the Xeon Scalable platform, drug discovery can move faster: DAOS optimizes system architecture performance, accelerates data access, and greatly improves the efficiency of bioinformatics analysis projects.

DeepMind’s AlphaFold2 has been accelerated module by module on Intel Xeon Scalable processors, meeting the demand for efficient, fast AI analysis created by protein structure analysis and large-scale sequencing.

Intel’s unified programming framework oneAPI also breaks down platform barriers in cross-architecture computing, unleashes the potential of the hardware, helps industrial users accelerate AI-assisted drug discovery (AIDD) and computer-aided drug design (CADD), and opens up new paradigms for drug research and development.

What accelerates digital medicine is progress in the underlying technology. Without advances in cloud-native infrastructure, we could not enjoy the benefits it brings.

Cloud native also improves our lives through entertainment. With the spread of 5G, the global cloud gaming market has seen unprecedented growth.

Gamers, game operators, content service providers and game developers alike are looking forward to cloud gaming. According to the “Research Report on In-depth Observation and Trend Analysis of the Global Cloud Game Industry” by the China Academy of Information and Communications Technology, within three years China’s cloud gaming market will grow more than fourfold, and the number of users will more than double.

The reality is that cloud native will directly shape the end experience of cloud gaming.

To that end, Intel provides full-stack hardware and solutions covering PC farms, server virtualization and server containers for Windows and Android cloud gaming platforms. Its AI-driven XeSS super-sampling technology helps games reach near-native 4K image quality, giving players a higher-quality experience.

Intel also worked with “Wuying”, Alibaba’s cloud computer built on a new computing architecture that integrates cloud, network and terminal, to release a new self-developed hardware terminal. Designed around a protocol stack optimized for cloud integration, it makes full use of Intel’s local AI computing power, low-power, low-latency AVC/HEVC encoding and high-speed connectivity to create a seamless access experience that integrates software and hardware.

With this strong alliance between Intel and Alibaba, cloud gamers can look forward to an excellent gaming experience.

Intel at the 2022 Yunqi Conference

Only the toughest problems remain

Cloud native has in fact been developing for many years, but even so, bottlenecks along the way are inevitable.

Many of the challenges facing cloud-native technology have gradually been overcome. What stands between it and better performance now are the toughest problems, and solving them requires cooperation between cloud service providers and infrastructure providers.

First, if cloud native is the foundation of applications, then computing power is the foundation of all cloud-native development, and the key is enabling applications to make better use of that computing power.

Data volumes have exploded and demand for computing power keeps rising. Application performance must scale with growing user requests and system size.

For cloud-native systems, two points are key. The first is high scalability. Most parallel applications today cannot achieve effective speedup on more than 1,000 processors, yet many future parallel applications must scale effectively to thousands of processors, and the CPU must be able to coordinate all of this. [5] The second is software architecture. Cloud native places extremely high demands on the flexibility, efficiency and openness of the computing system, and computing systems are shifting their center of gravity from the bus to software-defined interconnect fabrics. [6]
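Why is scaling past about 1,000 processors so hard? One standard way to see it, which is not drawn from the article’s sources, is Amdahl’s law: if a fraction $p$ of a program’s work can be parallelized and the rest must run serially, the ideal speedup on $N$ processors is bounded as follows (this ignores communication and coordination costs, which only make matters worse in practice).

$$
S(N) = \frac{1}{(1 - p) + \dfrac{p}{N}}, \qquad \lim_{N \to \infty} S(N) = \frac{1}{1 - p}
$$

For example, with $p = 0.999$ (only 0.1% of the work serial) and $N = 1000$, $S = 1/(0.001 + 0.000999) \approx 500$, and no number of processors can push the speedup past 1,000. Scaling effectively to thousands of processors therefore demands both almost perfectly parallel software and hardware that keeps coordination overhead low.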

To address these problems, choosing the right platform is key.

Intel’s Xeon Scalable processor platform is a good choice. As the name suggests, scalable performance is its specialty, and the 4th Gen Intel Xeon Scalable processor, code-named Sapphire Rapids, comes with full-featured, full-stack support.

At the Yunqi Conference, Intel announced that it will work with members of the cloud computing open source community to enable Sapphire Rapids in the Anolis OS operating system, fully optimizing support for all of the processor’s built-in accelerators as well as the Linux kernel, base libraries, user-mode toolkits and interfaces to upper-layer applications. Building on this, Alibaba Cloud will launch a new version of Alibaba Cloud Linux that supports it in production environments.

Intel, Alibaba and other ecosystem partners have also joined the Universal Chiplet Interconnect Express (UCIe) alliance, aiming to jointly build an open ecosystem in which chiplets designed and manufactured on different process technologies are integrated and operated together through advanced packaging.

On the software-defined front, network programmability based on Intel technology now extends end to end across the entire link. On general-purpose hardware architectures, it gives network users a complete programmable networking platform and further improves the flexibility and scalability of the network.

As one of the founding members of the OpenAnolis (“Dragon Lizard”) open source community for cloud computing, Intel takes an active part in building the community, and this year it was named among the community’s first outstanding partner enterprises.

To bring more enterprises on board, Intel has joined Kingdee and Alibaba Cloud in building the Kingdee Cloud Cosmic platform on Intel architecture. Serving as the PaaS platform’s cache component, the in-memory database Tair, with its stronger durability and lower TCO, speeds up the construction of an efficient, agile and more cost-effective PaaS platform.

Intel, Kingdee and Alibaba Cloud jointly attended the signing ceremony of the Kingdee Cosmic Project

Second, cloud native not only demands a great deal of computing power but also handles many different types of data, including scalar, vector, matrix and spatial data, which calls for a powerful computing system able to handle diverse kinds of computation.

In fact, the computing complexity of cloud native is much higher than that of other fields, and general computing systems based on traditional homogeneous multi-core processors can no longer meet application requirements.

As the name implies, heterogeneous computing combines devices with different architectures to perform computation. It is a parallel and distributed computing model built on a heterogeneous multi-core system: application tasks are partitioned and mapped according to the computing characteristics or strengths of each computing unit, in pursuit of the best performance metrics. This technology has long been widely used in cloud native, especially in AI.
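To make the “partition and map” step concrete, here is a toy Java sketch. Everything in it is an assumption for illustration: a node with exactly one CPU, one GPU and one FPGA, hypothetical per-task run-time estimates, and a greedy earliest-finish-time rule, which is one common list-scheduling heuristic rather than how Intel’s or Alibaba’s platforms actually schedule work. The names `DeviceKind`, `ComputeTask` and `HeteroScheduler` are hypothetical.

```java
import java.util.EnumMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical device kinds in one heterogeneous node (one device of each kind). */
enum DeviceKind { CPU, GPU, FPGA }

/**
 * A unit of work with an estimated run time (ms) on each device kind.
 * Double.POSITIVE_INFINITY marks a device that cannot run the task.
 */
record ComputeTask(String name, double cpuMs, double gpuMs, double fpgaMs) {
    double costOn(DeviceKind d) {
        return switch (d) {
            case CPU -> cpuMs;
            case GPU -> gpuMs;
            case FPGA -> fpgaMs;
        };
    }
}

final class HeteroScheduler {
    /**
     * Greedy earliest-finish-time mapping: tasks are taken in list order, and each one
     * is placed on the device whose projected finish time (current load + cost) is smallest.
     */
    static Map<String, DeviceKind> assign(List<ComputeTask> tasks) {
        Map<DeviceKind, Double> load = new EnumMap<>(DeviceKind.class);
        for (DeviceKind d : DeviceKind.values()) {
            load.put(d, 0.0);
        }
        Map<String, DeviceKind> placement = new LinkedHashMap<>();
        for (ComputeTask t : tasks) {
            DeviceKind best = null;
            double bestFinish = Double.POSITIVE_INFINITY;
            for (DeviceKind d : DeviceKind.values()) {
                double finish = load.get(d) + t.costOn(d);
                if (finish < bestFinish) {
                    bestFinish = finish;
                    best = d;
                }
            }
            if (best == null) {
                throw new IllegalArgumentException("no device can run " + t.name());
            }
            load.put(best, bestFinish);   // the chosen device is busy until bestFinish
            placement.put(t.name(), best);
        }
        return placement;
    }
}
```

With made-up estimates, a branchy parsing task lands on the CPU, a matrix multiply on the GPU and a video-encoding task on the FPGA. Real heterogeneous schedulers also weigh data-transfer cost between devices, memory capacity and task dependencies, which this sketch ignores.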

At the Yunqi Conference, Intel and Alibaba brought the Intel Data Center GPU Flex Series to Alibaba’s heterogeneous computing acceleration platform, a collaboration that expands Intel’s footprint in AI.

With high performance and excellent TCO, Flex Series GPUs are well suited to cloud workloads such as media delivery, cloud gaming, AI, the metaverse and other emerging visual cloud scenarios. They help customers escape the constraints of siloed, closed development environments and reduce data centers’ need to run multiple separate, standalone solutions.

It is worth noting that Intel also offers a range of heterogeneous computing products, including CPUs, GPUs, IPUs and FPGAs, forming a comprehensive cloud-to-edge portfolio that provides customized computing services for different businesses and application scenarios. Its cross-platform programming framework oneAPI lets customers port their designs smoothly across different kinds of heterogeneous hardware, so their design assets can be reused effectively.

Third, Java currently has no easy way to make efficient use of SIMD (single instruction, multiple data) vector operations, which directly affects cloud-native performance.

To solve this problem, Alibaba and Intel announced that they will jointly build the Vector API, tackling the challenges of using vectorized computation in Java while improving CPU efficiency and greatly strengthening Java’s cloud-native computing capabilities.

What is SIMD, and what does it do? Mainstream CPUs today generally include vector units that execute SIMD operations efficiently: a single instruction applies the same operation to several data elements at once. SIMD vectorization has been shown to greatly improve computing efficiency; in AI, machine learning, multimedia and big data, it can deliver several times the performance of traditional scalar operations.

Getting Java to use SIMD efficiently, however, is hard. Intel’s technical experts described the current options. The first is to rely on the JVM’s automatic vectorization, but that makes it hard for developers to control the process precisely. The second is to call native implementations through JNI, but that adds JNI overhead and increases the complexity of system integration and porting. The third is to use the Java Vector API, which solves most of these problems.
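To illustrate the first option, here is a minimal sketch of the kind of plain Java loop the JVM may auto-vectorize; the class and method names are illustrative. Nothing in the source asks for SIMD: the JIT compiler alone decides, which is exactly the lack of control described above. The Vector API instead expresses such a computation explicitly with vector types such as `FloatVector` from the incubating `jdk.incubator.vector` module (JDK 16 and later), which has to be enabled with `--add-modules jdk.incubator.vector`; that version is not shown here.

```java
import java.util.Arrays;

/**
 * Plain scalar Java that is a candidate for JVM auto-vectorization.
 * A simple counted loop over primitive arrays with no cross-iteration dependency is the
 * shape HotSpot's C2 JIT compiler can turn into SIMD instructions. Whether it actually
 * does so depends on the JDK version, JVM flags and CPU; the developer cannot force it.
 */
final class Saxpy {
    /** Computes y[i] = a * x[i] + y[i] in place. */
    static void saxpy(float a, float[] x, float[] y) {
        if (x.length != y.length) {
            throw new IllegalArgumentException("x and y must have the same length");
        }
        for (int i = 0; i < x.length; i++) {
            // Each iteration is independent, so several elements can share one vector instruction.
            y[i] = a * x[i] + y[i];
        }
    }

    public static void main(String[] args) {
        float[] x = {1f, 2f, 3f, 4f};
        float[] y = {10f, 20f, 30f, 40f};
        saxpy(2f, x, y);
        System.out.println(Arrays.toString(y)); // [12.0, 24.0, 36.0, 48.0]
    }
}
```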

The Vector API collaboration combines the strengths of both sides. First, the Intel team includes original authors of the Vector API, and Alibaba’s JDK team also has extensive Vector API experience; second, Alibaba has a complete CI/CD system and a complete test suite; finally, Alibaba has a wide range of application scenarios in which to validate the Vector API’s effect.

The Vector API delivers direct performance gains. A BLAS library implemented with the Vector API runs 2.2 to 4.5 times faster; in image processing, a Sepia filter built on the Vector API is up to 6 times faster; in databases, repartitioning and linear-probing hash tables gain several-fold speedups; and for bit-packing encoding and decoding, decoding is 3 to 5 times faster.
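Bit-packing stores each small integer in only as many bits as it needs instead of a full 32-bit int. The scalar Java sketch below shows the idea under simple assumptions of our own: non-negative values, a fixed width of 1 to 31 bits, and a least-significant-bit-first layout across a `long[]`; real storage formats each define their own layout, and `BitPacking` is a hypothetical name. The decode loop is the part a Vector API implementation would speed up by unpacking many values per instruction.

```java
/**
 * Minimal fixed-width bit-packing sketch (hypothetical layout): value i occupies bits
 * [i*bits, (i+1)*bits) of the packed stream, least-significant bit first, and a value
 * may straddle two adjacent longs.
 */
final class BitPacking {
    static long[] pack(int[] values, int bits) {
        long mask = maskFor(bits);
        long[] out = new long[(int) ((values.length * (long) bits + 63) / 64)];
        for (int i = 0; i < values.length; i++) {
            long v = values[i];
            if (v < 0 || v > mask) {
                throw new IllegalArgumentException("value " + v + " does not fit in " + bits + " bits");
            }
            long bitPos = (long) i * bits;
            int word = (int) (bitPos >>> 6);   // which long holds the value's first bit
            int offset = (int) (bitPos & 63);  // bit offset inside that long
            out[word] |= v << offset;
            if (offset + bits > 64) {          // spill the high bits into the next long
                out[word + 1] |= v >>> (64 - offset);
            }
        }
        return out;
    }

    static int[] unpack(long[] packed, int count, int bits) {
        long mask = maskFor(bits);
        int[] out = new int[count];
        for (int i = 0; i < count; i++) {
            long bitPos = (long) i * bits;
            int word = (int) (bitPos >>> 6);
            int offset = (int) (bitPos & 63);
            long v = packed[word] >>> offset;
            if (offset + bits > 64) {          // reassemble a value split across two longs
                v |= packed[word + 1] << (64 - offset);
            }
            out[i] = (int) (v & mask);         // drop bits belonging to later values
        }
        return out;
    }

    private static long maskFor(int bits) {
        if (bits < 1 || bits > 31) {
            throw new IllegalArgumentException("bits must be between 1 and 31");
        }
        return (1L << bits) - 1;
    }
}
```

Packing the values 1 to 30 at 5 bits each takes 150 bits, that is three longs instead of 30 ints.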

To make the Vector API easier to adopt and overcome its incompatibility with older JDK versions, Alibaba and Intel are collaborating on a project to help the industry backport the Vector API to mainstream older OpenJDK releases such as OpenJDK 11, while also encouraging applications and frameworks to support newer JDK versions.

Toward bigger goals

Solving every technical problem does not mean the work is done.

Data center energy consumption now draws widespread attention. Sustainable development, carbon neutrality and peaking carbon emissions have become not only national policies in many countries but also important development strategies for many companies. Amazon, for example, planned last year to build a €350 million data center in Ireland, but the plan was shelved over environmental concerns.

China has also introduced many policies to achieve its “3060” goals of peaking carbon emissions before 2030 and reaching carbon neutrality before 2060. In May last year, several ministries and commissions jointly launched the “East Data, West Computing” project, which will reshape the geographic layout of China’s data centers over the medium to long term. The state has also set clear requirements for the project, including target values for PUE (power usage effectiveness).

So whether out of social responsibility or to follow national plans, carbon neutrality in cloud native must be done well.

An analysis by Google shows that the energy consumed by cloud data centers comes mainly from IT equipment, uninterruptible power supplies, power supply units, cooling equipment, fresh-air systems, humidification equipment and auxiliary facilities, with IT equipment and cooling accounting for the largest share. Optimizing a data center’s total energy consumption, or finding the best trade-off between performance and energy consumption, therefore has to start with the energy use of IT equipment and the cooling system. [7]
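The PUE targets mentioned earlier tie directly to this breakdown. Power usage effectiveness is the ratio of everything the facility consumes to what the IT equipment alone consumes:

$$
\mathrm{PUE} = \frac{E_{\text{total facility}}}{E_{\text{IT equipment}}} \geq 1
$$

An ideal facility would score 1.0. As a purely illustrative example, a data center drawing 1.3 GWh in total for 1.0 GWh of IT load has $\mathrm{PUE} = 1.3$, meaning 0.3 kWh of cooling, power-conversion and other overhead for every kWh of computing. Because cooling energy appears in the numerator but not the denominator, more efficient cooling lowers PUE directly.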

As early as 2015, Intel and Alibaba began jointly exploring immersion liquid cooling, whose very high heat dissipation efficiency, reliability, performance and density could help build the green, sustainable data centers of the future.

Since then, they have made steady progress in green and sustainable development: in 2016, Alibaba launched an immersion liquid-cooled prototype, and in 2020 the first 5A-rated green liquid-cooled data center went into production.

Challenges are inevitable along the way. Although immersion liquid-cooled servers have begun to be deployed at scale in recent years, they still face several challenges, including the compatibility of server hardware with liquid-cooling materials, electrical signal integrity and heat dissipation characteristics.

Intel tackled these by starting from processor customization and server system development and optimization, resolving chip power consumption and cooling, the two key issues that limit gains in computing power. This helped Alibaba’s data centers successfully deploy immersion liquid-cooled server systems, improving heat dissipation and energy efficiency while effectively lowering the data centers’ total cost of ownership (TCO).

The story of Intel and Alibaba spans more than a decade of cloud data center history, and their “cloud story” will continue.

Text/Fu Bin

References:

[1] FOSTER I, ZHAO Y, RAICU I, et al. Cloud Computing and Grid Computing 360-Degree Compared[Z]. 2008: 1-10.

[2] Luo Junzhou, Jin Jiahui, Song Aibo, et al. Cloud Computing: Architecture and Key Technologies [J]. Journal of Communications, 2011, 7.

[3] Wan Xiaolan, Li Jinglin, Liu Kebin. Cloud native network creates a new era of intelligent applications [J]. Telecommunications Science, 2022, 38(6): 31-41.

[4] Lu Gang, Chen Changyi, Huang Zelong, et al. Research on intelligent cloud-native architecture and key technologies for cloud-network integration [J]. Telecommunications Science, 2020, 36(9): 67-74.

[5] Yao Jifeng. Cloud computing is needed in the future [J]. Development and Application of High Performance Computer, 2009, 1(8).

[6] Lv P, Liu Q, Wu J, et al. New generation software-defined architecture[J]. Sci Sin-Inf, 2018, 48: 315-328.

[7] HOELZLE U, BARROSO L A. The Datacenter as a Computer: An Introduction to the Design of Warehouse-Scale Machines[M]. 1st ed. Morgan and Claypool Publishers, 2009.

This article is reproduced from: http://www.guokr.com/article/462837/

This site is for inclusion only, and the copyright belongs to the original author.