Original link: https://jw1.dev/2023/09/11/bun-node-perf-diff.html

On September 8, 2023, Bun.js released its first stable version, Bun 1.0. The announcement reads:

Bun 1.0 is finally here.

Bun is a fast, all-in-one toolkit for running, building, testing, and debugging JavaScript and TypeScript, from a single file to a full-stack application. Today, Bun is stable and production-ready.

…

Bun is a drop-in replacement for Node.js. That means existing Node.js applications and npm packages just work in Bun.

According to the official website, Bun is an all-in-one JS toolkit that covers running, building, and debugging JS and TS code. It is also a JS runtime, like Node.js and Deno. Node.js has long dominated the field, while Deno has met a lukewarm reception. Just when it seemed that Node.js would sit firmly on the throne forever, Bun caught everyone's eye a few days ago and set off a huge discussion in the community. I see two reasons. The first is that it is almost fully compatible with Node.js: the official term is "drop-in", meaning it can replace Node.js without any extra thought. The second, and the most discussed, is performance.

API serving performance comparison

The most common scenario I can think of is API serving. Using the http module of Node.js and the serve API of Bun, I built two APIs with exactly the same behavior: a GET request returns "Hello World".

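As a rough illustration only (a sketch, not the code used for the measurements), the two servers could look something like this. It assumes the built-in `http` module on the Node.js side and `Bun.serve` on the Bun side; the function names `startNodeServer` and `startBunServer` and the `BODY` constant are hypothetical.

```javascript
const http = require("node:http");

// Response body shared by both variants so they behave identically.
const BODY = "Hello World";

// Node.js variant: the built-in http module.
function startNodeServer(port) {
  const server = http.createServer((req, res) => {
    res.writeHead(200, { "Content-Type": "text/plain" });
    res.end(BODY);
  });
  server.listen(port);
  return server;
}

// Bun variant: Bun.serve with a fetch handler.
// The global `Bun` only exists when this file runs under Bun.
function startBunServer(port) {
  return Bun.serve({
    port,
    fetch() {
      return new Response(BODY);
    },
  });
}
```

Calling `startNodeServer(3000)` under Node.js or `startBunServer(3000)` under Bun would serve the same response; port 3000 is an arbitrary choice.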

The two versions are written slightly differently, but both implement a simple API in just a few lines of code. The test code then calls each API 1,000 times and measures the elapsed time.

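A timing loop of this kind might be sketched as follows. This is an assumption about the shape of the test, not the original code: it sends the requests one after another and relies on the global `fetch` and `performance` objects (available in Bun and in Node.js 18 or later). The name `timeRequests` is hypothetical.

```javascript
// Sends `count` GET requests to `url`, one after another,
// and resolves with the total elapsed time in milliseconds.
async function timeRequests(url, count) {
  const start = performance.now();
  for (let i = 0; i < count; i++) {
    const res = await fetch(url);
    await res.text(); // read the body so each request fully completes
  }
  return performance.now() - start;
}
```

For example, `timeRequests("http://localhost:3000/", 1000).then((ms) => console.log(ms.toFixed(0) + " ms"))` would time 1,000 calls against a server on the arbitrary port 3000.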

I ran the test against Node.js three times, making 1,000 API calls per run, and the average was about 300 ms. Now let's look at Bun:

It stays at around 80 ms. In this test, then, Bun is about 3.75 times as fast as Node.js (300 ms / 80 ms).

Encryption performance test

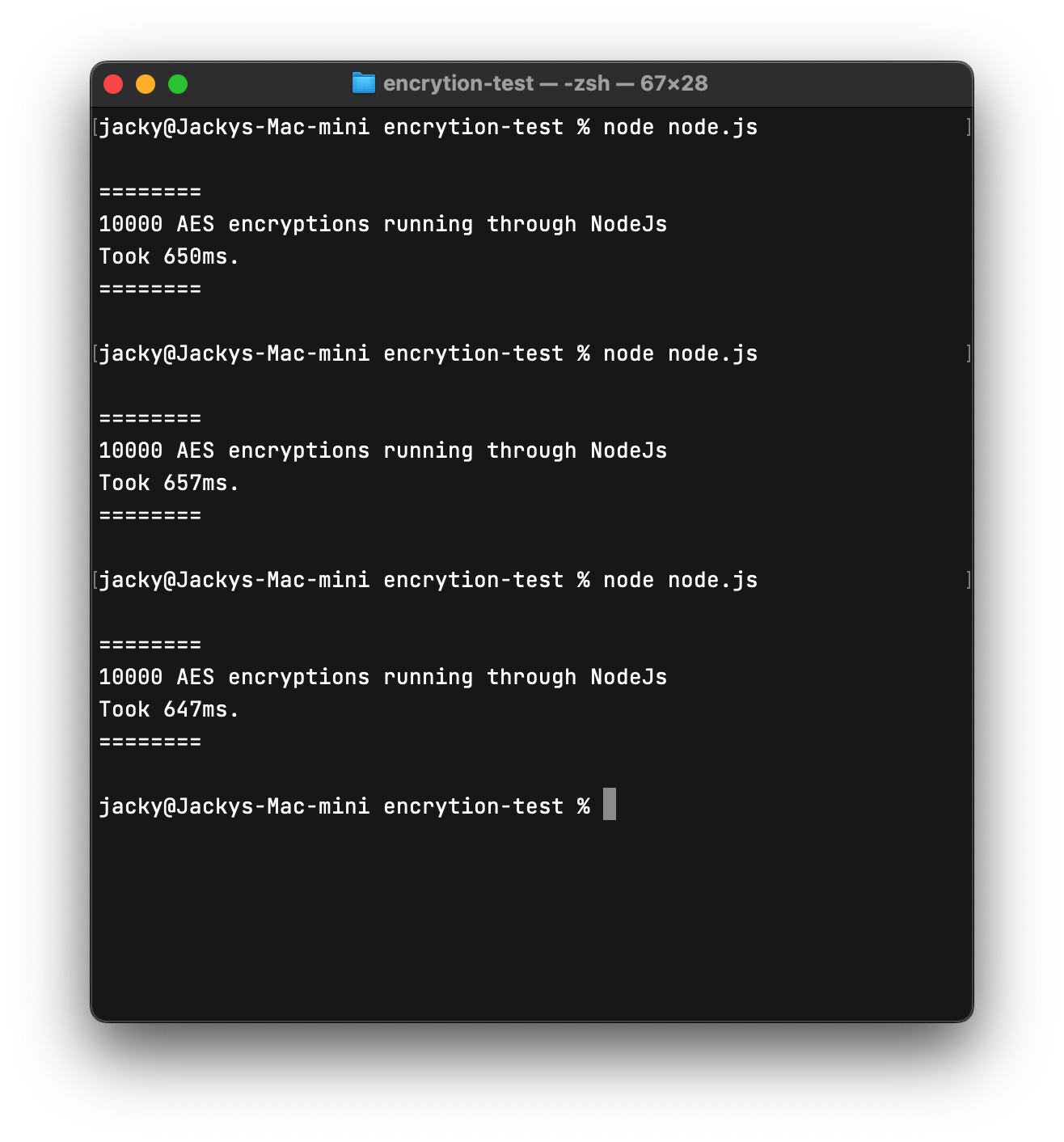

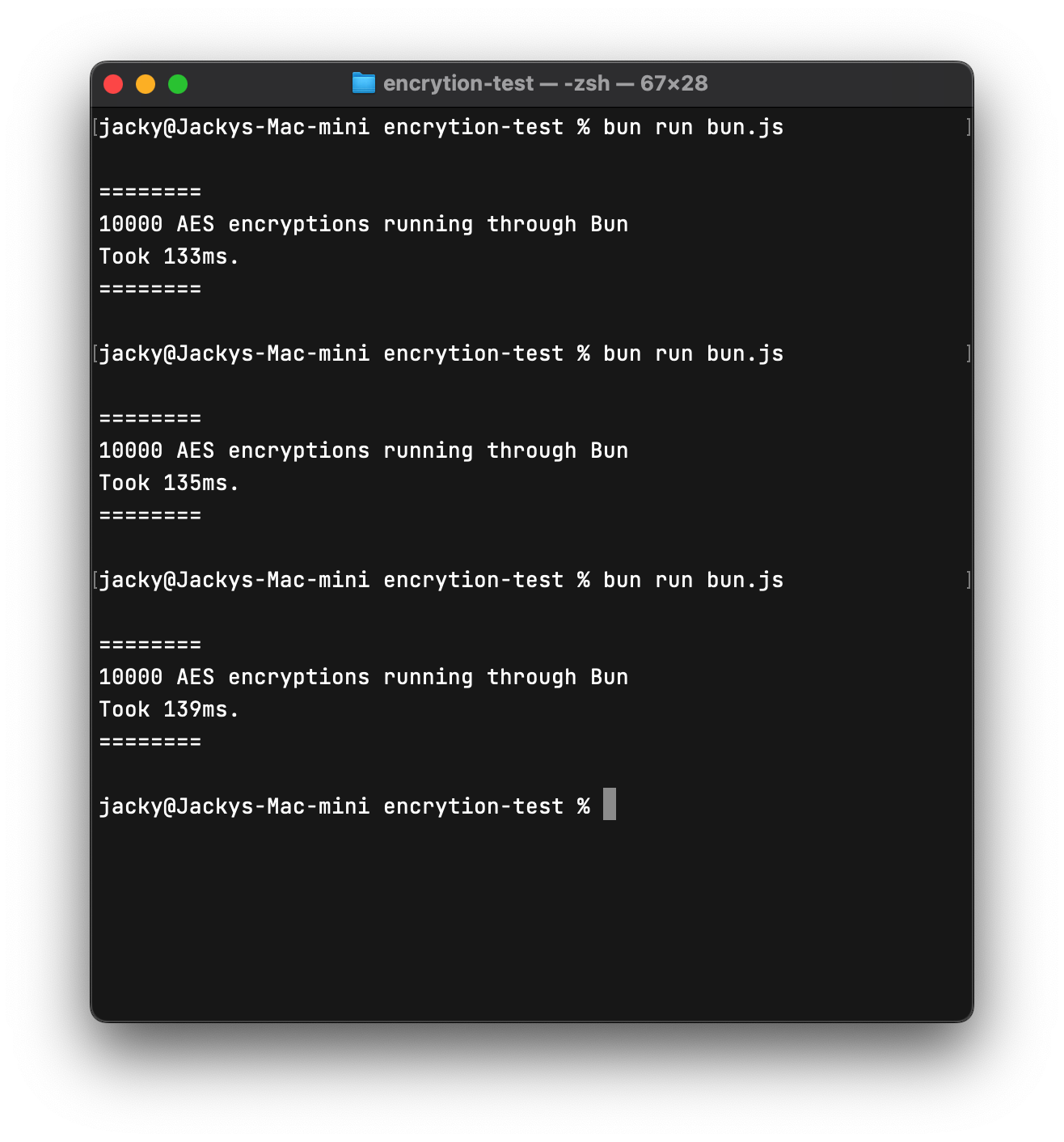

In the API test, we saw that Bun is indeed faster than Node.js. Now let's see whether encryption speed differs as well.

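An encryption benchmark along these lines could be sketched as below. The text only says "AES", so the `aes-256-cbc` mode, the random key, the plaintext, and the use of `node:crypto` (which Bun also provides) are all assumptions, and `timeAesEncryptions` is a hypothetical name.

```javascript
const nodeCrypto = require("node:crypto");

// Runs `iterations` AES-256-CBC encryptions of a short string and
// returns the elapsed time plus the last ciphertext (hex-encoded).
function timeAesEncryptions(iterations) {
  const key = nodeCrypto.randomBytes(32); // 256-bit key
  const iv = nodeCrypto.randomBytes(16); // reused only to keep the benchmark loop minimal
  let ciphertext = "";
  const start = performance.now();
  for (let i = 0; i < iterations; i++) {
    const cipher = nodeCrypto.createCipheriv("aes-256-cbc", key, iv);
    ciphertext = cipher.update("Hello World", "utf8", "hex") + cipher.final("hex");
  }
  return { elapsedMs: performance.now() - start, ciphertext };
}
```

Running `timeAesEncryptions(10000).elapsedMs` under each runtime gives a number comparable in spirit to the figures reported here, though not necessarily the same values.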

On Node.js, 10,000 AES encryptions take about 650 ms. Now let's look at Bun:

It stays at around 140 ms. So in both tests so far, Bun is indeed faster than Node.js. Has Node.js really been left in the dust?

All I can say is: not entirely.

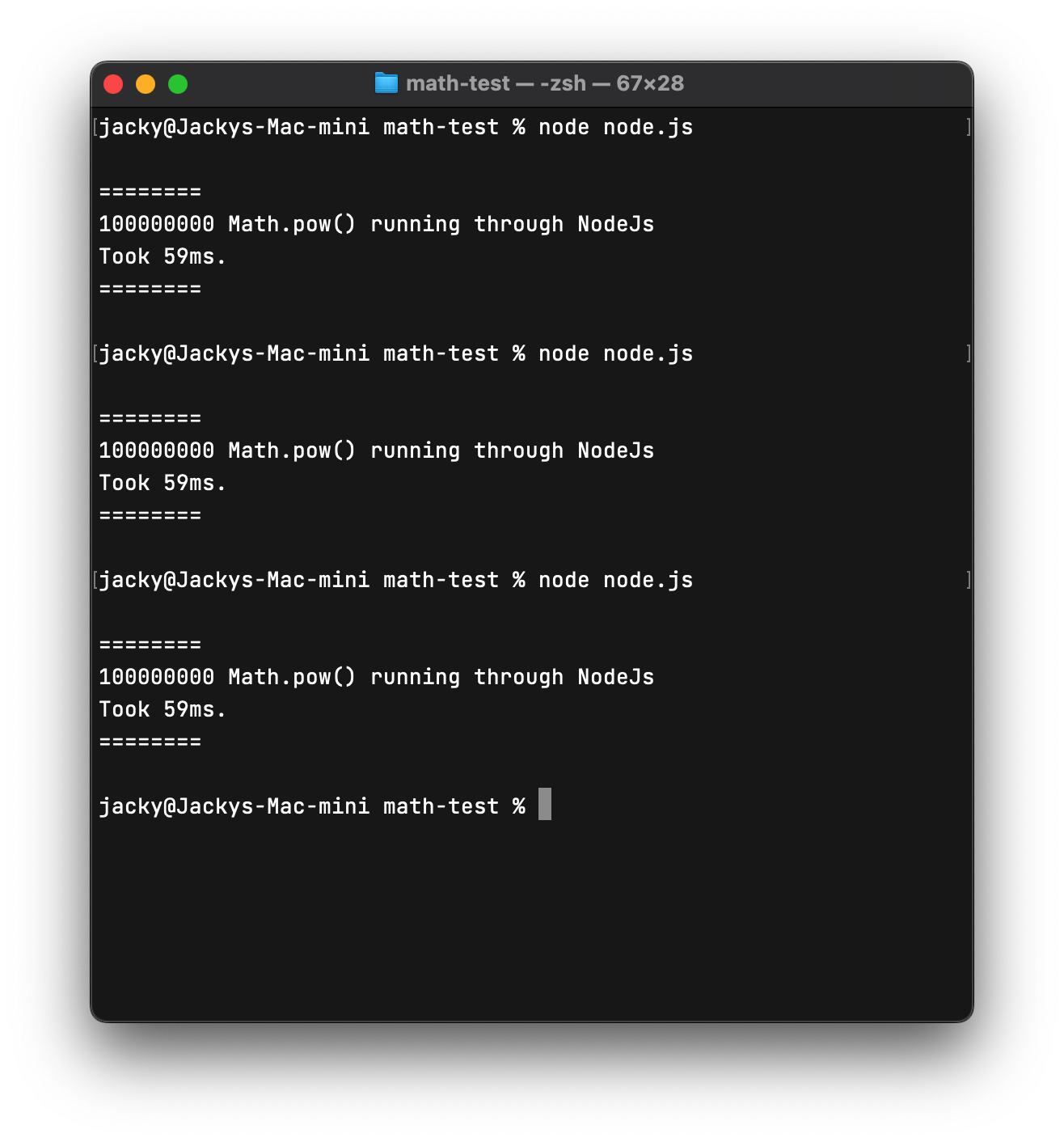

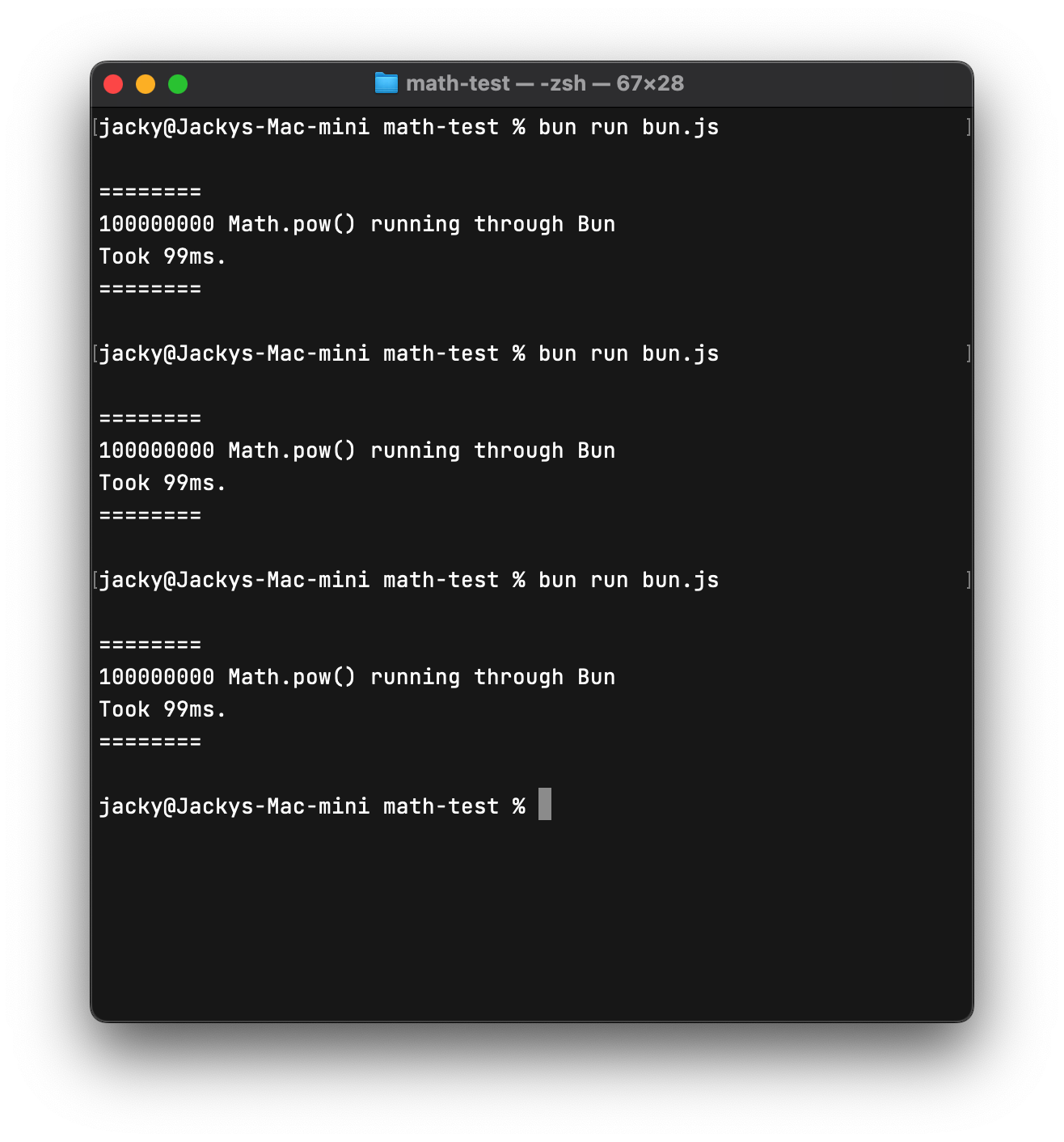

Arithmetic performance test

We use a program to randomly generate two numbers, a and b, and then compute a raised to the power of b 100 million times.

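A minimal sketch of such a loop follows. It assumes the two numbers are generated once, before the loop, and that the `**` operator is used; the name `timePowers` and the range of the random values are hypothetical.

```javascript
// Picks two random numbers once, then computes a ** b `iterations` times.
function timePowers(iterations) {
  const a = Math.random() * 10;
  const b = Math.random() * 10;
  let result = 0;
  const start = performance.now();
  for (let i = 0; i < iterations; i++) {
    result = a ** b;
  }
  return { a, b, result, elapsedMs: performance.now() - start };
}
```

`timePowers(100_000_000).elapsedMs` would correspond to the 100 million iterations described above. Node.js runs on V8 and Bun on JavaScriptCore, and a loop whose body never changes is easy for a JIT compiler to optimize, so a result like this may say as much about the optimizer as about raw arithmetic speed.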

Let's look at the results:

Node.js stays at around 60 ms. Now let's look at Bun:

Bun is actually 40 ms slower than Node.js.

How should I put it: explaining this is beyond me. Still, a 40 ms difference over 100 million calculations is unlikely to be noticeable in a production environment. After all, this is the first stable version, and I believe the Bun team will sort it out later!

My thoughts

Just when I had more or less gone all in on serverless, Bun appeared. At first its timing felt a bit awkward, but after using it myself, I can say that Bun has rekindled my interest in writing server-ful apps. That is not only because of performance but also because of Bun's development experience. Many of the redesigned APIs are simply beautiful!

Reading a file:

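For illustration, reading a text file with Bun's `Bun.file` API might look like the sketch below. The wrapper `readText` is hypothetical; it falls back to `fs.promises.readFile` so the same function also runs under Node.js, which makes the difference between the two APIs easy to see.

```javascript
const fsPromises = require("node:fs/promises");

// Reads a text file, using Bun.file when running under Bun and
// falling back to fs.promises.readFile under Node.js.
async function readText(path) {
  if (typeof Bun !== "undefined") {
    return Bun.file(path).text(); // Bun.file returns a lazy, Blob-like handle
  }
  return fsPromises.readFile(path, "utf8");
}
```

A call such as `readText("./package.json").then(console.log)` would print the file's contents under either runtime; the path is only an example.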

WebSockets:

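As a sketch of what a WebSocket server looks like with `Bun.serve`, here is a minimal echo server. It is an illustrative example rather than the original listing; the helper name `createEchoServerOptions` is hypothetical and exists only so the handlers can be inspected without starting a server.

```javascript
// Builds the options object for a WebSocket echo server.
// Passing it to Bun.serve() is only possible under Bun.
function createEchoServerOptions(port) {
  return {
    port,
    fetch(req, server) {
      // Try to upgrade the HTTP request to a WebSocket connection.
      if (server.upgrade(req)) return;
      return new Response("Expected a WebSocket request", { status: 400 });
    },
    websocket: {
      // Echo every incoming message back to the sender.
      message(ws, message) {
        ws.send(message);
      },
    },
  };
}
```

Under Bun, `Bun.serve(createEchoServerOptions(3000))` would start the server on the arbitrary port 3000. HTTP routing and WebSocket handling live in the same options object, with no extra package required.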

Taken together, Bun is indeed faster than Node.js in the API and encryption tests, and by a multiple rather than a small margin. It also inherits the Node.js ecosystem almost perfectly. I am really optimistic about this runtime and look forward to seeing what the Bun team does next!
