Original link: https://www.52nlp.cn/rpa%E7%95%8C%E9%9D%A2%E5%85%83%E7%B4%A0%E5%AE%9A%E4%BD%8D%E4%B8%8E%E6%93%8D%E6%8E%A7%E6%8A%80%E6%9C%AF%E8%AF%A6%E8%A7%A3-%E8%BE%BE%E8%A7%82%E6%95%B0%E6%8D%AE

Introduction to RPA

What is RPA? RPA stands for Robotic Process Automation. The book “Intelligent RPA Combat” defines it as follows: using specific technologies that simulate human operations on a computer interface, software automatically executes process tasks according to predefined rules, replacing or assisting humans in completing the corresponding computer operations. In one sentence: RPA uses software robots to automate tasks that were previously done by humans.

So what is IPA? IPA (Intelligent Process Automation) = RPA + AI. Simply put, it uses AI to strengthen the capabilities of RPA, for example by improving RPA's ability to recognize interface elements, or by enabling RPA to make intelligent decisions while executing tasks. We often compare RPA to the hands and AI to the brain: AI does the thinking, RPA takes the action. RPA is a digital platform that can call on all of a computer's software and hardware capabilities. AI can exist as one of its intelligent components and be invoked by RPA, and it can also be built into RPA itself, for example as intelligent robot scheduling logic.

At present, the AI technologies widely used in RPA come mainly from two fields: CV and NLP.

1. Computer Vision

CV (Computer Vision) is the field concerned with extracting useful information from digital images. Common CV techniques in RPA include template matching, optical character recognition, and object detection:

- Template Matching: an algorithm for locating a small image (the template) inside a larger image, for example locating an icon on the desktop or finding a button in a form.

- OCR (Optical Character Recognition): the OCR model takes an image as input, analyzes it, and outputs the structured text information it contains (the coordinates and values of the characters). Examples include CAPTCHA recognition and extracting information from invoices.

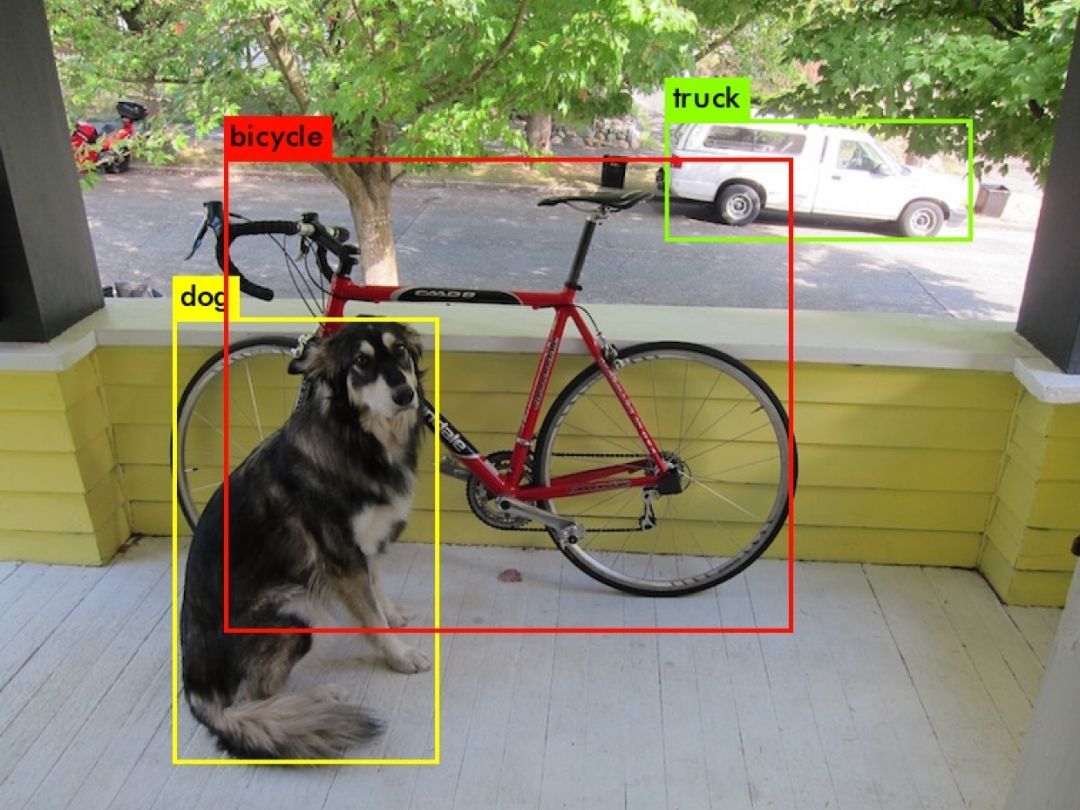

- OD (Object Detection): the computer takes an image as input and identifies the objects in it. For example, it can detect all the controls (buttons, edit boxes, etc.) in an application form so that RPA can operate on them afterwards.
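
The template matching described above can be sketched as a brute-force sliding window. This is a minimal illustration, assuming the screenshot and the template are already grayscale images stored as 2D lists of integers; production tools usually rely on an optimized library routine such as OpenCV's `cv2.matchTemplate`, typically with normalized scores rather than the raw sum of squared differences used here.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale pixels, row-major, values 0-255


def match_template(image: Image, template: Image) -> Tuple[int, int, int]:
    """Return (x, y, score) of the top-left corner where `template` fits best.

    The score is the sum of squared differences (SSD): 0 is a pixel-perfect match,
    larger values mean a worse match.
    """
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    if th > ih or tw > iw:
        raise ValueError("template must fit inside the image")

    best: Optional[Tuple[int, int, int]] = None
    for y in range(ih - th + 1):
        for x in range(iw - tw + 1):
            ssd = 0
            for dy in range(th):
                for dx in range(tw):
                    diff = image[y + dy][x + dx] - template[dy][dx]
                    ssd += diff * diff
            if best is None or ssd < best[2]:
                best = (x, y, ssd)
    assert best is not None  # at least one position exists when the template fits
    return best


# Usage: a 6x5 "screen" with a 2x2 "icon" drawn at x=3, y=2.
screen = [[0] * 6 for _ in range(5)]
icon = [[9, 9], [9, 1]]
for dy, row in enumerate(icon):
    for dx, value in enumerate(row):
        screen[2 + dy][3 + dx] = value

x, y, score = match_template(screen, icon)
print(x, y, score)  # 3 2 0
```

To click the matched icon, an RPA step would usually aim at the center of the matched region rather than its top-left corner.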

2. Natural Language Processing

NLP (Natural Language Processing) is the field concerned with extracting useful information from text. Common NLP scenarios in RPA include:

- IDP (Intelligent Document Processing): for example, automatically parsing large numbers of contract documents to speed up review, search, and proofreading; analyzing long documents such as enterprise bidding documents and internal documents and extracting information from them; and matching candidates to positions in HR by extracting key information from resumes to build talent profiles.

- Text review: detecting pornographic and other vulgar content; detecting reactionary content involving topics such as religion and firearms; identifying politically sensitive content; filtering and blocking advertisements and analyzing user posts; and detecting spam such as abusive language and post flooding.

3. Application value

The application value of RPA is mainly reflected in the following aspects:

- Improve enterprise efficiency: In terms of time, a human works 5 × 8 h = 40 h per week, while a robot can work 7 × 24 h = 168 h, which is 4.2 times as much. Put another way, an RPA robot works three 8-hour shifts every day, seven days a week: one regular shift plus two shifts of overtime. In terms of speed, RPA executes as fast as the business system responds, and it does not suffer from fatigue or declining accuracy over long working hours.

- Reduce human risk: Humans are good at abstraction and reasoning; machines are good at repetition. People are prone to misoperations and misjudgments when they are tired, distracted by external interference, or affected by emotional fluctuations, whereas RPA robots can perform the same specific job over and over again.

- Connect business systems: There is no need to modify the existing systems or to develop interfaces or SDKs for integration. This is what is meant by “non-intrusive”. A typical application is transferring data between isolated applications.

4. Development History

The development process of RPA can be roughly summarized into the following stages:

- Attended stage: At this stage, RPA acts as a “virtual assistant”, covering most of the main robot automation functions and all the operations of existing desktop automation software. It is deployed on employees' PCs to improve work efficiency. Its drawbacks are that end-to-end automation is hard to achieve and it cannot be applied at scale. Even so, it effectively reduces average business processing time, which improves customer experience and saves costs.

- Unattended stage: At this stage, RPA is called a “virtual workforce”, and the main goals are end-to-end automation and classification of virtual employees. It is mainly deployed on VMs (virtual machines) and can schedule work, manage robots centrally, and analyze robot performance. Its drawback is that the software robots still require manual control and management. Unattended RPA robots can work 24 × 7 and carry out business processes without human-computer interaction, opening up greater possibilities for increasing efficiency and reducing costs.

- Autonomous RPA stage: At this stage, the main goal of RPA is end-to-end automation and a multifunctional virtual workforce at scale. It is usually deployed on cloud servers and as SaaS, and is characterized by automatic scaling, dynamic load balancing, context awareness, advanced analytics, and workflows. Its drawback is that it still struggles with unstructured data. However, as more technologies are integrated, autonomous RPA can fundamentally increase business value and bring users more advantages.

- Cognitive RPA stage: This stage is the future direction of RPA. It uses technologies such as artificial intelligence, machine learning, and natural language processing to handle unstructured data, perform prediction and analysis, and automatically take on and process tasks. With cognitive capabilities, robots carry out the entire decision-making process themselves, so that even long and complex tasks can be automated.

The core modules of RPA

1. Development platform

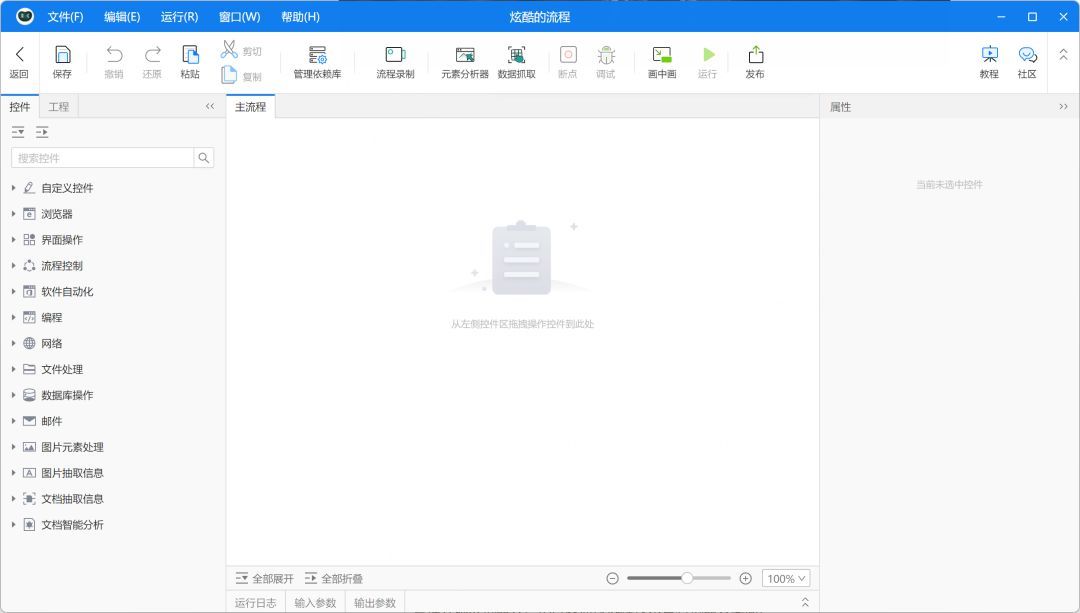

Daguan RPA Development Platform


The development platform is a tool for process designers to design processes. It mainly has the following functions:

- Process Design: When we have an idea and want to turn it into a process that runs automatically, we design that process here. The platform offers a visual, low-code programming interface where business logic is designed by drag and drop. It must be able to handle complex processes, with features such as sub-processes, third-party libraries, and custom components. It also needs “encapsulation”, so that small processes can be composed into large ones, and “reuse”, to avoid reinventing the wheel. Several tools are essential in process design:

- Element analyzer: The buttons, edit boxes, and other controls we see on screen are all “elements” in the RPA world. Many visual components take an element as an input parameter and perform an action on it, such as “click a button” or “enter text in an edit box”. The element analyzer lets us easily pick a target element on the screen, so the process designer can identify and locate elements without deep technical knowledge of the underlying interface technology.

- Element Recorder: As the name suggests, it records a user's operations and automatically generates the corresponding visual components. It works well when there is little interference from the environment, and combined with debugging and fine-tuning it can often produce a process prototype quickly.

- Data scraping: This is a particularly interesting feature that grabs structured information from the screen: any data that looks like a list, table, or tree should be scrapable.

- Smart component package: that is, some visual components powered by AI. Through simple drag and drop, some functions like the following can be completed:

- Image extraction information: ID card extraction, invoice extraction, business license extraction, bank card extraction, train ticket extraction, etc.

- Document extraction information: procurement contracts, banking retail loan contracts, civil judgments, bond prospectuses, etc.

- Document intelligent analysis: text classification, text review, text summarization, label extraction, opinion extraction, sentiment analysis, etc.

- Process debugging: During process development, we may need real-time debugging to find problems in the process, with features such as breakpoints on arbitrary steps, step over, step into, step out, and viewing the current state (such as variables). With debugging, we can pause at any step of the process and inspect the current context in order to adjust and optimize the process.

- Version management: Once a process is developed, we need to release it to the control center; this step is called “publishing”. A mature RPA product should fully support versioning: it should be able to publish a specific version of the current process and roll back to any historical version. It should also allow multiple process designers to modify the same process at the same time.

- Picture-in-Picture: Its main purpose is environment isolation. It provides a virtual session layer that isolates the robot's operations from the host machine's current session, so business users can keep using the computer normally while the robot runs, without the two interfering with each other.

- Code support: Also known as expert mode, it lets designers call the underlying APIs, Block APIs, and so on directly. It allows extensions of arbitrary complexity, so process designers with programming skills can extend the capabilities of RPA.
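
The publish-and-rollback behavior described under version management can be modeled with a small in-memory store. This is a hypothetical sketch (the `ProcessRepository` class and its methods are invented for illustration, not any product's API): it keeps every published version and treats rollback as moving an “active” pointer, so history is never lost. Concurrent editing by several designers is out of scope.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ProcessRepository:
    """Hypothetical store of published process versions (illustration only)."""

    history: Dict[str, List[str]] = field(default_factory=dict)  # process -> packages, oldest first
    active: Dict[str, int] = field(default_factory=dict)  # process -> active version (1-based)

    def publish(self, process: str, package: str) -> int:
        """Append a new version and make it the active one; return its version number."""
        versions = self.history.setdefault(process, [])
        versions.append(package)
        self.active[process] = len(versions)
        return len(versions)

    def rollback(self, process: str, version: int) -> None:
        """Activate any published version without deleting anything."""
        versions = self.history.get(process, [])
        if not 1 <= version <= len(versions):
            raise ValueError(f"{process!r} has no version {version}")
        self.active[process] = version

    def current(self, process: str) -> str:
        """Return the package that robots should run for this process."""
        return self.history[process][self.active[process] - 1]


repo = ProcessRepository()
repo.publish("invoice_entry", "package-v1")
repo.publish("invoice_entry", "package-v2")
repo.rollback("invoice_entry", 1)
print(repo.current("invoice_entry"))  # package-v1
```

Because rollback only changes the pointer, a later rollback to version 2 (a “roll forward”) remains possible.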

2. Control Center

Daguan RPA Control Center


The control center is the brain of the RPA robots: all tasks are issued from here, so it can also be seen as their central processing unit. It mainly provides the following functions:

- Process management: manage the published visual processes, import and export, view specific process versions, etc.

- Bot Management: Manage RPA bots, including checking which robots are online, enabling and disabling robots, and choosing whether to share robots. You can also view a robot's desktop directly through remote desktop technology.

- Task management: A task is a template (static) that defines how a robot runs a process. A task is created by selecting a specific process and configuring its parameters. Once the task is created, the control center schedules RPA robots according to the task's scheduling settings.

- Job management: Each time a task starts running, a “job” (runtime, dynamic) is generated. It contains information about the robot's run, such as running logs and running status.

- Data assets: Put simply, a secure database in the cloud. When permissions allow, a process can create, read, update, and delete the specified data. There is also the concept of secure fields: for example, when a field is of the “encrypted” type, its value should not be printable directly on the development platform.

- Rights management: role-based rights management model. Different roles can be assigned to different accounts, and different functions can be assigned to different roles.

- Tenant management: Multi-tenancy is a software architecture in which a single software instance can serve multiple different user groups. SaaS is an example of a multi-tenant architecture. Data is completely isolated between different tenants.

- Operation and maintenance management: manage the RPA server itself. Server resource monitoring, system operation monitoring, etc. can be performed.

- Report analysis: The control center provides all data about the robot, and we can use these data to perform various statistical analysis. For example, analyze the execution success rate of a certain process, the execution efficiency of a certain robot, etc.

- Log audit: Every operation should leave traces. It should include related logs of RPA robots, as well as related logs of user operations.
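
The distinction between a task (static template) and a job (runtime instance) can be sketched as follows. All class and field names here are hypothetical; the point is that one task can spawn many jobs, and per-job status is the raw material for report analysis such as a process's success rate.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Task:
    """Static template: which process to run, with which parameters, on which robot."""
    name: str
    process: str
    params: Dict[str, str]
    robot: str


@dataclass
class Job:
    """Dynamic runtime record created each time a task starts."""
    job_id: int
    task: Task
    status: str = "running"
    logs: List[str] = field(default_factory=list)


class ControlCenter:
    """Hypothetical scheduler that turns tasks into jobs and tracks their outcome."""

    def __init__(self) -> None:
        self._next_id = itertools.count(1)
        self.jobs: List[Job] = []

    def start(self, task: Task) -> Job:
        job = Job(next(self._next_id), task)
        job.logs.append(f"dispatched {task.process} to {task.robot}")
        self.jobs.append(job)
        return job

    def finish(self, job: Job, succeeded: bool) -> None:
        job.status = "succeeded" if succeeded else "failed"
        job.logs.append(f"job {job.job_id} {job.status}")

    def success_rate(self, process: str) -> float:
        """Share of finished jobs of `process` that succeeded (0.0 if none finished)."""
        done = [j for j in self.jobs if j.task.process == process and j.status != "running"]
        if not done:
            return 0.0
        return sum(j.status == "succeeded" for j in done) / len(done)


daily = Task("daily-invoices", "invoice_entry", {"date": "today"}, "robot-01")
center = ControlCenter()
first = center.start(daily)
center.finish(first, succeeded=True)
second = center.start(daily)
center.finish(second, succeeded=False)
print(center.success_rate("invoice_entry"))  # 0.5
```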

3. Robots

RPA robot: Simply put, it executes the tasks sent by the control center. It is the executor of RPA!

RPA Element Analyzer

Two concepts need to be understood: user interface tree, element selector.

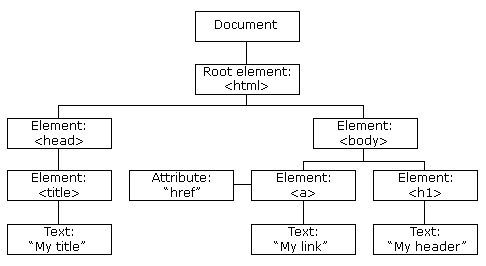

- User interface tree: an abstract data structure representing the user interface, including both static and dynamic data. Each node in the tree is an element of the user interface; for each element we can view its attributes and test operations on it.

- Element selector: a way of locating an element or a group of elements in the user interface tree. A selector can locate an element precisely, such as the window titled X, or fuzzily, such as any button whose name starts with X.
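
To make the two concepts concrete, here is a toy user interface tree with a selector that supports both precise and fuzzy matching. The `Element` class and the keyword-argument selector syntax are assumptions made for illustration; real RPA products use their own selector formats (often XML- or XPath-like), and the wildcards here are Python's shell-style `fnmatch` patterns.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase
from typing import Iterator, List


@dataclass
class Element:
    """One node of the UI tree."""
    role: str  # e.g. "window", "button", "edit"
    name: str
    children: List["Element"] = field(default_factory=list)


def walk(root: Element) -> Iterator[Element]:
    """Depth-first traversal of the UI tree, starting with the root itself."""
    yield root
    for child in root.children:
        yield from walk(child)


def select(root: Element, **criteria: str) -> List[Element]:
    """Return every element whose attributes match all criteria.

    A plain value such as name="Save" is a precise match; a wildcard value such as
    name="Save*" is a fuzzy match for any name that starts with "Save".
    """
    return [
        el
        for el in walk(root)
        if all(fnmatchcase(getattr(el, attr), pattern) for attr, pattern in criteria.items())
    ]


form = Element("window", "Invoice X", [
    Element("button", "Save"),
    Element("button", "Save As"),
    Element("edit", "Amount"),
])
print([e.name for e in select(form, role="button", name="Save")])   # ['Save']
print([e.name for e in select(form, role="button", name="Save*")])  # ['Save', 'Save As']
```

The precise selector is robust against picking the wrong control, while the fuzzy one survives small label changes; the same trade-off comes up again with unstable control properties in GUI automation.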

So why do we need element analyzers? Because there are so many UI framework technologies, and each one requires its own adaptation. To automate the Web, we need to understand web automation technology; to automate Java programs, Java automation technology; to automate SAP programs, the SAP automation engine. Adapting to these UI frameworks should be left to the RPA vendor. What we need is a unified, simple way to automate every UI element we can see, so that process designers only need to think: Oh! Here’s a button, and I need to click it!

Viewed by positioning method, element analyzers have developed through the following stages:

- Absolute coordinate positioning: for example, a game helper plug-in that drinks potions automatically. It monitors the color of a specific coordinate on the screen, and when the health bar turns gray at that point, it drinks a bottle of Golden Sore Medicine (by pressing the key that uses the Golden Sore Medicine item).

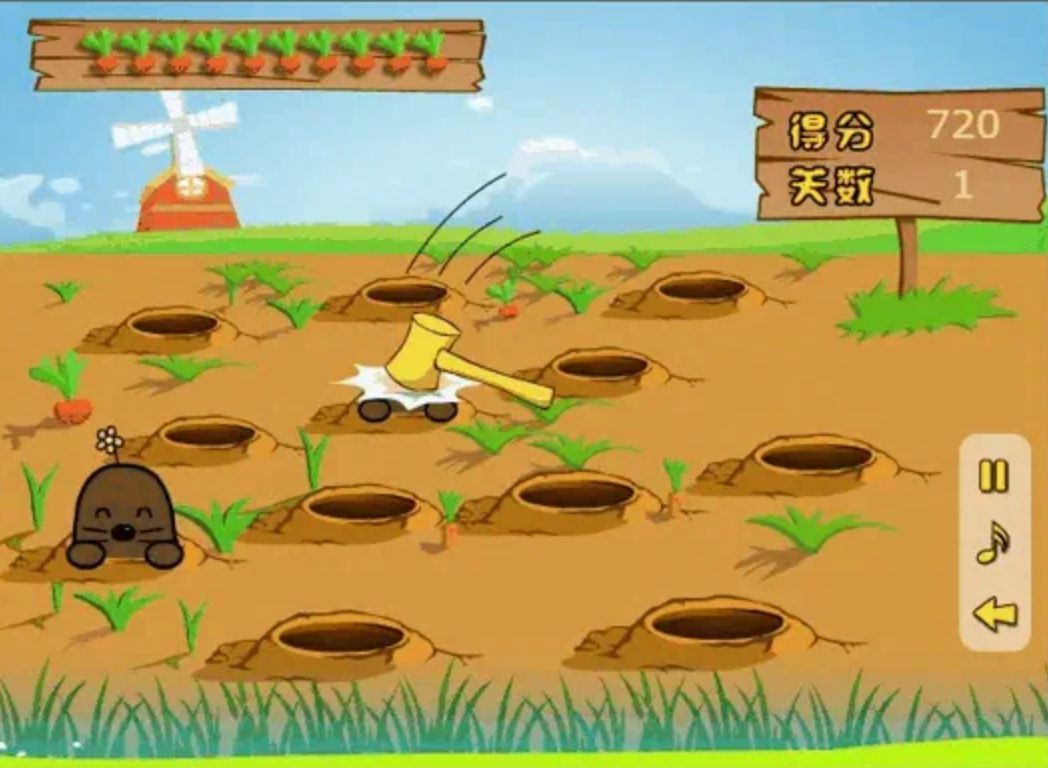

Image recognition diagram 1

- Image recognition: find a small image inside a large one. For example, repeatedly check whether a “gopher” appears on the screen and, when it does, move the mouse to click on it.

Image recognition diagram 2

- Selector positioning: The DOM (Document Object Model) abstracts the entire UI into a “tree” data structure, and syntaxes such as XPath and CSS Selector are used to locate elements in it. A selector can be written very “precisely” or very “fuzzily”.

Schematic diagram of selector positioning DOM tree

- Intelligent positioning: using AI to empower RPA, for example locating elements with object detection algorithms.
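
The first approach in this list, absolute coordinate positioning, boils down to sampling one fixed pixel. The sketch below assumes each screen frame is a 2D list of RGB tuples and that `press_key` is a hypothetical callback that sends the potion hotkey (`F1` here is also hypothetical). It also shows why the approach is fragile: if the window moves, the fixed coordinate no longer points at the health bar.

```python
from typing import Callable, Iterable, List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B)
Frame = List[List[Pixel]]  # frame[y][x]


def is_gray(pixel: Pixel, tolerance: int = 10) -> bool:
    """A pixel counts as gray when its R, G and B channels are nearly equal."""
    return max(pixel) - min(pixel) <= tolerance


def auto_potion(frames: Iterable[Frame], x: int, y: int,
                press_key: Callable[[str], None], hotkey: str = "F1") -> int:
    """Press `hotkey` whenever the pixel at the fixed screen coordinate (x, y) is gray.

    Returns the number of key presses, i.e. potions used.
    """
    presses = 0
    for frame in frames:
        if is_gray(frame[y][x]):
            press_key(hotkey)
            presses += 1
    return presses


red, gray = (200, 30, 30), (90, 90, 90)
frames = [[[red, red]], [[red, gray]], [[red, red]]]  # three screenshots, 2 px wide, 1 px high
pressed: List[str] = []
print(auto_potion(frames, x=1, y=0, press_key=pressed.append))  # 1
```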

Schematic diagram of object detection

The two core tasks of RPA: element positioning and element manipulation

Positioning of elements:

- Screen-coordinate-based (HitTest): mainly used by the element analyzer's capture function, which lets the process designer easily select a target element by pointing at it.

- Selector-based: select an element or a group of elements in the DOM using a simple selector syntax. This is mainly used at run time to find the target element in the execution environment.

- CV-based: locate target elements through template matching or related AI algorithms. This is useful when the business program does not support element capture!
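
Screen-coordinate positioning (HitTest) amounts to finding the deepest element whose bounding rectangle contains the point. A minimal sketch over a toy tree follows; on Windows the real work is done by APIs such as `WindowFromPoint`, `AccessibleObjectFromPoint` (MSAA), or `IUIAutomation::ElementFromPoint` (UIA), and the assumption that later siblings are drawn on top is a simplification.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Rect = Tuple[int, int, int, int]  # (left, top, right, bottom) in screen pixels


@dataclass
class Node:
    name: str
    rect: Rect
    children: List["Node"] = field(default_factory=list)

    def contains(self, x: int, y: int) -> bool:
        left, top, right, bottom = self.rect
        return left <= x < right and top <= y < bottom


def hit_test(root: Node, x: int, y: int) -> Optional[Node]:
    """Return the deepest node under (x, y), or None if the point is outside `root`."""
    if not root.contains(x, y):
        return None
    # Assumption: later siblings are drawn on top, so they are checked first.
    for child in reversed(root.children):
        hit = hit_test(child, x, y)
        if hit is not None:
            return hit
    return root


window = Node("window", (0, 0, 400, 300), [
    Node("toolbar", (0, 0, 400, 40), [Node("Save button", (10, 5, 70, 35))]),
    Node("amount edit", (10, 60, 390, 90)),
])
print(hit_test(window, 20, 20).name)    # Save button
print(hit_test(window, 200, 75).name)   # amount edit
print(hit_test(window, 200, 200).name)  # window
```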

Operations on elements:

- Method-based AT (Assistive Technology): When the business program supports AT, we can directly call the AT method to operate. For example, calling the click function of a button, setting the content of the text box, etc. Most business programs support the AT method!

- Message-based: On Windows, windows communicate with each other through messages, so we can use Windows messages to manipulate elements.

- Based on keyboard and mouse simulation: If the target element, once obtained, supports neither AT nor messages, we can still operate it in a crude but simple way by simulating the keyboard and mouse. This is also the most human-like approach and works in every scenario; after all, people interact with computers the same way. Keyboard and mouse simulation is itself essentially based on Windows messages.
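
In practice these three operation methods are often tried in order of reliability: the AT method first, then window messages, then keyboard and mouse simulation as the last resort. The sketch below shows only that fallback logic; the three strategy functions are hypothetical stand-ins, not calls into a real automation library.

```python
from typing import Callable, List, Tuple

Strategy = Tuple[str, Callable[[], bool]]  # (name, action); action returns False if unsupported


def operate_with_fallback(strategies: List[Strategy]) -> str:
    """Run strategies in order and return the name of the first one that succeeds."""
    errors: List[str] = []
    for name, action in strategies:
        try:
            if action():
                return name
            errors.append(f"{name}: not supported")
        except Exception as exc:  # a failing strategy should not stop the fallback chain
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all strategies failed: " + "; ".join(errors))


# Hypothetical stand-ins for a control without AT support whose window ignores messages.
def at_invoke() -> bool:
    return False  # element exposes no AT "click" method


def send_click_message() -> bool:
    raise OSError("window did not respond to the click message")


def simulate_mouse_click() -> bool:
    return True  # moving the cursor and clicking works whenever the element is visible


used = operate_with_fallback([
    ("AT method", at_invoke),
    ("window message", send_click_message),
    ("mouse simulation", simulate_mouse_click),
])
print(used)  # mouse simulation
```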

Automation technologies can be classified by whether they go through a GUI. RPA supports both types of automation, and therefore works with business programs both with and without a GUI.

- Non-GUI automation is easy to write: the test code is relatively easy to write and debug; it runs stably and is not easily affected by environmental changes; it is easy to maintain and does not need frequent updates; and it runs efficiently, much faster than GUI automation.

- GUI automation has broad coverage: most of it follows the underlying accessibility specification of the UI framework. It is non-intrusive and does not require source code or SDK access to the business application. (Strictly speaking, it is intrusive, just at the UI framework layer!) It simulates user operations as closely as possible, doing exactly what a user would do.

- There are a few factors that make GUI automation unstable:

- Random pop-up windows: When the automation script finds that the control cannot be positioned normally or cannot be operated, the GUI automation framework automatically enters the “abnormal scene recovery mode”. In the “abnormal scene recovery mode”, the GUI automation framework detects various possible dialog boxes in turn, and once the type of the dialog box is confirmed, it immediately executes a predefined operation (for example: click the “OK” button), and then retries the steps that just failed.

- Control property changes: Exact matching is more precise, but “fuzzy matching” is more flexible and can improve the control recognition rate. During process design, you often need to weigh which attributes of the target element and of its adjacent levels in the tree to use.

- A/B testing in the system under test: the interface seen during development may differ from the interface seen at run time. The test script must handle each branch so that the different variants are identified correctly.

- Random page delays that cause control recognition to fail: introduce a retry mechanism, which can operate at the step level, page level, or business process level.
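
The “abnormal scene recovery mode” and the retry mechanism above can be combined in one step runner. This is a sketch under stated assumptions: the step raises `LookupError` when its control cannot be located, `detect_dialog` is a hypothetical callback that returns the type of any pop-up on screen (or `None`), and `handlers` maps each known pop-up type to its predefined action.

```python
import time
from typing import Callable, Dict, Optional, TypeVar

T = TypeVar("T")


def run_step(step: Callable[[], T],
             detect_dialog: Callable[[], Optional[str]],
             handlers: Dict[str, Callable[[], None]],
             retries: int = 3,
             delay: float = 0.5) -> T:
    """Run one automation step, recovering from known pop-ups and retrying on failure."""
    last_error: Optional[Exception] = None
    for attempt in range(1, retries + 1):
        try:
            return step()
        except LookupError as exc:  # assumed signal: control could not be located
            last_error = exc
        dialog = detect_dialog()
        if dialog in handlers:
            handlers[dialog]()  # recovery mode: run the predefined action, e.g. click "OK"
        elif attempt < retries:
            time.sleep(delay)  # no known pop-up: maybe the page is slow, so wait and retry
    raise RuntimeError(f"step failed after {retries} attempts") from last_error


# Simulated screen state: an update-reminder pop-up hides the Submit button.
screen = {"popup": True}


def click_submit() -> str:
    if screen["popup"]:
        raise LookupError("Submit button not found")
    return "submitted"


result = run_step(
    click_submit,
    detect_dialog=lambda: "update-reminder" if screen["popup"] else None,
    handlers={"update-reminder": lambda: screen.update(popup=False)},
)
print(result)  # submitted
```

Wrapping each step this way gives step-level retries; the same runner can wrap a whole page or business process to get the coarser levels mentioned above.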

Behind the scenes of the Element Analyzer

1. Win32 API

The underlying API of Microsoft’s Windows operating system. Its advantages are that it is low-level and powerful, and it supports standard Windows controls well. Its disadvantages are that it is very complicated, development efficiency is low, and custom controls are not supported.

- Window recognition: find window handles with FindWindow and EnumWindows, then call other APIs such as GetWindowText, GetWindowRect, and GetWindowLong to read window properties and use them to find the desired control or window.

- Manipulating and reading properties: use SetWindowText and GetWindowText to set and read the text displayed on a control, SetForegroundWindow to bring a window to the foreground, and GetForegroundWindow to get the current foreground window; GetActiveWindow and SetActiveWindow work similarly. In theory, most controls and windows can be operated through the Windows API and Windows messages, and some properties of some controls can be read as well.
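
Window recognition with the Win32 API can be tried from Python through the standard `ctypes` module. This is a minimal sketch that enumerates visible top-level windows with `EnumWindows`, then reads each window's title with `GetWindowTextW` and its position with `GetWindowRect`; it only does real work on Windows and returns an empty list on other platforms.

```python
import ctypes
import sys
from typing import List, Tuple

WindowInfo = Tuple[int, str, Tuple[int, int, int, int]]  # (handle, title, (left, top, right, bottom))


def list_visible_windows() -> List[WindowInfo]:
    """Enumerate visible top-level windows that have a title (Windows only)."""
    if sys.platform != "win32":
        return []
    from ctypes import wintypes

    user32 = ctypes.WinDLL("user32", use_last_error=True)
    # Declare argument types so 64-bit handles are passed correctly.
    user32.GetWindowTextLengthW.argtypes = [wintypes.HWND]
    user32.GetWindowTextW.argtypes = [wintypes.HWND, wintypes.LPWSTR, ctypes.c_int]
    user32.IsWindowVisible.argtypes = [wintypes.HWND]
    user32.GetWindowRect.argtypes = [wintypes.HWND, ctypes.POINTER(wintypes.RECT)]
    EnumWindowsProc = ctypes.WINFUNCTYPE(wintypes.BOOL, wintypes.HWND, wintypes.LPARAM)
    user32.EnumWindows.argtypes = [EnumWindowsProc, wintypes.LPARAM]

    windows: List[WindowInfo] = []

    def on_window(hwnd, _lparam):
        length = user32.GetWindowTextLengthW(hwnd)
        if length and user32.IsWindowVisible(hwnd):
            title = ctypes.create_unicode_buffer(length + 1)
            user32.GetWindowTextW(hwnd, title, length + 1)
            rect = wintypes.RECT()
            user32.GetWindowRect(hwnd, ctypes.byref(rect))
            windows.append((hwnd, title.value, (rect.left, rect.top, rect.right, rect.bottom)))
        return True  # keep enumerating

    user32.EnumWindows(EnumWindowsProc(on_window), 0)
    return windows


for hwnd, title, rect in list_visible_windows():
    print(hwnd, title, rect)
```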

2. MSAA

MSAA (Microsoft Active Accessibility) is a technology that improves the accessibility of Windows controls. MSAA was not designed for automated testing. Its purpose is to provide a set of interfaces that make it easy for developers to build software usable by people with disabilities, such as screen readers (which announce a button when the mouse moves over it, helping visually impaired people operate the computer), in pursuit of Microsoft’s dream of bringing a computer into every home. MSAA is primarily a COM-based technology: UI elements are represented by the IAccessible interface, which is obtained through APIs such as AccessibleObjectFromWindow. With MSAA we can query element information, such as the element at a specific screen location; register for events to be notified when element information changes, for example when a button is disabled or a string changes; and manipulate interface elements such as buttons, drop-down boxes, and menus.

- Advantages: Compared with the Windows API, users only need to deal with IAccessible; the control information available through this interface is relatively rich, and basic operations do not require Windows messages. Another big advantage is support for custom controls. This does not mean that any custom control is automatically recognized by MSAA; rather, when developers implement a custom control, they can implement the IAccessible interface to expose some of its properties and operations. Testers can then treat the control as a standard control and automate it through MSAA. This is somewhat cumbersome, but at least it makes automating custom controls possible.

- Disadvantages: MSAA has inherent limitations because it was never designed for automated testing and does not consider its needs. It exposes more control information than the Windows API, but far less than automated testing requires, and it supports only one basic operation (the default action); everything else must go through Windows messages. In addition, after Microsoft launched WPF, the limitations of MSAA became increasingly obvious (WPF controls have richer properties and are more customizable and flexible, which MSAA struggles to describe). This is one of the reasons Microsoft launched UI Automation.

How it works: the application that provides information is called the Server. It raises event notifications via NotifyWinEvent and responds to the WM_GETOBJECT message by returning element information through IAccessible. The Client obtains an IAccessible through AccessibleObjectFromWindow, AccessibleObjectFromPoint, or AccessibleObjectFromEvent, and can move between elements with accNavigate and get_accParent.

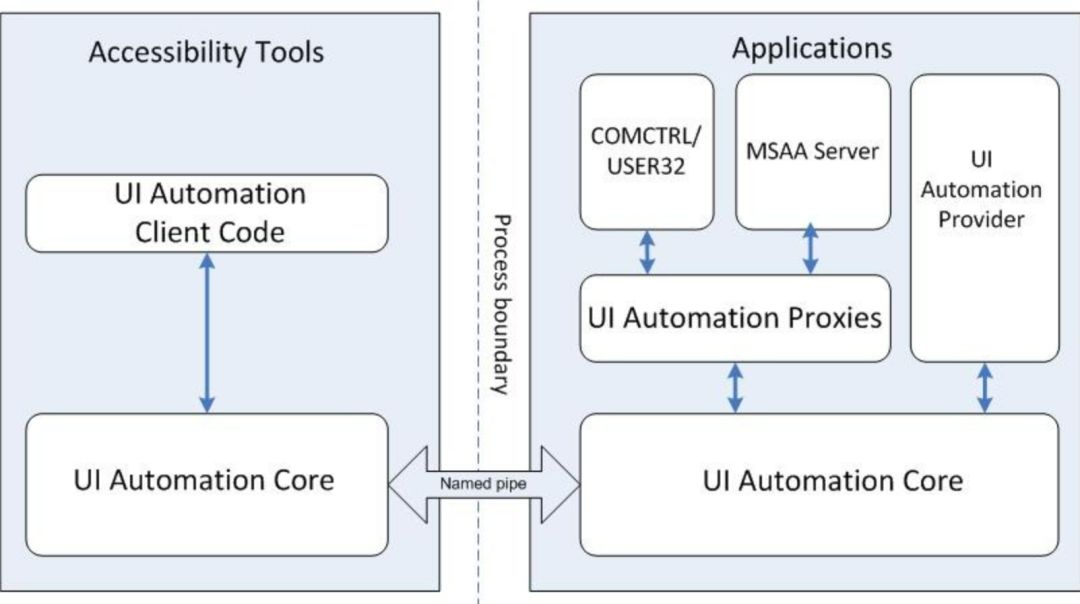

3. UIA (User Interface Automation)

UIA is Microsoft’s newer-generation accessibility framework and is supported on all operating systems that support WPF. UIA provides programmatic access to most UI elements. It abstracts the common properties of most UI frameworks: for example, the Content property of WPF buttons, the Caption property of Win32 buttons, and the alt attribute of HTML images are all mapped to the Name property in UIA. UIA is also compatible with MSAA: architecturally, when UIA targets standard controls, it calls the MSAA Server through a UI Automation Proxy, so it essentially covers MSAA’s functionality.

UIA User Interface Automation
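
The Name abstraction described above can be illustrated with a small normalization table. This is a conceptual sketch only: the dictionary mirrors the three examples in the text (WPF `Content`, Win32 `Caption`, HTML `alt`), whereas real UIA performs this mapping inside the framework's providers and exposes the result through the `CurrentName` property of `IUIAutomationElement`.

```python
from typing import Dict, Mapping

# Which framework-specific attribute plays the role of UIA's Name property (illustrative subset).
NAME_SOURCE: Dict[str, str] = {
    "wpf": "Content",    # WPF button content
    "win32": "Caption",  # Win32 button caption
    "html": "alt",       # HTML <img> alt text
}


def uia_name(framework: str, attributes: Mapping[str, object]) -> str:
    """Return the unified Name for an element, or "" if nothing maps to it."""
    source = NAME_SOURCE.get(framework)
    if source is None:
        return ""
    return str(attributes.get(source, ""))


elements = [
    ("wpf", {"Content": "OK", "Width": 75}),
    ("win32", {"Caption": "Cancel", "ClassName": "Button"}),
    ("html", {"alt": "Company logo", "src": "logo.png"}),
]
print([uia_name(fw, attrs) for fw, attrs in elements])  # ['OK', 'Cancel', 'Company logo']
```

A selector written against the unified Name then works regardless of which framework drew the control, which is exactly what lets process designers ignore the underlying technology.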


4. JAB

JAB (Java Access Bridge) is a framework that exposes element information for Java applications, primarily intended for screen readers and other assistive programs. With JAB, we can access Java-based business programs from vendors such as Oracle (its application suites), Kingdee, and UFIDA.

5. SAP

SAP provides the Scripting Engine for user interface automation. The SAP Scripting Engine is a COM-based interface that offers comprehensive scripting support for SAP. To use it, the sapgui/user_scripting parameter must be set to TRUE in transaction RZ11.

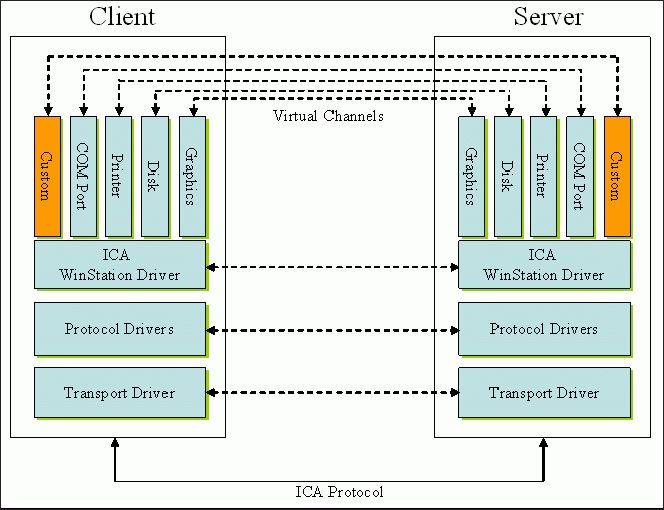
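
Once scripting is enabled, SAP GUI can be driven from Python through COM. The sketch below assumes Windows, the `pywin32` package, and a running, logged-on SAP GUI. `findById`, `sendVKey`, and the `wnd[0]/tbar[0]/okcd` ID of the command field follow the usual SAP GUI Scripting conventions, but IDs for other screens should be taken from the SAP GUI script recorder rather than guessed.

```python
def get_sap_session(connection_index: int = 0, session_index: int = 0):
    """Attach to a running SAP GUI session (Windows only; requires pywin32)."""
    import win32com.client  # imported lazily so this module loads on any platform

    sap_gui = win32com.client.GetObject("SAPGUI")
    engine = sap_gui.GetScriptingEngine  # the GuiApplication object
    connection = engine.Children(connection_index)
    return connection.Children(session_index)


def start_transaction(session, tcode: str) -> None:
    """Type /n<tcode> into the command field of the main window and press Enter."""
    session.findById("wnd[0]/tbar[0]/okcd").text = "/n" + tcode
    session.findById("wnd[0]").sendVKey(0)  # virtual key 0 = Enter


# Example (requires a live SAP GUI):
# start_transaction(get_sap_session(), "SE16")
```

Keeping the session lookup separate from the actions means the action functions only depend on the `findById` interface, which makes them easy to reuse across connections and sessions.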

6. Citrix Virtual Channel

Citrix provides a Virtual Channel mechanism to meet the communication needs between Client and Server. Using Virtual Channel, we can send arbitrary commands to Citrix virtual desktops and obtain information needed for business. Citrix is implemented based on the ICA (Independent Computing Architecture) protocol.

Citrix Virtual Channel

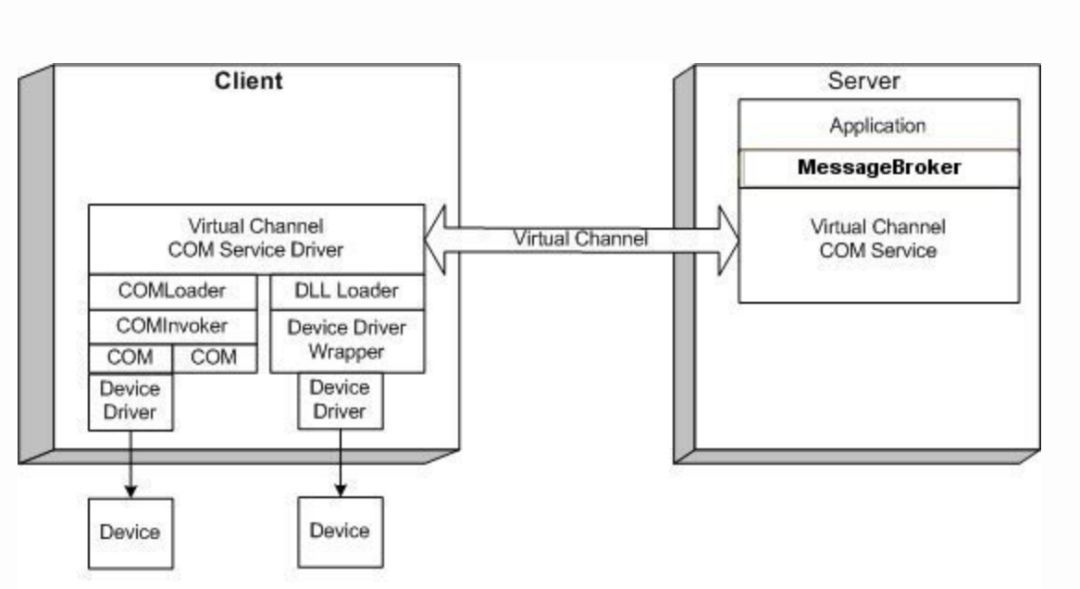

7. RDP Virtual Channel

RDP (Remote Desktop Protocol) is a remote desktop protocol developed by Microsoft. RDP also provides a Virtual Channel mechanism to meet the communication needs between Client and Server.

RDP Virtual Channel


8. Browser Controls

- Selenium: An open-source browser automation framework that supports most browsers. Many web crawlers are built with Selenium, and it offers bindings for most programming languages. But… precisely because Selenium is so well known, many commercial websites have implemented anti-automation measures against it, so pages operated through Selenium may trigger CAPTCHAs, random verification forms, and similar checks. In addition, Selenium's support for IE is not very good.

- IE Automation: MSHTML/Trident, via the IWebBrowser2 interface. IE uses the Trident browser engine, first released with IE4 in 1997, and can be automated through the IWebBrowser2 interface. Support for IE11 on Windows 10 ended on June 15, 2022.

- Chrome Extension: A Chrome browser extension is a technology that allows developers to customize the user experience. Web technologies such as HTML, CSS, JavaScript can be utilized to customize the browsing experience.

- CDP (Chrome DevTools Protocol): a communication protocol supported by Chrome, Chromium, and other browsers based on the Blink engine. It can be used to inspect, debug, and monitor the browser. Blink is Chromium’s rendering engine.
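
CDP messages are plain JSON objects with an `id`, a `method`, and `params`. The sketch below only builds command messages; it assumes you would send them as WebSocket text frames to the debugger URL that Chrome exposes when started with `--remote-debugging-port`, which is omitted here. `Runtime.evaluate` and `Input.dispatchMouseEvent` are real CDP methods; the `CdpCommands` helper class is hypothetical.

```python
import itertools
import json
from typing import Any, List


class CdpCommands:
    """Hypothetical helper that builds Chrome DevTools Protocol command messages."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)  # each command needs a unique id to match its response

    def command(self, method: str, **params: Any) -> str:
        """Serialize one command as the JSON text sent over the DevTools WebSocket."""
        return json.dumps({"id": next(self._ids), "method": method, "params": params})

    def evaluate(self, expression: str) -> str:
        """Run JavaScript in the page and ask for the result by value."""
        return self.command("Runtime.evaluate", expression=expression, returnByValue=True)

    def click(self, x: float, y: float) -> List[str]:
        """A left click is a mousePressed followed by a mouseReleased at the same point."""
        return [
            self.command("Input.dispatchMouseEvent", type=event, x=x, y=y,
                         button="left", clickCount=1)
            for event in ("mousePressed", "mouseReleased")
        ]


cdp = CdpCommands()
print(cdp.evaluate("document.title"))
# {"id": 1, "method": "Runtime.evaluate", "params": {"expression": "document.title", "returnByValue": true}}
```

The browser replies with a JSON object carrying the same `id`, which is how a client pairs responses with the commands it sent.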

9. Office automation

Microsoft Office automation is mainly implemented through the IDispatch interface of COM. Through IDispatch, we can automate Office from any language that supports COM calls, such as Python and VBScript.
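
As a sketch of IDispatch-based Office automation from Python, assuming Windows, Excel, and the `pywin32` package: `win32com.client.Dispatch("Excel.Application")` returns a late-bound COM object, and calls such as `Workbooks.Add()` and `Cells(row, col).Value` are resolved through IDispatch at run time. The cell-filling logic is kept in its own function so it does not depend on how the worksheet object was obtained.

```python
from typing import Any, Optional, Sequence


def fill_sheet(sheet: Any, rows: Sequence[Sequence[Any]]) -> None:
    """Write rows into a worksheet starting at A1 (Excel cells are 1-based)."""
    for r, row in enumerate(rows, start=1):
        for c, value in enumerate(row, start=1):
            sheet.Cells(r, c).Value = value


def export_to_excel(rows: Sequence[Sequence[Any]], path: Optional[str] = None) -> None:
    """Create a workbook through COM, fill it, optionally save it, then quit Excel."""
    import win32com.client  # pywin32; Windows only

    excel = win32com.client.Dispatch("Excel.Application")
    excel.Visible = False
    workbook = excel.Workbooks.Add()
    try:
        fill_sheet(workbook.Worksheets(1), rows)
        if path:
            workbook.SaveAs(path)  # Excel expects an absolute path here
    finally:
        workbook.Close(False)  # SaveChanges=False: already saved above if requested
        excel.Quit()


# Example (requires Excel): export_to_excel([["Name", "Amount"], ["Alice", 42]], r"C:\temp\report.xlsx")
```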


This site is only for collection, and the copyright belongs to the original author.