Overview: Why Flow Control Matters

The advantage of moving to the cloud is that resources can be pooled, shared among multiple tenants, and allocated on demand, which reduces costs. But doing so brings two requirements:

- Multi-tenant isolation: users expect to be able to use the throughput they have purchased without being affected by other tenants.

- Resource sharing: resources can only be separated logically, not physically; otherwise the platform cannot allocate them dynamically enough (that is, oversell them).

These two requirements pull against each other, and I think reconciling them is the most important problem a cloud-native database has to solve. If it is not solved, then:

- Either the platform does not make money: for example, with static resource reservation, users can always use the quota sold to them, but a great deal of capacity sits idle, so either the price is high or users will not pay.

- Or users are dissatisfied: multiple users share physical resources but interfere with one another very easily, so users cannot actually use the quota the platform promised.
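The tradeoff above can be made concrete with a little arithmetic. The following is only an illustrative sketch: the tenant names, the demand numbers, and the quota of 100 are hypothetical and do not come from the DynamoDB paper. It compares the capacity a platform must provision under static reservation with the capacity a shared pool would need for the same traffic.

```python
# Hypothetical per-tenant demand over four time steps (requests per second).
# These numbers are made up for illustration only.
demand = {
    "tenant_a": [100, 20, 10, 30],
    "tenant_b": [10, 90, 20, 10],
    "tenant_c": [20, 10, 100, 40],
}
QUOTA = 100  # throughput sold to each tenant (assumed value)


def static_capacity(num_tenants, quota):
    """Static reservation: every tenant's full quota is set aside at all times."""
    return num_tenants * quota


def pooled_capacity(demand):
    """Shared pool: provision only for the largest combined demand observed."""
    return max(sum(step) for step in zip(*demand.values()))


def utilization(demand, capacity):
    """Fraction of provisioned capacity that is actually used over all steps."""
    total_used = sum(sum(series) for series in demand.values())
    steps = len(next(iter(demand.values())))
    return total_used / (capacity * steps)


static = static_capacity(len(demand), QUOTA)
pooled = pooled_capacity(demand)
print(f"static: capacity={static}, utilization={utilization(demand, static):.0%}")
print(f"pooled: capacity={pooled}, utilization={utilization(demand, pooled):.0%}")
```

In this toy data the pool is much smaller than the sum of the quotas, which is where the savings come from. It is also where the risk comes from: if every tenant tried to use its full quota in the same time step, the pool could not serve them all, and that is the interference described in the second bullet.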

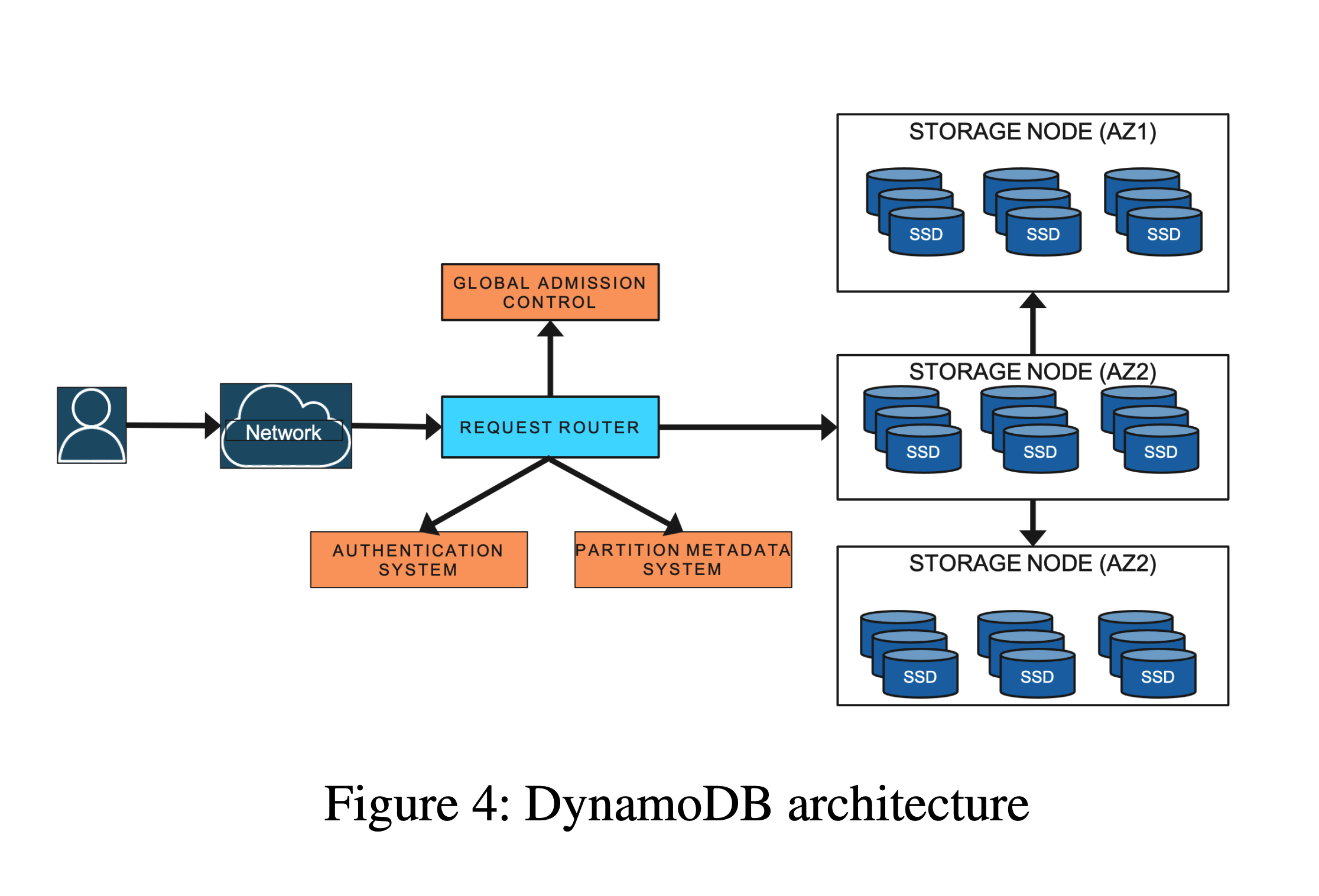

Starting from static allocation, DynamoDB has gradually evolved an admission control mechanism that combines global and local control. It shares physical resources while logically isolating users by quota, which is what makes the database truly cloud native. Below, based on the details disclosed in the paper Amazon DynamoDB: A Scalable, Predictably Performant, and Fully Managed NoSQL Database Service, I will walk through the evolution of its flow control mechanism.

My knowledge is limited, so corrections are welcome at any time.

This article is reprinted from https://www.qtmuniao.com/2022/09/24/dynamo-db-flow-control/
