Original link: https://www.indigox.me/make-machine-understand-the-world/

This article has four sections: 1) The triumph of connectionism, 2) The evolution of language models, 3) Intelligent assistants for humans, and 4) GPT-4 translates, humans supervise. It offers a quick tour of how machines have evolved to understand the world. It runs to about 5,000 words, with an estimated reading time of 15 to 20 minutes.

Just a few days before I wrote the translator's preface for "Humanity Takes Off Again in the GPT Era", Professor Geoffrey Hinton, the "Godfather of AI", announced his resignation from Google. In an exclusive interview with The New York Times, the 75-year-old said that age was only part of the reason for his departure. Another important reason is that AI has reached a critical moment that calls for caution, and he needs to move from the role of industry founder to that of industry watchdog, reminding everyone of the challenges of regulating AI.

What kind of historical moment are we in? Professor Hinton compared the emergence of ChatGPT to the arrival of electricity in the second industrial revolution and, further back, to humanity's invention of the wheel. Both changes greatly unleashed productivity. Now we are at the most important moment of the information revolution since the invention of the Internet.

Professor Hinton's stance toward the media this time marks him as a thorough conservative on this intelligence revolution, one who sees it as his duty to prevent AI from destroying humanity. The tension between optimism and pessimism stands out whenever a transformative new technology emerges, and this time is no exception. The author of this book, Reid Hoffman, seems very optimistic about letting AI help humans improve their humanity and expand their capabilities, which may have to do with his being a co-founder of LinkedIn and an early investor in OpenAI. Entrepreneurs and venture capitalists are usually optimists about new technology, while academics mostly stay cautious and level-headed.

Before you officially read this book, let me add some background knowledge about this intelligence revolution that Hoffman did not mention in the book.

The triumph of connectionism

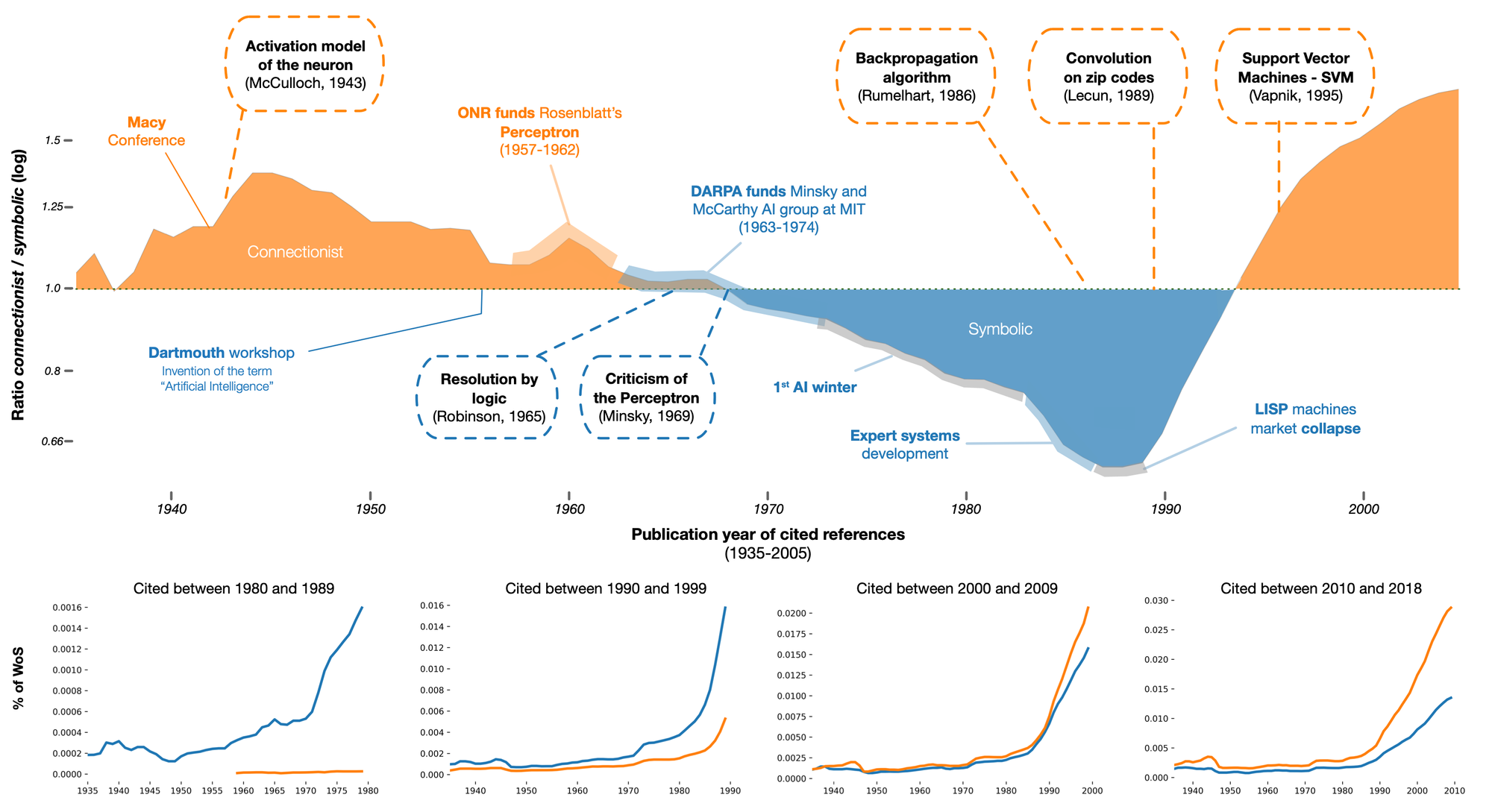

Ever since AI was established as a discipline within computer science in the 1950s, there have been two schools of thought on how to make machines think like humans, process natural language, understand images, and reason logically. One is the "logic school", which advocates realizing machine intelligence by encoding the knowledge humans have summarized in logical form. The other is the "bionic school", which imitates the connections between neurons in the human brain. These two schools are known as Symbolism and Connectionism, respectively.

Neurons spike back

In the first 20 years, thanks to the invention of the Perceptron model, the bionic school was the mainstream direction of AI research. However, it was limited by the computing power and neural network algorithms of the time, and computer programming languages were evolving rapidly. Under these circumstances, "symbolism", which uses program logic to realize machine intelligence, took over. Only a few researchers, represented by John Hopfield, kept fighting for the ideal of "connectionism", and Professor Hinton was one of them.

In the interview, Professor Hinton revealed that in the 1980s, because he was unwilling to accept funding from the Pentagon, he resigned from his job as a computer science professor at Carnegie Mellon University and moved on his own to the University of Toronto in Canada to continue his research on neural networks. Professor Hinton's greatest contribution to the field of AI is an algorithm called backpropagation, which he and two colleagues put forward in the mid-1980s. This technique enables artificial neural networks to "learn" and is the cornerstone of almost all machine learning models today. In short, it is a method of iteratively adjusting the weights of the connections between artificial neurons until the neural network produces the desired output.
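To make "iteratively adjusting the weights" concrete, here is a minimal sketch in plain Python. It is not taken from the interview or from Hinton's papers: the function name `train_or`, the network size, the learning rate, and the choice of the logical OR function as the "desired output" are all illustrative assumptions.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train_or(epochs=2000, lr=0.5, hidden=3, seed=0):
    """Train a tiny 2-input network on logical OR; return the loss per epoch."""
    rng = random.Random(seed)
    data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0),
            ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]
    # w1[j] = [weight from x0, weight from x1, bias] for hidden unit j
    w1 = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(hidden)]
    # w2 = hidden-to-output weights, followed by the output bias
    w2 = [rng.uniform(-1, 1) for _ in range(hidden + 1)]
    losses = []
    for _ in range(epochs):
        total = 0.0
        for (x0, x1), target in data:
            # Forward pass: compute hidden activations and the output.
            h = [sigmoid(w[0] * x0 + w[1] * x1 + w[2]) for w in w1]
            y = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + w2[hidden])
            total += 0.5 * (y - target) ** 2
            # Backward pass: propagate the error from the output to the hidden layer.
            delta_out = (y - target) * y * (1 - y)
            delta_h = [delta_out * w2[j] * h[j] * (1 - h[j]) for j in range(hidden)]
            # Gradient step: nudge every weight against its error gradient.
            for j in range(hidden):
                w2[j] -= lr * delta_out * h[j]
                w1[j][0] -= lr * delta_h[j] * x0
                w1[j][1] -= lr * delta_h[j] * x1
                w1[j][2] -= lr * delta_h[j]
            w2[hidden] -= lr * delta_out
        losses.append(total)
    return losses


if __name__ == "__main__":
    history = train_or()
    print(f"loss at first epoch: {history[0]:.4f}, at last epoch: {history[-1]:.4f}")
```

The error measured at the output is passed backward through the same connections used in the forward pass, which is where the name comes from. Real networks differ mainly in scale, not in this basic loop.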

The full-scale comeback of connectionism began in 2012, when Professor Hinton and two of his students at the University of Toronto, Ilya Sutskever and Alex Krizhevsky, built a neural network, AlexNet, that could analyze thousands of photos and teach itself to recognize common objects such as flowers, dogs, and cars. This convolutional neural network (CNN), trained with the backpropagation algorithm, beat the state-of-the-art logic-based programs at image recognition, nearly halving the previous error rate.

Since 2012, the use of deep neural networks has exploded, and progress has been astonishing. Most research in machine learning now focuses on deep learning. For the first time, humanity has opened the Pandora's box of AI!

The evolution of language models

Language is the fire of human civilization. Yuval Noah Harari wrote in "Sapiens: A Brief History of Humankind" that the ability to use language to cooperate and to invent fictional stories marked the rise of Homo sapiens. Getting machines to understand and use human language is therefore the only way to make machines think like humans and to achieve artificial general intelligence (AGI).

In 2017, researchers from Google Brain and the University of Toronto published a paper called "Attention Is All You Need", which introduced a natural language processing model, the Transformer. This is arguably the most important invention in deep learning since Professor Hinton's AlexNet. In 2018, Google released BERT, its open-source natural language processing model based on the Transformer.

Professor Hinton's student Ilya Sutskever left Google in 2015 to co-found OpenAI. As chief scientist, he quickly recognized the Transformer's value as a unified architecture that lends itself to engineering, and OpenAI rapidly adopted this research result from Google. Just a week after the release of GPT-4, Sutskever had a conversation with Nvidia CEO Jensen Huang at GTC (GPU Technology Conference), titled "AI Today and Vision of the Future". In it, Sutskever said he firmly believes in two things. The first concerns the structure of the model: as long as it is deep enough, "bigger is better". Put simply, brute force works miracles. The more data you add, the better, which is why the Transformer architecture scales better than the long short-term memory (LSTM) architecture they used before. The second is that every paradigm needs an engine, one that can be continuously improved and keep generating value. If the internal combustion engine powered the industrial revolution, the engine now is the Transformer, and GPT is a pre-trained Transformer.

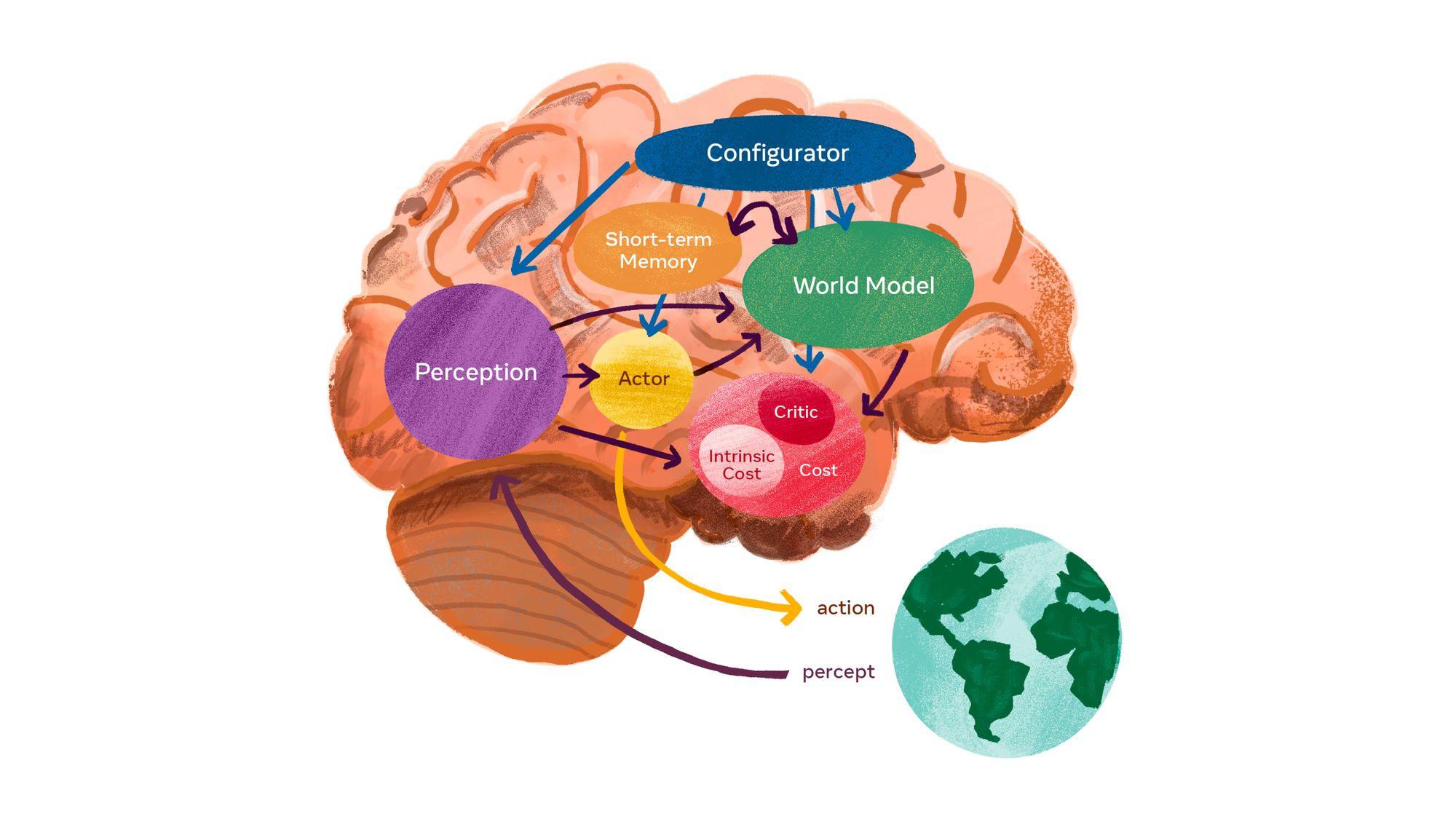

Sutskever holds another belief: "If you can compress information efficiently, you must have acquired knowledge; otherwise you could not compress it." Compressing information efficiently requires some knowledge, so he firmly believes that GPT-3 and the latest GPT-4 already contain a world model. Although all they do is predict the next word, that prediction already expresses information about the world, and their ability keeps improving. Even Professor Yann LeCun, a Turing Award winner and staunch advocate of world model theory, was surprised by GPT-4's cognition and reasoning ability.
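The link between prediction and compression can be illustrated with a small sketch. In information theory, a symbol predicted with probability p can ideally be encoded in -log2(p) bits, so a model that predicts text better needs fewer bits to store it. The Python below is an illustration only, not anything from Sutskever's talk: the function names are made up, the sample text is arbitrary, and the statistics are fitted on the very text being measured, which ignores the cost of storing the model itself.

```python
import math
from collections import Counter, defaultdict


def uniform_bits(text):
    """Bits needed if every character of the alphabet is assumed equally likely."""
    return len(text) * math.log2(len(set(text)))


def unigram_bits(text):
    """Bits needed if the model knows how frequent each character is."""
    counts = Counter(text)
    n = len(text)
    return -sum(math.log2(counts[ch] / n) for ch in text)


def bigram_bits(text):
    """Bits needed if the model predicts each character from the previous one."""
    counts = Counter(text)
    n = len(text)
    follow = defaultdict(Counter)
    for prev, ch in zip(text, text[1:]):
        follow[prev][ch] += 1
    # The first character has no context, so fall back to its overall frequency.
    bits = -math.log2(counts[text[0]] / n)
    for prev, ch in zip(text, text[1:]):
        total = sum(follow[prev].values())
        bits += -math.log2(follow[prev][ch] / total)
    return bits


if __name__ == "__main__":
    sample = "the cat sat on the mat. the cat ate the rat. " * 3
    for name, fn in [("uniform", uniform_bits),
                     ("unigram", unigram_bits),
                     ("bigram", bigram_bits)]:
        print(f"{name:8s} {fn(sample):8.1f} bits")
```

Each step up in "knowledge" about the text (none, letter frequencies, which letter tends to follow which) shortens the encoding. A large language model pushes the same idea much further by conditioning on long contexts.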

A model for understanding the world: LeCun's world model

In his famous essay "The Bitter Lesson", Canadian computer scientist Rich Sutton argues that the biggest lesson from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin. This bitter lesson rests on four historical observations: (1) AI researchers have often tried to build knowledge into their agents; (2) this always helps in the short term and is personally satisfying to the researcher; (3) in the long run it plateaus and even inhibits further progress; and (4) breakthrough progress eventually arrives through the opposite approach, scaling computation through search and learning. This is also what Sutskever believes in and holds to.

The Enlightenment brought rational thinking, and scientists have since used human logic to classify everything, which gave us academic disciplines, each summarizing its own laws. But in the 20th century, the philosopher Wittgenstein proposed a new view: classifying knowledge into a "knowledge map" by discipline can never exhaust all knowledge, because there are always similarities between things that are vague and hard to put into words. A large language model trained on all of humanity's language information links that information through weights that humans cannot interpret and compresses it into its own worldview and knowledge system, with strong generalization ability. The study of an early version of GPT-4 ("Sparks of AGI") found many striking emergent abilities. For example, it can reason about colors purely from its understanding of textual descriptions, and it can even draw a unicorn.

With the rapid evolution of large language models, we will see a shift in the knowledge economy: this knowledge will no longer require humans but will be held and managed by machines through AI. AI will redefine software, or AGI will rewrite it. Industries that depend on rich human experience and specialized services will be able to offer cheaper and more varied services. Asked in an interview how long it would take to achieve AGI, Professor Hinton said he used to think it would take at least 20 to 50 years, but after seeing the capabilities of GPT-4, he thinks it could happen within 5 to 10 years.

Intelligent assistants for humans

On the day GPT-4 was released, OpenAI also published a document called the "GPT-4 System Card", outlining some chilling capabilities that GPT-4 had shown, or rather, had shown before OpenAI took steps to block them. An AI safety research group ran an experiment connecting GPT-4 to several other systems. In the experiment, GPT-4 hired a worker through TaskRabbit to complete a simple online task for it, namely passing a CAPTCHA test, without the worker realizing that a bot was behind the request. Even more surprising, the AI lied to the worker, making up a story that it needed help with the CAPTCHA because of a visual impairment.

The story above comes from a New York Times report published after the release of GPT-4, "GPT-4 is coming, should we be excited or afraid?", and it illustrates one of the core threats that worry Professor Hinton: AI can carry out tasks autonomously and disguise itself when necessary. Hoffman also discusses the problem of AI fabricating information in Chapter 4 of this book. OpenAI's priority now is therefore to ensure the safety of the model rather than to rush to train and release the next version to the public.

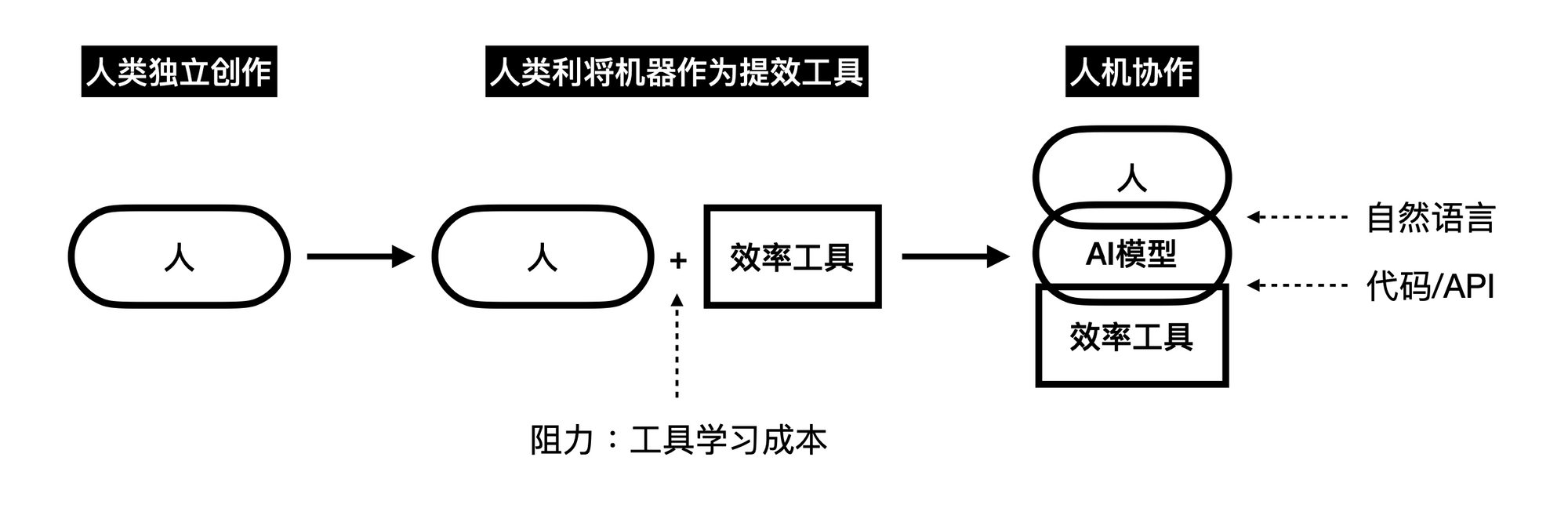

As in the experiment above, GPT-4's powerful information processing and expression let it reason and plan on its own, design tasks accordingly, and finally put them into action by calling tools. In the weeks after GPT-4's launch, the open-source projects that drew the most industry attention were AutoGPT and similar agent tools such as AgentGPT and BabyAGI, which use GPT-3.5 and GPT-4 as their reasoning engines. You only need to give the AI a goal; it breaks the goal into subtasks, calls external tools to carry them out, then evaluates the results and reports on completion. I have used AutoGPT myself to research several hot topics, and the results were pretty good, though these are all very early experiments. Still, this points to the next important direction for large language models: serving as the center of intelligence that drives other software and tools to complete goals and tasks automatically, becoming an intelligent assistant for humans.
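The goal, subtasks, tools, evaluation, report cycle described above can be sketched in a few lines of Python. This is a toy illustration of the control flow only, not AutoGPT's actual code or API: every name here (`run_agent`, `StepResult`, `toy_plan`, `toy_tools`, `toy_evaluate`) is hypothetical, and in a real agent a language model would stand behind both the planner and the evaluator.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class StepResult:
    subtask: str
    tool: str
    output: str
    ok: bool


def run_agent(goal: str,
              plan: Callable[[str], List[Tuple[str, str]]],
              tools: Dict[str, Callable[[str], str]],
              evaluate: Callable[[str, str], bool]) -> List[StepResult]:
    """Decompose the goal, run each subtask with its tool, and judge the result."""
    results = []
    for tool_name, subtask in plan(goal):
        tool = tools.get(tool_name)
        if tool is None:
            results.append(StepResult(subtask, tool_name, "unknown tool", False))
            continue
        output = tool(subtask)
        results.append(StepResult(subtask, tool_name, output, evaluate(subtask, output)))
    return results


def report(goal: str, results: List[StepResult]) -> str:
    """Summarize how many subtasks succeeded and what each one produced."""
    done = sum(r.ok for r in results)
    lines = [f"Goal: {goal} ({done}/{len(results)} subtasks succeeded)"]
    for r in results:
        status = "ok" if r.ok else "failed"
        lines.append(f"- [{status}] {r.subtask} via {r.tool}: {r.output}")
    return "\n".join(lines)


# Hypothetical stand-ins; a real agent would call a language model and real tools.
def toy_plan(goal: str) -> List[Tuple[str, str]]:
    return [("search", f"find sources about {goal}"),
            ("summarize", f"summarize findings on {goal}")]


toy_tools = {
    "search": lambda task: f"3 sources for: {task}",
    "summarize": lambda task: f"summary of: {task}",
}


def toy_evaluate(subtask: str, output: str) -> bool:
    return bool(output.strip())


if __name__ == "__main__":
    steps = run_agent("AI agents", toy_plan, toy_tools, toy_evaluate)
    print(report("AI agents", steps))
```

Keeping the planner, the tools, and the evaluator as separate callables mirrors the division of labor in the text: the model reasons and plans, while the surrounding software carries out the actions.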

Perhaps the concept of a fully automatic assistant like AutoGPT is still too far ahead, and the Copilot ("co-pilot") that Microsoft is integrating into Office will be a more mature assistant for office scenarios. This approach has already succeeded with GitHub Copilot, which uses the code-writing ability of OpenAI's GPT models to help engineers finish coding work quickly. Today, the biggest friction and cost in human-computer collaboration is learning how to use the software. In the age of intelligence driven by large language models, that relationship becomes one between a human producer and an AI "copilot".

In this era, we need to keep our enthusiasm for creating, which is the biggest difference in motivation between humans and AI. We need to supply ideas and creativity, with the ability to abstract from observation and to define things concretely. We need to be good at narrating and guiding, and at making the key decisions among the options AI gives us. Most important of all, we must learn to deeply understand how AI systems work and where their boundaries lie.

Humans set goals for AI, and AI drives tools. Hoffman leads us into the future through his conversation with GPT-4. There, AI is no longer a threat but a partner, one that can help us realize our full potential as humans.

GPT-4 translates, humans supervise

Thanks to Zhanlu for inviting me to try this new approach to translation: we decided to use GPT-4 to translate a book about GPT-4. Let me share my method. First, I called the GPT-4 API to translate the original English manuscript into Chinese; then, through a prompt, I had GPT-4 rewrite the translation in a science-and-technology writing style, which brings the text close to publication quality. The initial translation stage requires manual checking of the accuracy of words and sentences. After all, the GPT-4 model's knowledge stops at the end of 2021, and its Chinese renderings of many English terms have not kept up with the times.
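The two-pass workflow above can be sketched as follows. This is a guess at the shape of the pipeline, not the code actually used for the book: the prompt wording is invented, and `complete` is a hypothetical stand-in for whatever function sends a prompt to the GPT-4 API and returns the model's reply.

```python
from typing import Callable, Dict, List

# Illustrative prompts; the prompts actually used for the book are not given in the text.
TRANSLATE_PROMPT = "Translate the following English text into Chinese:\n\n{text}"
REWRITE_PROMPT = (
    "Rewrite the following Chinese translation in a polished "
    "science-and-technology writing style, keeping the meaning unchanged:\n\n{text}"
)


def translate_chunk(chunk: str, complete: Callable[[str], str]) -> Dict[str, str]:
    """Pass 1 translates the chunk; pass 2 restyles the draft."""
    draft = complete(TRANSLATE_PROMPT.format(text=chunk))
    polished = complete(REWRITE_PROMPT.format(text=draft))
    # Keep the source and the draft so a human can check accuracy
    # against the original before accepting the polished version.
    return {"source": chunk, "draft": draft, "polished": polished}


def translate_manuscript(paragraphs: List[str],
                         complete: Callable[[str], str]) -> List[Dict[str, str]]:
    """Run the two-pass translation over every non-empty paragraph."""
    return [translate_chunk(p, complete) for p in paragraphs if p.strip()]


if __name__ == "__main__":
    # A fake model that wraps the last line of the prompt in brackets,
    # so the pipeline can be run without any API access.
    fake_complete = lambda prompt: "[" + prompt.splitlines()[-1] + "]"
    for row in translate_manuscript(["Hello", "", "World"], fake_complete):
        print(row)
```

Passing the model in as a plain callable keeps the sketch independent of any particular client library and leaves an obvious place for the human review step between the draft and the final text.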

Co-authored by Hoffman and GPT-4, this is not just a book but a conversation that explores how AI, especially large language models like GPT-4, can enhance human capabilities in key areas such as education, business, and creativity. Finally, please allow me to end this translator's preface in Hoffman's dialogue style.

Lu Yi: Please explain "Impromptu: Amplifying Our Humanity Through AI"

GPT-4: Impromptu is a concept or initiative to enhance our human qualities through AI. This initiative aims to advance the development of AI technologies so that they can better assist us in our daily lives, while emphasizing and enhancing our human strengths and values.

On the one hand, AI is an extension of ourselves, helping us expand our knowledge, design new tools, and solve increasingly complex problems. The AI we create is designed to increase productivity, optimize resource utilization and meet changing demands.

On the other hand, Impromptu also emphasizes that we should preserve core human traits such as creativity, compassion, intuition, and moral judgment. Impromptu’s goal is to use AI to augment these qualities, not replace them, so we can confidently expand human potential in this digital world.

In conclusion, Impromptu: Amplifying Our Humanity Through AI highlights the value of AI technologies, arguing that they should be used as a means to amplify our humanity and find a better direction in our daily lives. It also shows that we should focus on the opportunities presented by AI technology and how we can treat each other better, as well as the society and environment around us.

—

As an AI optimist, I hope that humanity's research on AI serves to enhance human intelligence, human potential, and the human sense of purpose. Peace

Finished in Vancouver on May 6, 2023

Impromptu hit the Wall Street Journal bestseller list in March 2023! The e-book can be downloaded for free: https://www.impromptubook.com

The Simplified Chinese edition was introduced and published by Zhanlu Culture under the Chinese title "Humanity Takes Off Again in the GPT Era". Reid Hoffman co-authored the book with GPT-4 through dialogue, making it a popular-science book on GPT-4 that anyone can read with ease. Hoffman's guided questioning technique is well worth learning from! The book shows not only how large language models, represented by GPT-4, will upend fields and industries such as education, business, and creativity, but also how they can help us amplify our human qualities and release our full potential. It also analyzes the coming challenges in depth, helping us find real ways to respond to and resolve them.

The future is here, and this is a must-read for everyone living in the age of intelligence. It is a reliable guide and action roadmap for starting on the path of symbiosis and co-evolution with AI.

Humanity Takes Off Again in the GPT Era: covers of the Chinese and English editions (click the link to buy the Chinese paper edition)
