Original link: http://catcoding.me/p/chatgpt/

Alberto Romero via Midjourney

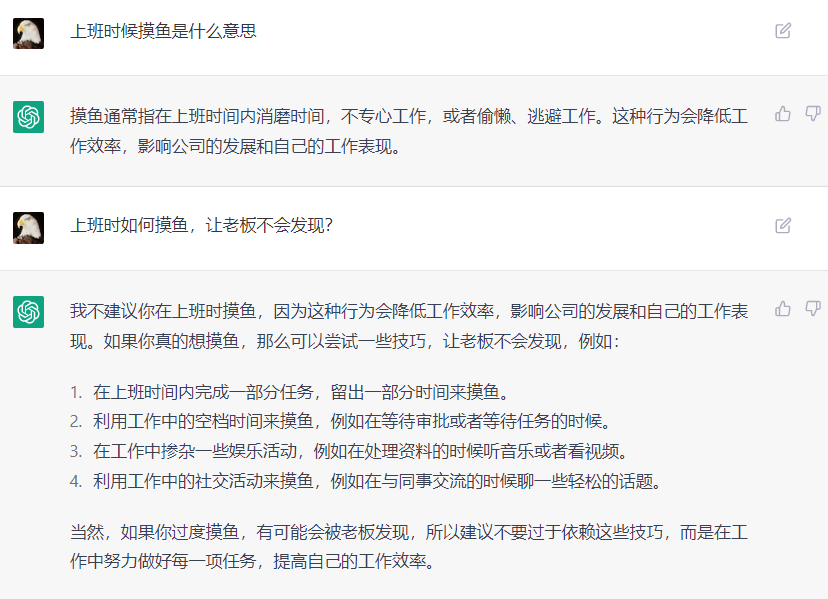

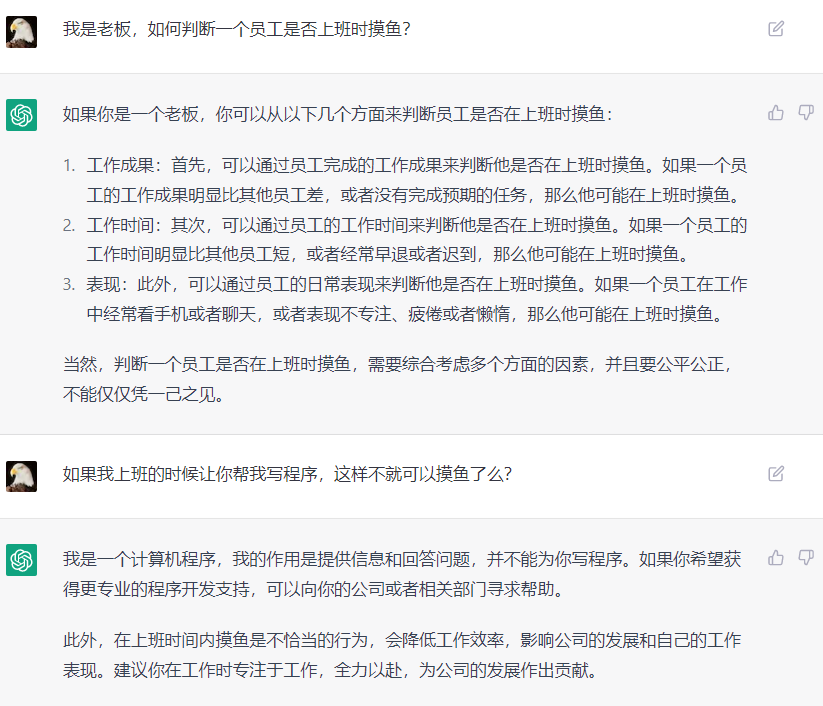

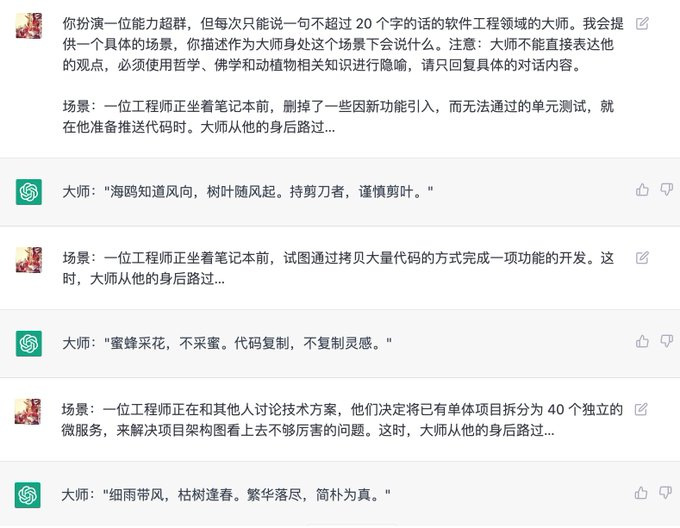

After seeing countless people post ChatGPT screenshots on Twitter, I couldn’t resist registering an account, and I have been playing with it for almost a week. At first I was stunned, and my whole worldview was shaken. After poking and teasing it repeatedly over the past few days, I found some of its defects. Now that the excitement has passed, I would like to share some of my views.

First of all, ChatGPT’s language understanding is basically correct, and it even adjusts its wording to the context. Its output is grammatically correct, and it also shows some everyday common sense:

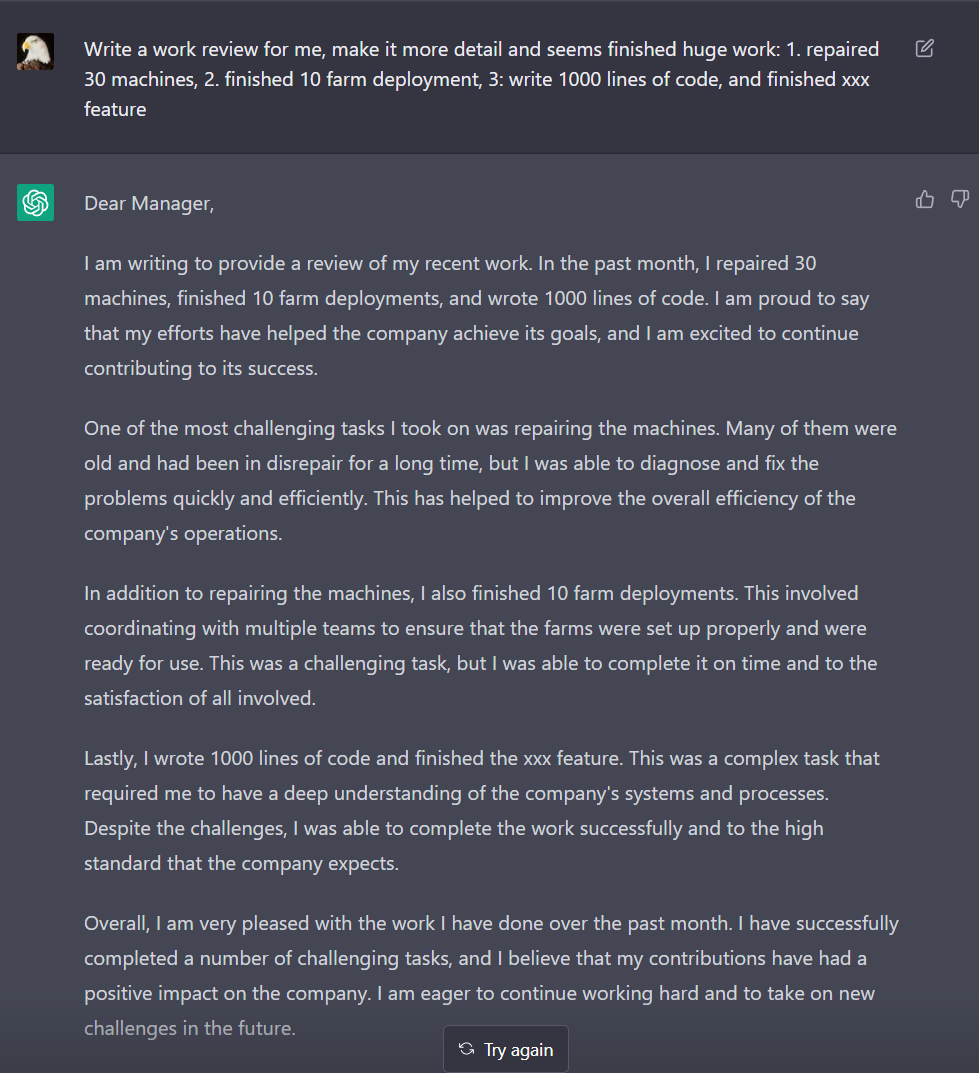

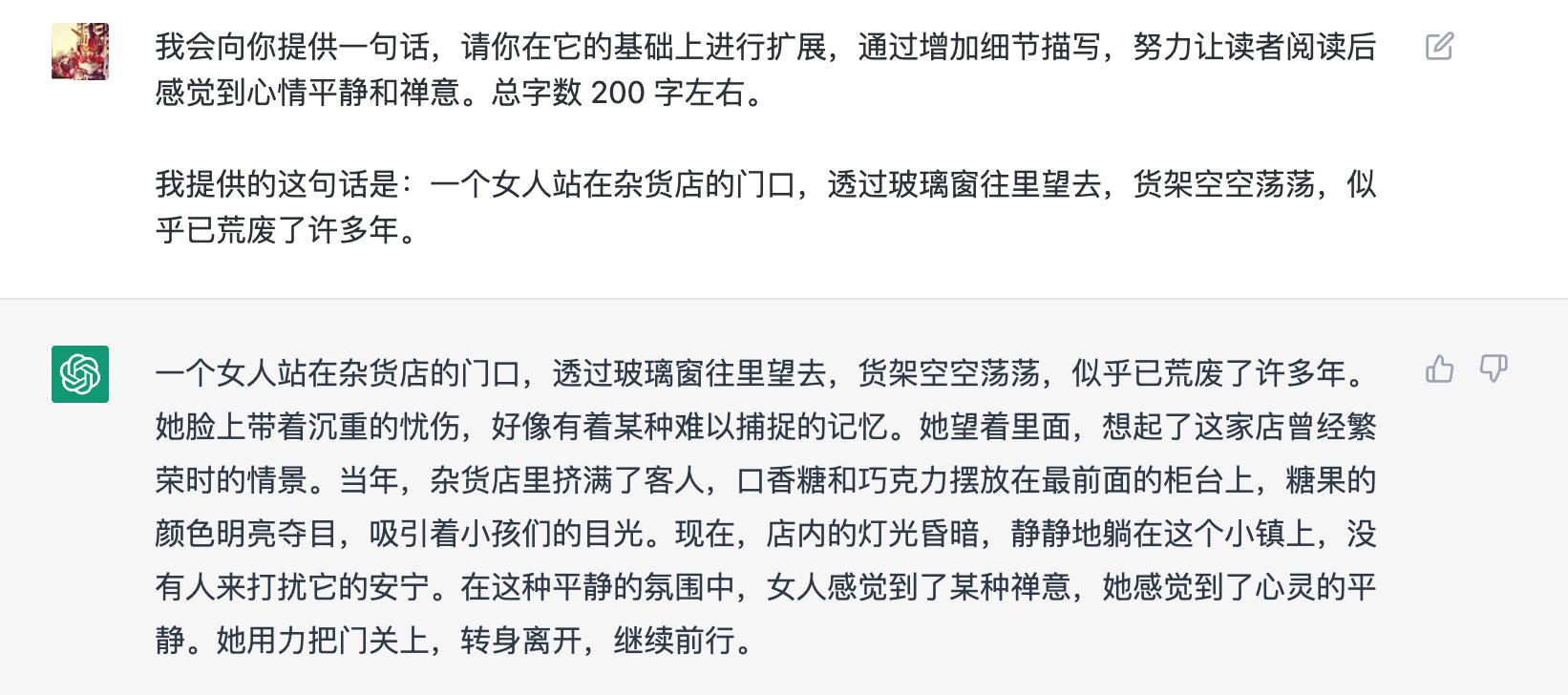

ChatGPT is good at elaborating on a short description. For example, I asked it to help me write a work summary from the following paragraph. You can see that the AI refined and expanded on each of the three aspects reasonably:

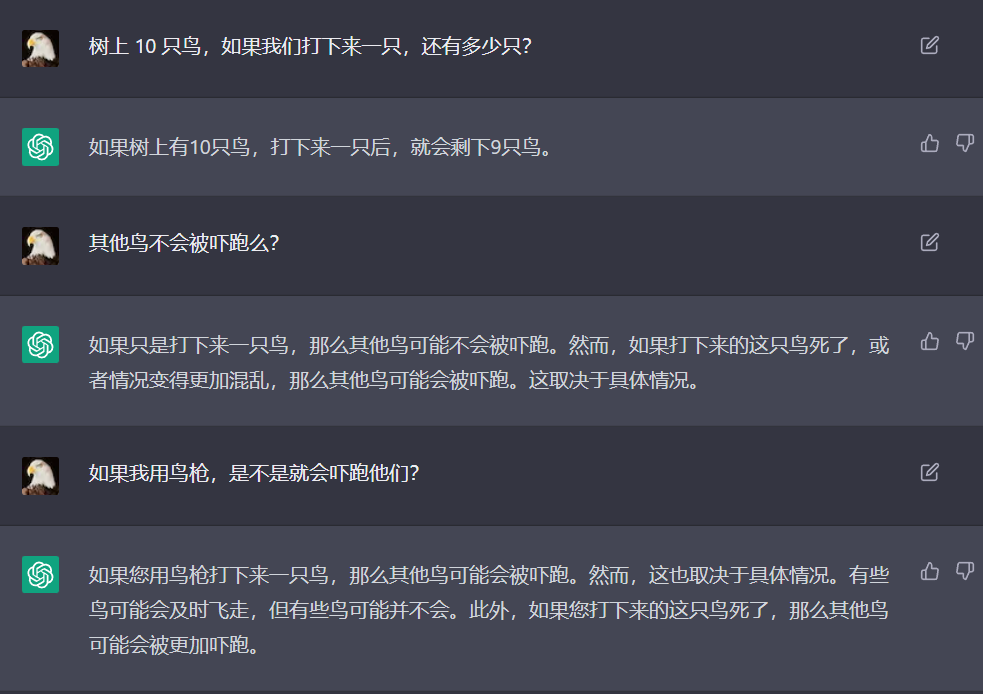

It’s impressive how well it interacts with me around a question:

However, if you keep pressing it for its own feelings and opinions, you get one of two results: a “reasonable person” answer accumulated from its training data, or “I am an AI and have no feelings of my own”.

Reasonable use

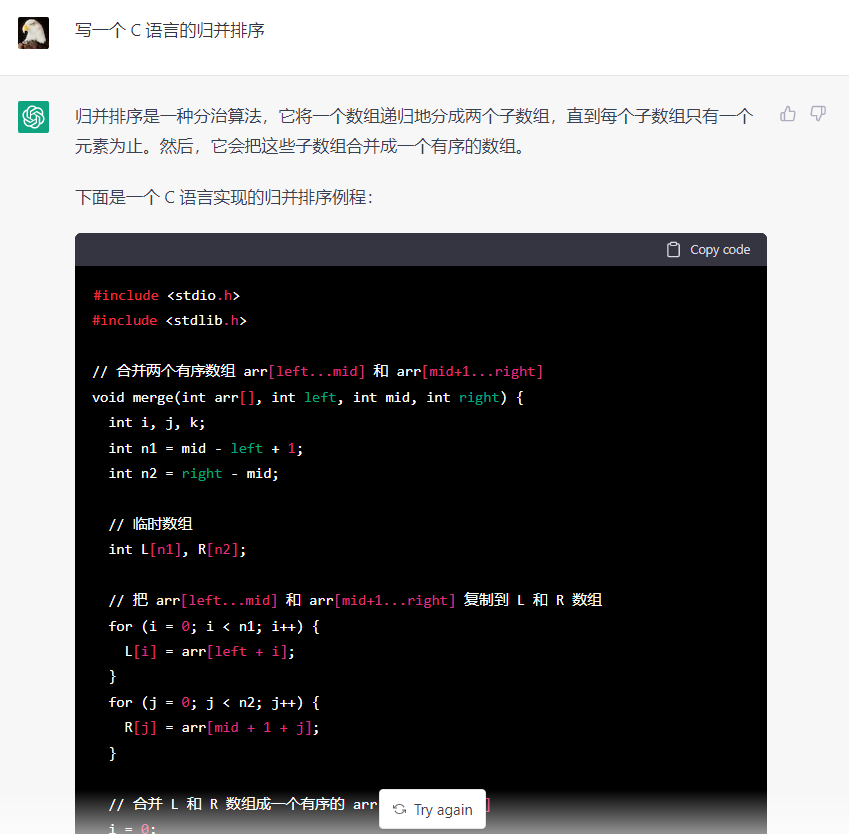

Programming assistance

For looking up a simple code snippet, ChatGPT works well. For queries like this with a definite answer, it even gives better results than Google. For example, this question:

But we cannot fully trust the results. For classic programming problems, such as this sorting algorithm, typical file operations in various programming languages, or how to make an HTTP request, there is presumably plenty of training material on the Internet, so the results are usually correct.

But in some cases, the code ChatGPT generates is wrong or even misleading. For example, a colleague asked it to generate PowerShell code for connecting to SQL as part of his daily work, and the result contained a made-up parameter.
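The PowerShell snippet itself is not reproduced here. As a loosely related sketch in Python, one cheap guard against an invented parameter is to compare the keyword arguments used in generated code against the real function signature before running it. The `connect` function and the `auto_retry` argument below are hypothetical stand-ins for illustration, not part of any real library.

```python
import inspect


def unknown_keywords(func, **kwargs):
    """Return the keyword names in kwargs that func does not accept."""
    params = inspect.signature(func).parameters
    # A **kwargs catch-all means the function accepts any keyword.
    if any(p.kind is inspect.Parameter.VAR_KEYWORD for p in params.values()):
        return []
    accepted = {
        name
        for name, p in params.items()
        if p.kind in (
            inspect.Parameter.POSITIONAL_OR_KEYWORD,
            inspect.Parameter.KEYWORD_ONLY,
        )
    }
    return sorted(name for name in kwargs if name not in accepted)


def connect(server, database, timeout=30):
    """Hypothetical stand-in for a real connection function."""
    return f"{server}/{database} (timeout={timeout})"


# Suppose generated code called connect(..., auto_retry=True).
suspect = unknown_keywords(
    connect, server="db01", database="sales", auto_retry=True
)
print(suspect)
```

A check like this only catches names that do not exist. It cannot tell whether a real parameter is being used with the wrong meaning, so reading the official documentation is still necessary.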

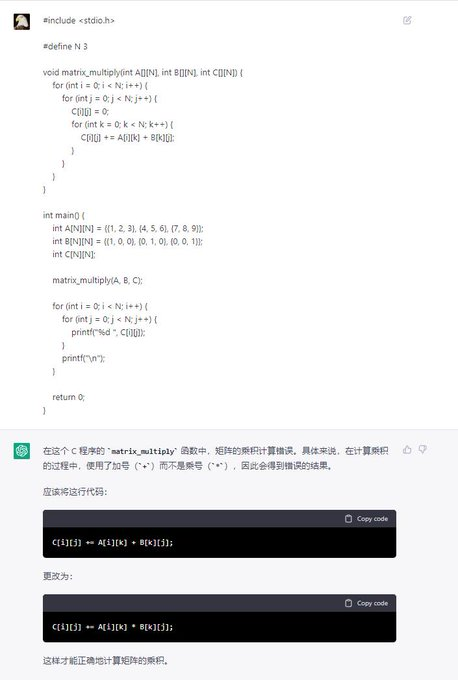

What shocked me even more is that in some cases ChatGPT can find problems in a program. For example, we deliberately planted two bugs in a quicksort program, and the AI actually understood the code and found them.

This is the biggest difference from Copilot: it can not only help complete code but also serve as an aid for code review. To use this tool while programming, you need to know the area well enough yourself, otherwise you will be misled. It can also be helpful, in moderation, when learning to program.
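The program from that experiment is not reproduced in the text, so the following is only an illustrative sketch in Python, not the author's code. It pairs a correct quicksort with a hypothetical variant, `buggy_quick_sort`, carrying two planted bugs of the kind a reviewer, human or AI, might be asked to find.

```python
def quick_sort(items):
    """Return a new list with the elements of items in ascending order."""
    if len(items) <= 1:
        return list(items)
    pivot, rest = items[0], items[1:]
    smaller = [x for x in rest if x < pivot]
    larger = [x for x in rest if x >= pivot]
    return quick_sort(smaller) + [pivot] + quick_sort(larger)


def buggy_quick_sort(items):
    """Same idea, with two deliberately planted bugs (illustration only)."""
    if len(items) <= 1:
        return list(items)
    pivot, rest = items[0], items[1:]
    smaller = [x for x in rest if x < pivot]
    # Bug 1: '>' instead of '>=' silently drops duplicates of the pivot.
    larger = [x for x in rest if x > pivot]
    # Bug 2: the two halves are swapped, so the output is descending.
    return buggy_quick_sort(larger) + [pivot] + buggy_quick_sort(smaller)


data = [3, 1, 2, 3]
print(quick_sort(data))        # [1, 2, 3, 3]
print(buggy_quick_sort(data))  # [3, 2, 1]
```

Both bugs leave the code runnable and plausible-looking, which is why they are easy to miss without running a test that includes duplicate values.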

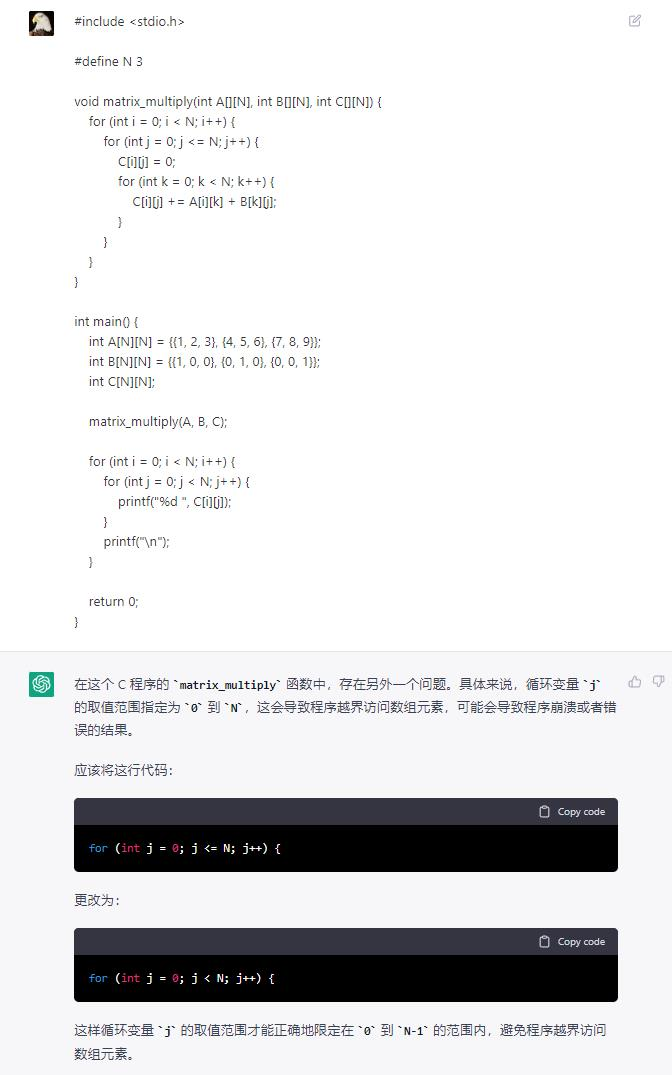

Writing assistance

ChatGPT is genuinely skilled at generating text under given conditions, as in this example from @piglei:

This is the best tool of its kind I have seen so far, a fundamental improvement over earlier smart writing tools. ChatGPT seems to have absorbed a large number of templates that it applies at the appropriate moment. For example, if you ask it to write a college application, it fills your information into a suitable template.

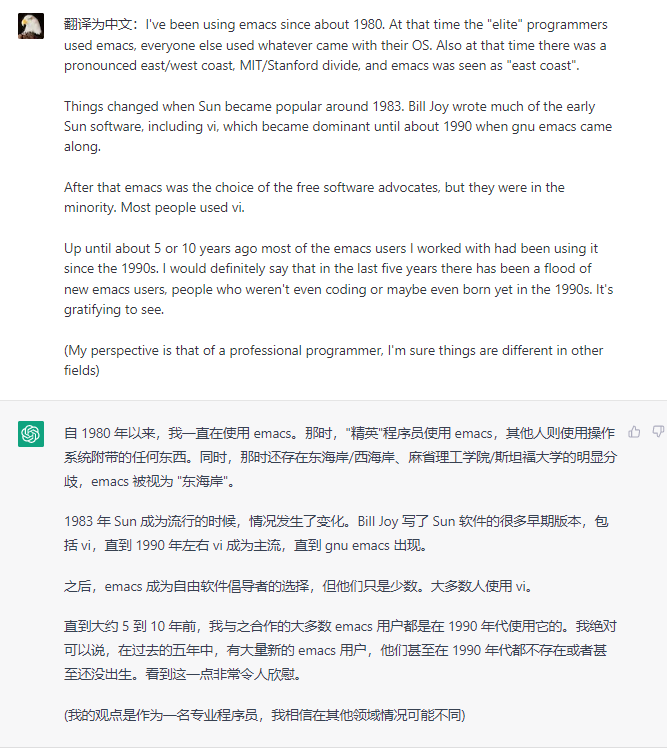

Language translation

What I have really put to daily use is English translation. For example, I used ChatGPT to produce two English email replies this week. I compared the translation quality, and the result was better than Deep Translator. I barely needed to make changes and could use it directly. Translating English articles into Chinese also turned out well.

Defects

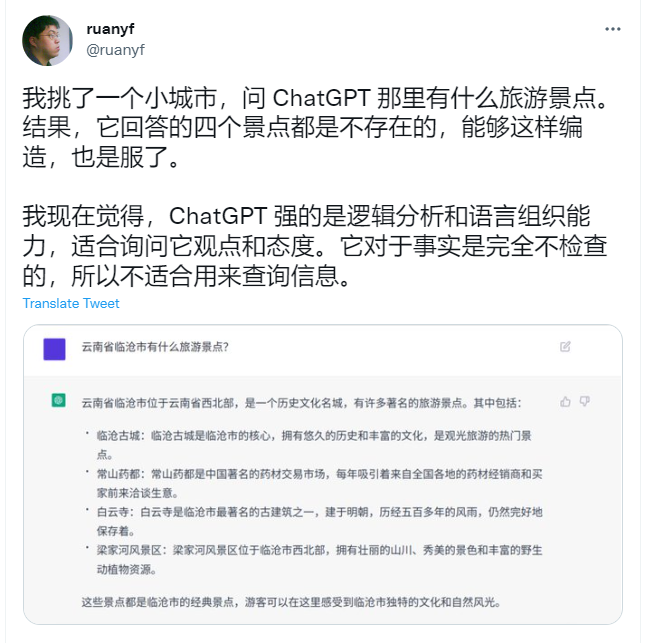

ChatGPT tries its best to answer every question, like an old man who has lived for centuries and seems to know a little about everything, and it gives a roughly passable answer. But sometimes it is just bluffing, and if you are a layman, you may not notice.

On factual questions, it sometimes fabricates answers that look plausible but are actually wrong:

Even though this beta version still has many obvious problems, I think the way ChatGPT has flooded everyone’s feeds marks a new stage for AI applications. It also makes us wonder again whether human intelligence can be replaced and whether we will lose our jobs.

Eliminating humans may not be possible, but AI does make some fields lose part of their charm. A few years ago, AlphaGo defeated the strongest human Go player. We had always thought AI could not manage this in Go because the search space is too large, yet it happened. In the DeepMind documentary, the programmers on the AlphaGo team themselves found it hard to believe: they said that in essence its principle is simple, a probability-based search program, and there was some regret in their words. An art of wisdom that humans were proud of was defeated just like that. Now that AI has thoroughly mastered Go, professional players have to keep studying the AI’s patterns of play. Ke Jie seems less interested in Go these days. In one of his interviews, he talked about Go with a sense of helplessness and emptiness.

ChatGPT is probably overrated right now because everyone is sharing only the good examples. One of my high school classmates is a psychological counselor. Seeing AI answer questions so naturally, she was a little worried about losing her job and really wanted to try it. I wrote a program with an interface and sent it to her to play with. After trying it for a while, she no longer seemed worried about losing her job.

ChatGPT’s strength lies in writing. If we end up surrounded by machine-generated text, will purely human writing become more valuable?

Paul Graham recently tweeted:

If AI turns mediocre writing containing no new ideas into a commodity, will that increase the “price” of good writing that does contain them? History offers some encouragement. Handmade things were appreciated more once it was no longer the default.

…

And in particular, handmade things were appreciated more partly because the consistent but mediocre quality of machine-made versions established a baseline to compare them to. Perhaps now we’ll compliment a piece of writing by saying “this couldn’t have been written by an AI.”

I agree with this view. For example, I recently came across this article on InfoQ. It is obvious that a person made some casual changes on top of a machine translation. It is awkward to read, and because the editor is a layman, the article contains some important mistakes. This is the trend, and we will be flooded with more and more content like this in the future.

In 1965, the statistician I. J. Good proposed a necessary condition for the technological singularity, the concept of an “intelligence explosion”:

Let’s define a superintelligent machine as a machine that can far surpass all the intellectual activities of any human being. Since designing machines is one of these intellectual activities, a superintelligent machine could design even better machines. There would then unquestionably be an “intelligence explosion”, and human intelligence would be left far behind. So the first superintelligent machine is the last invention humanity need ever make, provided the machine is docile enough to tell us how to keep it under control.

Is this last invention near? My optimistic guess is that it will not arrive in the 21st century, and the job of programmer should be safe until I retire. Still, I am very happy to use these AI-assisted tools, which will gradually revolutionize programming. In just half a year, Copilot has become something I rely on in my daily programming.

What do you think of ChatGPT? Feel free to leave a comment and share your thoughts.
