I recently built a fun tool called xbin.io. Part of the work is building a Docker image for each supported tool and running them all in Docker (strictly speaking, in another sandbox system that is compatible with Docker images rather than in Docker itself). As more and more tools are supported, saving resources matters, so the images should be as small as possible and contain as few files as possible. Smaller images also start slightly faster and are a little more secure.

This article introduces some techniques for building Docker images.

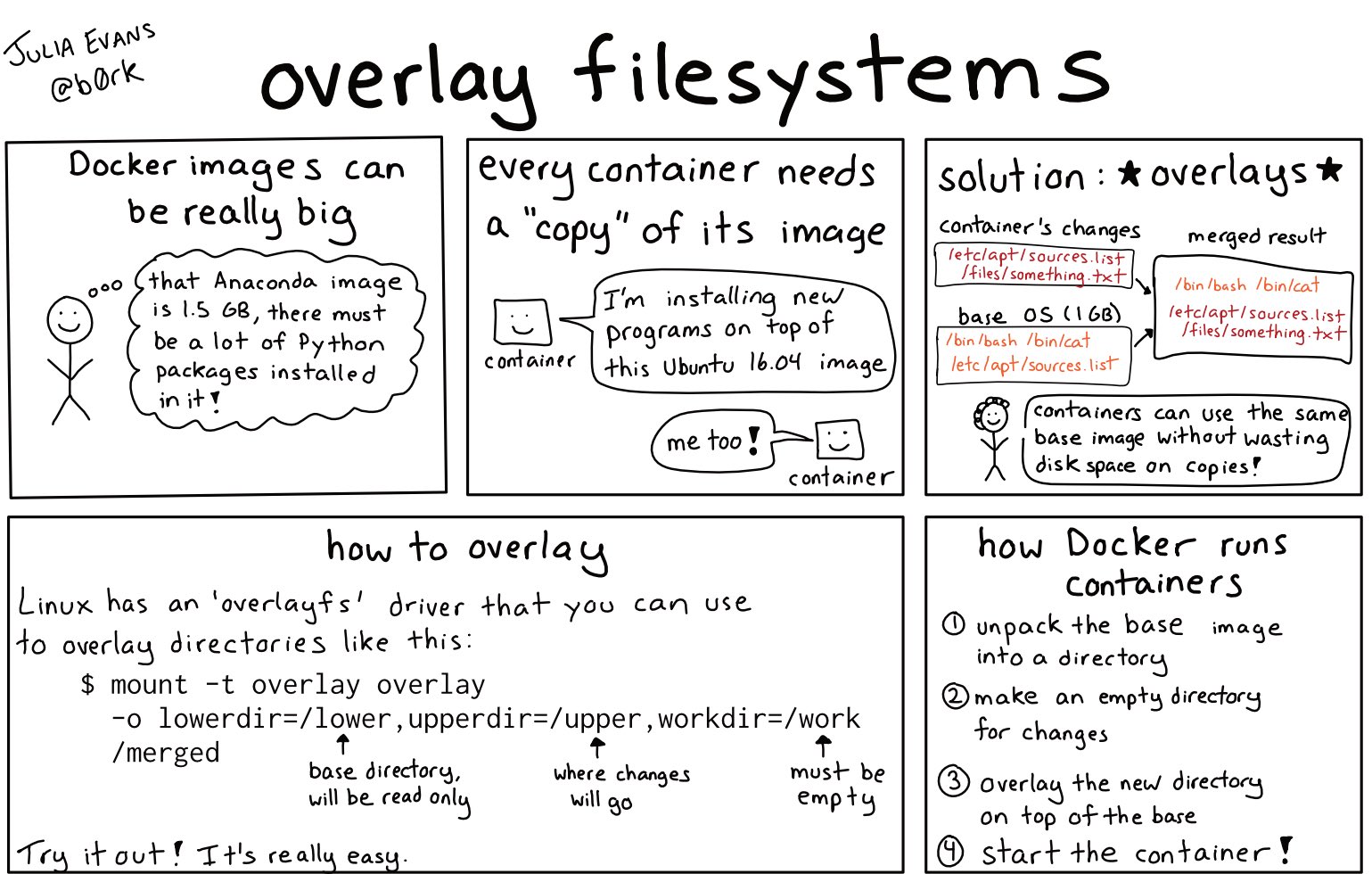

In a previous post, Docker (Containers) Principles, I explained how Docker images work. In short, Linux's overlayfs (overlay file system) merges two file systems into one: the lower file system is read-only and the upper one is writable. When you read a file, it is read from the upper layer if it exists there; otherwise it is read from the lower layer. Writes always go to the upper layer. To the end user, the merged result looks like a single file system, no different from an ordinary one.
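To make these read/write rules concrete, here is a toy Python model of a two-layer merge. It is only an illustration under simple assumptions: each layer is a dict from path to file contents, and the names `ToyOverlay` and `WHITEOUT` are made up for this sketch. Deletion is modelled with a whiteout marker, which is how overlayfs hides a file that lives in the read-only lower layer.

```python
from typing import Dict

# Toy model of overlayfs lookup rules. Each layer is a dict mapping a path to
# file contents; the real kernel implementation works on directories and inodes.

WHITEOUT = object()  # marker: "this file was deleted in the upper layer"


class ToyOverlay:
    def __init__(self, lower: Dict[str, str]):
        self.lower = dict(lower)  # read-only: never modified below
        self.upper: Dict[str, object] = {}  # every write lands here

    def read(self, path: str) -> str:
        # Check the upper layer first ...
        if path in self.upper:
            value = self.upper[path]
            if value is WHITEOUT:
                raise FileNotFoundError(path)
            return str(value)
        # ... and fall back to the lower layer.
        if path in self.lower:
            return self.lower[path]
        raise FileNotFoundError(path)

    def write(self, path: str, data: str) -> None:
        # Writes go to the upper layer; the lower copy stays untouched.
        self.upper[path] = data

    def delete(self, path: str) -> None:
        if path in self.lower:
            # A lower-layer file can't be removed, only hidden by a whiteout.
            self.upper[path] = WHITEOUT
        else:
            self.upper.pop(path, None)


fs = ToyOverlay({"/etc/motd": "from lower"})
fs.write("/etc/motd", "from upper")
print(fs.read("/etc/motd"))   # from upper
print(fs.lower["/etc/motd"])  # from lower
```

Note that `delete` never shrinks the lower layer: the file is merely hidden, a detail that matters for Tip 1 below.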

Building on this, when Docker starts a container it begins with the lowest file system, merges it with the next layer to get a new file system, merges that with the layer above, and so on until it has the final directory tree. It then uses chroot to make that directory the root directory of the process and starts the container.
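The same idea extends to a whole stack of layers. Here is a toy sketch of that bottom-up merge, assuming each layer is a dict from path to contents with `None` standing in for a deleted (whited-out) file; the helper names `merge_two` and `rootfs` are hypothetical:

```python
from functools import reduce
from typing import Dict, List, Optional

# Each layer maps path -> contents; None marks a file deleted in that layer.
Layer = Dict[str, Optional[str]]


def merge_two(lower: Dict[str, str], upper: Layer) -> Dict[str, str]:
    """Overlay `upper` on `lower`: upper entries win, None hides a file."""
    merged = dict(lower)
    for path, contents in upper.items():
        if contents is None:
            merged.pop(path, None)  # whiteout: hide the lower file
        else:
            merged[path] = contents
    return merged


def rootfs(layers: List[Layer]) -> Dict[str, str]:
    """Merge layers bottom-up (base layer first) into the final view."""
    return reduce(merge_two, layers, {})


layers = [
    {"/bin/sh": "busybox", "/etc/motd": "hello"},  # base layer
    {"/app/main.py": "v1"},
    {"/etc/motd": None, "/app/main.py": "v2"},     # top layer
]
print(rootfs(layers))  # {'/bin/sh': 'busybox', '/app/main.py': 'v2'}
```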

Once you understand this mechanism, you can see why the design suits Docker so well:

- If two images are both based on Ubuntu, they can share the Ubuntu base image, and only one copy needs to be stored;

- When you pull a new image and one of its layers already exists locally, that layer and everything below it do not need to be pulled again.
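A toy sketch of this sharing: if every layer is identified by a digest of its content, a pull only has to fetch the digests the local store does not already have. This assumes raw bytes stand in for layer contents (real registries address the compressed layer blobs by digest), and the helper names are hypothetical:

```python
import hashlib
from typing import Iterable, List, Set


def digest(layer: bytes) -> str:
    # Identify a layer by the SHA-256 of its content.
    return "sha256:" + hashlib.sha256(layer).hexdigest()


def layers_to_pull(image_layers: Iterable[bytes], local: Set[str]) -> List[str]:
    # Only layers whose digest is missing from the local store are downloaded.
    return [d for d in map(digest, image_layers) if d not in local]


ubuntu_base = b"ubuntu base layer"
app_a, app_b = b"app A files", b"app B files"

# Image A (ubuntu + app A) is already on disk; the ubuntu layer is stored once.
local_store = {digest(ubuntu_base), digest(app_a)}
missing = layers_to_pull([ubuntu_base, app_b], local_store)
print(len(missing))  # 1: only app B's layer has to be pulled
```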

The image-building techniques below all build on these two points.

As an aside, a Docker image is really just a tar archive. We usually build images from a Dockerfile with the `docker build` command, but other tools can build them too: any image that conforms to the Docker image specification can be run. For example, the image in my earlier post Build a minimal Redis Docker Image was built with Nix.
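You can check this yourself: `docker save` writes an image to a tar file whose top-level `manifest.json` lists the image's layers. Here is a small sketch that reads that list with Python's standard `tarfile` module, assuming the archive was produced by `docker save` (the function name `image_layers` is made up for illustration):

```python
import json
import tarfile
from typing import List


def image_layers(tar_path: str) -> List[str]:
    """Return the layer paths listed in a `docker save` archive.

    Assumes the archive has a top-level manifest.json: a JSON list with one
    entry per image, each holding a "Layers" list.
    """
    with tarfile.open(tar_path) as tar:  # also handles gzip-compressed tars
        member = tar.extractfile("manifest.json")
        if member is None:
            raise ValueError("manifest.json is not a regular file")
        manifest = json.load(member)
    return [layer for image in manifest for layer in image["Layers"]]
```

For example, after `docker save ubuntu:latest -o ubuntu.tar`, calling `image_layers("ubuntu.tar")` should return the layer entries in order, base layer first.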

Tip 1: Delete the cache

Most package managers, such as `apt` and `pip`, keep a cache of the packages they download, so the next time you install the same package it can be taken straight from the cache instead of being downloaded from the network again.

In a Docker image, however, we don't need these caches, so in a Dockerfile we usually install packages with a command like this:

```dockerfile
RUN dnf install -y --setopt=tsflags=nodocs \
        httpd vim && \
    systemctl enable httpd && \
    dnf clean all
```

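The cache is deleted right after the packages are installed, within the same `RUN` instruction. (`--setopt=tsflags=nodocs` additionally tells `dnf` not to install documentation.)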

A common mistake is to write:

```dockerfile
FROM fedora
RUN dnf install -y mariadb
RUN dnf install -y wordpress
RUN dnf clean all
```

Each `RUN` in a Dockerfile creates a new layer, so this creates three layers: the first two contain the cache and the third deletes it. It is just like git: if you delete a file in a new commit, the file is still in the git history. Likewise, the final Docker image is not any smaller.
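A toy calculation makes the difference visible. Assuming made-up file sizes in MB, and treating a deletion as a whiteout entry that takes no space, the hypothetical helpers below compare what the layers store with what the container actually sees, for the three-`RUN` version and for a single `RUN` that cleans up after itself:

```python
from typing import Dict, List, Optional

# Each layer maps path -> size in MB; None is a whiteout (a deletion).
Layer = Dict[str, Optional[int]]


def image_size(layers: List[Layer]) -> int:
    """Total size stored across all layers (whiteouts take no space)."""
    return sum(size or 0 for layer in layers for size in layer.values())


def visible_size(layers: List[Layer]) -> int:
    """Size of the merged file system the container actually sees."""
    merged: Dict[str, int] = {}
    for layer in layers:
        for path, size in layer.items():
            if size is None:
                merged.pop(path, None)
            else:
                merged[path] = size
    return sum(merged.values())


# Three RUNs: the cache is added in layers 1 and 2, deleted in layer 3.
separate = [
    {"/usr/bin/mysqld": 50, "/var/cache/dnf/mariadb.rpm": 30},
    {"/usr/share/wordpress": 40, "/var/cache/dnf/wordpress.rpm": 20},
    {"/var/cache/dnf/mariadb.rpm": None, "/var/cache/dnf/wordpress.rpm": None},
]
# One RUN that installs and cleans up: the cache never reaches a layer.
single = [{"/usr/bin/mysqld": 50, "/usr/share/wordpress": 40}]

print(image_size(separate), visible_size(separate))  # 140 90
print(image_size(single), visible_size(single))      # 90 90
```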

Docker also has a newer, experimental feature, `docker build --squash`. After the build completes, it squashes the layers created by the build into a single new layer, so putting the clean command in its own `RUN` as above would then work too. I don't like this approach very much, because it gives up the advantages described earlier: the squashed layer can no longer be shared with other images, and a pull can no longer reuse the layers that already exist locally.

Here is how to clear the cache for some common package managers:

| Package manager | Command to clear the cache |
| --- | --- |
| yum | `yum clean all` |
| dnf | `dnf clean all` |
| rvm | `rvm cleanup all` |
| gem | `gem cleanup` |
| cpan | `rm -rf ~/.cpan/build/* ~/.cpan/sources/*` |
| pip | `rm -rf ~/.cache/pip/*` |
| apt-get | `apt-get clean` |

The single-`RUN` install command at the start of this tip also has a drawback. With several commands packed into one `RUN`, it is hard to see at a glance what `dnf` installs. Moreover, the first and last lines are formatted differently from the lines in between, so adding or removing a command at either end changes two lines in the diff, which is unfriendly and error-prone.

Writing it in the following form is clearer, because every command line then has the same shape:

```dockerfile
RUN true \
    && dnf install -y --setopt=tsflags=nodocs \
        httpd vim \
    && systemctl enable httpd \
    && dnf clean all \
    && true
```

Tip 2: Put rarely changing content first

From the principles above, we know that if a Docker image has four layers, A, B, C and D, and B is modified, then B, C and D all change and must be rebuilt.
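Docker's build cache behaves along the same lines: roughly, a step can reuse its cached layer only if the layer below it was reused and the step itself is unchanged (for `COPY` and `ADD`, that includes the checksums of the copied files). Here is a toy sketch of why changing B forces C and D to be rebuilt, chaining each step's hypothetical cache key to its parent's key; the steps are plain strings, not real Dockerfile instructions:

```python
import hashlib
from typing import List


def layer_keys(steps: List[str]) -> List[str]:
    """Give each step a key derived from its own content *and* its parent's key.

    Because the parent key is part of the hash, changing one step changes the
    key of every step after it.
    """
    keys: List[str] = []
    parent = ""
    for step in steps:
        parent = hashlib.sha256((parent + "\n" + step).encode()).hexdigest()[:12]
        keys.append(parent)
    return keys


def rebuilt_from(old: List[str], new: List[str]) -> int:
    """Index of the first step whose cached layer can no longer be reused."""
    for i, (a, b) in enumerate(zip(old, new)):
        if a != b:
            return i
    return min(len(old), len(new))


before = layer_keys(["A", "B", "C", "D"])
after = layer_keys(["A", "B (modified)", "C", "D"])
print([x == y for x, y in zip(before, after)])  # [True, False, False, False]
print(rebuilt_from(before, after))  # 1
```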

Following this principle, put the system dependencies first when building, because what you install with `apt` or `dnf` rarely changes. Next come the application's library dependencies, such as `pip install`, and the application itself is copied in last.

For example, with the following Dockerfile, most layers are rebuilt every time the code changes, even if all you changed is the title of one web page.

```dockerfile
FROM python:3.7-buster

# copy source
RUN mkdir -p /opt/app
COPY myapp /opt/app/myapp/
WORKDIR /opt/app

# install dependencies (nginx, then Python packages);
# -y is needed because the build cannot answer apt's prompt
RUN apt-get update && apt-get install -y nginx
RUN pip install -r myapp/requirements.txt
RUN chown -R www-data:www-data /opt/app

# start server
EXPOSE 8020
STOPSIGNAL SIGTERM
CMD ["/opt/app/start-server.sh"]
```

We can reorder it: install Nginx first, then copy `requirements.txt` on its own and install the pip dependencies, and copy the application code last.

```dockerfile
FROM python:3.7-buster

# install dependencies: these layers stay cached while only the code changes
RUN apt-get update && apt-get install -y nginx
COPY myapp/requirements.txt /opt/app/myapp/requirements.txt
RUN pip install -r /opt/app/myapp/requirements.txt

# copy source
RUN mkdir -p /opt/app
COPY myapp /opt/app/myapp/
WORKDIR /opt/app
RUN chown -R www-data:www-data /opt/app

# start server
EXPOSE 8020
STOPSIGNAL SIGTERM
CMD ["/opt/app/start-server.sh"]
```

Tip 3: Separate the build image from the run image

Compiling an application needs plenty of build tools, such as gcc or the Go toolchain, but none of them are needed at runtime, and deleting them after the build is cumbersome.

Instead, we can use one image as the builder: install all the build dependencies in it and run the build there. Then start over from a fresh base image and copy only the build output into it, so the final image contains only what the program needs at runtime. Docker supports this directly with multi-stage builds.

For example, here is a Dockerfile that installs pup, a Go application:

```dockerfile
FROM golang as build
ENV CGO_ENABLED 0
RUN go install github.com/ericchiang/pup@latest

FROM alpine:3.15.4 as run
COPY --from=build /go/bin/pup /usr/local/bin/pup
```

The build runs in the golang image, which is about 1 GB, and once it finishes the binary is copied into alpine. The final image is only about 10 MB. This approach suits statically compiled languages such as Go and Rust especially well.

Tip 4: Check the build artifacts

This is one of the most useful techniques.

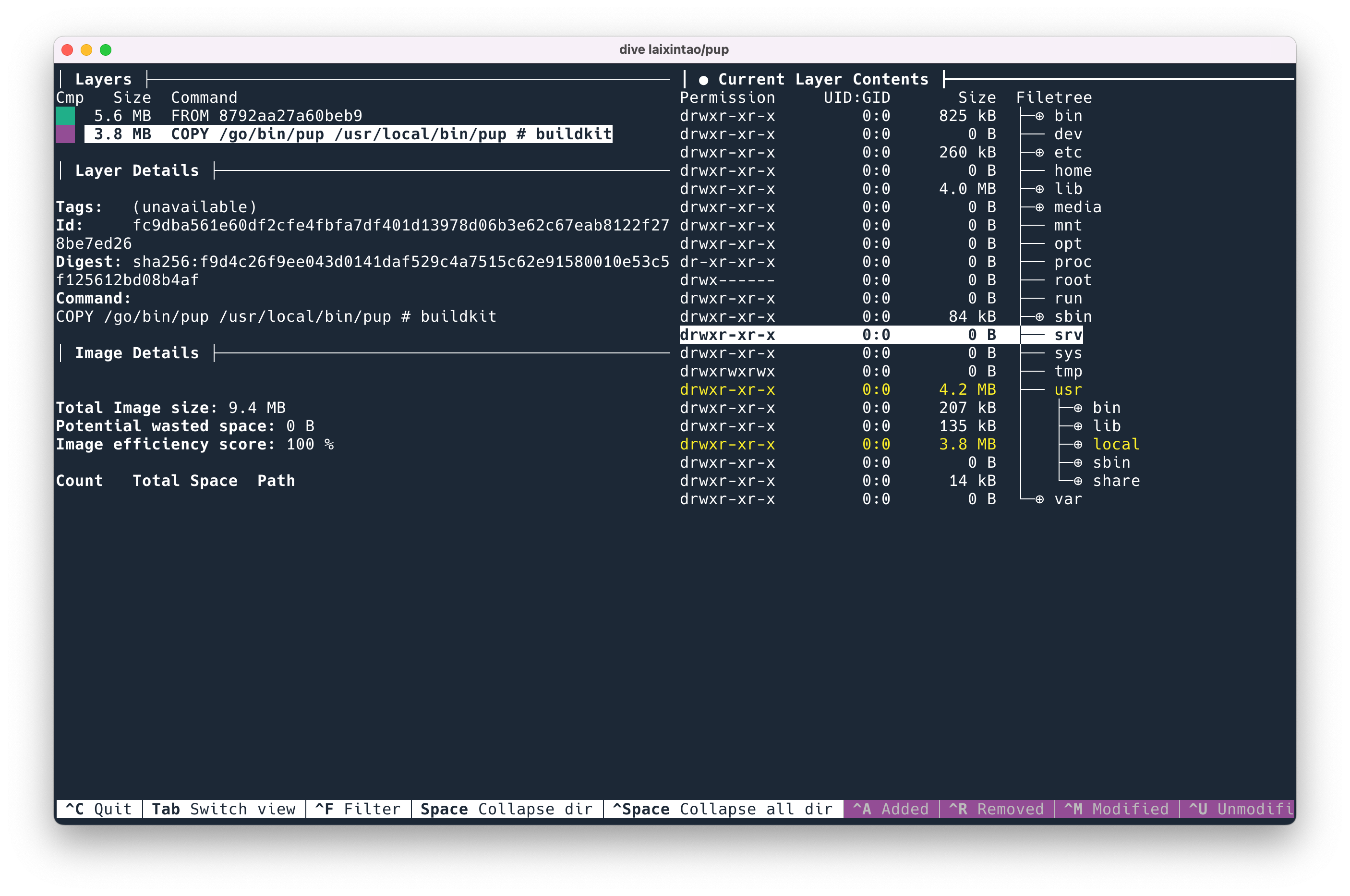

dive is a TUI (an interactive command-line app) that lets you see what is in each layer of a Docker image.

Running `dive ubuntu:latest` shows which files are in the ubuntu image. The screen is split in two: the left pane shows information about each layer, and the right pane shows the file tree as of the selected layer (including everything from the layers below it), with the files added in that layer highlighted in color. Press Tab to switch between the left and right panes.

A very useful feature: pressing Ctrl+U hides unmodified files, so the right pane shows only what the current layer changed compared with the layer below it, and you can check whether the new files are the ones you expected.
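Conceptually, this view compares the file tree of one layer with the one below it. Here is a rough Python sketch of such a comparison, assuming each snapshot is a dict from path to a content hash; the function name is hypothetical and this is not how dive itself is implemented:

```python
from typing import Dict, List

# Each snapshot maps path -> content hash (any comparable value works).
Snapshot = Dict[str, str]


def layer_changes(prev: Snapshot, cur: Snapshot) -> Dict[str, List[str]]:
    """Classify files as added, modified or removed between two layers."""
    added = sorted(cur.keys() - prev.keys())
    removed = sorted(prev.keys() - cur.keys())
    modified = sorted(p for p in cur.keys() & prev.keys() if cur[p] != prev[p])
    return {"added": added, "modified": modified, "removed": removed}


prev = {"/etc/hosts": "h1", "/tmp/build.log": "x"}
cur = {"/etc/hosts": "h2", "/app/bin": "b"}
print(layer_changes(prev, cur))
# {'added': ['/app/bin'], 'modified': ['/etc/hosts'], 'removed': ['/tmp/build.log']}
```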

Press Ctrl+Space to collapse all directories, then expand them one at a time as you explore, much like running ncdu on the image.


The post Some tips for building Docker images first appeared on Kawabanga!


This article is reprinted from: https://www.kawabangga.com/posts/4676

This site is for inclusion only, and the copyright belongs to the original author.