Original link: https://blog.cyfan.top/p/d3c51290.html

Hands-on: using ServiceWorker, a bit of front-end black magic, to speed up your website.

Most of the code in this article can be copied and pasted directly, but it may still conflict with your own setup. Please read the previous introductory article on how to make good use of ServiceWorker, and adapt the code to your site.

Let's start with a simple list of what we are going to accelerate:

- Static resources: split them out and accelerate them

- The main pages

- Response handling

- The first screen

Racing CDNs on the front end

In the most traditional setup, static resources, once split out from the page, are simply served from the same domain and the same server.

Later, to reduce the load on the main server, static resources were usually moved to other nodes that are faster for users. This both offloads the main server and speeds up page loading.

Today free public CDN services are flourishing. Their nodes are usually deployed worldwide and tuned for each country or region, so a public CDN generally offers far better quality and availability than anything you deploy yourself.

In a page load, the main site only serves the HTML (the page you are reading is about 15 KB), while the bulk of the traffic is static resources (JavaScript alone is about 800 KB on this page). So the first step in accelerating a website should be accelerating its static resources.

As for where to store the accelerated files, there are three mainstream options: cdnjs, npm, and gh (GitHub). Personally I favor npm, because it gives us more mirror nodes to race later on.

For choosing acceleration nodes, from a mainland China perspective, one general rule comes first: do not use cross-border nodes. China has the peculiar rule that no website may be hosted in the mainland without an ICP filing. As a result, much of the traffic that should be spread across edge nodes is instead squeezed through the aggregation layer at the international egress. If you send your users overseas to fetch resources through that crowd, neither stability nor speed can be guaranteed, and relying on it in production is tantamount to suicide.

Take jsDelivr (jsd) as an example. Once its ICP filing was revoked and its nodes moved out of China, any mainland-facing site should have dropped it long ago. It uses Fastly, which for China Unicom and China Telecom users is almost completely choked on NTT links at night; it is a miracle if a connection succeeds at all. Unless you truly do not care about speed, or even whether resources load at all, insisting on jsd in a mainland-facing production environment is foolish.

That said, I still favor ServiceWorker here. It can use Promise.any to send requests to several CDN nodes concurrently and take whichever answers first. Thanks to the browser's JS engine, the local processing is very efficient and the performance overhead is almost negligible.

First we define lfetch. It sends concurrent requests to every URL in the urls array and, as soon as any node returns a valid response, aborts the remaining requests to avoid wasting traffic.

    const lfetch = async (urls, url) => {
        // One AbortController per race, used to cancel the losing requests
        const controller = new AbortController();
        // Block until the body is fully downloaded, so that a later abort
        // cannot cut the winning response short
        const PauseProgress = async (res) => {
            return new Response(await res.arrayBuffer(), { status: res.status, headers: res.headers });
        };
        if (!Promise.any) { // Polyfill in case Promise.any is missing; safe to ignore
            Promise.any = function (promises) {
                return new Promise((resolve, reject) => {
                    promises = Array.isArray(promises) ? promises : [];
                    let len = promises.length;
                    let errs = [];
                    if (len === 0) return reject(new AggregateError([], 'All promises were rejected'));
                    promises.forEach((promise) => {
                        promise.then(value => {
                            resolve(value);
                        }, err => {
                            len--;
                            errs.push(err);
                            if (len === 0) {
                                reject(new AggregateError(errs));
                            }
                        });
                    });
                });
            };
        }
        return Promise.any(urls.map(mirror => { // Request all mirrors concurrently
            return new Promise((resolve, reject) => {
                fetch(mirror, { signal: controller.signal }) // Attach the abort signal
                    .then(PauseProgress) // Wait until the download has finished
                    .then(res => {
                        if (res.status == 200) {
                            // Abort the other requests (this one is aborted too,
                            // but it has already finished, so that has no effect)
                            controller.abort();
                            resolve(res);
                        } else {
                            reject(res);
                        }
                    })
                    .catch(reject); // Network errors and aborts must reject, or the race could hang
            });
        }));
    };

There is one subtlety here: fetch resolves with the response status, not with the content.

That is, when the promise returned by fetch resolves, the response headers have arrived but the body has not been downloaded yet. Aborting at that moment would make the winning response fail as well. This is what PauseProgress is for.
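The race can be tried without any network. The following is only a sketch under stated assumptions, not the lfetch used in this article: fakeMirror and raceMirrors are hypothetical names, and each "mirror" is just a timer that succeeds or fails after a delay and honors an AbortSignal.

```javascript
// Hypothetical stand-in for fetch: settles after `delay` ms unless aborted first.
const fakeMirror = (name, delay, ok, signal) =>
    new Promise((resolve, reject) => {
        const timer = setTimeout(
            () => (ok ? resolve(name) : reject(new Error(name + ' failed'))),
            delay
        );
        signal.addEventListener('abort', () => {
            clearTimeout(timer);
            reject(new Error(name + ' aborted'));
        });
    });

// Race all mirrors; the first success wins and the rest are aborted.
const raceMirrors = (mirrors) => {
    const controller = new AbortController();
    return Promise.any(
        mirrors.map((m) => fakeMirror(m.name, m.delay, m.ok, controller.signal))
    ).then((winner) => {
        controller.abort(); // The losers stop here; the winner has already settled
        return winner;
    });
};

const raceDemo = raceMirrors([
    { name: 'slow', delay: 80, ok: true },
    { name: 'broken', delay: 5, ok: false },
    { name: 'fast', delay: 20, ok: true },
]);
raceDemo.then((winner) => console.log('winner:', winner));
```

A failing mirror does not end the race, because Promise.any only rejects when every candidate has rejected.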

Next, consider static resource acceleration. This is where npm pays off: it has many mirrors, and their URL format is fairly uniform.
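Because the format is uniform, one URL can be turned into a list of equivalent mirror URLs by swapping its prefix. A minimal sketch, assuming a hypothetical helper named expandNpmMirrors and a shortened prefix list taken from the mirrors mentioned in this article:

```javascript
// Mirror prefixes for npm packages (a subset of the mirrors named in this article).
const NPM_PREFIXES = [
    'https://cdn.jsdelivr.net/npm',
    'https://unpkg.com',
    'https://npm.elemecdn.com',
];

// Rewrite a URL that starts with one known prefix into all equivalent mirror URLs.
// Returns [url] unchanged when no prefix matches.
const expandNpmMirrors = (url) => {
    const prefix = NPM_PREFIXES.find((p) => url.startsWith(p + '/'));
    if (!prefix) return [url];
    const rest = url.slice(prefix.length); // e.g. "/jquery@3.6.0/dist/jquery.min.js"
    return NPM_PREFIXES.map((p) => p + rest);
};

console.log(expandNpmMirrors('https://cdn.jsdelivr.net/npm/jquery@3.6.0/dist/jquery.min.js'));
```

The resulting array is the kind of input a racing function such as lfetch expects.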

Note that this approach trades traffic for speed. Although the remaining requests are aborted once any node returns valid content, some extra traffic is unavoidable (roughly +20%). If your users are mostly on mobile data, please think twice!

This code is similar to the core functionality of freecdn-js, but it is implemented differently and fully supports dynamic pages.

Take jQuery as an example. You can accelerate it like this:

    Original URL:
    https://cdn.jsdelivr.net/npm/jquery@3.6.0

    Mirrors:
    https://fastly.jsdelivr.net/npm/jquery@3.6.0
    https://gcore.jsdelivr.net/npm/jquery@3.6.0
    https://unpkg.com/jquery@3.6.0
    https://unpkg.zhimg.com/jquery@3.6.0    # back-to-origin problems
    https://npm.elemecdn.com/jquery@3.6.0
    https://npm.sourcegcdn.com/jquery@3.6.0    # bans repositories for abuse
    https://cdn1.tianli0.top/jquery@3.6.0    # bans repositories for abuse

We can quickly knock together a small ServiceWorker script to do the front-end acceleration:

Complete ServiceWorker code (I have read the description above carefully and will not copy it blindly):

    const CACHE_NAME = 'ICDNCache';
    let cachelist = [];

    self.addEventListener('install', function (installEvent) {
        self.skipWaiting();
        installEvent.waitUntil(
            caches.open(CACHE_NAME)
                .then(function (cache) {
                    console.log('Opened cache');
                    return cache.addAll(cachelist);
                })
        );
    });

    self.addEventListener('fetch', event => {
        // try/catch cannot catch an asynchronous rejection, so chain .catch instead
        event.respondWith(handle(event.request).catch(msg => handleerr(event.request, msg)));
    });

    const handleerr = async (req, msg) => {
        return new Response(`<h1>The CDN splitter hit a fatal error</h1><b>${msg}</b>`, {
            headers: { "content-type": "text/html; charset=utf-8" }
        });
    };

    let cdn = { // Mirror list
        "gh": {
            jsdelivr: { "url": "https://cdn.jsdelivr.net/gh" },
            jsdelivr_fastly: { "url": "https://fastly.jsdelivr.net/gh" },
            jsdelivr_gcore: { "url": "https://gcore.jsdelivr.net/gh" }
        },
        "combine": {
            jsdelivr: { "url": "https://cdn.jsdelivr.net/combine" },
            jsdelivr_fastly: { "url": "https://fastly.jsdelivr.net/combine" },
            jsdelivr_gcore: { "url": "https://gcore.jsdelivr.net/combine" }
        },
        "npm": {
            eleme: { "url": "https://npm.elemecdn.com" },
            jsdelivr: { "url": "https://cdn.jsdelivr.net/npm" },
            zhimg: { "url": "https://unpkg.zhimg.com" },
            unpkg: { "url": "https://unpkg.com" },
            bdstatic: { "url": "https://code.bdstatic.com/npm" },
            tianli: { "url": "https://cdn1.tianli0.top/npm" },
            sourcegcdn: { "url": "https://npm.sourcegcdn.com/npm" }
        }
    };

    // Main handler
    const handle = async function (req) {
        const urlStr = req.url;
        const domain = (urlStr.split('/'))[2];
        let urls = [];
        for (let i in cdn) {
            for (let j in cdn[i]) {
                if (domain == cdn[i][j].url.split('https://')[1].split('/')[0] && urlStr.startsWith(cdn[i][j].url)) {
                    urls = [];
                    for (let k in cdn[i]) {
                        urls.push(urlStr.replace(cdn[i][j].url, cdn[i][k].url));
                    }
                    if (urlStr.indexOf('@latest/') > -1) {
                        // @latest can change at any time, so never cache it
                        return lfetch(urls, urlStr);
                    } else {
                        return caches.match(req).then(function (resp) {
                            return resp || lfetch(urls, urlStr).then(function (res) {
                                return caches.open(CACHE_NAME).then(function (cache) {
                                    cache.put(req, res.clone());
                                    return res;
                                });
                            });
                        });
                    }
                }
            }
        }
        return fetch(req);
    };

    const lfetch = async (urls, url) => {
        const controller = new AbortController();
        const PauseProgress = async (res) => {
            return new Response(await res.arrayBuffer(), { status: res.status, headers: res.headers });
        };
        if (!Promise.any) {
            Promise.any = function (promises) {
                return new Promise((resolve, reject) => {
                    promises = Array.isArray(promises) ? promises : [];
                    let len = promises.length;
                    let errs = [];
                    if (len === 0) return reject(new AggregateError([], 'All promises were rejected'));
                    promises.forEach((promise) => {
                        promise.then(value => {
                            resolve(value);
                        }, err => {
                            len--;
                            errs.push(err);
                            if (len === 0) {
                                reject(new AggregateError(errs));
                            }
                        });
                    });
                });
            };
        }
        return Promise.any(urls.map(mirror => {
            return new Promise((resolve, reject) => {
                fetch(mirror, { signal: controller.signal })
                    .then(PauseProgress)
                    .then(res => {
                        if (res.status == 200) {
                            controller.abort();
                            resolve(res);
                        } else {
                            reject(res);
                        }
                    })
                    .catch(reject); // Network errors and aborts must reject, or the race could hang
            });
        }));
    };

Serving the whole site from npm

This method was originally devised by Chen. It is a rather wild approach, but the speedup is remarkable.

It works best with static blogs such as Hexo. For WordPress and similar systems you first need to set up pseudo-static URLs properly and configure the dynamic endpoints separately.

What is the benefit of Hexo as a static blog? It is purely static, of course. The generated files can simply be dropped onto any web server and served.

The natural next step is to host the blog on npm and have the ServiceWorker intercept requests and redirect them to npm mirrors. The result is what you see on this blog: apart from the first screen, pages open almost instantly.

My blog's CI runs on GitHub Actions, so uploading the HTML to npm during deployment is trivial. Just add one step after the step that generates the site:

    - uses: JS-DevTools/npm-publish@v1
      with:
        token: $

Configure the npm token as an environment variable, and bump the version whenever you need to publish an update.

Next we need to handle requests inside the ServiceWorker. Let's set up a listener first:

    self.addEventListener('fetch', async event => {
        event.respondWith(handle(event.request));
    });

    const handle = async (req) => {
        const urlStr = req.url;
        const urlObj = new URL(urlStr);
        const urlPath = urlObj.pathname;
        const domain = urlObj.hostname;
        // Start from here
    };

First we must check whether the request is for the blog's own domain. Blindly intercepting requests to other sites would be a bad idea:

    if (domain === "blog.cyfan.top") { // Put the domain you want to intercept here
        // Start handling from here
    }

We must also preprocess the URL: extract the path and drop the query parameters. Default routes need particular care.

When we visit a URL, the web server usually appends the .html suffix for us, and a directory path defaults to index.html.

The # fragment must be stripped as well, otherwise the fetch will fail.

Define a fullpath function that preprocesses the path:

    const fullpath = (path) => {
        path = path.split('?')[0].split('#')[0]; // Drop the query string and the fragment
        if (path.match(/\/$/)) {
            path += 'index'; // Directory path: use the default document
        }
        if (!path.match(/\.[a-zA-Z]+$/)) {
            path += '.html'; // No extension: assume an HTML page
        }
        return path;
    };

The result is similar to:

    > fullpath('/')
    '/index.html'
    > fullpath('/p/1')
    '/p/1.html'
    > fullpath('/p/1?q=1234')
    '/p/1.html'
    > fullpath('/p/1.html#QWERT')
    '/p/1.html'

Then define a function that generates the list of mirror URLs to race:

    const generate_blog_urls = (packagename, blogversion, path) => {
        const npmmirror = [
            `https://unpkg.com/${packagename}@${blogversion}/public`,
            `https://npm.elemecdn.com/${packagename}@${blogversion}/public`,
            `https://cdn.jsdelivr.net/npm/${packagename}@${blogversion}/public`,
            `https://npm.sourcegcdn.com/npm/${packagename}@${blogversion}/public`,
            `https://cdn1.tianli0.top/npm/${packagename}@${blogversion}/public`
        ];
        for (var i in npmmirror) {
            npmmirror[i] += path; // Append the normalized path to every mirror prefix
        }
        return npmmirror;
    };

Next we plug it into the main route:

    if (domain === "blog.cyfan.top") { // Put the domain you want to intercept here
        return lfetch(generate_blog_urls('chenyfan-blog', '1.1.4', fullpath(urlPath)));
    }

Keep in mind, however, that npm mirrors usually serve files as plain text rather than HTML, so the headers need further handling. That is simple too: just continue the then chain:

    if (domain === "blog.cyfan.top") {
        return lfetch(generate_blog_urls('chenyfan-blog', '1.1.4', fullpath(urlPath)))
            .then(res => res.arrayBuffer()) // arrayBuffer is the most sensible and fastest way to read the body
            .then(buffer => new Response(buffer, { headers: { "Content-Type": "text/html;charset=utf-8" } })); // Redefine the headers
    }
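The chain above labels every response as text/html, which suits pages. If the same route also serves stylesheets, scripts, or images, the Content-Type would have to follow the file type. A minimal sketch, assuming a hypothetical helper named contentTypeFor and a small hand-written table that you would extend for your own site:

```javascript
// Small extension-to-MIME table; extend it with whatever your site actually serves.
const MIME_TYPES = {
    html: 'text/html;charset=utf-8',
    css: 'text/css;charset=utf-8',
    js: 'text/javascript;charset=utf-8',
    json: 'application/json;charset=utf-8',
    png: 'image/png',
    jpg: 'image/jpeg',
    svg: 'image/svg+xml',
};

// Pick a Content-Type from the file extension of an already-normalized path.
// Paths without an extension are treated as HTML pages.
const contentTypeFor = (path) => {
    const match = path.match(/\.([a-zA-Z0-9]+)$/);
    const ext = match ? match[1].toLowerCase() : 'html';
    return MIME_TYPES[ext] || 'application/octet-stream';
};

console.log(contentTypeFor('/p/1.html'));
console.log(contentTypeFor('/css/main.css'));
```

The returned string could replace the fixed "text/html;charset=utf-8" value when the Response is rebuilt.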

With that, apart from the first screen, your website is effectively hosted on mainstream CDN servers around the world (including China), and the speedup is hard to beat. If the origin site is hosted on Cloudflare (cf), you get a site that is hard to take down and loads extremely fast in mainland China (first screen aside).

Hands-free: automatic npm version updates

Some will ask: why not simply use latest to get the newest version, instead of specifying it by hand?

Put simply, using @latest in production is a very unwise choice. You never know when the other side's cache will be refreshed, and you may be served a version from a year ago.

To save costs and avoid going back to origin, CDN providers cache resources, static ones especially. jsd, for example, caches latest for 7 days, though it can be purged manually. unpkg caches at the Cloudflare edge for 2 weeks, and eleme has gone nearly 6 months without refreshing. As for self-hosted mirrors, you have no idea when they update. With latest you effectively get a random version, which is almost unusable.

A pinned version, by contrast, is usually cached by the CDN permanently; after all, a fixed version never changes. Requesting a pinned version also tends to be somewhat faster, because the file stays cached and hits are more likely to be hot.

ServiceWorker side

Use the npm registry to look up the latest version. The official endpoint is:

https://registry.npmjs.org/chenyfan-blog/latest

The version field of the response is the latest version.

There are also many mirrors of the npm registry. Take Tencent and Alibaba as examples:

    https://registry.npmmirror.com/chenyfan-blog/latest    # Alibaba, can be synced manually
    https://mirrors.cloud.tencent.com/npm/chenyfan-blog/latest    # Tencent, synced daily in the early morning

Getting the latest version isn’t hard either:

    const mirror = [
        `https://registry.npmmirror.com/chenyfan-blog/latest`,
        `https://registry.npmjs.org/chenyfan-blog/latest`,
        `https://mirrors.cloud.tencent.com/npm/chenyfan-blog/latest`
    ];

    const get_newest_version = async (mirror) => {
        return lfetch(mirror, mirror[0])
            .then(res => res.json())
            .then(res => res.version); // then needs a function; passing res.version directly would throw
    };
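Because the registry mirrors sync at different times, the mirror that wins the race may report an older version than one already seen. One possible guard is to compare versions and never go backwards. This is a sketch with hypothetical helper names (compareVersions, pickNewer), and it assumes plain numeric x.y.z versions that only ever increase; it does not apply if you publish random version numbers.

```javascript
// Compare two "x.y.z" version strings numerically.
// Returns a positive number if a > b, a negative number if a < b, and 0 if equal.
const compareVersions = (a, b) => {
    const pa = a.split('.').map(Number);
    const pb = b.split('.').map(Number);
    for (let i = 0; i < Math.max(pa.length, pb.length); i++) {
        const diff = (pa[i] || 0) - (pb[i] || 0);
        if (diff !== 0) return diff;
    }
    return 0;
};

// Keep whichever version is newer; `stored` may be null on the first run.
const pickNewer = (stored, fetched) =>
    stored && compareVersions(stored, fetched) > 0 ? stored : fetched;

console.log(pickNewer('1.1.10', '1.1.9'));
console.log(pickNewer(null, '1.1.4'));
```

Comparing segment by segment matters here: as plain strings, '1.1.9' would sort after '1.1.10'.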

There is another pitfall here: a ServiceWorker's global variables are destroyed once all pages are closed. On the next start, the handler may respond before the code that would fill those variables again has run. The latest version therefore cannot live in a plain variable; it has to be persisted. Here is one way to do that, using CacheStorage as the store:

    self.db = { // Global db with just read and write; skip this if it is unclear
        read: (key, config) => {
            if (!config) { config = { type: "text" }; }
            return new Promise((resolve, reject) => {
                caches.open(CACHE_NAME).then(cache => {
                    cache.match(new Request(`https://LOCALCACHE/${encodeURIComponent(key)}`)).then(function (res) {
                        if (!res) return resolve(null); // Nothing stored yet
                        res.text().then(text => resolve(text));
                    }).catch(() => {
                        resolve(null);
                    });
                });
            });
        },
        write: (key, value) => {
            return new Promise((resolve, reject) => {
                caches.open(CACHE_NAME).then(function (cache) {
                    // Resolve only after the entry has actually been stored
                    return cache.put(new Request(`https://LOCALCACHE/${encodeURIComponent(key)}`), new Response(value)).then(resolve);
                }).catch(() => {
                    reject();
                });
            });
        }
    };

    const set_newest_version = async (mirror) => { // Fetch the latest version and write it to the db
        return lfetch(mirror, mirror[0])
            .then(res => res.json()) // Parse the JSON
            .then(async res => {
                await db.write('blog_version', res.version); // Persist it
                return;
            });
    };

    setInterval(async () => {
        await set_newest_version(mirror); // Refresh periodically, once a minute
    }, 60 * 1000);

    setTimeout(async () => {
        await set_newest_version(mirror); // Refresh 5 seconds after startup, to avoid blocking
    }, 5000);

Then change the URL generation above from:

    generate_blog_urls('chenyfan-blog', '1.1.4', fullpath(urlPath))

to:

    // If nothing is stored yet on the first run, fall back to the initial version 1.1.4;
    // you could also fall back to latest (not recommended)
    generate_blog_urls('chenyfan-blog', await db.read('blog_version') || '1.1.4', fullpath(urlPath))

After that, users will get the latest version whenever possible, with no manual update needed.

CI side

With automatic updates on the front end in place, the version field in package.json would still have to be bumped by hand before each publish. This, too, can be handed over to CI.

Note, however, that although npm version patch bumps the z (patch) number, the bump is not pushed back to the repository. Every CI run starts from the same old version, so this trick only works once. I simply recommend using a random number here instead. The latest version returned by the API is whatever was uploaded most recently anyway.

The example code is not shown here. This step is not hard to do.
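As one possible shape for that step, here is a sketch that swaps the random number for a Unix timestamp, so each CI run gets a version that is both unused and larger than the previous one. buildVersion is a hypothetical helper, not part of the original setup, and writing the result into package.json is left to your CI script.

```javascript
// Build a version string whose patch number is the current Unix time in seconds,
// so every CI run publishes a version that is new and larger than the last one.
const buildVersion = (major, minor, now = new Date()) => {
    const seconds = Math.floor(now.getTime() / 1000);
    return `${major}.${minor}.${seconds}`;
};

console.log(buildVersion(1, 1));
```

The generated string can then be applied before publishing, for example with npm version <version> --no-git-tag-version.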

Tuning the response

Caching: the great invention of the Internet

I do not know why a few people are so sensitive about the small amount of space CacheStorage uses, but caching helps enormously with loading speed.

A website usually needs many of the same resources again and again. The browser has its own MemoryCache and DiskCache for these, but those caches are not guaranteed to persist, and they may no longer apply the next time the site is opened. CacheStorage is a key/value cache bucket built into the browser. It is persistent and long-lived, and the ServiceWorker can control it.

Resources from different domains should get different caching strategies. Static resources with a pinned version can be cached permanently; time-sensitive static resources should not be cached at all, or only for a short time.
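Such a rule can be written as a small pure function that maps a URL to a policy. This is only a sketch: cachePolicyFor, the three policy names, and the shortened host list are assumptions made for illustration.

```javascript
// Decide how long a response may be cached, based only on its URL.
// 'forever': pinned version, the content never changes
// 'short':   @latest or unversioned, may change at any time
// 'none':    anything that does not come from a known static CDN host
const STATIC_HOSTS = ['cdn.jsdelivr.net', 'unpkg.com', 'npm.elemecdn.com'];

const cachePolicyFor = (urlStr) => {
    const url = new URL(urlStr);
    if (!STATIC_HOSTS.includes(url.hostname)) return 'none';
    if (url.pathname.includes('@latest')) return 'short';
    // A pinned version looks like "@1.2.3" somewhere in the path
    return /@\d+\.\d+\.\d+/.test(url.pathname) ? 'forever' : 'short';
};

console.log(cachePolicyFor('https://unpkg.com/jquery@3.6.0/dist/jquery.min.js'));
console.log(cachePolicyFor('https://cdn.jsdelivr.net/npm/jquery@latest/dist/jquery.min.js'));
```

A fetch handler could consult the policy before deciding whether to read from or write to CacheStorage.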

CacheStorage is not exclusive to the ServiceWorker; you can use it from page scripts and from the ServiceWorker at the same time. Here is some sample code:

    const CACHE_NAME = 'cache-v1'; // Name of the cache bucket
    caches.open(CACHE_NAME).then(async function (cache) {
        const KEYNAME = new Request('https://example.com/1.xxx'); // The key, a Request object
        await cache.put(KEYNAME, new Response('Hello World')); // Store a custom response
        await cache.match(KEYNAME).then(async function (response) {
            console.log(await response.text()); // Look it up and print it
        });
        // Store a fetched response (cache.put needs a Response, not an ArrayBuffer)
        await cache.put(KEYNAME, await fetch('https://example.com/2.xxx'));
        await cache.matchAll().then(function (responses) { // List all entries (matchAll can also filter by request)
            for (let response of responses) {
                console.log(response.url);
            }
        });
        await cache.delete(KEYNAME); // Delete the entry
    });

setTimeout: millisecond-level control of the response

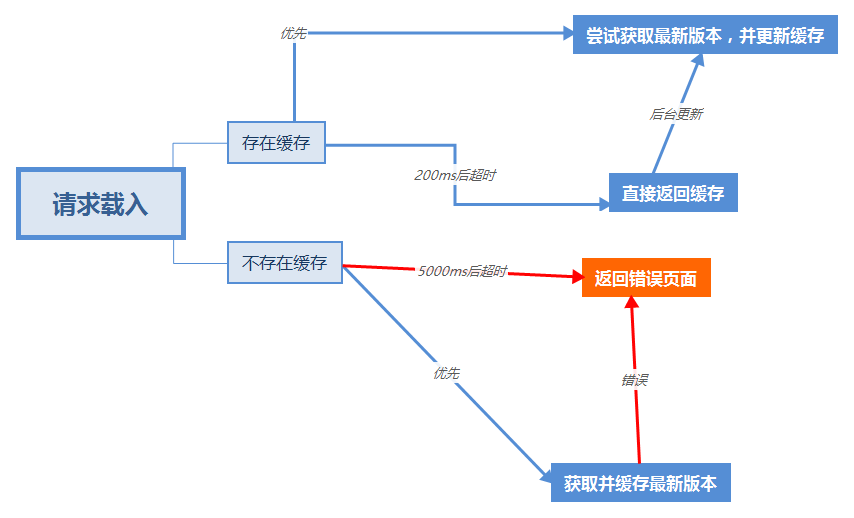

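Even for a single page, you need a sensible decision tree. The figure above shows the decision tree my blog currently uses for its pages.

JavaScript has two venerable timing functions: setTimeout and setInterval. Here I use setTimeout to run tasks in parallel.

It is easier to explain with a code snippet:

    // Pseudocode: isBlogRequest, hasCache, getCachedPage, fetchLatestPage,
    // putInCacheAndReturn and errorPage stand for your own logic
    if (isBlogRequest(req)) {
        // Use a Promise here, so the timers below need no callbacks and can simply call resolve
        return new Promise(function (resolve, reject) {
            setTimeout(async () => {
                if (hasCache) {
                    // After 200 ms, return the cached page if the fetch below has not finished.
                    // The fetch is not cancelled: it keeps running in the background and
                    // refreshes the cache, but its result is no longer returned.
                    setTimeout(() => { resolve(getCachedPage(req)); }, 200);
                    // Runs immediately, highest priority. Call resolve only once the fetch has
                    // succeeded: resolve(promise) would lock in the pending fetch and disable the fallback.
                    setTimeout(() => {
                        fetchLatestPage(req).then(putInCacheAndReturn).then(resolve).catch(() => {});
                    }, 0);
                } else {
                    // Runs immediately, highest priority
                    setTimeout(() => {
                        fetchLatestPage(req).then(putInCacheAndReturn).then(resolve).catch(() => {});
                    }, 0);
                    // If no fresh content has arrived after 5000 ms, return an error page;
                    // if the fetch has already succeeded, this resolve has no effect
                    setTimeout(() => { resolve(errorPage()); }, 5000);
                }
            }, 0); // The outer setTimeout pushes the work off the current call stack so the branches can run in parallel
        });
    }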
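The timing behavior can be checked in isolation with a small runnable sketch. freshOrFallback and after are hypothetical helpers, and timers stand in for the network. One deliberate difference from the snippet above: if the fresh request fails, this version answers with the fallback immediately instead of waiting for the timer.

```javascript
// Resolve with the fresh result if it arrives within `ms`; otherwise resolve with the fallback.
// The fresh request is not cancelled, so it can still finish in the background.
const freshOrFallback = (fresh, fallback, ms) =>
    new Promise((resolve) => {
        const timer = setTimeout(() => resolve(fallback()), ms);
        fresh.then((value) => {
            clearTimeout(timer);
            resolve(value); // No effect if the fallback has already been returned
        }).catch(() => {
            // The fresh request failed: answer with the fallback right away
            clearTimeout(timer);
            resolve(fallback());
        });
    });

// Hypothetical stand-in for a network fetch that takes `delay` ms
const after = (delay, value) => new Promise((r) => setTimeout(() => r(value), delay));

const quick = freshOrFallback(after(10, 'fresh page'), () => 'cached page', 50);
const slow = freshOrFallback(after(100, 'fresh page'), () => 'cached page', 50);
Promise.all([quick, slow]).then((results) => console.log(results));
```

A promise settles only once, so whichever resolve call comes first decides the response and later calls are ignored.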

Optimizing the first screen

window.stop: a brush with death

In fact, the most significant drawback of ServiceWorker is that after the first page load installs it, a refresh is needed before it takes control. That is, a visitor's first visit is not controlled by the ServiceWorker. In other words, the first screen is not accelerated, and its loading speed depends directly on your server (it flies once installed, but crawls before that).

I am also a minor, and in Zhejiang minors are not allowed to apply for an ICP filing; besides, changing the filing entity afterwards is a hassle. So I have decided not to file at least until after the college entrance examination. The consequence is direct: I cannot use CDN nodes in mainland China. I also cannot afford Hong Kong CN2 or an IPLC dedicated line, so there is very little I can do on the server side to optimize the first screen.

What is even more maddening is that the static resources fetched during the first load are not cached by the ServiceWorker, so once it activates they are fetched a second time, which slows things down further.

But we can minimize this drawback. We can use JavaScript to abort all in-flight requests, so that the first screen loads only one HTML file and one sw.js and nothing else, which reduces the first-screen delay.

Put the interrupting code as early in <head> as possible so that it is not blocked by other resources. For Hexo, open the theme's head.ejs and add it as the first thing inside the <head> tag:

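    (async () => { // Anonymous function, used to make sure body has loaded
        /*
        ChenBlogHelper_Set is stored in localStorage and records the ServiceWorker install state:
        0 or missing: not installed
        1: page load interrupted
        2: installed
        3: activated, with the necessary files cached (not shown here, can be ignored)
        */
        const $ = document.querySelector.bind(document); // Syntactic sugar
        // Random integer helper. Its definition is missing from the original snippet,
        // so this one is an assumption.
        const ranN = (min, max) => Math.floor(Math.random() * (max - min + 1)) + min;
        if ('serviceWorker' in navigator) { // If ServiceWorker is supported
            if (Number(window.localStorage.getItem('ChenBlogHelper_Set')) < 1) {
                window.localStorage.setItem('ChenBlogHelper_Set', 1);
                window.stop();
                document.write('Wait');
            }
            navigator.serviceWorker.register(`/sw.js?time=${ranN(1, 88888888888888888888)}`) // Random number, forces an update
                .then(async () => {
                    if (Number(window.localStorage.getItem('ChenBlogHelper_Set')) < 2) {
                        setTimeout(() => {
                            window.localStorage.setItem('ChenBlogHelper_Set', 2);
                            // window.location.search = `?time=${ranN(1, 88888888888888888888)}`
                            // Deprecated: reloading directly after the 500 ms wait works fine
                            window.location.reload(); // Reload so that the ServiceWorker takes control
                        }, 500); // Wait 500 ms after installation so it can activate
                    }
                })
                .catch(err => console.error(`ChenBlogHelperError: ${err}`));
        }
    })();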

Of course, this causes a blank screen for about 500 ms. If you find that ugly, you can load a waiting page as I do. Just change document.write() to:

    document.body.innerHTML = await (await fetch('https://npm.elemecdn.com/[email protected]/public/notice.html')).text()

That is all.

Origin server optimization: the last straw to clutch at

In fact, I have always considered origin server optimization both the most necessary and the least important part of the whole site. Necessary, because it determines the initial loading speed, which is what matters most to a visitor. Unimportant, because compared with downloading static resources, rendering the page, and compiling scripts, the first HTML load contributes very little to the overall result.

Especially once the ServiceWorker has taken over, all later visits are essentially decoupled from the origin server, except for ServiceWorker updates (and the case where every mirror is down). Normal browsing no longer touches it, so accelerating the origin is not really necessary.

Of course it still helps somewhat: at least the first load will not hang. My requirement is simple: finishing the first HTML download within 600 ms is good enough.

The best option is Hong Kong CN2, which I cannot afford. The next best is an ordinary Hong Kong server. But I prefer to freeload first, so I used Vercel. Vercel lets you choose nodes (mainly Amazon and Google). I ran a few tests (for a website, connectivity matters most, then latency; packet loss and throughput come last, especially when there is only a single 10 KB page) and settled on the following strategy:

- China Telecom: 104.199.217.228 (Google Cloud, Taipei), 70 ms. Routed via Singapore, but the best quality available.

- China Unicom: 18.178.194.147 (Amazon, Japan), 45 ms. AS4837 and AWS interconnect in Tokyo.

- China Mobile: 18.162.37.140 (Google Cloud, Hong Kong), 60 ms. For China Mobile, Hong Kong is always the best choice.

The reasons for not recommending other regions are:

China Telecom: apart from Taipei, essentially all routes to Google Cloud go through NTT in Japan, which is bad. Most routes to Amazon detour through the United States, and latency to Japan is abnormally high.

China Unicom: routes to Hong Kong detour through the United States. Routes to Japan have a direct interconnection point with a fairly light load, and the latency is decent.

China Mobile: not much to say. China Mobile's international connectivity is poor outside the Asia-Pacific region.

Postscript

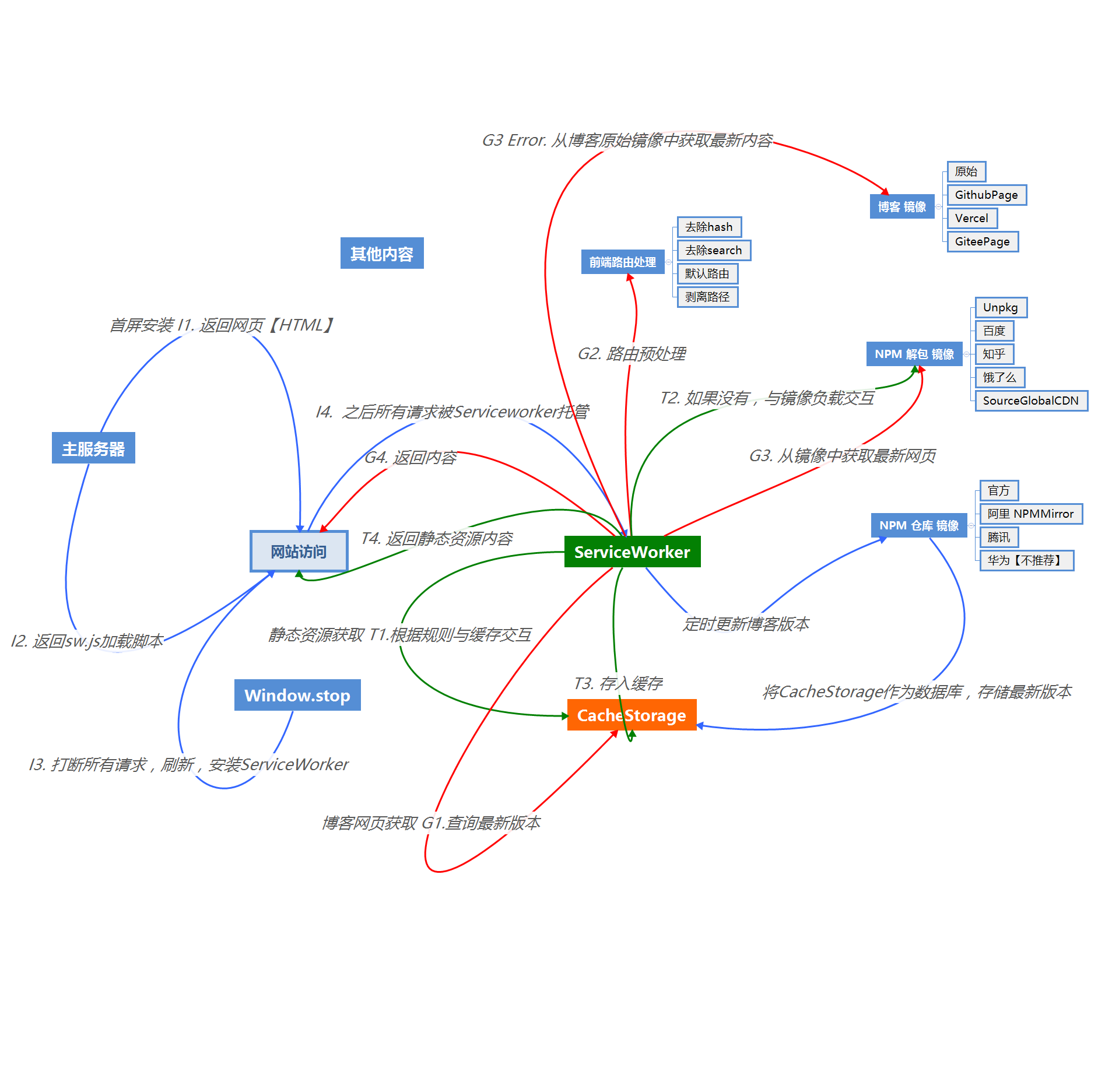

A mind map summarizing the whole article. Did you get all that?
