Original link: https://www.kawabangga.com/posts/4689

The overload mentioned in this article is easy to be confused with avalanche. Avalanche refers to that in a distributed system, a certain dependency cannot provide services normally, but the system cannot block this dependency, and eventually the entire system cannot provide services.

Overload is when a service receives more requests than it can handle.

So the following discussion uses the word overload, although sometimes we are discussing the same problem, but using the word “avalanche”.

For overload, there seems to be a very simple solution: many systems are now designed with current limiting in mind. That is, if the QPS that a certain system can handle is 1000, it will only allow 1000 requests to be processed at the same time. When the 1001st request comes, it will quickly get an error and return directly. This way the protection system can always successfully handle 1000 requests.

This is Rate limit, a typical overload protector. Rate limit has many implementation methods: Token bucket , Leaky bucket , and so on.

But is this enough?

Imagine a situation: There is a website (such as this blog you are currently reading) that can handle 1k/s HTTP requests. But there are currently 10,000 visitors visiting, and then a gateway (such as Nginx is triggered) ) current limiting mechanism, directly access 429 Too many requests for requests after the 1000th per second.

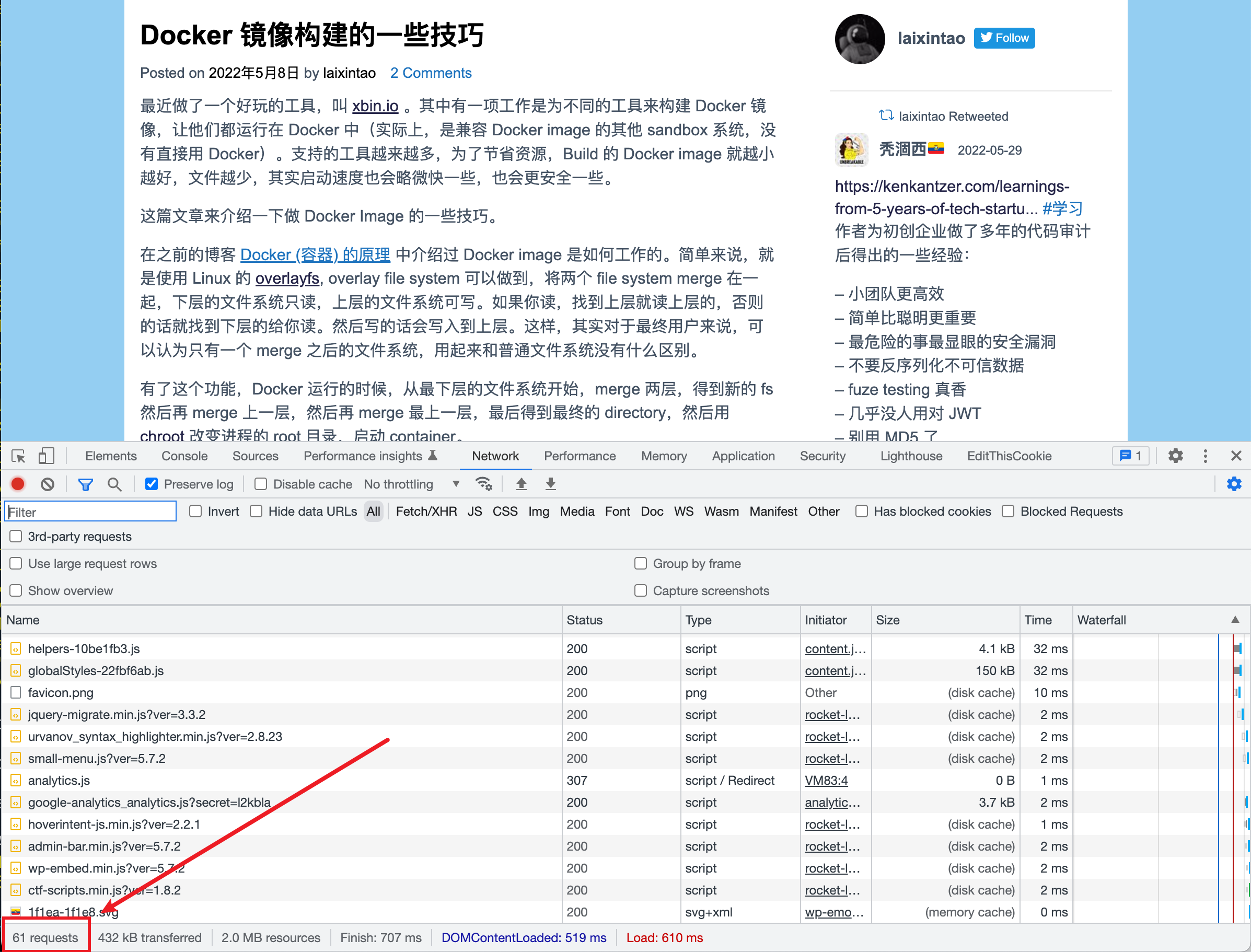

In this case, the website itself is processing 1000 requests per second, which seems to be working properly, but what about the end user? Can they browse the web normally? The answer is no. Because, taking the homepage of the blog as an example, it takes a total of 61 HTTP requests to display properly. That is to say, each user needs to successfully get 61 HTTP responses to see a complete web page. Otherwise, some css files may be missing and the format of the website may be displayed incorrectly; or some images may be missing and the content may not be known. What are you talking about; or the inability to request some js causes some functionality to be missing. In order to see the full page, they may re-refresh the page, further adding to the current total traffic to the site.

Consider this situation again. In a microservice system, in order to display the detailed information of a commodity, the “commodity microservice” needs to call the “user microservice” 3 times for the following purposes:

- Get the region where the user is located and use it to calculate the shipping fee;

- Get the user’s membership level and render discounts;

- Get the points information in the user’s current account, and render out how much money can be discounted if the points are used;

If the “user microservice” is overloaded, and the user microservice restricts the flow of all request sources, now each of the three requests may fail, and each failure cannot successfully render the product details.

There is another situation. Suppose a service discovery system uses Etcd, a database with lease, as a backend dependency. After a service is registered, it needs to keep renewing the lease to keep it online. If it hangs up and does not renew the lease, then when the lease expires, Etcd will delete it from the database.

One day, the system rebooted, all the services started re-registering themselves, and the volume of requests overloaded Etcd. In order to protect itself, Etcd also has a rate limit mechanism, which only allows 3000 requests to be processed at the same time, and returns an error directly for the 3001st request. At this time, some services can be successfully registered, but subsequent renew lease (keepalive) requests may fail. In this way, even if Etcd can successfully process 3000 registration requests, the services of these registration functions have to re-register themselves because keepalive requests cannot be accepted. It will cause Etcd to be in a state of overload, which can never be recovered.

In the above cases, the system will still be overloaded when there is a Rate limiter.

When overloading, the total request volume is larger than the capacity, so our solution is to ensure that the total request volume continues to decrease over time, and finally, the total request volume is within the capacity that can be processed. Although some requests may fail, their eventual retry will succeed. In other words, to ensure that the requests we process are all “valid” requests.

To give a counter example: a website uses a queue to cache HTTP requests that exceed the capacity limit, and then continuously takes requests from the queue for processing. The reason this is a bad solution is because in the HTTP scenario, if a user can’t open the page, he will refresh instead of waiting here. Therefore, the request that the website server takes out of the queue is always the request that the user has abandoned, so it has been processing the “invalid” request.

Some ideas to consider are as follows.

Throttling based on context

When overloaded, we need to ensure that the number of users still within the capacity limit can be served normally. However, current limiting cannot be performed purely from the point of view of requests. Instead, it should be added to the dimensions of the business up and down. for example:

- If a user opens a certain page, it is necessary to ensure that subsequent operations are successful, and at least one transaction can be completed; if a user has been failed by the rate limiter, then we will let this user’s request fail, don’t do it again Processing his request is a waste of resources;

- Or perform sharding according to user id to protect only a certain part of users; protect another part of users after a period of time;

Atomic call

In the second example above, consider turning three requests into one. In this way, the current limit according to the request dimension is still effective.

switch to asynchronous link

For some cases, consider using the “counterexample” above. For example, if the user’s payment request fails, the user is likely to abandon the transaction. We can consider using a queue to queue payment requests to give the payment request a better chance of being processed successfully:

- Display clear words in processing, try to appease users;

- If the user attempts to cancel, a pop-up confirmation prompt can cancel the payment, the transaction has not yet occurred, but encourages to wait;

- For users with good credit, or orders with low unit price, consider advancing the transaction first and deduct money from the account asynchronously;

Temporary downgrade (circuit breaker)

In the case of an avalanche, there can be an emergency way to temporarily block some resource-consuming features.

For example, in the above Etcd scenario, we can consider that when the service is successfully registered for the first time, set the lease time to 20 minutes, and start trying to renew at the 15th minute. If it fails, it will take an exponential time to back off and retry. If the number of retries is relatively small and the renew is successful, set the lease time to 10 minutes; next time, shorten the lease until the lease is short enough to meet the service health check time. This can give Etcd enough time to recover in a short period of time to ensure that the requests it is currently processing are the final requirements (meaning that registering the service is the first priority, and the health check kicking out the abnormal service is the secondary task , can be temporarily downgraded).

The post System Overload and how to deal with it first appeared on Kawabanga! .

related articles:

This article is reprinted from: https://www.kawabangga.com/posts/4689

This site is for inclusion only, and the copyright belongs to the original author.