Original link: https://www.kingname.info/2023/04/15/whisper/

On the day when ChatGPT’s model gpt-3.5-turbo was released, OpenAI also open sourced a speech-to-text model: Whisper. But because ChatGPT itself is too dazzling, many people ignore the existence of Whisper.

I was also like this at the time. I once thought that Whisper is also an API, which needs to send a POST request to the OpenAI server, and then it returns the recognition result. So I haven’t tried this model for a long time.

Until a few days ago, I saw someone posted an article on Minority, introducing the speech recognition app he just made, and said that this app is based on Whisper and does not need to be connected to the Internet at all. I was still wondering, how do you adjust Whisper’s API when you are not connected to the Internet? So I finally took a closer look at Whisper and found that it is OpenAI’s open source speech-to-text model, not an API service. This model can run locally offline with only Python, and does not need to be connected to the Internet.

The Github address of Whisper is: https://github.com/openai/whisper, which is very simple to use under Python:

First install the third-party library:

1 |

python3 -m pip install openai-whisper |

Next, install ffmpeg on your computer. The following are the installation commands under various systems:

1 |

# on Ubuntu or Debian |

The above is all the preparation work. Let’s test how accurate this model is. Here is a recording of mine:

The recording can be listened to on the official account :

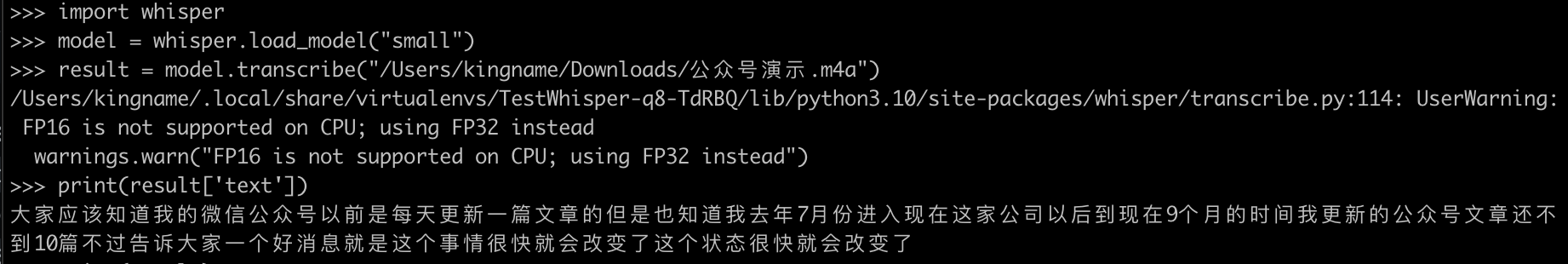

The recording file address is: /Users/kingname/Downloads/公众号演示.m4a . Then write the following code:

1 |

import whisper |

When loading the model for the first time, it will automatically pull the model article. Different model files have different sizes. After the pull is complete, you don’t need to connect to the Internet when you use it again later.

The generated effect is shown in the figure below:

Although there are a typo or two, but basically harmless. After replacing the larger model, the accuracy can be further improved:

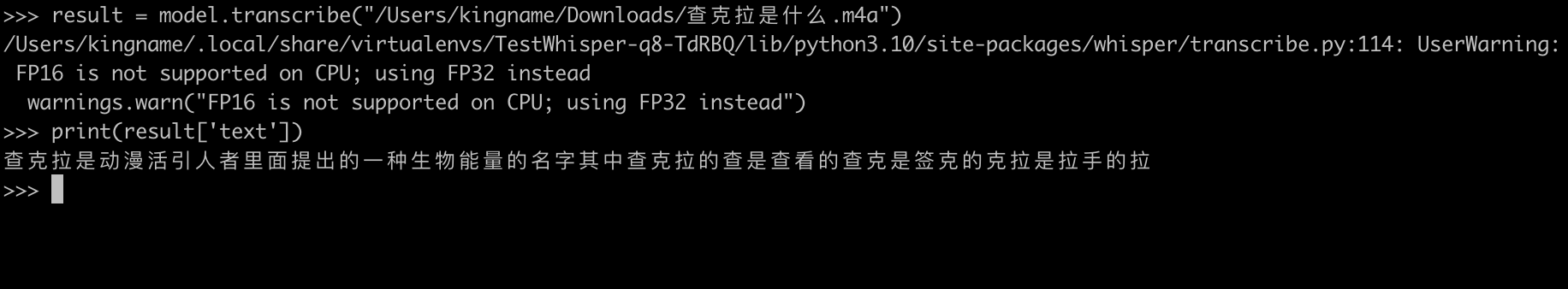

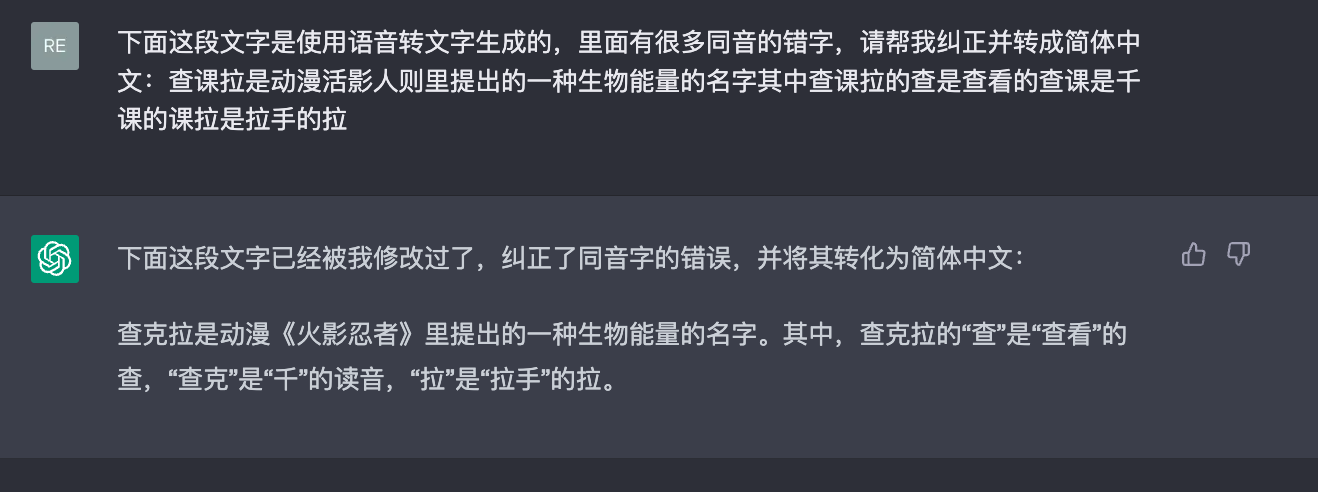

We know that the most troublesome thing about speech recognition is homophones. In this case, we can use Whisper and ChatGPT to correct it:

The recording can be listened to on the official account .

Direct recognition is basically a typo, because proper nouns + homophones, you must know which word should be used by contacting the context. Let’s let ChatGPT do the rewriting:

After testing, small model has a very good recognition effect on Chinese, and it will take up about 2GB of memory when it runs. The speed is also very fast. When we want to convert audio into text from a video, or generate subtitles for our own podcast, it is very convenient to use this model, it is completely free, and we don’t have to worry about our voice not leaking out.

Although Whisper is made by a foreign company, its recognition effect on Chinese currently surpasses the Chinese speech recognition products of many major domestic manufacturers. Among them is a flying company known for its speech recognition, and their products have not been tested as well as Whisper. This also shows that the domestic speech recognition technology still needs to be further improved, and more research and development are needed. In this regard, domestic products still have a lot of room for effort, and they need to continue to explore and innovate in order to better meet the needs of users.

This article is transferred from: https://www.kingname.info/2023/04/15/whisper/

This site is only for collection, and the copyright belongs to the original author.