Original link: https://www.ixiqin.com/2023/08/18/how-to-debug-the-problem-of-crawlers-unable-to-successfully/

When writing a crawler, the most common problem is that a page loads normally in the browser, but the same request made from code fails to fetch it.

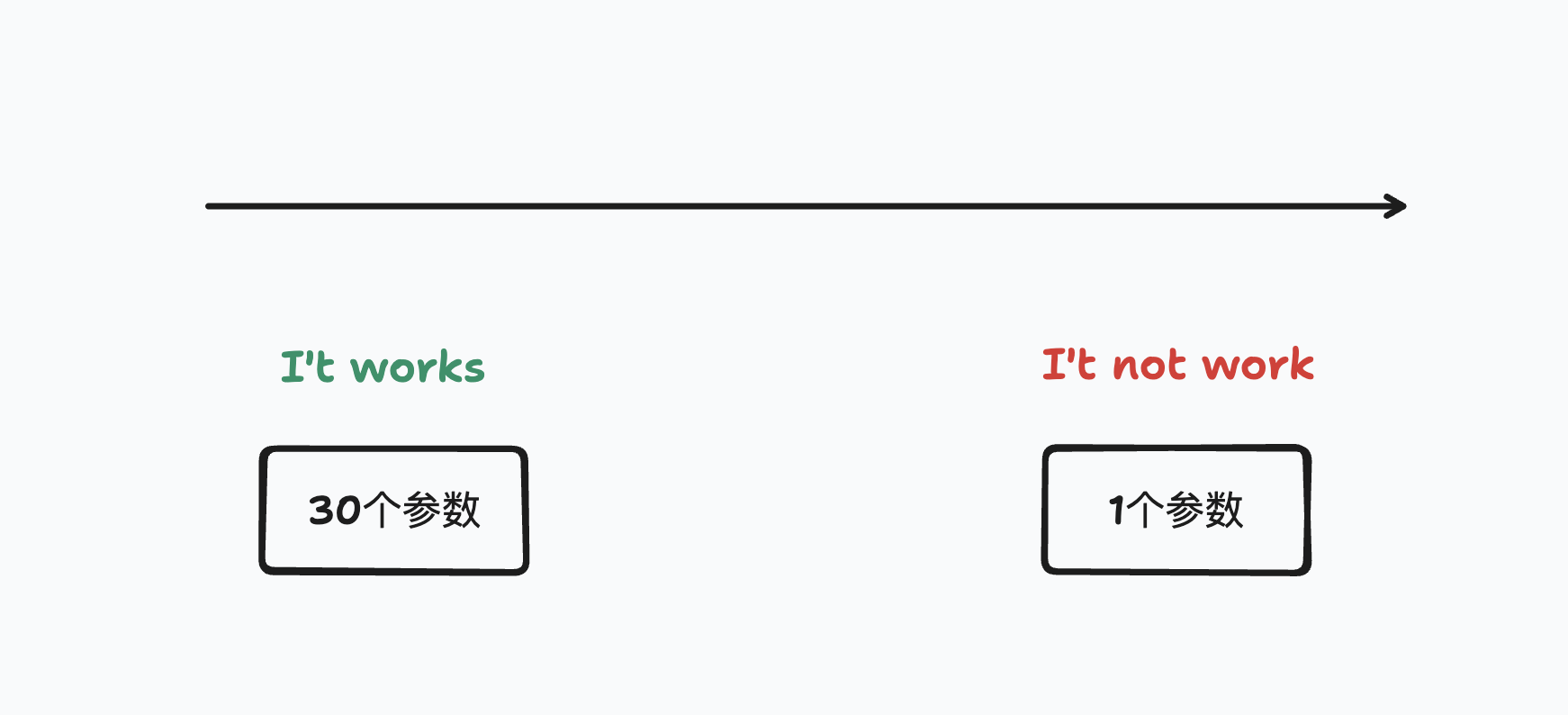

In this situation, the request our crawler sends usually differs from the one the browser sends (for example, the browser sends 30 parameters, but our code sends only one), and that difference leads to a different result. To get the same result in code as in the browser, the most important thing is to reproduce the browser's behavior completely so that the code can imitate it.

So the key is to work from the 30 parameters that succeed toward the 1 parameter that fails, and find out which of them actually matter. After all, we don't want to fill the code with magic values just to make a problem go away, so we need to identify the key parameters.
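
As a rough illustration of that gap, the sketch below lists the headers the browser sends that the crawler does not. The function name `missing_headers` and both sample dictionaries are made up for this example; they are not taken from any real request.

```python
from typing import Dict, List


def missing_headers(browser: Dict[str, str], crawler: Dict[str, str]) -> List[str]:
    """Return the browser header names that the crawler does not send."""
    # Header names are case-insensitive, so compare them in lower case.
    sent = {name.lower() for name in crawler}
    return sorted(name for name in browser if name.lower() not in sent)


# Hypothetical sample data, only to show the shape of the comparison.
browser_headers = {"Accept": "text/html", "Cookie": "a=1", "User-Agent": "Mozilla/5.0"}
crawler_headers = {"user-agent": "my-crawler"}
candidates = missing_headers(browser_headers, crawler_headers)
```

Every name in `candidates` is a suspect; the rest of the article is about narrowing that list down.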

Capture the live request

First, we need to obtain exactly the same request that the browser sends, because it is the basis for everything that follows: with it in hand, we can work down from 30 parameters toward 1. For this step, use Chrome DevTools to capture the request.

Press F12 to open Chrome DevTools, switch to the "Network" tab, and refresh the page so that the requests are recorded. Once the page has loaded, find the request you want to debug, right-click it, choose "Copy", and then "Copy as cURL". What you get is a command like the one shown below, which is exactly what your browser sends to the server.

Run this command in a terminal and you should see the same output as in the browser.

Now look at the copied command. It contains a URL (the one parameter our code already sends) and a large number of headers. One of these headers may be what the server checks to decide whether we are a crawler and should be rejected.

    curl 'https://www.baidu.com/' \
      -H 'Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7' \
      -H 'Accept-Language: zh-CN,zh;q=0.9,en;q=0.8,en-US;q=0.7' \
      -H 'Cache-Control: no-cache' \
      -H 'Connection: keep-alive' \
      -H 'Cookie: BIDUPSID=1B455AFF07892965CF63335283C0BD80; PSTM=1690036933; BD_UPN=123253; BDUSS=BDcmp1YzFSeTRDLXVGZlNBbDJKZ08ya1lMQUpBVTlEaWM5WE9mV25YWn5EfmxrRVFBQUFBJCQAAAAAAAAAAAEAAADwPbowsNe084aq4MIAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAH-C0WR~gtFkan; BDUSS_BFESS=BDcmp1YzFSeTRDLXVGZlNBbDJKZ08ya1lMQUpBVTlEaWM5WE9mV25YWn5EfmxrRVFBQUFBJCQAAAAAAAAAAAEAAADwPbowsNe084aq4MIAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAH-C0WR~gtFkan; delPer=0; BD_CK_SAM=1; ZFY=sMxi79JqlMJjPMSJ3gQr5ht5g0MCtkIqefc2OTMspV4:C; BD_HOME=1; BDRCVFR[feWj1Vr5u3D]=I67x6TjHwwYf0; rsv_jmp_slow=1691762441727; BAIDUID=1B455AFF07892965CF63335283C0BD80:SL=0:NR=10:FG=1; sug=0; sugstore=1; ORIGIN=2; bdime=0; BAIDUID_BFESS=1B455AFF07892965CF63335283C0BD80:SL=0:NR=10:FG=1; PSINO=2; COOKIE_SESSION=161794_0_4_3_2_11_1_0_4_4_1_0_161839_0_9_0_1691922269_0_1691922260%7C4%230_0_1691922260%7C1; MCITY=-131%3A; H_PS_PSSID=36558_39217_38876_39118_39198_26350_39138_39100; BA_HECTOR=8gah01agag8g2kala0a12l2o1idr2ba1o; RT="z=1&dm=baidu.com&si=8e7a4596-4b9f-45c3-bf30-f7dffaddd79d&ss=llewny2r&sl=3&tt=1ag&bcn=https%3A%2F%2Ffclog.baidu.com%2Flog%2Fweirwood%3Ftype%3Dperf&ld=89j&ul=104t&hd=105p"' \
      -H 'DNT: 1' \
      -H 'Pragma: no-cache' \
      -H 'Sec-Fetch-Dest: document' \
      -H 'Sec-Fetch-Mode: navigate' \
      -H 'Sec-Fetch-Site: none' \
      -H 'Sec-Fetch-User: ?1' \
      -H 'Upgrade-Insecure-Requests: 1' \
      -H 'User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36' \
      -H 'sec-ch-ua: "Not.A/Brand";v="8", "Chromium";v="114", "Google Chrome";v="114"' \
      -H 'sec-ch-ua-mobile: ?0' \
      -H 'sec-ch-ua-platform: "macOS"' \
      --compressed
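
To work with a copied command like this one in code, it may help to split it into a URL and a dictionary of headers first. The following is a minimal sketch using Python's standard `shlex` module. The function name `parse_curl` is an assumption of this example, and it only understands `-H`/`--header` and flags without a value (such as `--compressed`); commands that use other options with values would need more handling.

```python
import shlex
from typing import Dict, Tuple


def parse_curl(command: str) -> Tuple[str, Dict[str, str]]:
    """Split a 'Copy as cURL' command into (url, headers)."""
    # Join backslash-newline continuations so the command is one logical line.
    tokens = shlex.split(command.replace("\\\n", " "))
    url = ""
    headers: Dict[str, str] = {}
    i = 1  # skip the leading "curl"
    while i < len(tokens):
        token = tokens[i]
        if token in ("-H", "--header") and i + 1 < len(tokens):
            # Split on the first colon only; header values may contain colons.
            name, _, value = tokens[i + 1].partition(":")
            headers[name.strip()] = value.strip()
            i += 2
        elif token.startswith("-"):
            i += 1  # simplification: treat every other flag as valueless
        else:
            if not url:
                url = token  # the first bare argument is taken as the URL
            i += 1
    return url, headers
```

With the headers in a dictionary, removing some of them and retrying becomes a matter of building a smaller dictionary rather than editing the command by hand.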

Test the headers by bisection

To find out which headers are the key parameters, a convenient approach is to bisect them.

In practice, delete half of the headers and run the cURL command again to see whether it still returns the content you want. If the result is still correct, none of the deleted headers is required, so keep bisecting the remaining headers until you locate the ones that actually affect the result.

If removing a group of headers makes the response wrong, at least one header in that group is required: put the group back and bisect inside it to find which one. Continue until nothing more can be deleted. What remains is the smallest set of parameters that still works.
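
The manual procedure can also be automated. Below is a minimal sketch, not a definitive implementation: it tries to drop groups of headers, halving the group size each round, and keeps a group out whenever the request still succeeds without it. The names `minimize_headers` and `works` are assumptions of this example. `works` stands for whatever check you use (for instance, sending the request and inspecting the body), and the sketch assumes that check is deterministic and that extra headers never make a working request fail.

```python
from typing import Callable, Dict


def minimize_headers(
    headers: Dict[str, str],
    works: Callable[[Dict[str, str]], bool],
) -> Dict[str, str]:
    """Shrink `headers` to a subset that still satisfies `works`."""
    if not works(headers):
        raise ValueError("the full header set must work before it can be reduced")
    kept = dict(headers)
    chunk = max(len(kept) // 2, 1)  # start by removing half at a time
    while True:
        names = list(kept)
        for start in range(0, len(names), chunk):
            group = names[start:start + chunk]
            trial = {k: v for k, v in kept.items() if k not in group}
            if works(trial):
                kept = trial  # nothing in this group was required
        if chunk == 1:
            return kept  # every remaining header was tested on its own
        chunk = max(chunk // 2, 1)


# Offline stand-in for a real request: pretend the server needs two headers.
def fake_server_accepts(h: Dict[str, str]) -> bool:
    return "User-Agent" in h and "Cookie" in h


all_headers = {name: "x" for name in [
    "Accept", "Accept-Language", "Cache-Control", "Cookie",
    "DNT", "Pragma", "User-Agent", "sec-ch-ua",
]}
minimal = minimize_headers(all_headers, fake_server_accepts)
```

Each call to `works` costs one request in real use, so it is worth adding a delay between attempts when testing against a live site.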

At this point, you can safely put this minimal set of parameters into your code and maintain it there.

In addition, you can put the crawler's request into a unit test, so that when the target website changes its anti-crawler logic, you find out quickly.
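
For example, the reduced header set could live in one named constant next to the fetching code. This is a sketch using the standard library's `urllib.request`. The contents of `MINIMAL_HEADERS` are hypothetical: which headers survive the reduction depends entirely on the target site, and `build_request` and `fetch` are names invented for this example.

```python
import urllib.request

# Hypothetical outcome of the reduction; replace with what your own test found.
MINIMAL_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
        "AppleWebKit/537.36 (KHTML, like Gecko) "
        "Chrome/114.0.0.0 Safari/537.36"
    ),
}


def build_request(url: str) -> urllib.request.Request:
    """Create a request that carries only the headers found to be required."""
    return urllib.request.Request(url, headers=MINIMAL_HEADERS)


def fetch(url: str, timeout: float = 10.0) -> bytes:
    """Send the request and return the raw response body."""
    with urllib.request.urlopen(build_request(url), timeout=timeout) as response:
        return response.read()
```

Keeping the headers in a single constant means that when the site changes, there is one place to update.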
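
One possible shape for such a test, using the standard `unittest` module, is sketched below. The check `looks_like_real_page` and its markers are hypothetical; a real check would look for something specific to the page you crawl. The `fetch_body` method returns a canned string here so the sketch runs offline; in a real suite it would call your crawler.

```python
import unittest


def looks_like_real_page(body: str) -> bool:
    """Hypothetical check: a real page has a title and no verification notice."""
    return "<title>" in body and "verification" not in body.lower()


class CrawlerSmokeTest(unittest.TestCase):
    def fetch_body(self) -> str:
        # Placeholder: swap this for a call to the crawler's own fetch function.
        return "<html><head><title>Example</title></head></html>"

    def test_page_is_not_blocked(self) -> None:
        self.assertTrue(looks_like_real_page(self.fetch_body()))
```

Run it with `python -m unittest` on a schedule; a failure then signals that the minimal parameter set needs to be reduced again from a fresh browser request.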
