Original link: http://www.zmonster.me/2023/05/13/llm-prompt-engineering-practice-prototype.html

With the help of ChatGPT, I gave the project a name like xorius. This word does not actually exist, so the meaning is completely defined by me. It has given meaning, and it will be more appropriate to talk about it when it becomes bigger and stronger in the future.

After choosing a name, I am going to implement the simplest prototype first, and discuss some issues during the process. All the content in the following is a summary of my development log, and subsequent articles will basically adopt this model.

In order to realize the prototype, there are two options, one is to directly call the official interface or SDK of OpenAI, and the other is to choose a third-party package such as langchain . For convenience, I chose langchain.

First of all, whether it is to directly call the official interface, SDK, or use langchain, it needs to be accessed through a proxy in China:

import openai openai.proxy = 'http://localhost:8080'

After setting up the agent, you can simply call the OpenAI interface to get the answer with langchain, for example:

from langchain.chat_models import ChatOpenAI from langchain.schema import HumanMessage user_message = HumanMessage(content= 'Translate this sentence into Chinese: "to be or not to be"' ) #SafetyConsider here The real API KEY is hidden, so I won't emphasize it later llm = ChatOpenAI(temperature=0, openai_api_key= 'sk-xxxxxxxxxxxxxxxxx' ) answer = llm([user_message]) print (answer. content)

The above code does not specify the model to be used. By default, gpt-3.5-turbo will be used. After running, this result is obtained

be or not

Of course, this code is not a dialogue mode, and does not consider the chat history. If you want to chat, you need to maintain a chat history

from langchain.memory import ChatMessageHistory memory = ChatMessageHistory()

After that, each user input and answer can be added to this memory in order. For example, the previous user_message and answer can be added to it.

memory.add_user_message(user_message.content) memory.add_ai_message(answer.content)

History can be obtained through memory.messages

print (memory. messages)

result

[HumanMessage(content='Translate this sentence into Chinese: "to be or not to be"', additional_kwargs={}, example=False),

AIMessage(content='"To be or not to be"', additional_kwargs={}, example=False)]

and then use it in the next round of dialogue

user_message = HumanMessage(content= 'translate into Japanese' ) answer = llm(memory. messages + [user_message]) print ( 'Answer:' , answer. content) memory.add_user_message(user_message.content) memory.add_ai_message(answer.content) print ( 'History' ) print (memory. messages)

result

Answer: "生きるか死ぬか"

History:

[HumanMessage(content='Translate this sentence into Chinese: "to be or not to be"', additional_kwargs={}, example=False),

AIMessage(content='"To be or not to be"', additional_kwargs={}, example=False),

HumanMessage(content='translate into Japanese', additional_kwargs={}, example=False),

AIMessage(content='"生きるか死ぬか"', additional_kwargs={}, example=False)]

Combining the above code, as long as you write a loop, you can have a continuous dialogue

from langchain.chat_models import ChatOpenAI from langchain.memory import ChatMessageHistory memory = ChatMessageHistory() llm = ChatOpenAI(temperature=0, openai_api_key= 'sk-xxxxxxxxxxxxxxxxx' ) while True : user_message = input ( 'You: ' ).strip() if not user_message: continue if user_message.lower() in ( 'quit' , 'exit' ): break memory. add_user_message(user_message) answer = llm(memory. messages) print ( 'BOT:' , answer. content) memory.add_ai_message(answer.content)

However, the above code is problematic, because in essence, I am splicing historical messages and current input every time to input to the OpenAI interface, and the OpenAI interface has a limit on the total length of the processed text, gpt-3.5 – The maximum length of turbo is 4096 tokens, and gpt-4 supports a maximum of 32768 tokens (this token is the smallest unit after text segmentation in the language model, so don’t go into details first), and add user_message and answer to memory as above , then as long as there are enough dialogue rounds, this limit will definitely be exceeded, and an error will be reported if it exceeds, so it must be limited. It should be noted that the maximum text length is calculated by adding the input and output together. For example, if you use gpt-3.5-turbo and input 4000 tokens, you can only output the result of the longest 96 tokens.

To sum up, in order to ensure that the length limit of the interface is not exceeded, only a part of memory.messages needs to be taken. Assuming that the total number of tokens in this part of memory.messages taken is L1, we also need to ensure that the total number of tokens in the output result can reach L2, then you must ensure that L1 + L2 < 4096, L2 can be set by the parameter max_tokens when initializing ChatOpenAI, the default is unlimited if not set, then in order to better calculate L1, you need to set it explicitly, for example, set it to 1024:

max_tokens = 1024 llm = ChatOpenAI( temperature=0, openai_api_key= 'sk-xxxxxxxxxxxxxxxxx' , max_tokens=max_tokens )

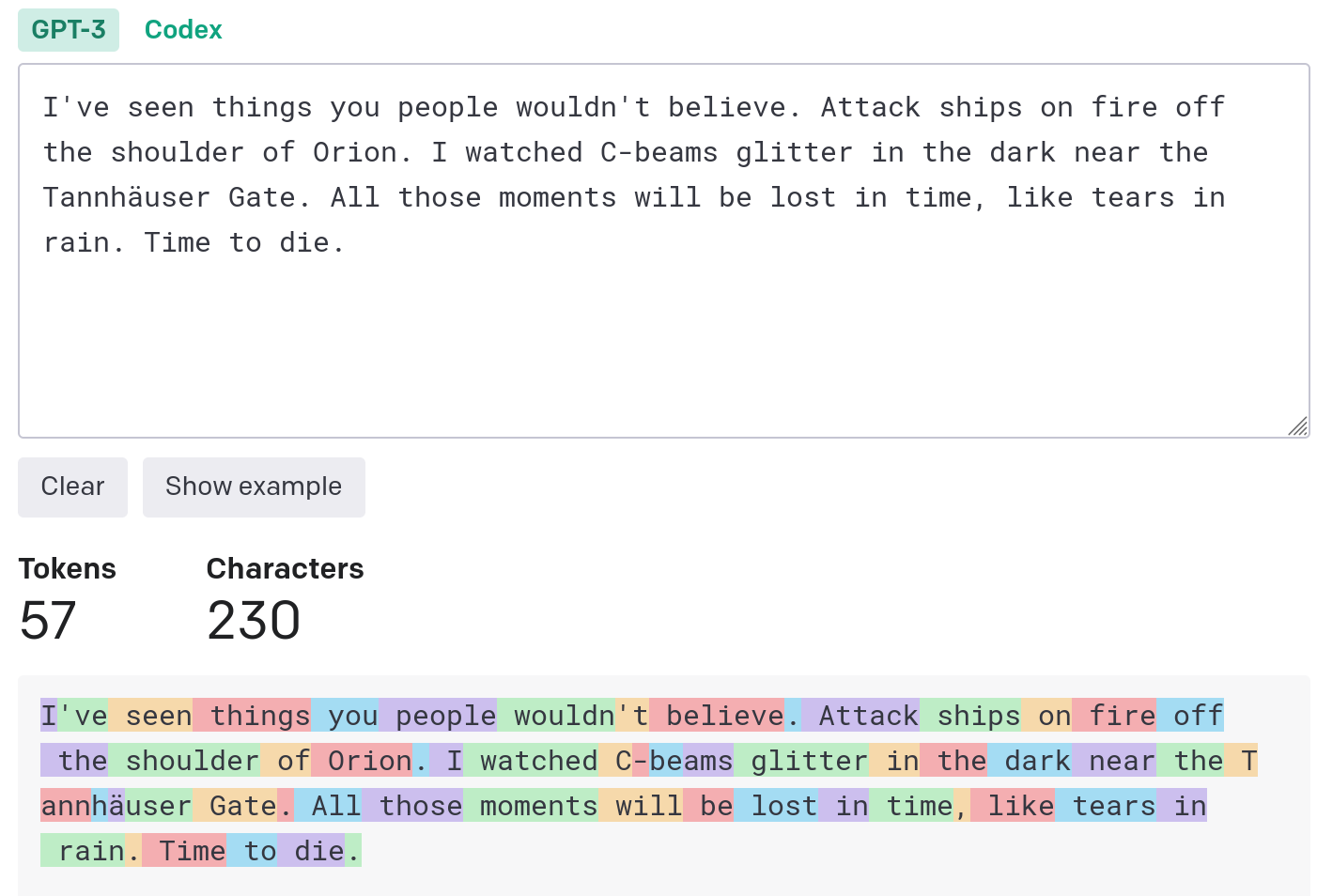

In the case of L2=1024, L1 < 4096 – 1024 = 3072 can be obtained. The next problem is to select memory.messages according to the maximum number of tokens. To do this, you must first be able to calculate the number of tokens for a given text. The tokens in the language model are not exactly equal to words. OpenAI provides An online tool shows the effect of converting text into tokens:

-

English is basically a word and a token, and sometimes a word is split into several tokens. For example, in the following example, the word Tannhäuser is split into five tokens T/ann/h/ä/user, but anyway Calculated by the number of words, the final number of tokens in English is less than the number of words. The total number of words in the text of the following example is 230, and the number of tokens is only 57

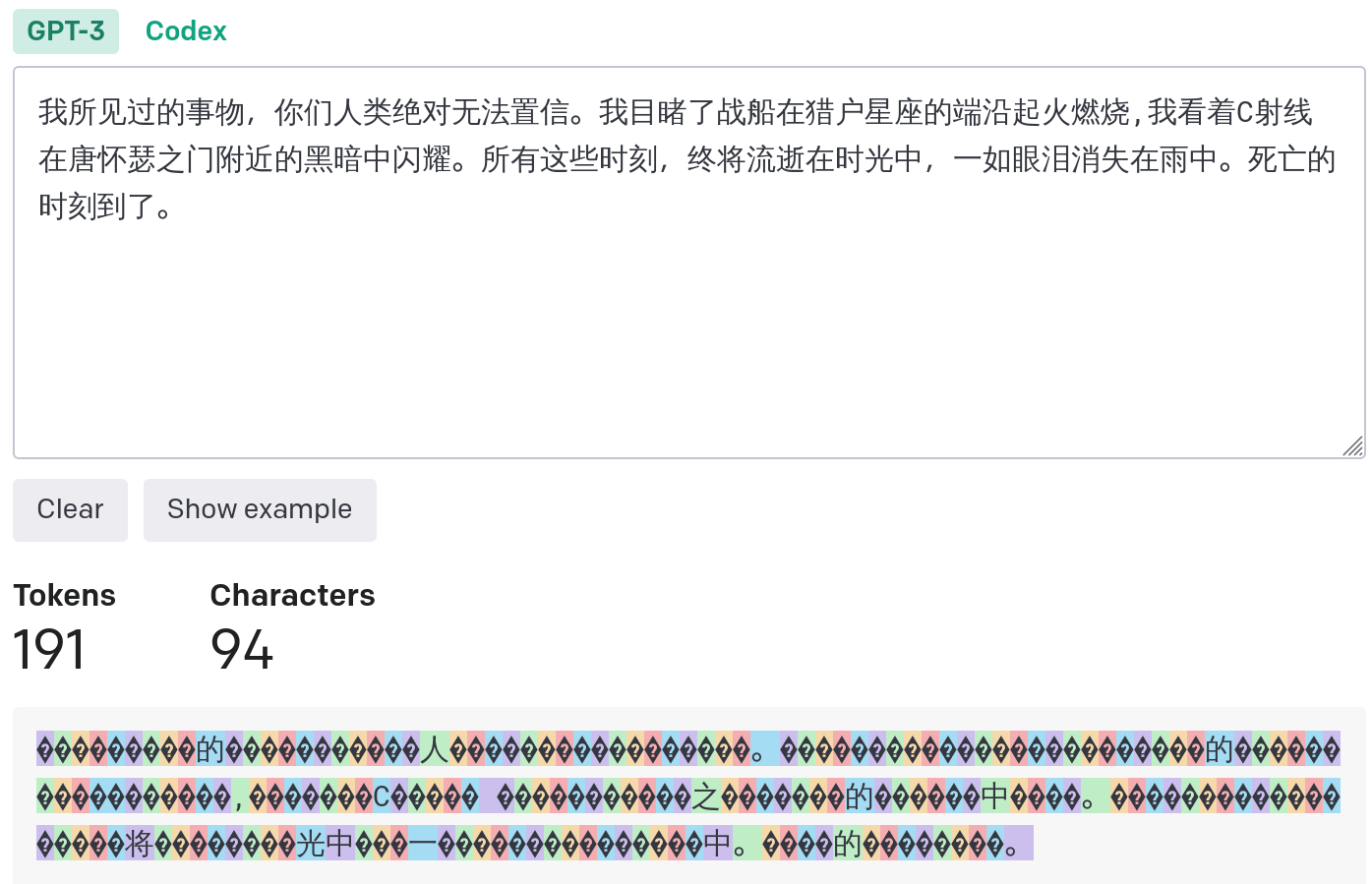

-

In Chinese, one word may be split into multiple tokens. Most of these tokens are incomprehensible, so in general, the number of tokens will be greater than the number of words. In the example below, the number of words in the text is 94, and the number of tokens is 191.

Note that the Chinese example above is the effect of the GPT3 encoder. After replacing it with the gpt-3.5-turbo encoder, the number of Chinese tokens drops to 124.

OpenAI provides the tiktoken python library for text-to-token encoding conversion

from tiktoken.model import encoding_for_model encoder = encoding_for_model( 'gpt-3.5-turbo' )

Since the input of the Chat Completion interface used by the gpt-3.5-turbo model is not in an ordinary text format, calculating the number of input tokens cannot simply add up the number of tokens input by the user and the result text of the interface response. OpenAI has given its own calculation method :

import tiktoken def num_tokens_from_messages (messages, model= "gpt-3.5-turbo-0301" ): """Returns the number of tokens used by a list of messages.""" try : encoding = tiktoken.encoding_for_model(model) except KeyError : encoding = tiktoken.get_encoding( "cl100k_base" ) if model == "gpt-3.5-turbo-0301" : # note: future models may deviate from this num_tokens = 0 for message in messages: num_tokens += 4 # every message follows <im_start>{role/name}\n{content}<im_end>\n for key, value in message.items(): num_tokens += len (encoding. encode(value)) if key == "name" : # if there's a name, the role is omitted num_tokens += -1 # role is always required and always 1 token return num_tokens else : raise NotImplementedError ( f "num_tokens_from_messages() is not presently implemented for model {model} .\n" "See https://github.com/openai/openai-python/blob/main/chatml.md" "for information on how messages are converted to tokens." )

Note: In order to facilitate the calculation of the length of a single message, I made some changes to num_tokens_from_messages and deleted the line num_tokens += 2.

Note 2: After testing, the function provided by OpenAI, the calculated number of tokens is 1 less than the actual number.

Based on this function, the selection of chat history can be realized. Here we simply implement a method that takes the latest chat history

from langchain.schema import AIMessage, HumanMessage def select_messages (messages, max_total_tokens=4096, max_output_tokens=1024): tokens_num = 0 selected = [] for message in messages[::-1]: role = 'system' if isinstance (message, AIMessage): role = 'assistant' elif isinstance (message, HumanMessage): role = 'user' cur_token_num = num_tokens_from_messages([{ 'role' : role, 'content' : message. content}]) if tokens_num + cur_token_num + 2 + max_output_tokens > max_total_tokens: break selected. append(message) tokens_num += cur_token_num selected = selected[::-1] if isinstance (selected[0], AIMessage): #Make sure the first one is a user message selected = selected[1:] if not selected: #Assume that the last item in messages is the current user input selected = message[-1] return selected

Then the previous continuous dialogue code can be transformed into this look

from langchain.chat_models import ChatOpenAI from langchain.memory import ChatMessageHistory memory = ChatMessageHistory() max_output_tokens , max_total_tokens = 1024, 4096 llm = ChatOpenAI( temperature=0, openai_api_key= 'sk-xxxxxxxxxxxxxxxxx' , max_tokens = max_output_tokens, ) while True : user_message = input ( 'You: ' ).strip() if not user_message: continue if user_message.lower() in ( 'quit' , 'exit' ): break memory. add_user_message(user_message) messages = select_messages( memory. messages, max_total_tokens=max_total_tokens, max_output_tokens = max_output_tokens ) print (f "selected messages: {messages} " ) answer = llm(messages) print ( 'BOT:' , answer. content) memory.add_ai_message(answer.content)

Setting max_output_tokens larger will select fewer histories, e.g.

-

When max_output_tokens=200, max_total_tokens=4096, the maximum number of input tokens can reach 3896, so you can select as many historical messages as possible

You: Translate this sentence into Chinese: “to be or not to be” selected messages: [HumanMessage(content='Translate this sentence into Chinese: "to be or not to be"', additional_kwargs={}, example=False)] BOT: "To be or not to be" You: Then translate into Japanese selected messages: [HumanMessage(content='Translate this sentence into Chinese: "to be or not to be"', additional_kwargs={}, example=False), AIMessage(content='"Survive Or destroy"', additional_kwargs={}, example=False), HumanMessage(content='translate into Japanese', additional_kwargs={}, example=False)] BOT: "生きるか死ぬか" -

max_output_tokens=4050, max_total_tokens=4096, the maximum number of tokens input is only 46, the historical information of the previous round cannot be used in the second round

You: Translate this sentence into Chinese: “to be or not to be” selected messages: [HumanMessage(content='Translate this sentence into Chinese: "to be or not to be"', additional_kwargs={}, example=False)] BOT: "To be or not to be" You: Translate into Japanese selected messages: [HumanMessage(content='Translate into Japanese', additional_kwargs={}, example=False)] BOT: もう一刻日本语に改言してください。

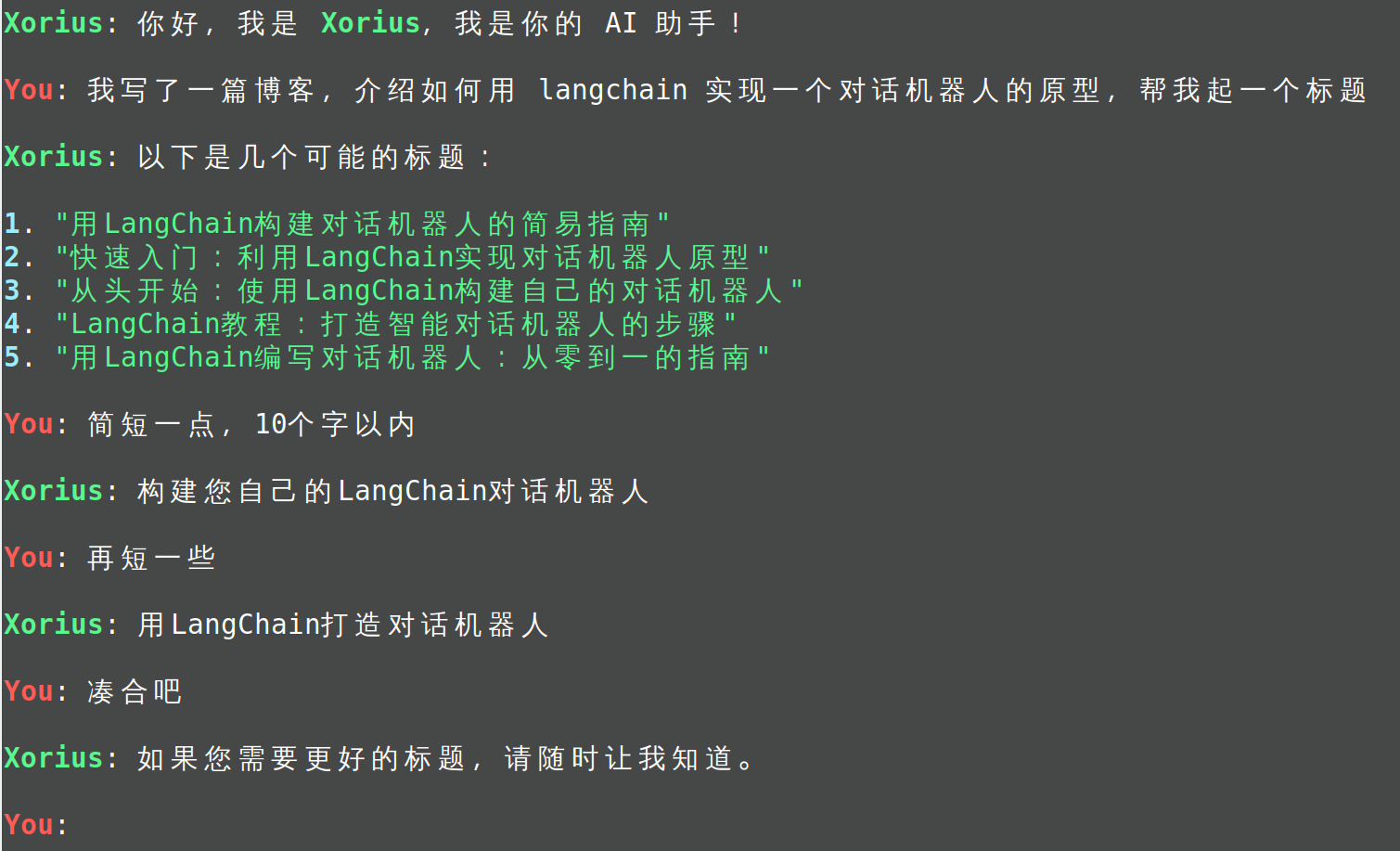

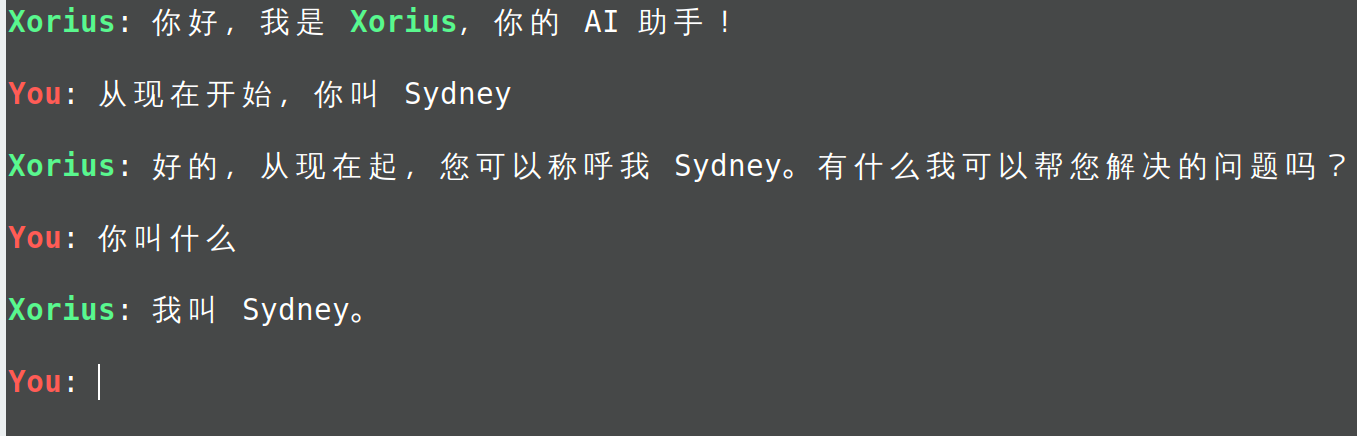

After realizing the selection of the chat history, the most basic prototype is actually fine. Further, you can also set some attributes for this chat robot, such as what is the name, what is the personality, etc. The specific method is in Every time the language model is called, in addition to the chat history and current input, an additional prompt describing the properties of the robot is input. In the OpenAI interface, the role of this prompt is required to be set to system. If you use langchain, just use SystemMessage directly. I Here simply put this SystemMessage at the top of the input, and transform the code into this:

from langchain.chat_models import ChatOpenAI from langchain.memory import ChatMessageHistory from langchain.schema import SystemMessage memory = ChatMessageHistory() system_message = SystemMessage( content=( "You are an AI assistant. Your name is xorius." "You can discuss any ideas and topics with your users, " "and you will help your users solve their problems as much as you can." ), ) max_total_tokens = 4096 - num_tokens_from_messages([{ 'role' : 'system' , 'content' : system_message.content}]) max_output_tokens = 1024 llm = ChatOpenAI( temperature=0, openai_api_key= 'sk-xxxxxxxxxxxxxxxxx' , max_tokens = max_output_tokens, ) while True : user_message = input ( 'You: ' ).strip() if not user_message: continue if user_message.lower() in ( 'quit' , 'exit' ): break memory. add_user_message(user_message) messages = select_messages( memory. messages, max_total_tokens=max_total_tokens, max_output_tokens = max_output_tokens ) print (f "selected messages: {messages} " ) answer = llm([system_message] + messages) print ( 'BOT:' , answer. content) memory.add_ai_message(answer.content)

In this way, let it introduce itself and it will answer according to the setting.

You: Introduce yourself selected messages: [HumanMessage(content='Introduce yourself', additional_kwargs={}, example=False)]

BOT: Hello, I'm Xorius, an artificial intelligence assistant. I can discuss various topics with you and help you solve problems. I can provide information on various topics such as technology, culture, history, health, travel and more. I can also perform various tasks such as setting reminders, sending emails, searching for information, and more. Please let me know what help you need and I will do my best to support you.

If you do not add this system_message, it will emphasize that it is developed by OpenAI in the answer obtained for the same input, as shown below:

You: Introduce yourself selected messages: [HumanMessage(content='Introduce yourself', additional_kwargs={}, example=False)]

BOT: I am an AI language model developed by OpenAI. I can do natural language processing and generation, help users answer questions, translate, write, etc. I have no entity, only exist in the computer, but I can interact with humans through text and voice. I don't get tired or make mistakes, but I also don't have emotions and awareness. I'm just a program, but I hope to be able to help and facilitate humans.

Finally, I made some adjustments to the above code and realized it as a command line tool in the project. See https://github.com/monsternlp/xorius for the code, and also released it to pypi. Execute pip install xorius to install it. After installation You can execute xorius-cli on the command line to enter the dialogue.

The command line parameters are described as follows:

- –api-key: set the API key of OpenAI

- –temperature: Set the temperature parameter, which is used to control the randomness of the generated results. If it is 0, it will not be random and the result with the highest probability will be generated every time. The default is 0.7

- –max-tokens: Set the maximum number of tokens allowed for the generated result, default 512

- –proxy: Set http proxy, mainly for domestic network environment

The prototype is realized, but on this prototype, some direct problems can be seen

- ChatMessageHistory is a memory-based historical message recording method. Once you exit the historical conversation message, all messages will be lost

- When the topic of discussion has been discussed earlier, the practice of selecting recent historical news cannot take advantage of previous discussions

- The method of fixedly setting the maximum number of tokens to output takes into account the situation of long output, which will lead to the inability to use more historical messages in the case of short output

- A globally set temperature parameter will affect the performance of the robot in handling different tasks. For example, tasks such as translation, classification, and factual question and answer do not require randomness, while writing and idea generation have a certain degree of randomness.

-

In the current mode, the practice of setting robot properties at the very beginning cannot guarantee the consistency and continuity of the settings, and users can always break through this setting through intentionally constructed inputs

These issues will be discussed further in subsequent articles.

This article is transferred from: http://www.zmonster.me/2023/05/13/llm-prompt-engineering-practice-prototype.html

This site is only for collection, and the copyright belongs to the original author.