Original link: http://gaocegege.com/Blog/modelz-beta

We are pleased to announce that ModelZ, a serverless GPU inference platform, has entered Beta. It offers high-performance, low-cost GPU inference without requiring users to manage the underlying infrastructure.

Features

ModelZ is a hosted service that provides users with a simple API and easy-to-use UI to deploy their machine learning models. ModelZ is responsible for all underlying infrastructure, including servers, storage and networking. Users can focus on developing models and deploying them on the platform without worrying about the underlying infrastructure.

ModelZ provides the following features:

- Serverless: The serverless architecture lets the platform scale up or down with your needs, giving you a reliable way to deploy and prototype machine learning applications at any scale.

- Reduced costs: Pay only for the resources you consume, with no additional charges for idle servers or cold starts. New accounts get 30 minutes of free L4 GPU usage; connecting a payment method adds another 90 minutes.

- OpenAI-compatible API: The platform exposes an OpenAI-compatible API, so you can integrate an open source LLM into your existing applications with just a few lines of code.

- Support for demo frameworks such as Gradio and Streamlit: ModelZ provides a prototyping environment that supports Gradio and Streamlit. Integration with Hugging Face Spaces makes it easier to access pretrained models and launch demos, so you can quickly test and iterate on your models.

Quick start

Getting started with ModelZ takes just three steps:

- Register for an account on the site.

- Use the template provided by ModelZ to create a model service.

- Send a request, or access the user interface (Gradio and Streamlit only).

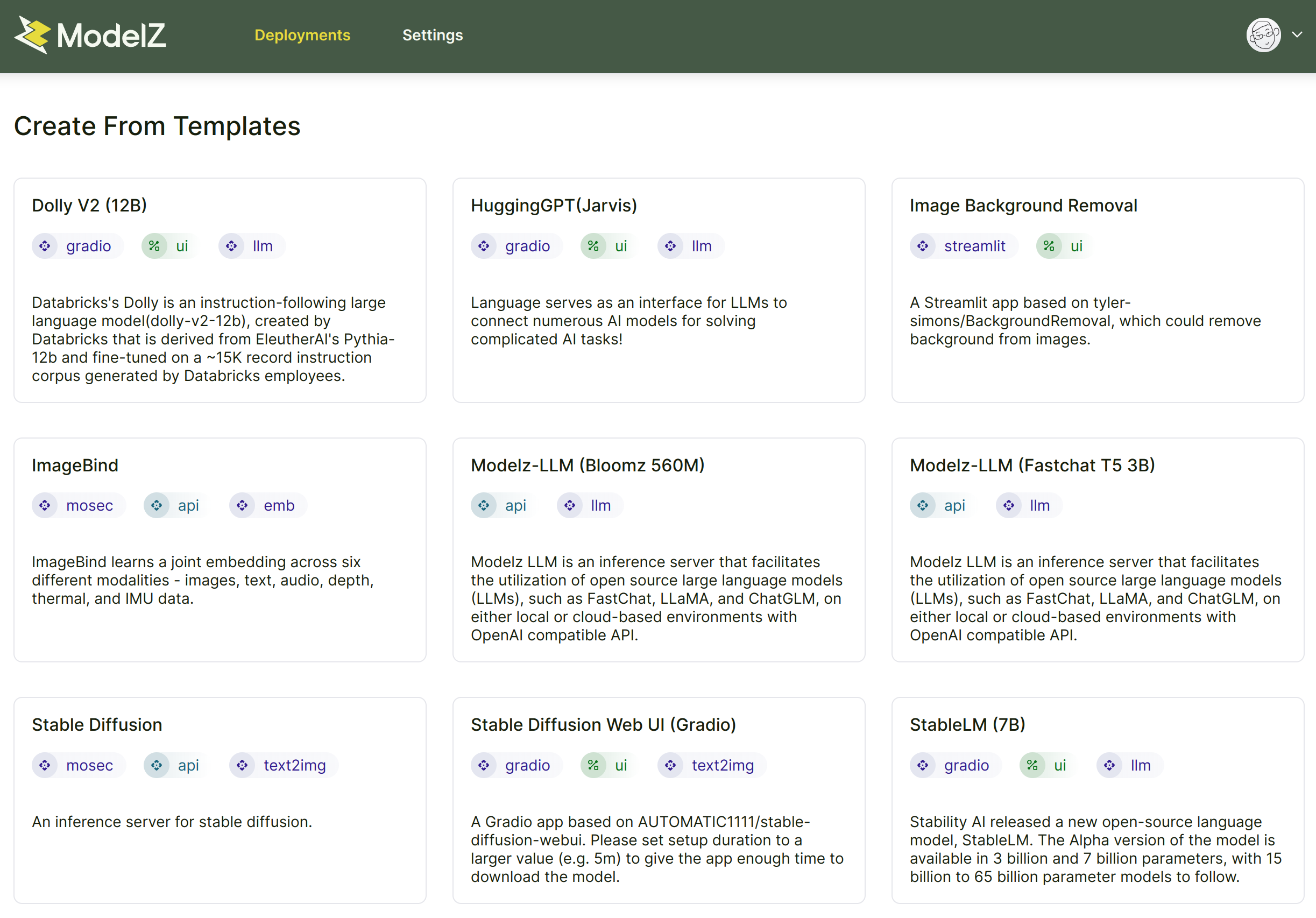

Templates on ModelZ

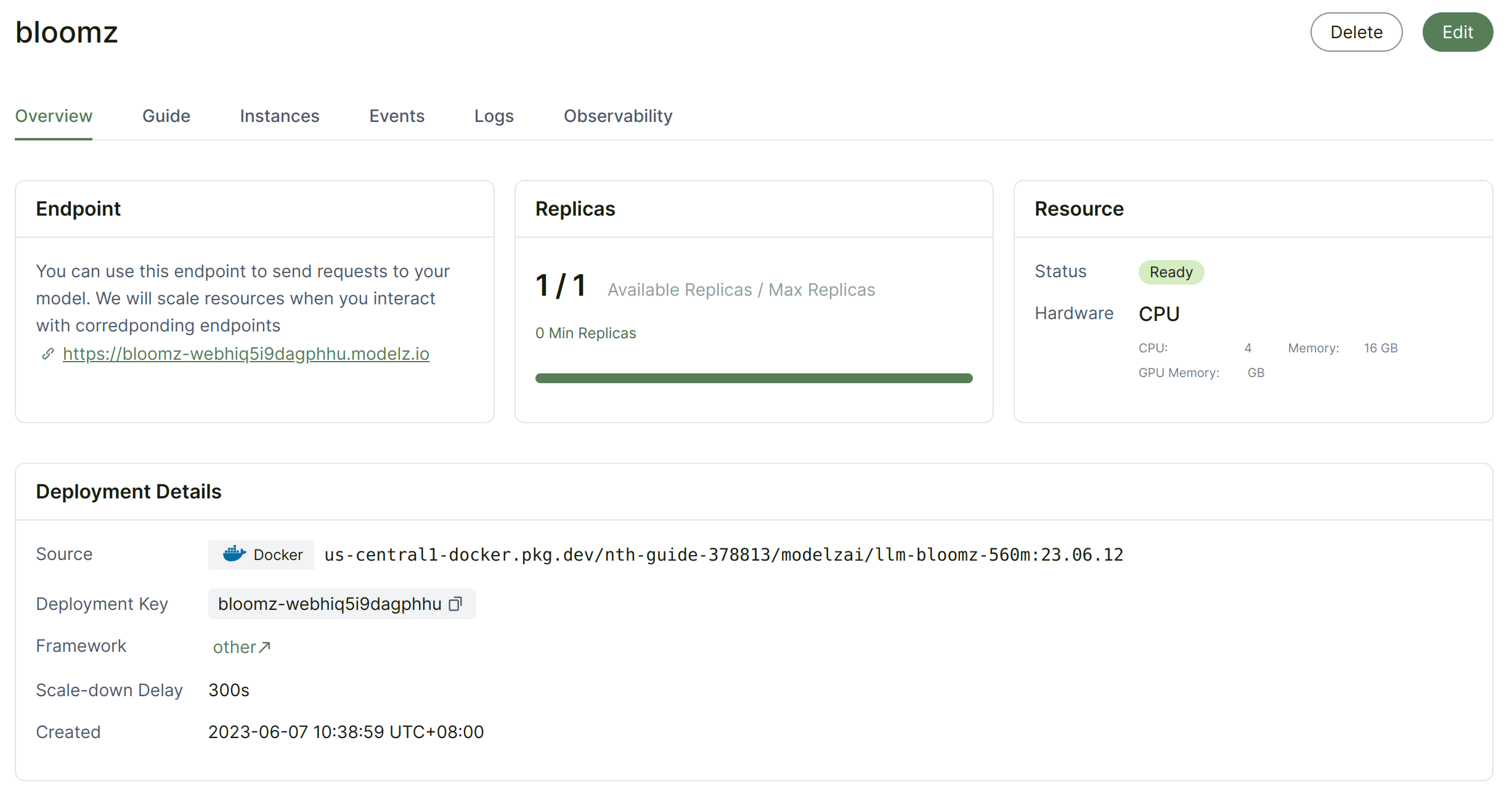

This is an example of the complete workflow for creating an inference deployment on the ModelZ Beta platform with the Bloomz 560M template. After the model service is created, its details are available in the user interface:

Details

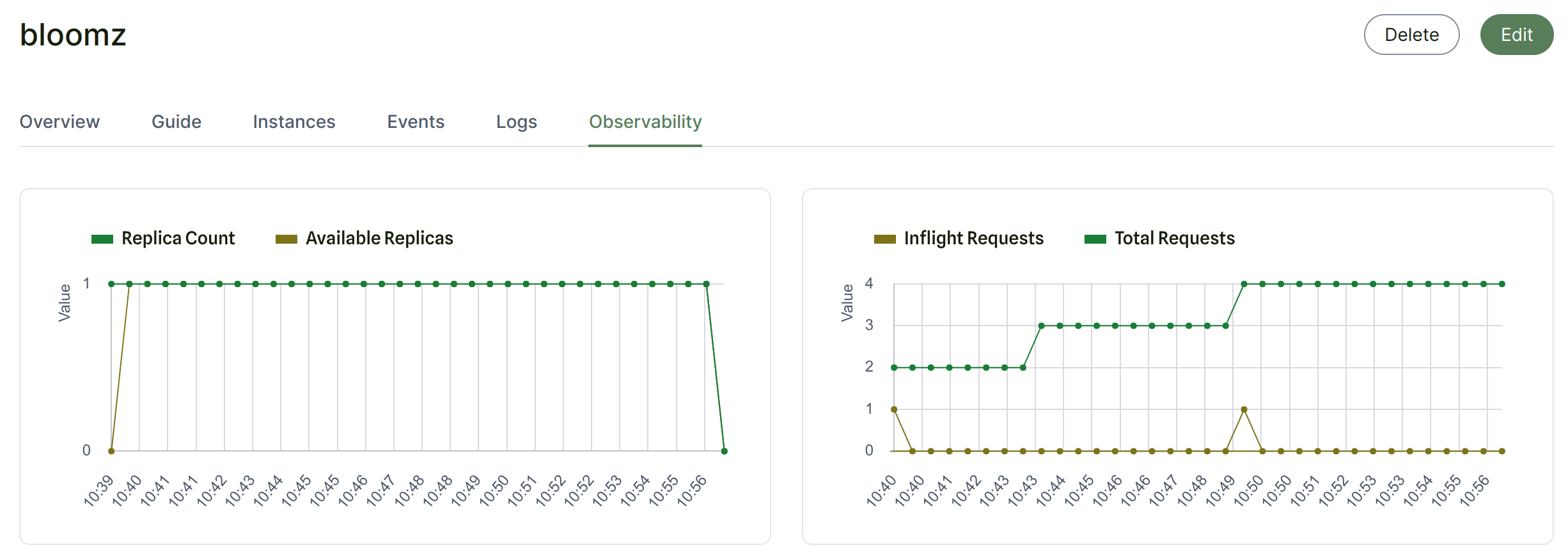

The UI displays logs, events (such as deployment autoscaling events), and metrics (such as total requests and requests in progress). You also get documentation and a usage guide for the model.

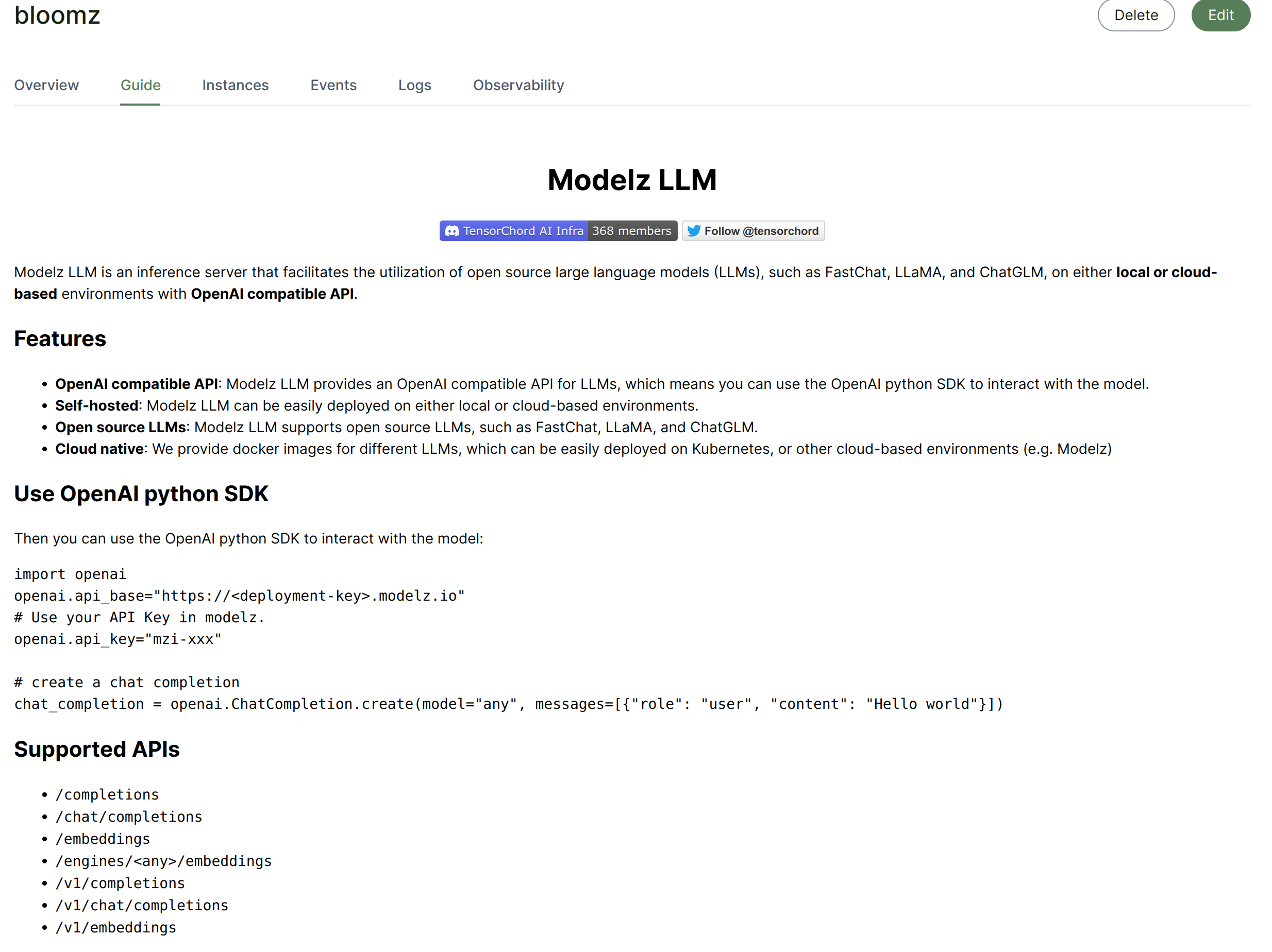

Model documentation and usage guide

The Bloomz 560M template is powered by modelz-llm, which provides an OpenAI-compatible API for the model, so you can call the model directly with the OpenAI Python package or LangChain. First, get the endpoint and API key from the dashboard.

```python
# Note: openai.ChatCompletion is the pre-1.0 interface of the openai package.
import openai

# Use the endpoint of your own deployment.
openai.api_base = "https://bloomz-webhiq5i9dagphhu.modelz.io"
# Use your API Key in modelz.
openai.api_key = "mzi-xxx"

# create a chat completion
chat_completion = openai.ChatCompletion.create(
    model="any",
    messages=[{"role": "user", "content": "Hello world"}],
)
```
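For readers who prefer not to depend on the OpenAI package, the same call can be sketched as a plain HTTP request using only the Python standard library. This is a minimal sketch under assumptions, not documented ModelZ behavior: the `/chat/completions` path and the `Bearer` authorization header mirror what the pre-1.0 OpenAI client sends when `api_base` is set as in the article's example, and `build_chat_request` and `send` are hypothetical helper names. The endpoint and key are the article's placeholders.

```python
import json
import urllib.request

# Placeholders copied from the article's example; substitute your own values.
ENDPOINT = "https://bloomz-webhiq5i9dagphhu.modelz.io"
API_KEY = "mzi-xxx"


def build_chat_request(endpoint, api_key, messages, model="any"):
    """Build (but do not send) an OpenAI-style chat completion request."""
    # Assumption: the path mirrors what openai<1.0 appends to api_base.
    url = endpoint.rstrip("/") + "/chat/completions"
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def send(request, timeout=60):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_chat_request(
    ENDPOINT, API_KEY, [{"role": "user", "content": "Hello world"}]
)
# reply = send(request)  # uncomment to call a live deployment
```

Building the request separately from sending it makes it easy to inspect the URL, headers, and payload before any GPU time is consumed.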

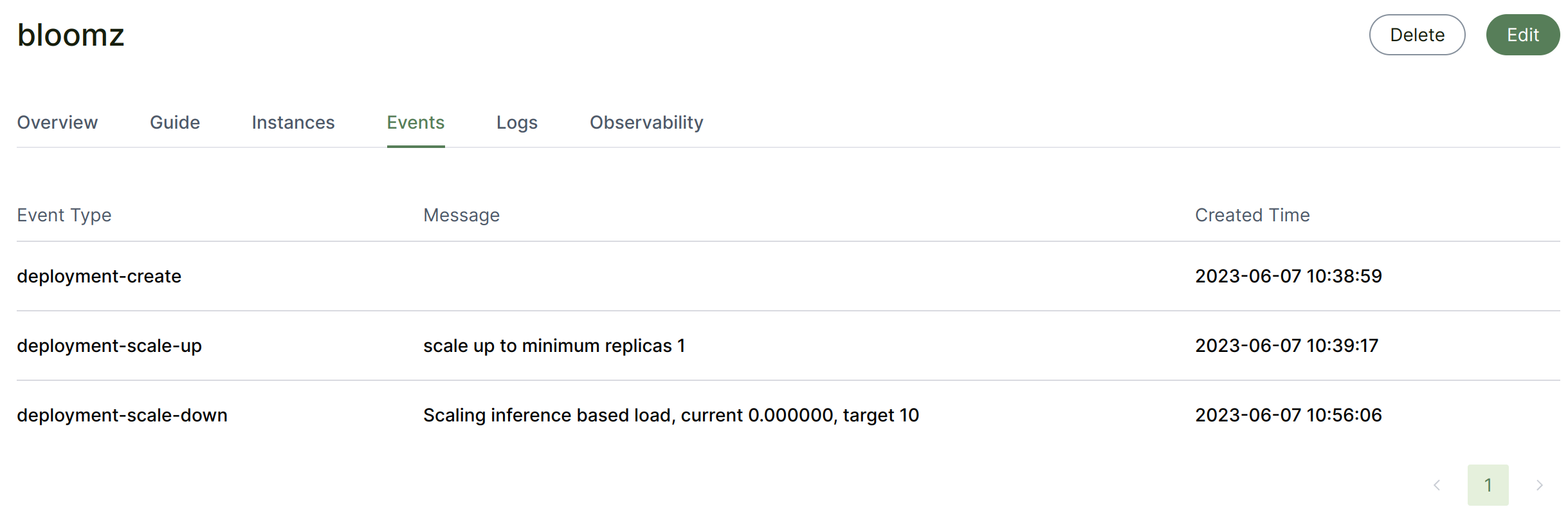

Serverless

The model service scales down to 0 after a period of inactivity (configurable on the creation page). Autoscaling events and metrics are available in the UI:

Scaling events

Metrics
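The scale-to-zero behavior can be pictured with a toy decision rule. This is only an illustrative sketch, not ModelZ's actual autoscaler: the function name `desired_replicas` and all of its parameters are hypothetical, and the rule assumes a single idle timeout measured in seconds and that an incoming request brings the service back up.

```python
def desired_replicas(in_flight, idle_seconds, idle_timeout, current):
    """Toy scale-to-zero rule; not ModelZ's actual autoscaler."""
    if in_flight > 0:
        # Traffic is present: keep at least one replica running.
        return max(current, 1)
    if idle_seconds >= idle_timeout:
        # No traffic for the whole idle window: release the GPU.
        return 0
    # Idle, but the timeout has not elapsed yet: leave things as they are.
    return current


# One replica, no requests for 400 s, 300 s timeout: scale to zero.
idle_result = desired_replicas(in_flight=0, idle_seconds=400, idle_timeout=300, current=1)
# Scaled to zero, then two requests arrive: bring one replica back.
wake_result = desired_replicas(in_flight=2, idle_seconds=0, idle_timeout=300, current=0)
```

A longer idle timeout in this model trades extra GPU time for fewer restarts from zero.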

Community

ModelZ builds on envd, mosec, modelz-llm, and many other open source projects. If you’re interested in joining the ModelZ community, here’s how to get involved:

- Join the ModelZ Discord community: We have a Discord community where you can connect with other developers, ask questions, and share your knowledge and expertise.

- Contribute to open source projects: ModelZ is built on top of many open source projects such as envd, mosec, and modelz-llm. If you’re interested in contributing to these projects, you can check out their GitHub repositories and start contributing.

- Share your models and projects: If you’ve built a machine learning model or project using ModelZ, we’d love to hear from you! You can share your project using the hashtag #ModelZ, or mention @TensorChord on our Discord community or on Twitter.

If you have any questions or needs about ModelZ, you can also contact us by email: [email protected]

License

- This article is licensed under CC BY-NC-SA 3.0.

- Please contact me for commercial use.

