Original link: https://shyrz.me/news-21-quantifies-information/

22·09·18 Issue 21

Happy autumn, everyone! Thanks to the friends who enjoyed the previous issue.

This issue features three selected articles. “Why are you so busy?”, a post from Tom Lingham’s personal blog, reflects on the busyness of team leads, management, and decision-making. “How Claude Shannon’s concept of entropy quantifies information” introduces information entropy, the concept Claude Shannon proposed, and its applications. “The Trouble with DALL-E: Five Thoughts on Using Artificial Intelligence to Accelerate the Digital Art Market” analyzes, from an artist’s perspective, how AI image generation is affecting the art market and where it may be heading.

I hope they inspire you.

Why are you so busy?

→ Original link: Why are you so busy? Tom Lingham / Blog / August 29, 2022

Disclaimer: In order to protect personal identity, the details of the story in this article have been slightly changed.

“I’m so busy I barely have time to catch my breath, let alone do modeling exercises with my team.” That is what a colleague told me when I asked how he was doing after a long day. I believe he was sincere: he really was too busy. He fired off Slack messages and emails absent-mindedly. He often left meetings partway through with a “heading to my next meeting” note in the chat. He was visibly anxious whenever he was asked about the deadlines imposed on him. His team was clearly under strain, and two key members had already left. At least, that is how it looked to me, and I suspect others were quietly noticing too.

How terrible! He and his team were too busy to do their jobs properly, with potentially catastrophic consequences, and this is by no means an isolated case.

The system they were building was meant to replace an old system that exposed customers’ personal data. Customers’ names, ages, dates of birth, and identifying information such as gender and ethnicity were all accessible, in one way or another, through systems exposed to the public internet. This is not the kind of work where you can afford to skip security essentials.

The absurdity of being too busy

To me, the situation seemed absurd. He and his team were too busy to do the work properly or to any reasonable level of quality, which only made things worse. The company had set deadlines and scope, and it (almost gleefully) ignored requests for more time and resources. Being new to the role, he simply accepted that fate and pushed the team to deliver faster to make up for it. That meant sacrificing good engineering practice and, most disappointingly, the whole team’s quality of life. He didn’t realize it at the time, but…

As long as your team is doing its job well and keeps working on the next most important thing the business has prioritized, any pressure to deliver beyond the team’s capacity is objectively unreasonable.

If the following is true:

- Your team is following software engineering practices that have proven time and time again to be the hallmark of high-performance technical teams (think CI/CD and DevOps).

- You’ve modeled the team’s structure, work, and communication as effectively as possible (in terms of what you can influence).

- Your team is always working on the next most important thing (by business priority).

Then there are no levers left to pull that won’t lower the quality of life for you and your team. You need to think carefully about why you would pull them.

You can sacrifice quality, but then your team will have to deal with more unplanned work (from production issues), longer lead times, higher change failure rates, and so on. You can ask your team to work overtime, but unless they agree and are paid well for the extra hours (often not the case), you will soon find them leaving. They will go to more reasonable, more fulfilling, and better-paid work (something the games industry is slowly learning).

In this situation, the only reasonable levers the company has are to:

- Narrow the scope of work.

- Fund additional teams and/or restructure accordingly.

- Change delivery date.

You should only be purposefully busy

In some cases you do need to knuckle down. Maybe you are a startup that must finish a demo before a deadline to secure funding. Maybe you’re an ambitious team working hard for a big bonus. Whatever the case, these situations share one thing: each is in pursuit of a clear incentive.

If you are too busy, why is this? What are you and your team going after? If you’re too busy because of pressure from others, why do they think they should be able to demand more of you?

Are you too busy because the company expects things done by a certain date regardless of your team’s capacity, even though the team is delivering high-quality output and always working on the company’s next most important priority? Then that is a conversation you need to have with your boss and your stakeholders, because the expectation is most likely unreasonable.

Are you too busy because the team is dealing with legacy engineering-quality issues? Then that is a different conversation. The company should help free up capacity so the team can address these issues, or at least compensate the team generously for overtime until they are resolved. Failing that, it may be worth reflecting on why you joined the company in the first place.

Are you too busy because the team is dealing with engineering-quality issues that stem from your own decisions? That is a tougher conversation, but you still need to have it. Negotiate time to fix the quality issues and work through them with your colleagues. Doing so is usually in everyone’s best interest.

Summary

You and your team should never be so busy that you can’t work properly or start to hate your work. This is especially true if you are a team lead or senior leader. You should have free time to think on your own, to have organic conversations with colleagues, and to exchange ideas with them. Contrary to popular belief, back-to-back meetings are not a badge of honor but a red flag.

How Claude Shannon’s concept of entropy quantifies information

→ Original link: How Shannon Entropy Imposes Fundamental Limits on Communication / Kevin Hartnett / Quanta Magazine / September 6, 2022

To communicate a series of random events, such as coin flips, you need a lot of information, because the message has no structure. Shannon entropy measures this fundamental constraint.

If someone tells you a fact you already know, they’ve essentially told you nothing. But if they share a secret, it’s fair to say they’ve really communicated something.

This distinction is at the heart of Claude Shannon’s theory of information. Shannon introduced it in a landmark 1948 paper, “A Mathematical Theory of Communication.” The theory provides a rigorous mathematical framework for quantifying how much information is needed to send and receive a message accurately. That amount is determined by how uncertain the receiver is about what the intended message might say.

As an example, suppose I have a special coin with heads on both sides, and I flip it twice. How much information does it take to communicate the result? None at all, because before receiving the message you would already be completely certain that both flips came up heads.

In the second case, I flip a normal coin twice, one with heads on one side and tails on the other. We can communicate the result in binary code: 0 for heads and 1 for tails. There are four possible messages (00, 11, 01, 10), and each requires two bits.

So what’s the point? In the first case, you had complete certainty about the content of the message, and it took zero bits to transmit it. In the second case, you had a 1-in-4 chance of guessing the right answer (25% certainty), and the message needed two bits of information to resolve that ambiguity. More generally, the less you know about what a message will say, the more information it takes to convey.

Shannon was the first to explain this relationship mathematically with precision. He captured this in a formula that calculated the minimum number of bits needed to convey information—a threshold that came to be known as “Shannon entropy.” He also showed that if the sender uses less than the minimum number of bits, the message will inevitably be distorted.
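For reference, the formula is usually written as below. Here $p_i$ is the probability of the $i$-th possible message, and the base-2 logarithm gives the answer in bits. The article does not print the formula; this is the standard textbook form, evaluated for the two coin scenarios above. When all $n$ outcomes are equally likely, it reduces to $\log_2 n$.

$$
\begin{aligned}
H(X) &= -\sum_{i} p_i \log_2 p_i \\
H_{\text{two-headed}} &= -1 \cdot \log_2 1 = 0 \text{ bits} \\
H_{\text{fair}} &= -4 \cdot \tfrac{1}{4} \log_2 \tfrac{1}{4} = \log_2 4 = 2 \text{ bits}
\end{aligned}
$$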

“He had this great intuition that information is maximized when you’re most surprised about learning about something,” says Tara Javidi, an information theorist at the University of California, San Diego.

The term “entropy” is borrowed from physics, where entropy is a measure of disorder. A cloud has higher entropy than an ice cube, because a cloud allows many more ways to arrange water molecules than the ice’s crystal structure does. Analogously, a random message has high Shannon entropy, since its information can be arranged in many possible ways. A message that obeys a strict pattern has low entropy. There are also formal similarities in how entropy is calculated in physics and in information theory. In physics, the entropy formula involves taking the logarithm of the number of possible physical states. In information theory, it is the logarithm of the possible event outcomes.

The logarithmic formula for Shannon entropy belies the simplicity of what it captures. Another way to think about Shannon entropy is as the number of yes-or-no questions needed, on average, to determine the content of a message.

For example, imagine two weather stations, one in San Diego and the other in St. Louis. Each wants to send the other its seven-day forecast. San Diego is almost always sunny, so you can be highly confident about what its forecast will say. The weather in St. Louis is more uncertain: the chance of a sunny day is closer to 50-50.

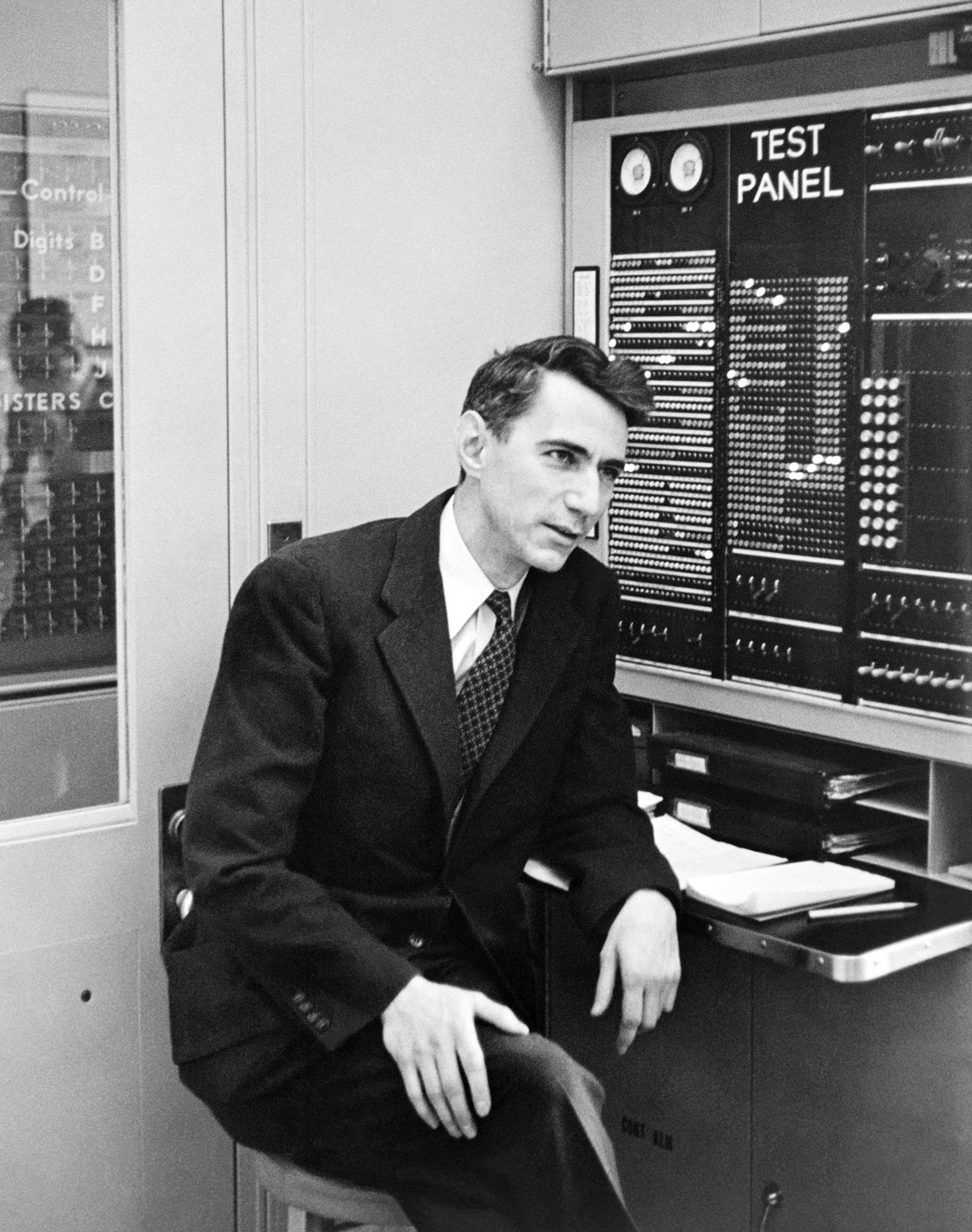

Claude Shannon at Bell Labs in 1954 / Estate of Francis Bello / Science Source


How many yes-or-no questions would it take to transmit each seven-day forecast? For San Diego, a profitable first question might be: Are all seven days of the forecast sunny? If the answer is yes (and there’s a good chance it will be), you’ve determined the entire forecast with a single question. But for St. Louis, you almost have to work through the forecast one day at a time. Is the first day sunny? What about the second?

The higher the certainty about the content of the information, the fewer yes or no questions you need to ask to determine it, on average.
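One rough way to see the gap is to treat each forecast as seven independent sunny/not-sunny days and add up the entropy of each day. This Python sketch assumes a 95% daily chance of sun for San Diego and 50% for St. Louis. Both numbers are illustrative stand-ins, not figures from the article, and real weather days are not independent.

```python
import math

def binary_entropy(p: float) -> float:
    """Entropy in bits of a yes/no event that is 'yes' with probability p."""
    if p in (0.0, 1.0):
        return 0.0  # a certain outcome carries no information
    return p * math.log2(1 / p) + (1 - p) * math.log2(1 / (1 - p))

def forecast_entropy(p_sunny: float, days: int = 7) -> float:
    """Average bits needed for a forecast of independent sunny/not-sunny days."""
    return days * binary_entropy(p_sunny)

# Illustrative probabilities (assumptions, not data from the article).
san_diego = forecast_entropy(0.95)
st_louis = forecast_entropy(0.5)
print(f"San Diego: {san_diego:.2f} bits")  # San Diego: 2.00 bits
print(f"St. Louis: {st_louis:.2f} bits")   # St. Louis: 7.00 bits
```

Under these assumptions, San Diego’s forecast carries under a third of the information in St. Louis’s. St. Louis needs a full bit per day.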

As another example, consider two versions of a letter-guessing game. In the first, I pick a letter at random from the English alphabet, and you try to guess it. If you use the best guessing strategy, it will take you an average of 4.7 questions. (A useful first question is: “Is the letter in the first half of the alphabet?”)
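The 4.7 figure is $\log_2 26$, the entropy of one uniformly random letter. This Python sketch uses `total_questions`, a hypothetical helper written for illustration. It compares that bound with the halving strategy from the parenthetical above. Because 26 letters cannot be split perfectly evenly at every step, halving a single letter averages slightly more than 4.7 questions. This is consistent with entropy being a floor that strategies can approach but not beat.

```python
import math

def total_questions(n: int) -> int:
    """Sum, over n equally likely candidates, of the yes/no questions needed
    to isolate each one when every question splits the remaining set in half."""
    if n <= 1:
        return 0
    first_half = (n + 1) // 2
    second_half = n // 2
    # Every candidate still in play hears this question, then we recurse.
    return n + total_questions(first_half) + total_questions(second_half)

letters = 26
average_questions = total_questions(letters) / letters
entropy_bits = math.log2(letters)
print(f"entropy: {entropy_bits:.2f} bits")            # entropy: 4.70 bits
print(f"halving: {average_questions:.2f} questions")  # halving: 4.77 questions
```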

In the second version of the game, instead of guessing random letters, you guess the letters of actual English words. Now you can tailor your guesses to exploit the fact that some letters appear more often than others (“Is it a vowel?”). You can also use the fact that knowing one letter helps you guess the next (q is almost always followed by u). Shannon calculated the entropy of English to be 2.62 bits per letter (or 2.62 yes-or-no questions). That is far below the 4.7 bits needed if every letter appeared at random. In other words, patterns reduce uncertainty, which makes it possible to communicate a great deal with relatively little information.
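Shannon’s 2.62 figure comes from modeling context, such as q being followed by u. A simpler estimate counts only how often each letter appears (an order-0 model). Even that drops below the uniform 4.7 bits, though for ordinary English text it usually lands a little above 4 bits because it ignores context. The Python sketch below is an illustration under those assumptions, not Shannon’s method. The `letter_entropy` helper and the sample sentence are hypothetical.

```python
import math
from collections import Counter

def letter_entropy(text: str) -> float:
    """Order-0 estimate: bits per letter from letter frequencies alone (ASCII a-z)."""
    letters = [c for c in text.lower() if "a" <= c <= "z"]
    counts = Counter(letters)
    total = len(letters)
    # sum of p * log2(1/p) over the letters that actually occur
    return sum((n / total) * math.log2(total / n) for n in counts.values())

# Hypothetical sample; a longer English text gives a more stable estimate.
sample = (
    "the quick brown fox jumps over the lazy dog while the quiet queen "
    "questions every unusual request from the committee"
)
print(f"{letter_entropy(sample):.2f} bits per letter vs {math.log2(26):.2f} uniform")
```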

Note that in examples like these, you can ask better or worse questions. Shannon entropy sets an inviolable floor: it is the absolute minimum number of bits, or yes-or-no questions, needed to convey a message.

“Shannon showed there is something like the speed of light, a fundamental limit,” Javidi said. “He showed that Shannon entropy is a fundamental limit on how much we can compress a source without risking distortion or loss.”

Today, Shannon entropy serves as a yardstick in many applied settings, including information compression. For example, you can compress a large movie file because pixel colors have statistical patterns, much as English words do. Engineers can build probabilistic models of how pixel colors change from one frame to the next. These models make it possible to calculate the Shannon entropy by assigning weights to the patterns and then taking the logarithm of the weights across all the possible ways the pixels could appear. That value tells you the limit of “lossless” compression: the absolute most a movie can be compressed before you start losing information about its content.
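Here is a small, hedged illustration of the same principle. It uses zlib (DEFLATE), a general-purpose compressor, not a video codec or an entropy-optimal coder. The two inputs are invented stand-ins: a repeated phrase for patterned data and operating-system random bytes for structureless data. The patterned input should shrink dramatically. The random input should not shrink at all, and zlib’s output ends up slightly larger than its input.

```python
import os
import zlib

def compressed_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower means more compressible)."""
    return len(zlib.compress(data, 9)) / len(data)

size = 100_000
# Stand-in for structured data: a 25-byte phrase repeated to fill `size` bytes.
patterned = (b"sunny sunny cloudy sunny " * (size // 25 + 1))[:size]
# Stand-in for structureless data: bytes from the OS random source.
random_bytes = os.urandom(size)

print(f"patterned: {compressed_ratio(patterned):.3f}")
print(f"random:    {compressed_ratio(random_bytes):.3f}")
```

Because zlib is not an optimal coder, its ratio on real data sits above the entropy limit. That gap is the kind the next paragraph describes.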

The performance of any compression algorithm can be compared to this limit. If you are far from it, you are motivated to work hard to find better algorithms. But if you get close to it, you know that the information laws of the universe keep you from doing better.

Related reading

- Scientists who developed a new way to understand communication

- How Claude Shannon Created the Future

- How Maxwell’s Demons Continue to Shock Scientists

The Trouble with DALL-E: Five Thoughts on Using Artificial Intelligence to Accelerate the Digital Art Market

→ Original link: The Trouble with DALL-E / Kevin Buist / Outland / August 11, 2022

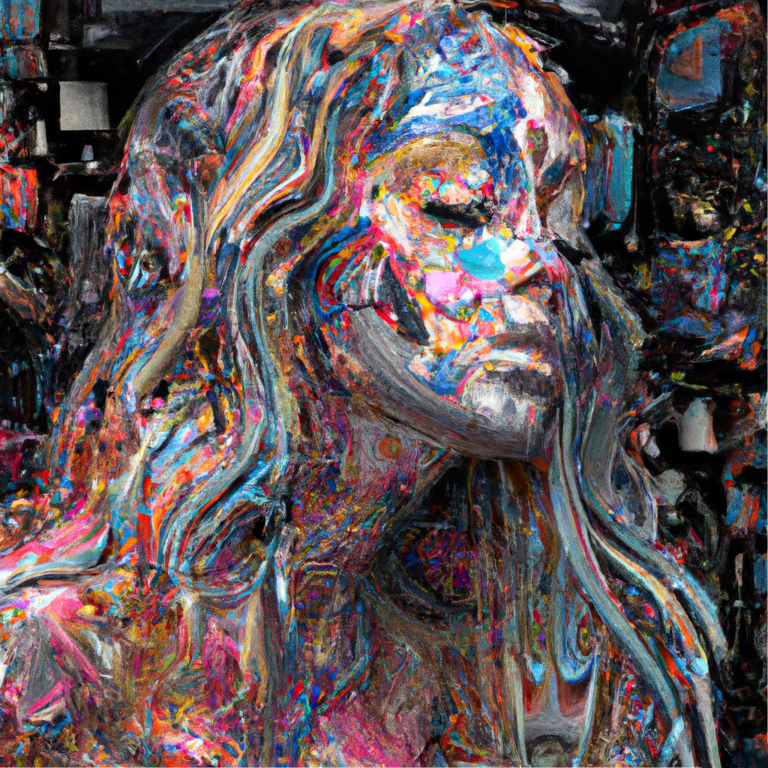

On July 20, OpenAI announced that images made with its generator DALL-E could be used for commercial projects. I anticipated that hordes of creators might lazily use AI to churn out low-effort NFTs, so I tweeted that minting raw AI output as NFTs was a bad idea. I was ridiculed and called a “Luddite,” after the English textile workers who smashed machinery in the early nineteenth century. Maybe I was too quick to judge? So I asked people to tag artists who use AI image generators, whether DALL-E, Midjourney, or something else, to make NFTs. The tweet drew many enthusiastic replies, with dozens of artists tagging themselves and others. Many said they refined or manipulated the AI output before finalizing it as an NFT. Others simply minted whatever the AI gave them. However, none of these responses was especially compelling as art. Most seemed to showcase the capabilities of the software rather than those of the artist. As artists begin incorporating this tool into their practice, polishing or adding to AI images doesn’t solve the fundamental problems that arise. Here are five thoughts on how artists might find (or fail to find) meaningful ways to use AI image generators.

Small stories tell big truths

A few artists replied to my tweet with images that looked painterly. Their smudges and rich ochre tones look flat on the small glass screens of phones and computers. These are not paintings, nor are they records of the act of painting. One of the great pleasures of looking at a painting is tracing the construction of the image with your eyes. Why did the artist choose this arrangement? What did they change as they worked through the image? What happened quickly, and what happened slowly? We can follow the artist’s path through the image. AI images, even painterly ones, don’t offer that experience. They are simply the average of a huge library of images. The difference is like that between one person’s life story and a macro-level demographic study. Both describe human life, but one conveys universal truths through particulars. The other offers a single picture that a black box has produced out of countless generalities. When looking at AI images, we cannot match our own subjectivity as viewers to that of an artist as creator. What we see are averages, not specific human experiences.

Art is a record of how an artist exists in the world

There is a debate on Twitter about whether the output of AI image generators is art. This debate is not interesting. Of course it can be: if an artist calls an image art, it is art. The more pressing questions are these: why the claim is made, what it does to our response to the image, and how it relates to the larger context of the artist’s work. The underlying problem with declaring AI images to be art is what they lack. An artwork is a record of the strategies an artist has devised. An image or object carries the story of its own making, as well as the story of its maker. More broadly, it is a record of how an artist exists in the world: how they make things and how they find their voice. This holds for JPGs, paintings, and marble sculptures alike; the medium doesn’t matter. The problem with AI image generators is that the strategy for producing images is hidden. In the case of DALL-E, it is a proprietary formula owned by OpenAI. From such work, the only thing we can say about how an artist exists in the world is that they are a client of OpenAI and a user of its software. Tweaking prompts to optimize DALL-E’s output is akin to playing a video game. That doesn’t mean it can’t be art. But artists should demand more agency. Rather than settling for being player characters, they should build their own games, or systems of comparable complexity.

Now everything can be a genre

Some of the sharpest criticism of AI image generators has come from artists such as David O’Reilly. They argue that these companies trained their models on the work of countless uncredited artists, illustrators, and photographers, which amounts to theft of knowledge and art. This seems short-sighted, since AI is only automating one important aspect of image-making. Humans are visual sampling machines: we quote a vocabulary of images the same way we speak with words we didn’t invent. A completely original image would be illegible. All pictures, even abstract ones, draw on a language of visual references and quotations from other artists.

Still, something is changing. In the past, only a limited set of words, phrases, and narrative archetypes could summon the visual conventions of a genre. For example, the words “cowboy” and “pirate” conjure images in our minds that are shaped by our shared understanding of those genres. But the words and phrases that can now be visualized in a generic way are no longer limited to our loose list of genres. Now we can summon images from any linguistic expression, and every possible phrase becomes a micro-genre. That’s why O’Reilly’s charge of plagiarism misses the mark. AI is not exactly copying artists; it uses their images to build a visual summary of everything language can express.

All images already exist

If every possible linguistic expression becomes a micro-genre, it is easy to conclude that all possible images already exist. On this view, an AI image generator is really a kind of search engine. This recalls a 1982 statement by the conceptual artist Sherrie Levine, reflecting on how the world is “filled to suffocating” with images. “We know that a picture is but a space in which a variety of images, none of them original, blend and clash,” she wrote. “A picture is a tissue of quotations drawn from the innumerable centers of culture.” This is how she justified an artistic practice in which appropriation flirts with plagiarism.

The image saturation of the late 20th century looks quaint compared with the sheer volume of images we face today. If we were suffocating in 1982, by 2022 we have been drowning for decades. More practically, this new technology means that individual artists no longer need to assemble image-making’s “tissue of quotations” themselves. That work has already been done. Image-making becomes search, like a visual version of Borges’ “The Library of Babel.” That story describes an infinite library in which every possible combination of letters is printed across an endless series of volumes. The greatest works of literature already exist somewhere in it; the library’s inhabitants just need to find them.

AI imagery undermines the potential of NFTs

An NFT is a certificate that should signify three things. First, an artist made the work shown in the certificate. Second, the certificate and the media it points to are unique. Third, a particular person owns that media. AI-generated NFTs break two of these three principles. The artist did not make the image; it would be more accurate to say they found it. The piece is not unique but a sample from an infinite stream of images. Of course, the token can still be owned. Perhaps an AI-generated NFT is like a photo of a fisherman proudly showing off his catch: evidence of a discovery.

My unease about minting AI images as NFTs stems from an unanswered question: what do NFTs mean culturally? Perhaps the ease of minting AI output signals that the NFT has reached a dead end. It becomes a financial asset attached to a blurry image of a painting, with nothing to do with the rich human experience of making a painting or standing in front of one. I hope NFTs can represent art that goes beyond an averaged connection between pictures and text. DALL-E is a powerful tool that artists should absolutely use. But artists should also refuse to settle for being clients of tech companies. They should take all kinds of tools, old and new, and push them past their limits. If AI can automate picture-making, why not also use an AI text generator to write the prompts and an AI code generator to write the smart contracts? Artists could even train an AI to analyze the market success of past NFT drops. The question of which parts of artistic practice AI can replace is both terrifying and exciting, but artists themselves should drive that inquiry.

Quick Facts

- Design: User Experience Checklist for Interface Designers

- Philosophy: How to Read Philosophy in an Adversarial Way

- Business: Super.so founder @traf’s short recap of his entrepreneurial journey

- Art: Masterpiece Story: Michelangelo’s David

- Product: User Experience Core: 105 Cognitive Biases in Product Development

Donate

Shyrism.News is a curated biweekly newsletter covering fresh anecdotes, hot topics, cutting-edge technology, and other things about life and the future. Through careful, meticulous selection, we push back against algorithms that look strong but are hollow inside. We counter cold, rigid code with heartfelt words.

If you find “Shyrism.News” valuable, please support and share it with your friends or social networks.

Thank you to the friends who tip. Please be sure to include your name or email address in the note, so your record isn’t lost when we clean up our accounts.


This site is for inclusion only, and the copyright belongs to the original author.