About half a year ago, Disco Diffusion, an AI painting tool, spread from the text-to-image developer community and the design industry to ordinary users. Its bare-bones interface, full of English and code, did not put people off, because even someone with no art skills could type a short paragraph into the input box and guide the AI to a striking painting.

Generated by Simon_Awen with Disco Diffusion; the prompt he entered was ? ? ⛅️ | Used with the author’s permission

AI painting tools have evolved far faster than anyone imagined over the past six months. After Disco Diffusion came Midjourney, which runs in Discord group chats, OpenAI’s realistic DALL·E 2, and the open-source Stable Diffusion. They are more powerful and more user-friendly, and generating a picture now takes as little as a few seconds.

AI painting has grown steadily more popular. On some Chinese e-commerce platforms, a few merchants are even selling tutorials.

Today we round up the three tools that are easiest for ordinary users: one has a variant dedicated to anime-style (“two-dimensional”) art; one has a strong community atmosphere and produces notably artistic images; and one comes from a Chinese team, so this time you can express your creativity in Chinese!

Stable Diffusion

Features: Widely considered the strongest AI painting tool. It is completely open source, and many modified versions (“magic revisions”) are in circulation, such as Waifu Diffusion, which specializes in anime-style (“two-dimensional”) portraits.

Preparation: What follows covers DreamStudio, the online version of Stable Diffusion. It needs no special hardware; just open https://ift.tt/P4hA0sX in a browser.

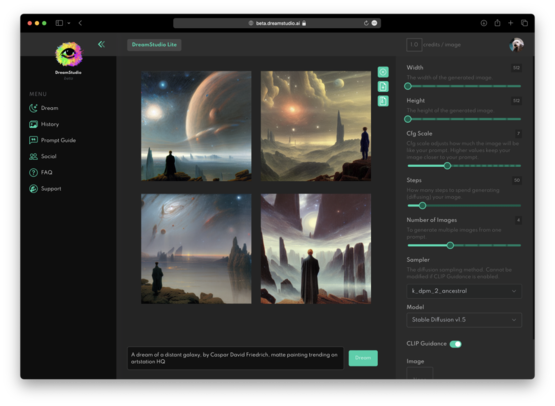

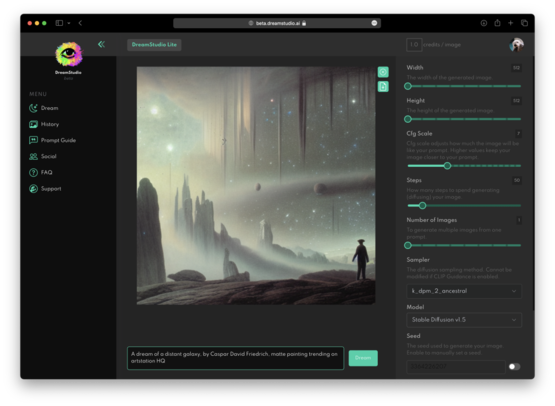

Compared with Disco Diffusion, the interface of the online Stable Diffusion tool is simple and friendly. Open the website and register, write a description in the input box at the bottom, and click “Dream” to generate an image. The wait is only a few seconds.

Prompt: “A dream of a distant galaxy” (subject), “by Caspar David Friedrich” (artist), “matte painting trending on artstation HQ” (style) | Interface screenshot

There are also a series of adjustment options on the right side of the interface, from top to bottom:

- Width, Height: the width and height of the generated image;
- Cfg Scale: roughly, how closely the image must match the descriptor (prompt); values above 20 tend to produce distorted results;
- Steps: the number of iterations the model uses to generate the picture. Each additional iteration gives the AI another chance to compare the prompt with the current result. The default is 50;
- Number of images: how many images to generate;
- Sampler: the sampling method used by the diffusion denoising algorithm;
- Seed: the random seed. The system picks a different seed each time, so copying an artist’s prompt word for word will not reproduce the same picture. If the artist also shares the exact seed, you can reproduce it.
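To make the options above concrete, here is a minimal Python sketch that models them as a plain data structure. It is not DreamStudio’s API: the class name `GenerationSettings`, its field names, the 512-pixel size, the Cfg Scale default, and the sampler name are all hypothetical. The only facts taken from the text are the default of 50 steps and the rule of thumb that a Cfg Scale above 20 tends to distort. The second half uses Python’s own random number generator as a stand-in for the model, to illustrate why sharing a seed makes a result reproducible.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class GenerationSettings:
    """Hypothetical container mirroring the options in the sidebar."""
    width: int = 512            # assumed default, not stated in the article
    height: int = 512           # assumed default, not stated in the article
    cfg_scale: float = 7.0      # assumed default; how closely to follow the prompt
    steps: int = 50             # default value mentioned in the article
    num_images: int = 1
    sampler: str = "k_lms"      # placeholder sampler name
    seed: Optional[int] = None  # None means "let the system pick one"

    def warnings(self) -> List[str]:
        """Return human-readable warnings for risky settings."""
        notes = []
        if self.cfg_scale > 20:
            notes.append("cfg_scale above 20 tends to distort the image")
        return notes


def starting_noise(seed: int, count: int = 4) -> List[float]:
    """Stand-in for the model's initial noise: same seed, same numbers."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(count)]


settings = GenerationSettings(cfg_scale=25, seed=1234)
print(settings.warnings())
print(starting_noise(1234) == starting_noise(1234))  # same seed: identical noise
print(starting_noise(1234) == starting_noise(4321))  # different seed: different noise
```

A real diffusion model draws far more random numbers than this, but the principle is the same: the seed fixes the starting noise, so identical settings plus an identical seed lead to the same picture.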

With the basic settings in place, you come to the most critical step in generating a painting: writing the prompt. How should you write it? The official introductory tutorial suggests:

- Start with the subject of your image, such as “a panda” or “a warrior with a sword”. With only a plain description like this the style of the result is very random, so add a style description to constrain it;
- Commonly used styles include photorealism, oil painting, pencil drawing, and concept art. You can specify whether you want a painting (“a painting of” + raw prompt) or a photo (“a photograph of” + raw prompt);
- Add the names of artists with distinctive styles, such as Da Vinci, Michelangelo, or Monet, to further clarify and strengthen the style. The tutorial also recommends mixing several artists, which may blend into something even more striking;
- Add a few specific modifiers as finishing touches. For example, for more realistic lighting, add “Unreal Engine”. Other suggested keywords include “surrealism”, “sharp focus”, “8k”, and even “the most beautiful image ever seen”.
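The layering described in the tutorial (subject, then medium, then artists, then finishing modifiers) can be sketched as a small Python helper. This is only an illustration: the function `build_prompt` and its parameter names are hypothetical, and joining artists with “and” is one assumed way of mixing them, not an official syntax.

```python
from typing import Sequence


def build_prompt(subject: str,
                 medium: str = "",
                 artists: Sequence[str] = (),
                 modifiers: Sequence[str] = ()) -> str:
    """Assemble a prompt as: [medium of] subject, by artists, modifiers."""
    # "a painting" + "a panda" becomes "a painting of a panda".
    parts = [f"{medium} of {subject}" if medium else subject]
    if artists:
        parts.append("by " + " and ".join(artists))
    parts.extend(modifiers)
    return ", ".join(parts)


print(build_prompt(
    "a warrior with a sword",
    medium="a painting",
    artists=["Monet", "Michelangelo"],
    modifiers=["sharp focus", "8k"],
))
# prints: a painting of a warrior with a sword, by Monet and Michelangelo, sharp focus, 8k
```

Keeping the pieces separate makes it easy to hold the subject fixed and swap only the artist or the modifiers between generations, which is how most people explore styles.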

The online version currently offers limited tuning; for example, it cannot generate images in batches. For a better experience you can deploy the open-source Stable Diffusion on your own computer. This requires a graphics card with 6 GB of video memory or more, such as an RTX 2060. We will not go into the details here.

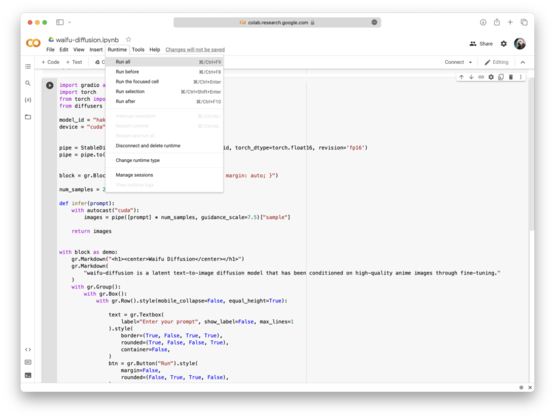

Since Stable Diffusion was open-sourced, modified versions (“magic revisions”) of it have appeared rapidly, and Waifu Diffusion has been the most popular recently. “Waifu” refers to female characters in comics, animation, and games whom some fans like enough to call their wives. As the name suggests, this is a model dedicated to generating “paper people”, that is, anime-style characters.

Screenshot of the interface

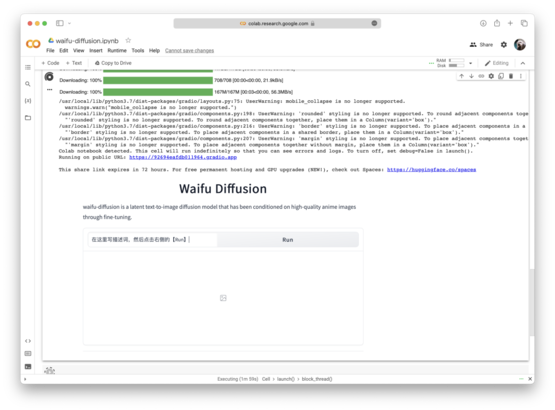

Just open https://ift.tt/sOm29Mj, click “Run All” at the top, and wait a few minutes for the prompt input box to appear.

Screenshot of the interface

For prompt ideas, search Twitter for “waifudiffusion ALT” to see images that other users have posted. If an image carries an ALT badge, click it to see the prompt that generated the image.

Screenshot of the interface

A great many people are experimenting with AI painting on Stable Diffusion: daily active users across all channels exceed 10 million in total. Founder Emad Mostaque said, “Sooner or later we will reach the stage of generating 1 billion images per day, especially once animation generation is unlocked.”

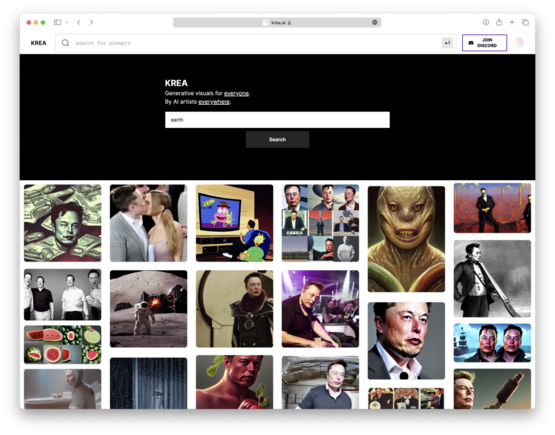

Some people have even built search engines for AI painting keywords, such as KERA.

Screenshot of the interface

KERA currently indexes millions of keywords. Searching for “Elon Musk”, for example, returns the results above; click any result that interests you to see the corresponding prompt.

Charging standard: You get roughly 200 free generations, after which you need to buy credits (the more complex the generation and the larger the image, the more credits it consumes).

Copyright requirements: You may use your images commercially. Images generated through DreamStudio, however, are automatically released under CC0 1.0, so the service provider Stability.ai can also use your images without paying you or even asking for your consent, and they become royalty-free resources in the public domain. If you deploy the open-source Stable Diffusion yourself and use your own GPU, the copyright belongs to you.

Midjourney

Features: You generate images while chatting, the community atmosphere is strong, and the paintings have a distinctly artistic feel.

Preparation: Have a computer ready, register an account on the chat app Discord, and open https://ift.tt/N6QlqzF.

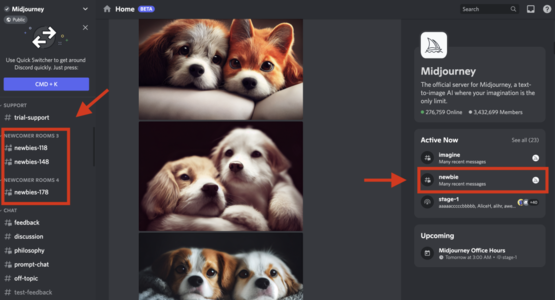

After following the link above into the official server, pick any #newbies channel from the channel list on the left. Type /imagine in the message box, enter your prompt in the field that appears, and press Enter. The Midjourney bot generates 4 images in 60 seconds.

The #newbies channels are inside the red box | Midjourney page screenshot

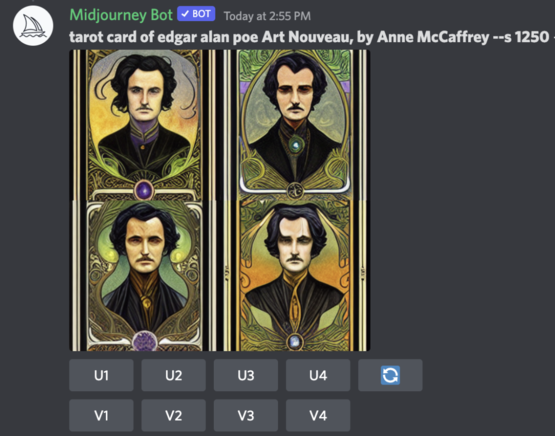

Once the images are generated, 4 “U” buttons and 4 “V” buttons appear below them. U stands for upscale (increase the resolution of the chosen image); V stands for variations (generate four new images in the style of the chosen image). Click them to refine the result further.

Prompt: Edgar Allen Poe’s Tarot, Art Nouveau, Anne McCaffrey --s 1250 | Midjourney page screenshot

Midjourney lives in a lively chat room, and newcomers to Discord may find it a little confusing. A few things to note: first, when you try it in a public channel, your results are visible to everyone! Second, your request can get buried in a fast-moving stream of messages, so don’t wander off! If you really can’t find it, don’t panic: click the inbox in the upper right corner to retrieve your request.

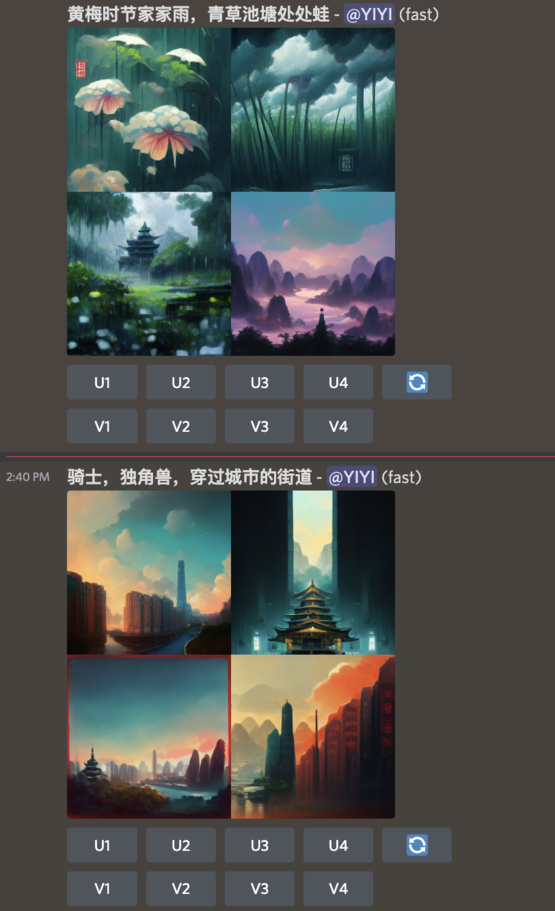

Whenever you drop in, plenty of people are playing alongside you | Midjourney page screenshot

The Midjourney team offers some suggestions for writing prompts:

- Use subjects for which plenty of visual imagery already exists, such as Wizard, Angel, or Rocket;
- Use styles, artists, and painting media as cue words, such as cyberpunk, Dali, Ghibli, ink painting, or sculpture;
- Avoid negations, because the model usually ignores them: if you enter “a hat that is not red”, the model is more likely to pick up on “hat” and “red”;
- Use the singular or a specific number rather than “a bunch”, “many”, or “some”;
- Avoid vague, general concepts: you know, the kind of thing the boss talks about in meetings, or what the client asks for.

Fire dragon, but architectural sketch style

Real power users can also add some “slang”: parameters prefixed with “--” that set conditions on the picture. For example, enter “--ar 16:9” and the image’s aspect ratio becomes 16:9; enter “--s” followed by a number to decide how far the AI goes down the road of stylization. The larger the number, the wilder the result. With --s 60000, God knows what will happen!
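These parameters are plain text appended to the end of a prompt, so they are easy to assemble programmatically. The sketch below is a hypothetical Python helper, not part of Midjourney or Discord: the function names are made up, it only builds the text you would paste after /imagine, and it does not check which stylize values Midjourney actually accepts. The second function works out the arithmetic behind an aspect ratio; the 1024-pixel width is an arbitrary example, since Midjourney chooses the real pixel size itself.

```python
from typing import Optional, Tuple


def with_parameters(prompt: str,
                    aspect_ratio: Optional[Tuple[int, int]] = None,
                    stylize: Optional[int] = None) -> str:
    """Append Midjourney-style "--" parameters to the end of a prompt."""
    parts = [prompt.strip()]
    if aspect_ratio is not None:
        w, h = aspect_ratio
        parts.append(f"--ar {w}:{h}")
    if stylize is not None:
        parts.append(f"--s {stylize}")
    return " ".join(parts)


def height_for(width: int, aspect_ratio: Tuple[int, int]) -> int:
    """Height in pixels that gives `width` the requested width:height ratio."""
    w, h = aspect_ratio
    return round(width * h / w)


print(with_parameters("Edgar Allen Poe's Tarot, Art Nouveau, Anne McCaffrey", stylize=1250))
print(with_parameters("fire dragon, architectural sketch", aspect_ratio=(16, 9)))
print(height_for(1024, (16, 9)))  # 1024 * 9 / 16 = 576
```

The first call reproduces the prompt from the screenshot caption earlier in this section, with the stylize parameter at the end.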

If you really can’t come up with a prompt, or a particular picture captures the feeling you want, you can also put the picture’s link directly into the prompt.

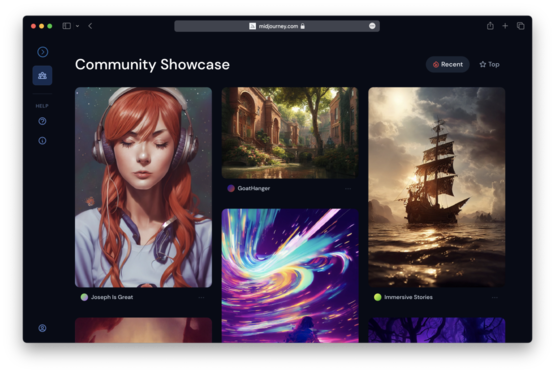

Seeing what others have written is a great way to learn. You can also ask politely in the #prompt-chat channel at any time: “I want to generate an image in a particular style; what prompt should I use?” Or browse the official gallery (https://ift.tt/sBv6Nhl) regularly, where you can look through works generated by you and by others.

Compared with other models, Midjourney is known for its artistry. As one commenter put it, “Midjourney is like an art student with a style of its own.” It is as if the spirits of hundreds of thousands of artists of the past possessed it.

The results show it too: whatever you input, Midjourney tends to give you something that looks like a painting rather than a photorealistic fake. For example, given the prompt “Girls discover the meaning of life”, Midjourney and DALL·E produced the images above, respectively | https://ift.tt/NocDadt

This has also made it popular in the art world. “Space Opera”, the controversial work that won a prize in a digital art competition, was generated with Midjourney (and then polished in Photoshop).

“Space Opera” | Jason Allen

What keeps Midjourney attractive as new platforms keep emerging is its enormous community: it currently has more than 3 million members on Discord, far more than the Minecraft and Fortnite communities that used to lead. In Sequoia’s current map of AIGC startups, only Midjourney appears under both image generation and consumer/social.

In the community, people spontaneously answer newcomers’ questions, compliment one another, and generously share the prompts they use. The team also regularly launches themed creation events and holds office hours. In the founder’s words, he wants people to experience the joy of “doing one thing together”: you “draw” a “dog”, someone adds a stroke and turns it into a “space dog”, and then someone else turns it into an “Aztec space dog”…

Can you use Chinese in Midjourney? It’s not impossible, but Midjourney doesn’t seem to understand it very well.

Charging standard: Anyone can generate 25 pictures for free in the public channels; after that you need a membership subscription. Basic membership costs $10 per month for 200 images; Standard membership costs $30 per month with unlimited generation.
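As a rough worked example of these prices, the Python sketch below compares the effective cost per image of the two plans. It uses only the figures quoted above and ignores the 25 free images; the variable and function names are made up for illustration. Note that Basic is capped at 200 images, so anyone who needs more must move to Standard regardless; the break-even figure only shows where Standard’s per-image price drops below Basic’s.

```python
BASIC_PRICE_USD = 10      # per month, from the article
BASIC_IMAGES = 200        # images included per month, from the article
STANDARD_PRICE_USD = 30   # per month, unlimited generation, from the article

basic_cost_per_image = BASIC_PRICE_USD / BASIC_IMAGES


def standard_cost_per_image(images_per_month: int) -> float:
    """Effective price per image on the flat-rate Standard plan."""
    return STANDARD_PRICE_USD / images_per_month


# Monthly volume at which Standard's per-image price equals Basic's.
break_even_images = STANDARD_PRICE_USD * BASIC_IMAGES / BASIC_PRICE_USD

print(f"Basic: ${basic_cost_per_image:.2f} per image")
print(f"Standard at 1000 images: ${standard_cost_per_image(1000):.3f} per image")
print(f"Per-image prices match at {break_even_images:.0f} images per month")
```

In other words, Basic works out to 5 cents per image, and Standard is cheaper per image only for someone generating more than 600 images a month.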

Copyright requirements: Works generated in the public channels are licensed CC BY-NC 4.0 by default, which means others may use or modify your works for non-commercial purposes with attribution. Paid users may use their generated images commercially, with one exception: a company with annual revenue above 1 million must subscribe to the corporate membership instead.

6pen

Features: Supports prompts in Chinese and lists many artist and style modifiers for reference.

Preparation: Download the iOS app, or open https://6pen.art/ and generate directly in the browser.

After Disco Diffusion took off, some Chinese teams began trying to lower its barrier to entry and commercialize it, for example by redesigning the UI, providing cloud computing power, and fine-tuning the model. 6pen is one of those teams.

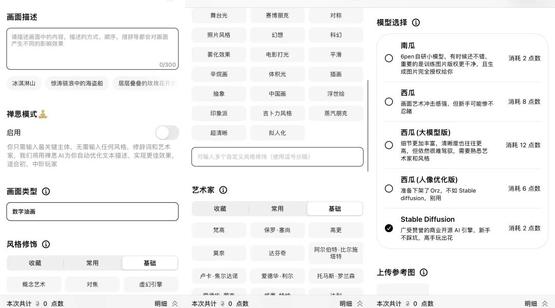

Building on the existing open-source models Latent Diffusion and Disco Diffusion, 6pen has developed its own models. They are differentiated by generation speed and image size: the Pumpkin model is good at small images and simple scenes, while the Watermelon model is good at complex scenes but responds more slowly. According to the team, the most important improvements over the original models are higher resolution and support for Chinese.

6pen is confident that, with the right text description and style modifiers, it can match the results of Midjourney or even DALL·E 2.

The interface is clean, the guidance is thorough, and many artist and style modifiers are listed for reference | App interface screenshots

The official tutorial is very detailed and walks you through every step, as kindly as a mother worried that you won’t manage to learn it.

We’ve put together some of these suggestions:

- You can write the description directly in Chinese!
- Prompts should be specific: name the objects you want and their characteristics, but don’t include too many objects; two or three is enough;
- Don’t try to describe emotions or events; the model cannot understand something like “does she love me or not” and draw it;
- Viewing angle, detail and texture, how much of the frame an object occupies, color tone, picture characteristics, era, and rendering/modeling tools are all information the model can handle;
- If the reference artist you choose painted many nudes, you may sometimes get a pure black picture (the system has judged the content to be pornographic);
- Besides the prompt itself, you can add information such as picture type, artist, and size. These work a bit like filters, nudging your painting toward the look you want;
- If you have some drawing ability, you can sketch a draft yourself and the AI will paint the detailed scene on top of it. Use color blocks and shapes for the draft rather than pure line art, because the AI will not fill in color automatically.

Official prompt example | Interface screenshot

6pen also returns data for each generation, such as intermediate images from each iteration, loss curves, and even power consumption, helping users understand the generation process and improve their results.

While you wait for a generation, 6pen asks you to vote on which of several generated images looks better. At moments like this you feel like a laborer working for the AI, helping the model upgrade and improve.

Wang Dengke, the founder of 6pen, has pointed out current shortcomings of AI painting technology: human limbs (especially fingers) and eyes come out poorly, scenes with multiple subjects are generated badly, and the models cannot produce a logically continuous story.

Charging standard: You can generate for free by waiting in the queue, or pay for fast generation. Prices start at 0.1 yuan.

Copyright requirements: 6pen’s own models are all open-sourced under the MIT license, and copyright in the generated images is granted in full to the creator. 6pen also supports Stable Diffusion under CC0, in which case the creator does not hold exclusive rights to the generated works. If you use a living artist as a reference and the generated work resembles that artist’s style, a copyright dispute may arise. Likewise, if you use a reference image that is not your own original work (someone else’s photograph or painting, for example), the result may also be subject to copyright disputes.

AI painting is still a work in progress. Today these tools solve the problem of “write a few words and get a drawing”; in the future they may go on to solve “write a few words and get a good drawing”.

As the basic functions of these tools and the models behind them gradually improve, what we will be competing on is how well we write prompts.

The other day I saw someone ask, “Is there a Chinese translation for the word ‘prompt’ yet?” Someone replied below, “Magic spell.”

Author: Weng Yang, Rui Yue, biu

Editor: biu

Cover image credit: Unsplash

This article is from Nutshell and may not be reproduced without authorization.

This article is reproduced from: http://www.guokr.com/article/462587/
