Original link: https://www.msra.cn/zh-cn/news/features/usb

Editor’s note: Semi-supervised learning is currently developing rapidly. However, existing semi-supervised learning benchmarks are mostly limited to computer vision classification tasks. This rules out consistent evaluation on more diverse classification tasks such as natural language processing and audio processing. In addition, most semi-supervised papers are published by large institutions, and academic labs often find it hard to help advance the field because of limited computing resources. To address this, researchers from Microsoft Research Asia, together with researchers from Westlake University, Tokyo Institute of Technology, Carnegie Mellon University, and the Max Planck Institute, proposed the Unified SSL Benchmark (USB). USB is the first unified semi-supervised classification benchmark covering vision, language, and audio tasks. The paper not only introduces more diverse application domains but also, for the first time, uses pretrained vision models to greatly reduce the time needed to evaluate semi-supervised algorithms. This makes semi-supervised research more accessible to researchers, especially small research groups. The paper has been accepted at NeurIPS 2022, a top academic conference in artificial intelligence.

Supervised learning builds models that fit labeled data. When trained with supervised learning on large amounts of high-quality labeled data, neural network models achieve competitive results. For example, according to Papers with Code, traditional supervised learning methods reach over 88% accuracy on the million-image ImageNet dataset. However, acquiring large amounts of labeled data is often time-consuming and labor-intensive.

Semi-supervised learning (SSL) reduces the dependence on labeled data. When only a small amount of labeled data is available, it improves the model's generalization by exploiting a large amount of unlabeled data. Semi-supervised learning is also a long-standing topic in machine learning. Before deep learning, researchers in this field proposed classical algorithms such as semi-supervised support vector machines, entropy regularization, and co-training.
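Entropy regularization, one of the classical methods listed above, adds a penalty on the entropy of the model's predictions for unlabeled data. Minimizing that penalty encourages confident predictions on the unlabeled samples. The minimal sketch below is plain Python operating on precomputed probability vectors. The function names and the weight `lam` are illustrative assumptions, not code from USB or TorchSSL.

```python
import math
from typing import List, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of one predicted probability vector."""
    return sum(-p * math.log(p) for p in probs if p > 0.0)


def cross_entropy(probs: Sequence[float], label: int) -> float:
    """Negative log-likelihood of the true label under the prediction."""
    return -math.log(probs[label])


def entropy_regularized_loss(
    labeled_probs: List[Sequence[float]],
    labels: List[int],
    unlabeled_probs: List[Sequence[float]],
    lam: float = 0.1,  # hypothetical weight of the entropy term
) -> float:
    # Supervised term: mean cross-entropy over the labeled batch.
    sup = sum(cross_entropy(p, y) for p, y in zip(labeled_probs, labels)) / len(labels)
    # Unsupervised term: mean prediction entropy over the unlabeled batch;
    # lowering it rewards confident (low-entropy) predictions.
    ent = sum(entropy(q) for q in unlabeled_probs) / len(unlabeled_probs)
    return sup + lam * ent


# A maximally uncertain prediction has entropy log(2), about 0.693.
print(entropy([0.5, 0.5]))
loss = entropy_regularized_loss(
    labeled_probs=[[0.9, 0.1]], labels=[0],
    unlabeled_probs=[[0.5, 0.5], [0.99, 0.01]],
)
print(loss)
```

In a real training loop, these probabilities would come from the model's softmax outputs, and the loss would be differentiated by the framework.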

Deep Semi-Supervised Learning

With the rise of deep learning, deep semi-supervised learning algorithms have also advanced rapidly. Technology companies including Microsoft, Google, and Meta have also recognized the great potential of semi-supervised learning in practical scenarios. For example, Google used noisy student training, a semi-supervised algorithm, to improve search performance [1]. State-of-the-art semi-supervised algorithms typically train with a cross-entropy loss on labeled data. On unlabeled data, they apply consistency regularization, which encourages predictions that are invariant to input perturbations. For example, FixMatch [2], proposed by Google at NeurIPS 2020, uses augmentation anchoring and fixed thresholding. These techniques improve the model's generalization to data augmented at different strengths and reduce noisy pseudo-labels. During training, FixMatch discards unlabeled samples whose prediction confidence falls below a user-provided, predefined threshold.
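The fixed-threshold consistency loss described above can be sketched as follows. This assumes the model's softmax outputs on a weakly augmented view and a strongly augmented view of each unlabeled sample are already computed. The pseudo-label comes from the weak view, and the strong view is trained to match it. Samples below the threshold contribute zero loss, but the average is still taken over the whole unlabeled batch. The function names are hypothetical, and the code is plain Python rather than the PyTorch used by TorchSSL or USB. The 0.95 default is a commonly used value, not something stated in this article.

```python
import math
from typing import List, Sequence


def cross_entropy(probs: Sequence[float], label: int) -> float:
    """Negative log-likelihood of `label` under the predicted distribution."""
    return -math.log(probs[label])


def fixmatch_unlabeled_loss(
    weak_probs: List[Sequence[float]],
    strong_probs: List[Sequence[float]],
    threshold: float = 0.95,
) -> float:
    """Consistency loss on an unlabeled batch with a fixed confidence threshold.

    weak_probs[i] / strong_probs[i]: predictions for a weakly / strongly
    augmented view of the same unlabeled sample (assumed precomputed).
    """
    total = 0.0
    for q_weak, p_strong in zip(weak_probs, strong_probs):
        confidence = max(q_weak)
        if confidence < threshold:
            continue  # filtered out: low-confidence samples add no loss
        pseudo_label = list(q_weak).index(confidence)  # hard label from weak view
        total += cross_entropy(p_strong, pseudo_label)  # strong view must agree
    # Average over the full unlabeled batch, including filtered samples.
    return total / len(weak_probs)


def fixmatch_loss(labeled_probs, labels, weak_probs, strong_probs,
                  threshold: float = 0.95, lambda_u: float = 1.0) -> float:
    """Supervised cross-entropy plus the weighted unlabeled consistency loss."""
    sup = sum(cross_entropy(p, y) for p, y in zip(labeled_probs, labels)) / len(labels)
    return sup + lambda_u * fixmatch_unlabeled_loss(weak_probs, strong_probs, threshold)


# Only the first sample clears the 0.95 threshold, so the loss is log(2) / 2.
loss_u = fixmatch_unlabeled_loss(
    weak_probs=[[0.97, 0.03], [0.60, 0.40]],
    strong_probs=[[0.5, 0.5], [0.9, 0.1]],
)
print(loss_u)
```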

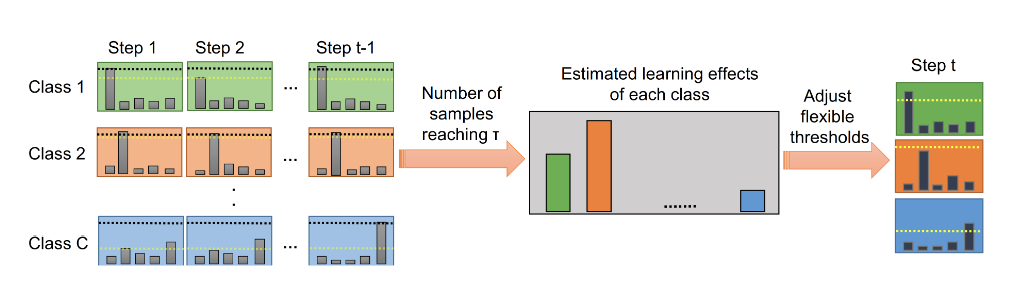

FlexMatch [3] was proposed by Microsoft Research Asia and Tokyo Institute of Technology at NeurIPS 2021. It takes into account that different classes differ in learning difficulty, so it introduces curriculum pseudo labeling, which uses a different threshold for each class. Specifically, for easy-to-learn classes the model should set a high threshold to reduce the impact of noisy pseudo-labels. For hard-to-learn classes, it should set a low threshold to encourage fitting of that class. The learning difficulty of each class is estimated from the number of unlabeled samples that are predicted as that class with confidence above a fixed threshold.
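The class-wise thresholds described above can be sketched as follows. First, count how many unlabeled samples are confidently predicted as each class. Then normalize the counts into a learning status in [0, 1], and scale the fixed threshold `tau` by a mapping of that status. The convex mapping x / (2 - x) and the warm-up denominator (which also counts samples not yet confidently predicted) follow the FlexMatch paper as we understand it; treat them as assumptions. All names here are hypothetical, and the code is not taken from TorchSSL or USB.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def flexmatch_thresholds(
    confident_preds: Sequence[int],
    num_classes: int,
    num_unlabeled: int,
    tau: float = 0.95,
    warmup: bool = True,
) -> List[float]:
    """Per-class thresholds in the spirit of curriculum pseudo labeling.

    confident_preds: predicted classes of the unlabeled samples whose
    confidence currently exceeds the fixed threshold `tau`.
    """
    counts = Counter(confident_preds)
    # sigma[c]: number of unlabeled samples confidently predicted as class c
    sigma = [counts.get(c, 0) for c in range(num_classes)]
    denom = max(sigma)
    if warmup:
        # Assumed warm-up: samples never predicted confidently form an extra
        # "unused" group, which keeps all thresholds low early in training.
        denom = max(denom, num_unlabeled - sum(sigma))
    beta = [s / denom for s in sigma] if denom > 0 else [0.0] * num_classes
    # Convex mapping: well-learned classes approach tau, hard classes drop lower.
    return [tau * b / (2.0 - b) for b in beta]


def select_pseudo_labels(
    probs: Sequence[Sequence[float]], thresholds: Sequence[float]
) -> List[Tuple[int, int]]:
    """Return (sample index, pseudo-label) for samples passing their class threshold."""
    selected = []
    for i, q in enumerate(probs):
        confidence = max(q)
        label = list(q).index(confidence)
        if confidence >= thresholds[label]:
            selected.append((i, label))
    return selected


# Class 0 is well learned, class 1 partly, class 2 not at all.
thresholds = flexmatch_thresholds([0] * 60 + [1] * 30, num_classes=3, num_unlabeled=100)
picked = select_pseudo_labels(
    [[0.9, 0.05, 0.05], [0.1, 0.5, 0.4], [0.2, 0.3, 0.5]], thresholds
)
print(thresholds, picked)
```

In this example, the sample predicted as the easy class 0 is rejected at confidence 0.9. Samples predicted as the harder classes 1 and 2 are accepted at confidence 0.5, which matches the behavior the paragraph describes.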

At the same time, researchers at Microsoft Research Asia also co-developed TorchSSL [4], a unified PyTorch-based codebase for deep semi-supervised methods. It provides unified support for deep methods, commonly used datasets, and benchmark results in this field.

Figure 1: FlexMatch algorithm flow

Problems and challenges of current semi-supervised learning codebases

Although semi-supervised learning is developing rapidly, researchers have noticed that most current semi-supervised papers focus only on computer vision (CV) classification tasks. For other fields, such as natural language processing (NLP) and audio processing, researchers cannot tell whether algorithms that work on CV tasks remain effective. In addition, most semi-supervised papers are published by large institutions, and academic labs often find it hard to help advance the field because of limited computing resources. In general, current semi-supervised learning benchmarks suffer from the following two problems:

(1) Insufficient diversity. Existing semi-supervised learning benchmarks are mostly limited to CV classification tasks (i.e., CIFAR-10/100, SVHN, STL-10, and ImageNet classification). This rules out consistent evaluation on diverse classification tasks such as NLP and audio, even though a lack of sufficient labeled data is also a common problem in NLP and audio.

(2) Time-consuming and unfriendly to academia. Existing semi-supervised learning benchmarks such as TorchSSL are often time-consuming and environmentally unfriendly, because they usually train deep neural networks from scratch. For example, evaluating FixMatch [2] with TorchSSL takes about 300 GPU days. Such high training costs make SSL research unaffordable for many research labs (especially in academia or small research groups), hindering the progress of SSL.

USB: A New Benchmark with Diverse Tasks That Is Friendlier to Researchers

To solve these problems, researchers from Microsoft Research Asia, together with researchers from Westlake University, Tokyo Institute of Technology, Carnegie Mellon University, and the Max Planck Institute, proposed the Unified SSL Benchmark (USB). USB is the first unified semi-supervised classification benchmark covering vision, language, and audio tasks. Previous semi-supervised learning benchmarks (such as TorchSSL) focus on only a few vision tasks. USB not only covers more diverse application domains but also, for the first time, uses pretrained vision Transformers to greatly reduce the time needed to evaluate semi-supervised algorithms (from about 7,000 GPU hours to 900 GPU hours). This makes semi-supervised research more accessible to researchers, especially small research groups. The paper has been accepted at NeurIPS 2022, a top international conference in artificial intelligence.

Paper link: https://ift.tt/8tIKs76

Code link: https://ift.tt/xCkAYcF

Solutions provided by USB

So how does USB address all of these problems at once? The researchers made three main improvements:

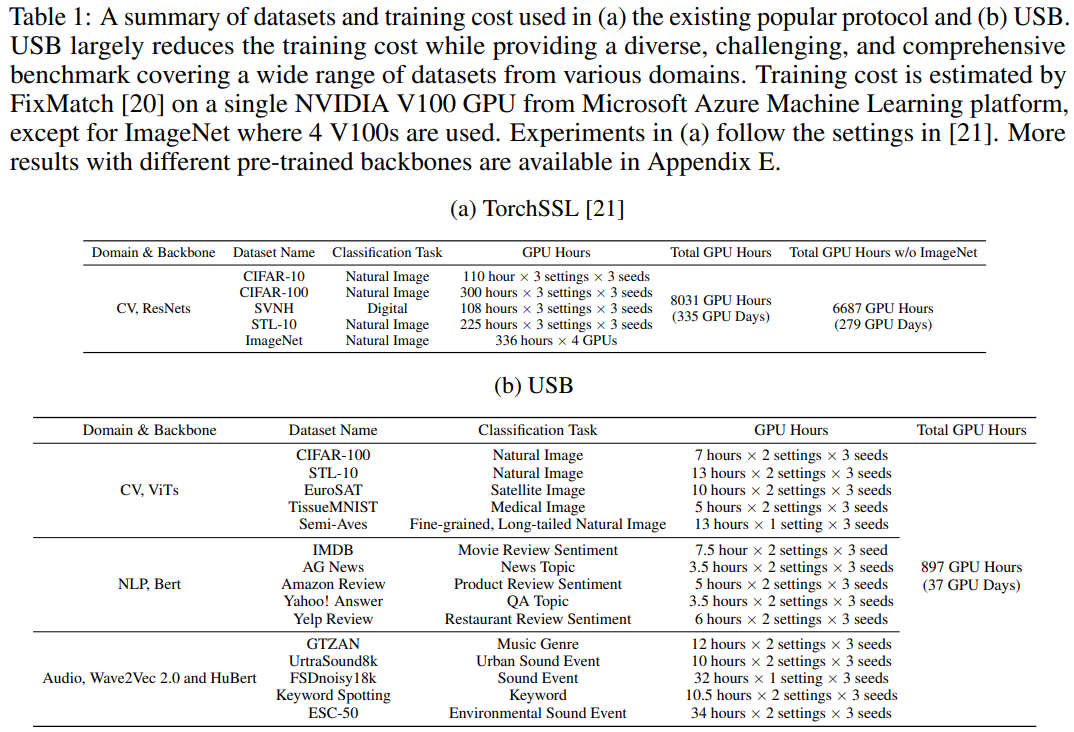

(1) To increase task diversity, USB introduces 5 CV datasets, 5 NLP datasets, and 5 audio datasets. Together they form a diverse and challenging benchmark that enables consistent evaluation across multiple tasks. Table 1 compares the tasks and training time of USB and TorchSSL in detail.

Table 1: Task and training time comparison between USB and TorchSSL frameworks

(2) To improve training efficiency, the researchers introduced pretrained vision Transformers into SSL instead of training ResNets from scratch. Specifically, they found that using a pretrained model greatly reduces the number of training iterations without compromising performance. For example, the number of training iterations for CV tasks drops from 1 million steps to 200,000 steps.
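The iteration numbers above translate into a simple reduction factor. The small helper below is hypothetical. It counts training steps only; because a pretrained vision Transformer step and a ResNet step need not cost the same, the step ratio alone does not imply the same wall-clock or GPU-hour savings.

```python
def step_reduction(steps_before: int, steps_after: int) -> tuple:
    """Return (how many times fewer steps, fraction of steps saved)."""
    factor = steps_before / steps_after
    saved = 1 - steps_after / steps_before
    return factor, saved


factor, saved = step_reduction(1_000_000, 200_000)
print(f"{factor:.0f}x fewer steps, {saved:.0%} saved")  # 5x fewer steps, 80% saved
```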

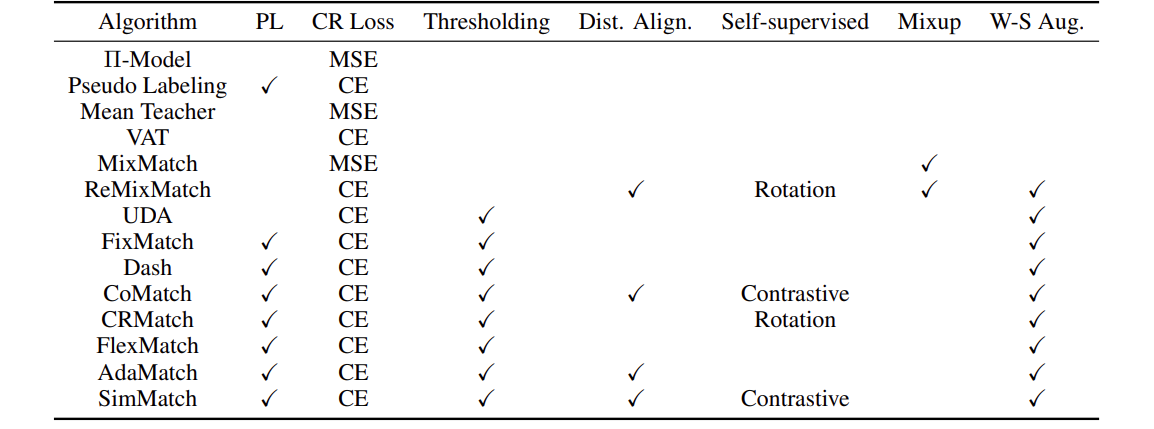

(3) To make USB friendlier to researchers, the researchers open-sourced 14 SSL algorithms together with a modular codebase and the related configuration files, so that the results in the USB report can be easily reproduced. For a quick start, USB also provides detailed documentation and tutorials. In addition, USB provides a pip package so that users can call the SSL algorithms directly. The researchers plan to keep adding new algorithms (such as imbalanced semi-supervised algorithms) and more challenging datasets to USB. Table 2 shows the algorithms and modules already supported in USB.

Table 2: Supported Algorithms and Modules in USB
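The modular codebase and configuration files mentioned in point (3) suggest a common design: the algorithm is selected by name from a configuration and instantiated through a registry. The sketch below shows that general pattern only. It is not USB's actual code or API, and the registry, class names, and config keys are hypothetical placeholders. Consult the USB documentation for the real interface.

```python
from typing import Callable, Dict, Type

# Hypothetical registry: maps an algorithm name used in a config to its class.
ALGORITHMS: Dict[str, Type["SSLAlgorithm"]] = {}


def register(name: str) -> Callable[[Type["SSLAlgorithm"]], Type["SSLAlgorithm"]]:
    """Class decorator that records an algorithm under `name`."""
    def decorator(cls: Type["SSLAlgorithm"]) -> Type["SSLAlgorithm"]:
        ALGORITHMS[name] = cls
        return cls
    return decorator


class SSLAlgorithm:
    """Hypothetical base class holding shared hyperparameters."""
    def __init__(self, threshold: float = 0.95, lambda_u: float = 1.0):
        self.threshold = threshold
        self.lambda_u = lambda_u


@register("fixmatch")
class FixMatch(SSLAlgorithm):
    pass  # placeholder: algorithm-specific training step would go here


@register("flexmatch")
class FlexMatch(SSLAlgorithm):
    pass  # placeholder: algorithm-specific training step would go here


def build_algorithm(config: dict) -> SSLAlgorithm:
    """Instantiate the algorithm named in `config`; other keys become hyperparameters."""
    params = dict(config)  # copy so the caller's config is not mutated
    name = params.pop("algorithm")
    if name not in ALGORITHMS:
        raise KeyError(f"unknown algorithm: {name!r}")
    return ALGORITHMS[name](**params)


algo = build_algorithm({"algorithm": "flexmatch", "threshold": 0.9})
print(type(algo).__name__, algo.threshold)  # FlexMatch 0.9
```

With this design, adding a new algorithm means registering one new class, and switching algorithms means changing one config field. This is one way a codebase can keep experiments reproducible from configuration files alone.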

By using large amounts of unlabeled data to train more accurate and robust models, semi-supervised learning holds significant research and practical value. The researchers at Microsoft Research Asia hope that USB will help academia and industry make further progress in semi-supervised learning.

References

[1] https://ift.tt/ckbQ19U

[2] Kihyuk Sohn, David Berthelot, Nicholas Carlini, Zizhao Zhang, Han Zhang, Colin A Raffel, Ekin Dogus Cubuk, Alexey Kurakin, and Chun-Liang Li. FixMatch: Simplifying semi-supervised learning with consistency and confidence. Advances in Neural Information Processing Systems, 33:596–608, 2020.

[3] Bowen Zhang, Yidong Wang, Wenxin Hou, Hao Wu, Jindong Wang, Manabu Okumura, and Takahiro Shinozaki. FlexMatch: Boosting semi-supervised learning with curriculum pseudo labeling. Advances in Neural Information Processing Systems, 34, 2021.

[4] TorchSSL: https://ift.tt/Yh1qnr5
