Original link: https://www.msra.cn/zh-cn/news/features/virtualcube

Editor’s note: As the saying goes, “the eyes are the windows to the soul,” and the information conveyed through eye contact enriches communication. Yet as video chats and video conferences become the norm, one cannot help but ask: how long has it been since we last made eye contact with our colleagues, friends, and family?

VirtualCube, a 3D video conferencing system developed as a research project at Microsoft Research Asia, lets participants in online meetings establish natural eye contact, creating an immersive experience that feels like talking face to face in the same room. The related paper was accepted by IEEE Virtual Reality 2022, the international academic conference on virtual reality, and won the conference’s Best Paper Award (Journal Papers Track).

In everyday conversation, mutual gaze and head movements are a natural part of the dialogue. In face-to-face communication, changing the subject, managing who speaks, and switching whom we are addressing are all accompanied by eye and body movements. Current video conferencing technology, however, has an inherent flaw: the camera and the screen are not at the same height. If you look at the screen, your gaze appears unnatural to others; if you look at the camera, you cannot watch the other participants’ reactions. As a result, video conferences lack the authenticity and interactivity of in-person communication. Real work also involves many kinds of meeting scenarios, such as multi-person meetings or sitting side by side to work together, and existing video conferencing systems are even less capable of capturing participants’ sideways glances and movements.

If a conferencing system could let people in different places communicate as if they were in the same room, making eye contact with their peers, would it bring an immersive sense of presence to remote work?

A 3D video system built with existing off-the-shelf hardware

To solve these problems, Microsoft Research Asia proposed VirtualCube, an innovative 3D video conferencing system that presents life-size 3D images of participants in remote video conferences. Whether participants are communicating face to face or side by side, the system correctly captures their gaze and movements and establishes eye contact and body-language communication.

The VirtualCube system has three major advantages:

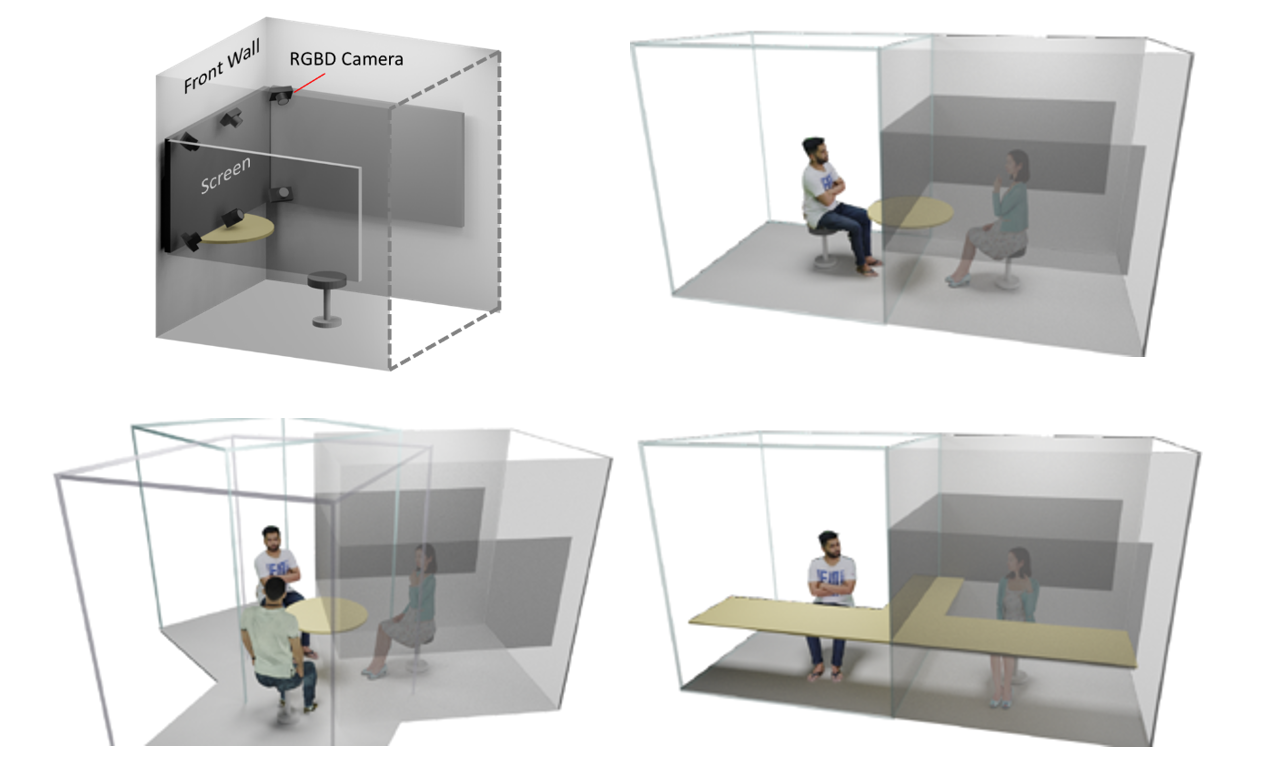

Standardized and simplified, using only existing off-the-shelf hardware. Like the cubicles commonly found in workplaces, each VirtualCube provides a consistent physical environment and device configuration: six Azure Kinect RGBD cameras are installed directly in front of the attendee to capture the person’s image and movements such as gaze, and large display screens in front of and to the left and right of the attendee create an immersive sense of participation. Using existing, standardized hardware greatly reduces the device calibration workload for users, enabling rapid deployment of the 3D video system.

Six Azure Kinect RGBD cameras capture the attendee’s image and movements such as gaze

Multi-person, multi-scenario, and freely combinable. As the basis for online video conferencing, the VirtualCube virtual meeting environment can be composed of multiple spaces (Cubes) in different layouts to support different meeting scenarios, such as two-person face-to-face meetings, two-person side-by-side meetings, and multi-person round-table meetings.

Multiple spaces (Cubes) can be combined arbitrarily

Real-time, high-quality rendering of real people. VirtualCube captures the subtle details of participants, including skin color, texture, and reflections on faces or clothing, and renders them in real time to generate life-size 3D images displayed on remote participants’ screens. The background of the virtual meeting environment can also be chosen freely according to users’ needs.

The meeting scene can be changed at will for an immersive experience

V-Cube View and V-Cube Assembly: two algorithms that make an immersive meeting experience achievable

In fact, research on 3D video conferencing has never stopped. As early as 2000, ideas along the lines of similar mixed reality technology had been proposed, and researchers have since been exploring how to present video conferences in a more realistic and natural way. Various technical routes and solutions have emerged along the way, but none has achieved the desired effect. Zhang Yizhong and Yang Jiaolong, principal researchers at Microsoft Research Asia, said that past research left many problems unresolved. First, in a real environment, no matter where a monocular camera is placed and no matter how high its image quality, it is difficult for participants to form natural eye contact, especially in multi-person meetings. Second, many studies are optimized for specific meeting scenarios, such as two-person face-to-face meetings or three-person round-table meetings, and struggle to support different meeting settings. Third, although realistic virtual humans can be seen in the film and television industry, they require professional technology and production teams to polish and optimize over a long time, with a certain amount of manual work, so real-time capture and real-time rendering are not currently achievable that way.

To this end, Microsoft Research Asia proposed two new algorithms, V-Cube View and V-Cube Assembly. In VirtualCube, participants’ gestures and gaze changes are captured automatically and high-fidelity images are rendered in real time, allowing participants to experience the atmosphere of a real meeting within a virtual one.

“When two people talk and look at each other, what the other person sees is equivalent to the view of a camera placed at their own eye position. But there is a height difference between the screen and the camera, so when one side looks at the other’s eyes on the screen, the gaze captured by the camera is off. So in VirtualCube, we placed six cameras along the edge of the screen directly in front of the attendee, synthesized the correct-viewpoint images with the V-Cube View algorithm, and used V-Cube Assembly to determine the correct relative positions, giving attendees an immersive meeting experience,” Zhang Yizhong explained.
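The size of this gaze error can be illustrated with simple trigonometry. The sketch below is an illustrative assumption, not something taken from the VirtualCube paper: it models the camera as sitting a fixed distance away from the on-screen eyes the viewer is looking at, and the function name `gaze_offset_deg` and the example numbers are hypothetical.

```python
import math

def gaze_offset_deg(camera_offset_m, viewing_distance_m):
    """Angle between 'looking at the on-screen eyes' and 'looking at the camera'.

    camera_offset_m:    distance on the screen plane between the camera and the
                        point the viewer is looking at (assumed value).
    viewing_distance_m: distance from the viewer's eyes to the screen (assumed value).
    """
    return math.degrees(math.atan2(camera_offset_m, viewing_distance_m))

# Hypothetical desktop setup: camera 0.2 m above the on-screen eyes, viewer 0.6 m away.
offset = gaze_offset_deg(0.2, 0.6)
```

With these assumed numbers the captured gaze is off by roughly 18 degrees, which is why a viewer looking at the screen appears to be looking away. Synthesizing the view from the true eye position, as the quote describes, corresponds to driving this offset toward zero.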

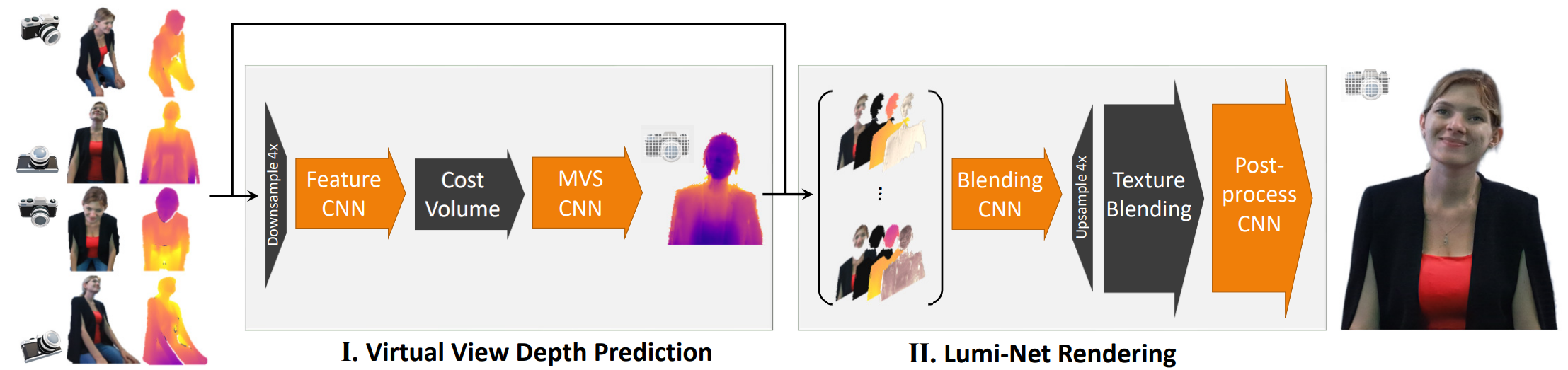

The deep-learning-based V-Cube View algorithm takes the RGBD images from the six cameras in the VirtualCube as input and renders high-fidelity video of the person from any target viewpoint in real time. The technical challenge is achieving high fidelity and real-time performance at once. Yang Jiaolong, principal researcher at Microsoft Research Asia, explained: “Real-time rendering of high-fidelity portraits, especially high-fidelity human faces, has always been a challenging research topic. Although traditional 3D reconstruction and texture mapping can render in real time, they cannot reproduce the complex materials of real faces or the changes in appearance across viewpoints. For this reason, we proposed a new rendering method, Lumi-Net, whose core idea is to use the reconstructed 3D geometry as a reference to achieve real-time rendering of the four-dimensional light field, combined with neural networks for image enhancement, thereby improving rendering quality, especially the fidelity of the face region.”

Specifically, the V-Cube View algorithm has three steps. First, a neural network designed by the researchers quickly estimates the depth map at the target viewpoint, which serves as a geometry proxy for the human body. Next, given this geometric reference, the algorithm fuses the captured multi-view RGB images (i.e., rays) to render the target view. In this step, inspired by the traditional Unstructured Lumigraph method, the researchers take the direction and depth differences between the input rays and the target pixel ray as priors and learn the most appropriate fusion weights with a neural network. Finally, to further improve rendering quality, a neural network performs image enhancement on the result of the previous step. The whole algorithm is trained end to end, with a perceptual loss function and adversarial learning introduced during training, so that the networks are learned automatically to achieve high-fidelity rendering. To ensure real-time performance, the first two steps run on low-resolution images, which greatly reduces the computation required without losing too much precision. The carefully designed and optimized V-Cube View algorithm brings the quality of real-time 3D human rendering to a new level.
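The fusion step can be illustrated with a hand-crafted stand-in. In V-Cube View the fusion weights are learned by a neural network; the sketch below is not that network. It only shows, for a single target pixel, how direction and depth differences could be turned into normalized blending weights in the spirit of Unstructured Lumigraph rendering. The function names and the constants `k_angle` and `k_depth` are hypothetical.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def fusion_weights(target_dir, input_dirs, depth_diffs, k_angle=10.0, k_depth=50.0):
    """Heuristic weights for blending input rays into one target pixel.

    target_dir:  direction of the target pixel ray.
    input_dirs:  directions of the candidate input rays (one per camera).
    depth_diffs: depth disagreement of each input ray with the geometry proxy.
    Rays with a small angular and depth difference get a large weight.
    NOTE: a hand-crafted assumption; the paper learns these weights instead.
    """
    t = normalize(target_dir)
    scores = []
    for d, dz in zip(input_dirs, depth_diffs):
        cos_a = sum(a * b for a, b in zip(t, normalize(d)))
        angle = math.acos(max(-1.0, min(1.0, cos_a)))  # clamp for numerical safety
        scores.append(-k_angle * angle - k_depth * abs(dz))
    # Softmax so that the weights are positive and sum to 1.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def blend(colors, weights):
    """Weighted average of RGB colors sampled from the input views."""
    return tuple(sum(w * c[i] for w, c in zip(weights, colors)) for i in range(3))
```

For example, an input ray that points the same way as the target ray and agrees with the depth proxy receives a higher weight than one that is tilted or that hits the surface at a noticeably different depth. Replacing the fixed scoring rule with a small network that sees the same direction and depth differences is the kind of change the paragraph above describes.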

Schematic diagram of V-Cube View algorithm

In addition, for VirtualCube users to have the same experience as in-person communication, the system must take the relative positions of participants into account when mapping them into the virtual environment. This is where the V-Cube Assembly algorithm plays an important role. “In the overall virtual meeting environment, the V-Cube Assembly can be regarded as the global coordinate system, and each individual VirtualCube as a local coordinate system. The correct 3D geometric transformation between the global and local coordinate systems is crucial for correctly presenting images of remote attendees on the video display,” said Yang Jiaolong.

The researchers first capture the 3D geometry of each participant in a VirtualCube, expressed in that cube’s local coordinate system, and then transform this 3D geometry data from the local coordinate systems into the global coordinate system. V-Cube Assembly processes the data and determines the correct relative position of each VirtualCube participant in the global virtual meeting environment. Finally, the global 3D geometry is transformed into each VirtualCube’s local coordinate system and mapped onto that VirtualCube’s screens.
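The local-to-global-to-local chain can be sketched with rigid transforms. The example below is a minimal illustration under stated assumptions, not the V-Cube Assembly implementation: each cube's placement is reduced to a rotation about the vertical axis plus a translation, and the class `CubePose`, the function `remote_to_viewer`, and all numbers are hypothetical.

```python
import math

class CubePose:
    """Placement of one cube in the global meeting frame (assumed model):
    a yaw rotation about the vertical y axis followed by a translation."""

    def __init__(self, yaw_deg, tx, ty, tz):
        self.c = math.cos(math.radians(yaw_deg))
        self.s = math.sin(math.radians(yaw_deg))
        self.t = (tx, ty, tz)

    def local_to_global(self, p):
        """Apply global = R * local + t."""
        x, y, z = p
        return (self.c * x + self.s * z + self.t[0],
                y + self.t[1],
                -self.s * x + self.c * z + self.t[2])

    def global_to_local(self, p):
        """Apply the inverse: local = R^T * (global - t)."""
        x, y, z = (p[i] - self.t[i] for i in range(3))
        return (self.c * x - self.s * z, y, self.s * x + self.c * z)

def remote_to_viewer(point, remote, viewer):
    """Express a point captured in the remote cube in the viewer cube's frame."""
    return viewer.global_to_local(remote.local_to_global(point))

# Hypothetical face-to-face layout: cube B is turned 180 degrees and placed 2 m away.
cube_a = CubePose(0.0, 0.0, 0.0, 0.0)
cube_b = CubePose(180.0, 0.0, 0.0, 2.0)
head_b = (0.1, 1.2, 0.0)  # a point on participant B, in cube B's local frame
head_b_seen_by_a = remote_to_viewer(head_b, cube_b, cube_a)
```

In this assumed layout the point ends up about 2 m in front of participant A, with its sideways offset mirrored, as expected when two people face each other. Changing only the `CubePose` values gives a side-by-side or round-table arrangement without touching the capture or rendering code, which matches the role the article gives to the global coordinate system.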

Schematic diagram of V-Cube Assembly algorithm

A starting point for imagining the infinite possibilities of the future office

VirtualCube offers a new way of thinking about 3D video conferencing systems. Across algorithm design, end-to-end device deployment, and engineering debugging, VirtualCube has shown that an immersive 3D video conferencing experience can be achieved with existing off-the-shelf hardware.

Beyond letting attendees “share” the same physical space, the researchers are also exploring how the VirtualCube system can serve more collaboration needs in remote work. For example, they demonstrated a scenario in which both participants and their computer desktops are part of the video conference: the participants sit side by side and pass documents and applications between their desktops across the screens, making remote collaboration easier.

As the technology continues to improve, people who are thousands of miles apart may in the future be able to work together as if in person and communicate naturally at a distance, which would greatly improve the efficiency of hybrid work. The researchers at Microsoft Research Asia also hope that VirtualCube can serve as a seed for exploration and inspire more researchers, so that through joint effort better forms of interaction in virtual space can be found and more possibilities opened up for the future of office work.

Related Links:

Paper: https://ift.tt/LRqI3jJ

Project page: https://ift.tt/vkeTFAc
