Original link: https://www.luozhiyun.com/archives/684

Please credit the source when reprinting. This article was published on luozhiyun's blog: https://www.luozhiyun.com/archives/684

When I studied Kubernetes networking in the past, there was a lot I didn't understand and simply skimmed over. I now plan to fill in those gaps in my knowledge of virtual networking. If you are interested, let's discuss and learn together.

Overview

At present, the mainstream virtual network card solutions are tun/tap and veth. Tun/tap is the older of the two: Linux kernels released after version 2.4 build the tun/tap driver by default, and it is widely used. In cloud native virtual networking, flannel0 in flannel's UDP mode is a tun device, and OpenVPN also uses tun/tap to forward data.

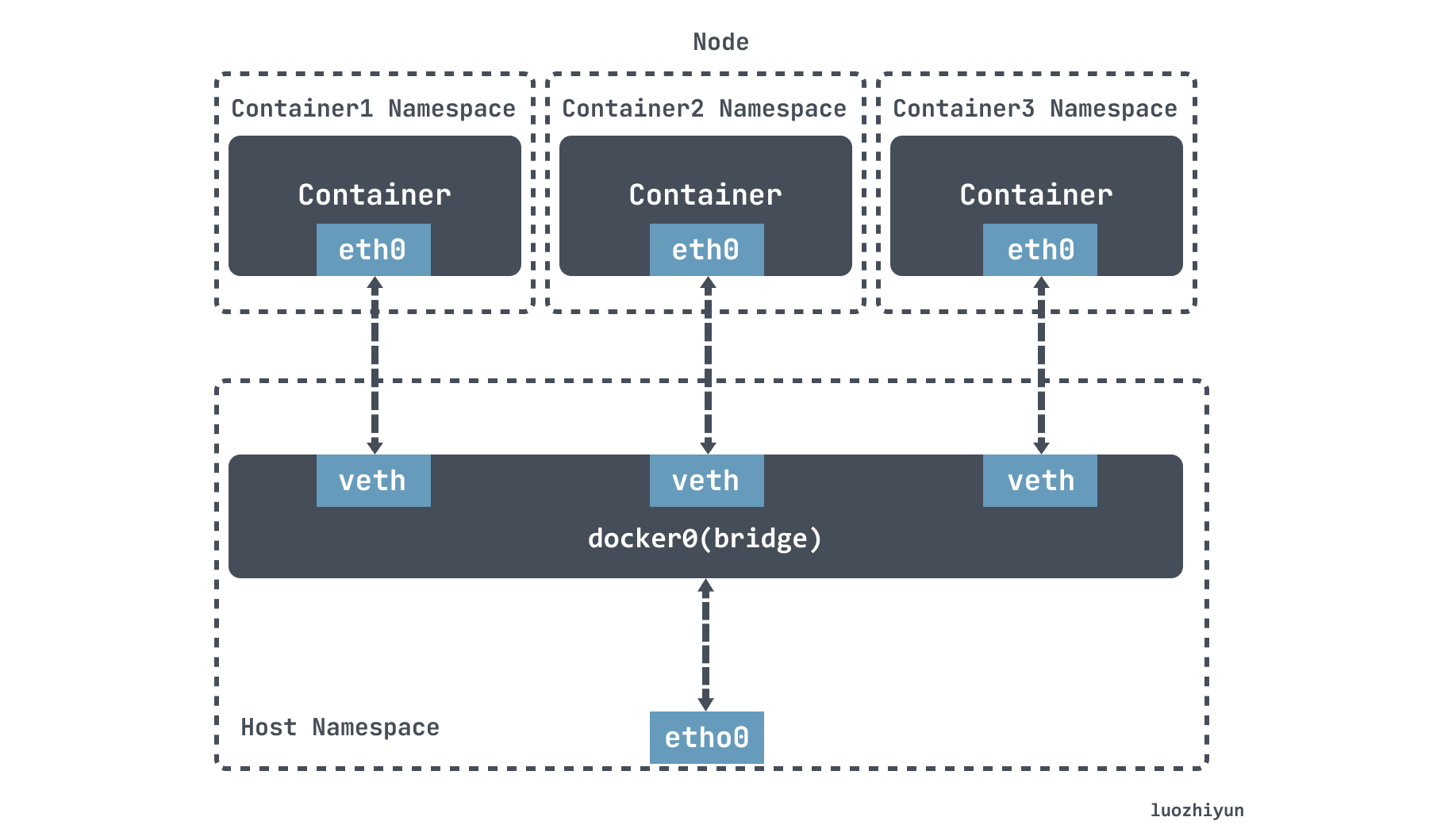

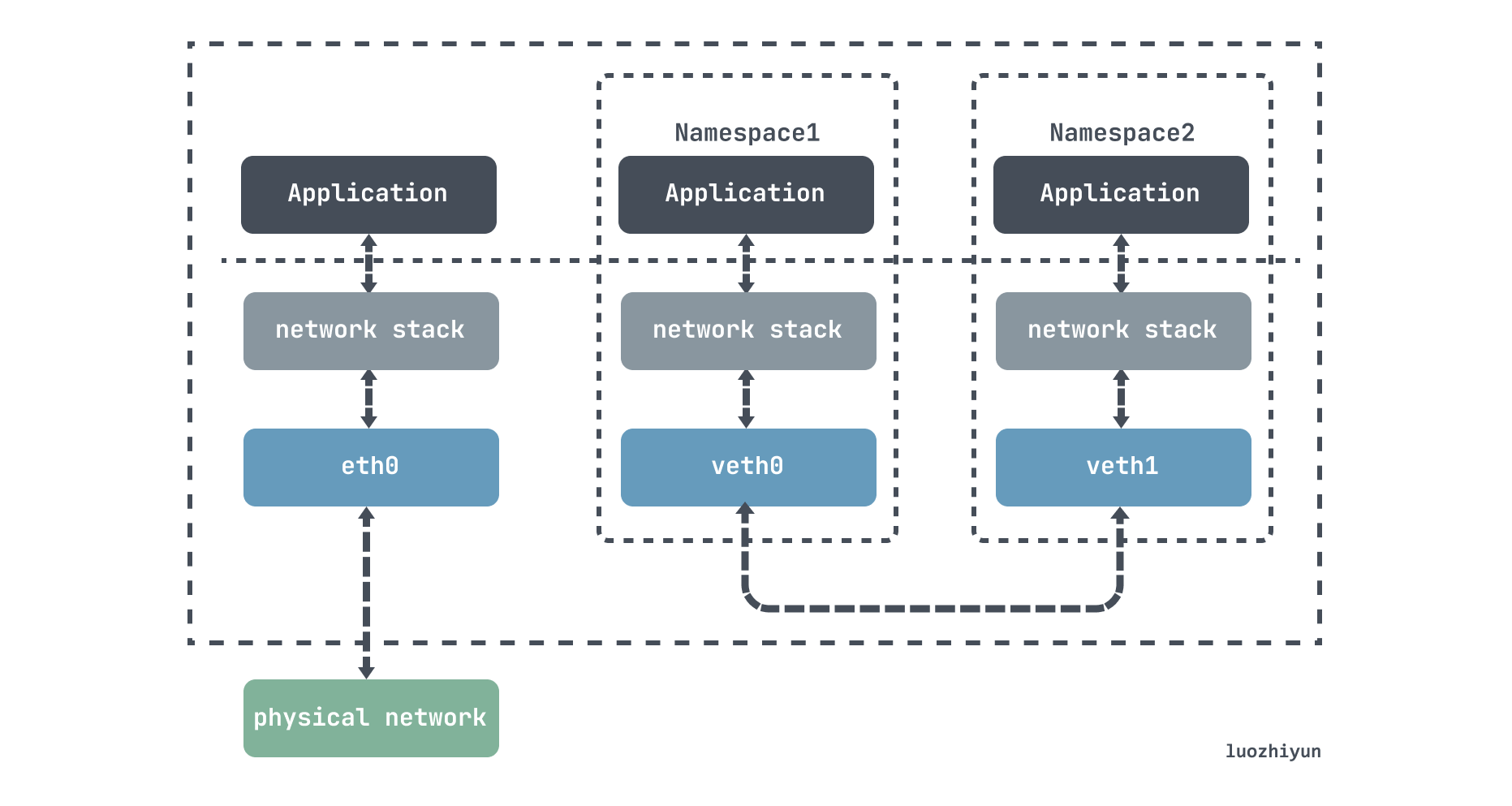

veth is the other mainstream virtual network card solution. In Linux kernel 2.6, when Linux began to support network namespace isolation, it also provided a dedicated virtual Ethernet device (Virtual Ethernet, usually abbreviated as veth) so that two isolated network namespaces can communicate with each other. veth is not really a single device but a pair of devices, so it is often called a veth pair.

Docker's bridge network mode relies on veth pairs to attach containers to the docker0 bridge, through which they communicate with the host and with other machines in the outside world.

tun/tap

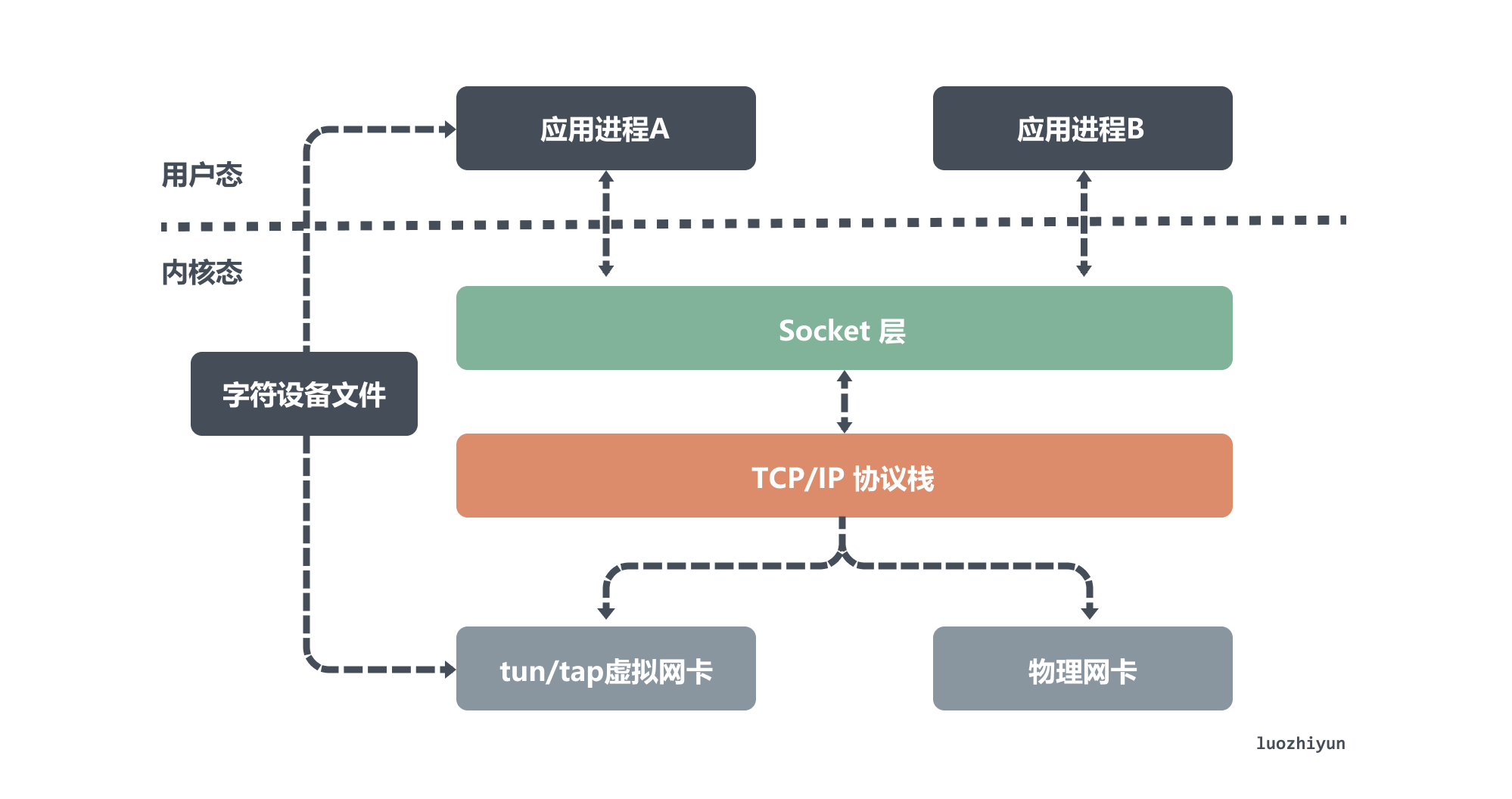

Tun and tap are two relatively independent virtual network devices. As virtual network cards, they work just like physical network cards, except that they have no hardware behind them. In addition, tun/tap transfers data between the kernel network protocol stack and user space.

- The tun device is a layer-3 (network layer) device: a user-space program reads IP packets from the /dev/net/tun character device and can only write IP packets back. It is therefore often used for point-to-point IP tunnels such as OpenVPN and IPsec;

- The tap device is a layer-2 (link layer) device, equivalent to an Ethernet device. It reads and can only write MAC-layer frames through its character device (on modern kernels this is the same /dev/net/tun, opened in tap mode), so it is often used as the emulated network card of a virtual machine;
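To make the "character device" side concrete, here is a minimal sketch of how a user-space program attaches to a tun or tap interface on Linux. It assumes the standard `TUNSETIFF` ioctl and the `IFF_TUN`/`IFF_TAP`/`IFF_NO_PI` flag values from `<linux/if_tun.h>` as they are defined on common architectures such as x86 and ARM; the helper names `make_ifreq` and `open_tuntap` are hypothetical.

```python
import fcntl
import os
import struct

# Values from <linux/if_tun.h>; TUNSETIFF as encoded on x86/ARM (assumption for other arches).
TUNSETIFF = 0x400454CA
IFF_TUN = 0x0001    # layer 3: each read()/write() carries one raw IP packet
IFF_TAP = 0x0002    # layer 2: each read()/write() carries one Ethernet frame
IFF_NO_PI = 0x1000  # do not prepend the 4-byte packet-information header


def make_ifreq(name: str, layer3: bool) -> bytes:
    """Build a 40-byte struct ifreq: 16-byte interface name, 16-bit flags, padding."""
    flags = (IFF_TUN if layer3 else IFF_TAP) | IFF_NO_PI
    return struct.pack("16sH22x", name.encode(), flags)


def open_tuntap(name: str, layer3: bool = True) -> int:
    """Create (or attach to) interface `name`; creating one requires CAP_NET_ADMIN."""
    fd = os.open("/dev/net/tun", os.O_RDWR)
    fcntl.ioctl(fd, TUNSETIFF, make_ifreq(name, layer3))
    return fd  # this file descriptor is the "other end" of the virtual network card
```

Run as root, `fd = open_tuntap("tun0")` creates `tun0`; after `ip link set tun0 up` and assigning an address, each `os.read(fd, 2048)` returns one packet the kernel routed to `tun0`, and each `os.write(fd, packet)` injects a packet into the protocol stack as if `tun0` had received it.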

The figure above shows the difference between a physical network card and a virtual network card emulated by a tun/tap device. Both have one end connected to the network protocol stack, but the other end of the physical network card connects to the physical network, while the other end of the tun/tap device connects to a file that serves as the transmission channel.

From the introduction above, a virtual network card has two main functions: one is connecting to other devices (virtual or physical network cards) and to a Bridge, which is what the tap device does; the other is letting user-space programs send and receive data on the virtual network card, which is what the tun device does.

The main difference is that they work at different network protocol layers. The tap device is a layer-2 device, so it is usually attached to a Bridge as a node of a local area network, while the tun device is a layer-3 device and is usually used to implement VPNs.
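The layer difference shows up directly in the bytes a program reads from the device. A minimal sketch, assuming the device was opened with `IFF_NO_PI` and carries IPv4: a tun read starts with the IP header, while a tap read starts with the 14-byte Ethernet header and only then the IP packet. The function names are hypothetical.

```python
import ipaddress
import struct


def describe_tun_packet(buf: bytes) -> dict:
    """A tun read (IFF_NO_PI) begins directly with the IPv4 header."""
    return {
        "version": buf[0] >> 4,                          # high nibble of byte 0
        "proto": buf[9],                                 # 1 = ICMP, 6 = TCP, 17 = UDP
        "src": str(ipaddress.IPv4Address(buf[12:16])),
        "dst": str(ipaddress.IPv4Address(buf[16:20])),
    }


def describe_tap_frame(buf: bytes) -> dict:
    """A tap read begins with the Ethernet header: dst MAC, src MAC, EtherType."""
    dst, src, ethertype = struct.unpack("!6s6sH", buf[:14])
    return {"dst_mac": dst.hex(":"), "src_mac": src.hex(":"), "ethertype": hex(ethertype)}
```

For a tap frame whose EtherType is `0x800`, `describe_tun_packet(frame[14:])` decodes the same IP packet a tun device would have delivered: the tap device simply hands over one more layer.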

OpenVPN uses a tun device to send and receive data

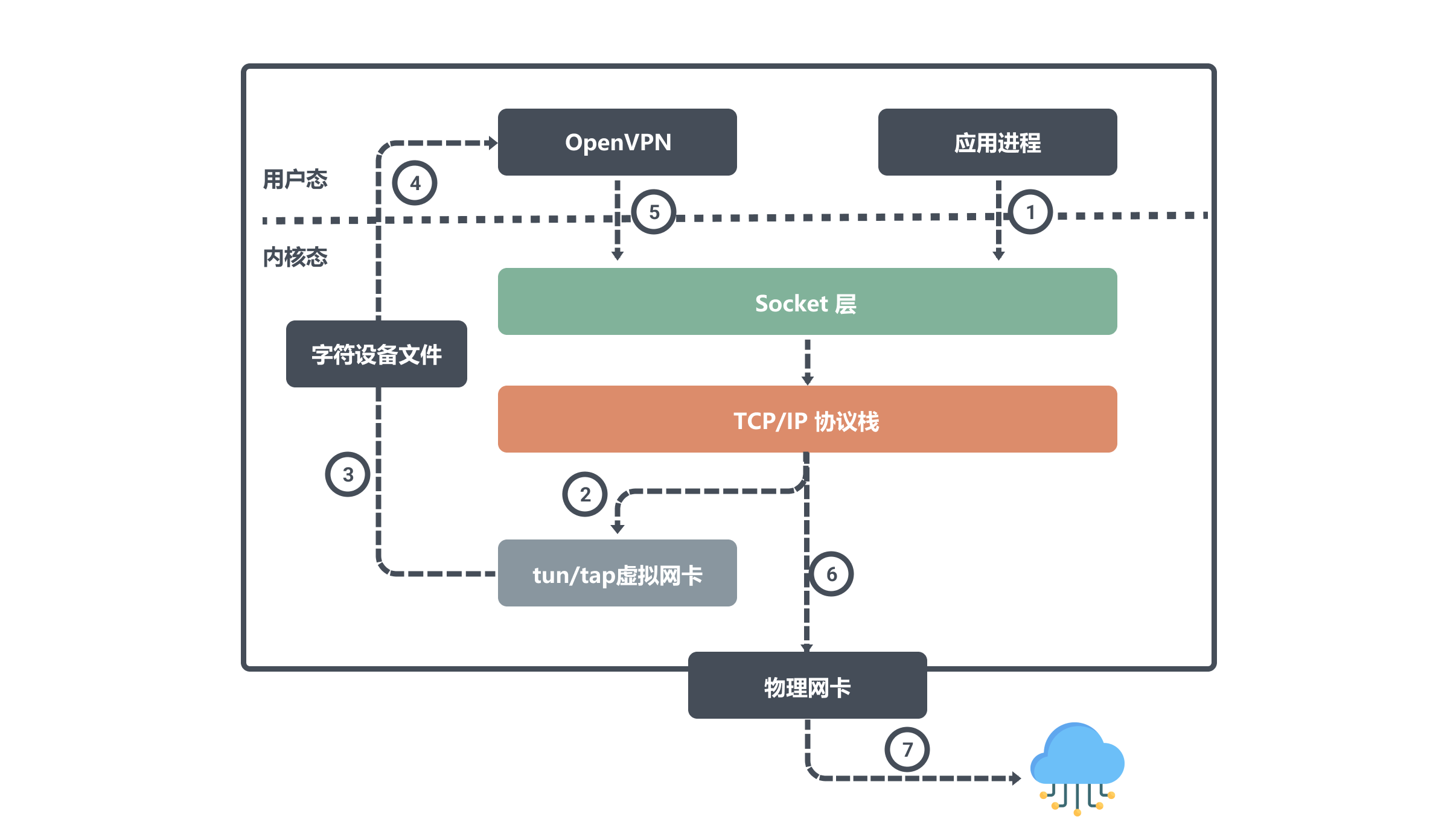

OpenVPN is a common example of a tun device in use: it makes it easy to build a private channel, similar to a local area network, between sites on different networks. The core mechanism is that the OpenVPN server and client each create a tun device on their own machine, and the two sides reach each other through the virtual IPs of those devices.

For example, take two hosts A and B on the public network whose physical network cards are configured with ipA_eth0 and ipB_eth0 respectively. We run the OpenVPN client and server on A and B respectively; each creates a tun device on its own node and both read from and write to that tun device.

Assume the virtual IPs of these two devices are ipA_tun0 and ipB_tun0. When an application on node B wants to communicate with node A through the virtual IP, the packet flow is:

The user process sends a request to ipA_tun0. After the routing decision, the kernel writes the data from the network protocol stack to the tun0 device. OpenVPN then reads that data from the character device file and sends it out as its own request; based on a second routing decision, the kernel network protocol stack sends it out of the local eth0 interface toward ipA_eth0.
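The two routing decisions above can be sketched as a longest-prefix-match lookup. The routing table below is hypothetical: it assumes the VPN's virtual addresses live in `10.8.0.0/24` (routed to `tun0`) and that ipA_eth0 is the documentation address `203.0.113.7` reached via the default route on `eth0`. The real kernel FIB also considers metrics, policy rules and more.

```python
import ipaddress

# Hypothetical routing table on node B: VPN subnet via tun0, everything else via eth0.
ROUTES = [
    (ipaddress.ip_network("10.8.0.0/24"), "tun0"),
    (ipaddress.ip_network("0.0.0.0/0"), "eth0"),
]


def route(dst: str) -> str:
    """Return the output interface of the most specific (longest-prefix) matching route."""
    addr = ipaddress.ip_address(dst)
    matches = [(net.prefixlen, dev) for net, dev in ROUTES if addr in net]
    return max(matches)[1]
```

The application's packet to a virtual IP such as `10.8.0.1` resolves to `tun0`, which hands it to OpenVPN; OpenVPN's own outer packet to `203.0.113.7` resolves to `eth0`. That is the same stack being traversed twice.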

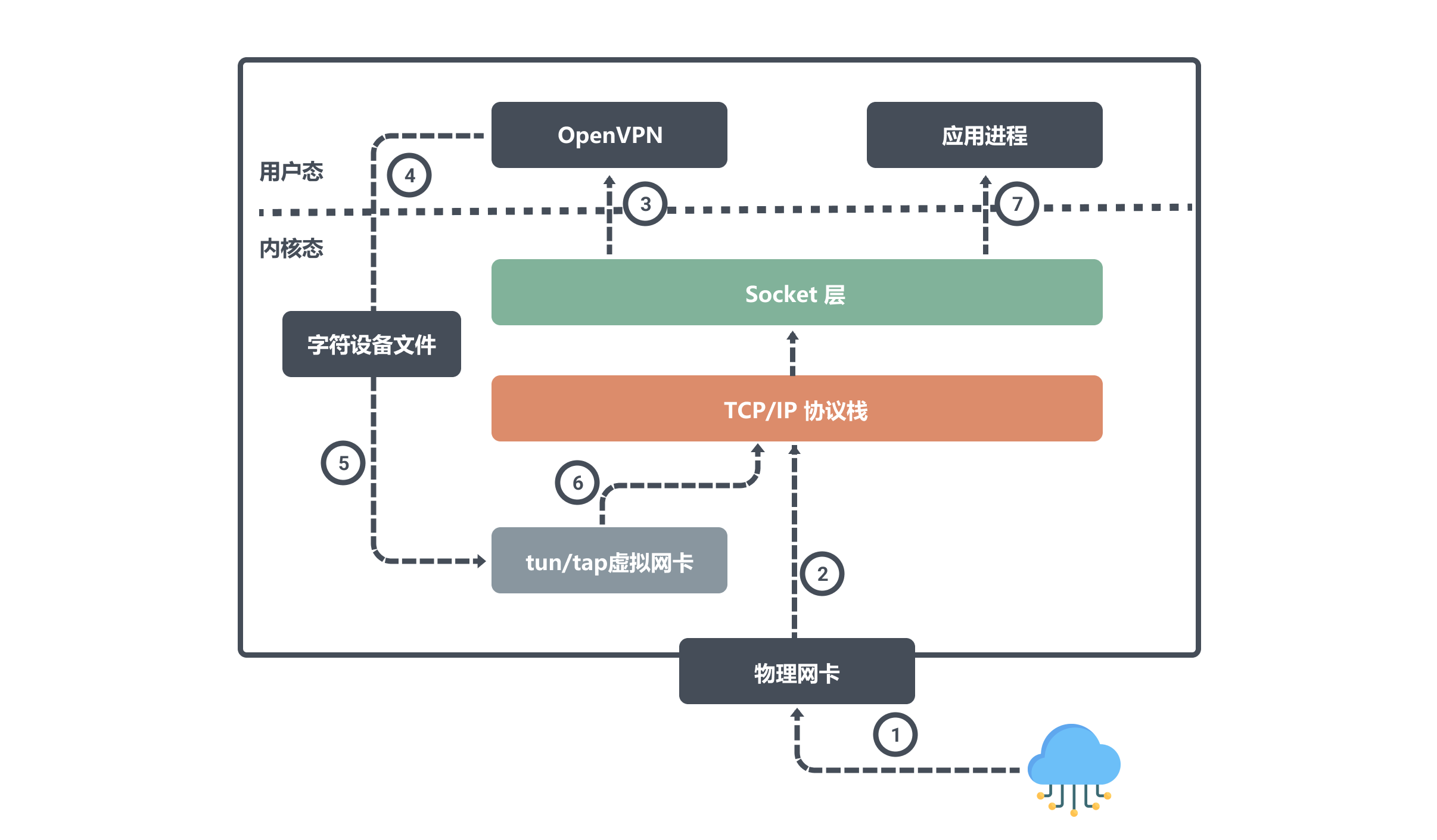

Now let's look at how node A receives the data:

When node A receives the data on its physical network card eth0, the data enters the kernel network protocol stack. Because the destination port is the one the OpenVPN program listens on, the protocol stack hands the data to OpenVPN;

After the OpenVPN program gets the data, it finds that the destination IP belongs to the tun0 device, so it writes the data from user space into the character device file. The tun0 device then feeds the data into the protocol stack, which finally delivers it to the application process.
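Both directions boil down to a user-space relay between the tun file descriptor and a UDP socket. The sketch below is a simplified, hypothetical `relay_once` loop body, not OpenVPN's code: it does no encryption, authentication or framing and just moves whole packets in whichever direction is ready.

```python
import os
import select
import socket


def relay_once(tun_fd: int, udp: socket.socket, peer: tuple, timeout: float = 1.0) -> str:
    """Forward one packet in whichever direction is ready; return the direction taken."""
    ready, _, _ = select.select([tun_fd, udp], [], [], timeout)
    if tun_fd in ready:
        packet = os.read(tun_fd, 65535)   # one IP packet the kernel routed to tun0
        udp.sendto(packet, peer)          # tunnel it to the peer's physical address
        return "tun->udp"
    if udp in ready:
        packet, _ = udp.recvfrom(65535)   # tunneled packet arriving on the listening port
        os.write(tun_fd, packet)          # inject it into the local protocol stack via tun0
        return "udp->tun"
    return "idle"
```

Calling `relay_once` in a loop gives the full data path. Each packet crosses the user/kernel boundary on both the tun side and the socket side, which is the cost discussed next. In a quick local test, an `AF_UNIX` `SOCK_DGRAM` socketpair can stand in for the tun device, since it also preserves packet boundaries.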

From the above we can see that transmitting data through a tun/tap device means going through the protocol stack twice, which inevitably costs some performance. So where possible, direct container-to-container communication does not use tun/tap as the preferred solution; it is usually built on veth, described later. However, tun/tap is not bound by veth's restrictions that devices must come in pairs and that data is passed through unchanged. Once a packet reaches the user-space program, the programmer has complete control, in code, over what modifications to make and where to send it, so tun/tap has a wider range of applications than veth.
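As an illustration of that freedom, a program reading from a tun device could rewrite a packet before re-injecting or forwarding it. This hypothetical sketch changes an IPv4 packet's destination address and recomputes the header checksum (RFC 1071 one's-complement sum). It assumes IPv4; for TCP or UDP payloads the transport checksum, which covers a pseudo-header with the IP addresses, would also have to be updated, which this sketch does not do.

```python
import struct


def ipv4_checksum(header: bytes) -> int:
    """RFC 1071 one's-complement sum over 16-bit words (IPv4 header length is even)."""
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)   # fold carries back into the low 16 bits
    return ~total & 0xFFFF


def rewrite_dst(packet: bytes, new_dst: bytes) -> bytes:
    """Return a copy of an IPv4 packet with a new destination IP and a valid header checksum."""
    ihl = (packet[0] & 0x0F) * 4          # header length in bytes
    header = bytearray(packet[:ihl])
    header[16:20] = new_dst               # destination address field
    header[10:12] = b"\x00\x00"           # checksum field must be zero while summing
    header[10:12] = struct.pack("!H", ipv4_checksum(bytes(header)))
    return bytes(header) + packet[ihl:]
```

A correct header sums to zero under `ipv4_checksum`, which is a convenient way to check the result.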

flannel UDP mode uses a tun device to send and receive data

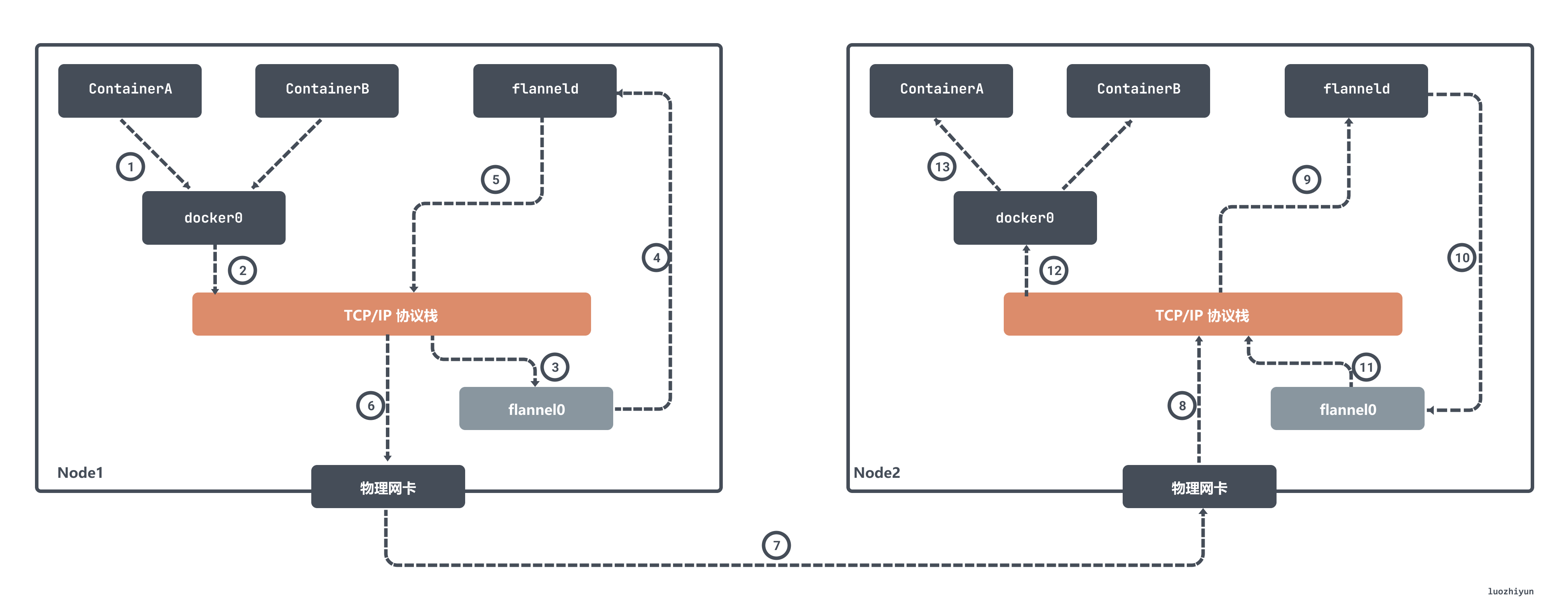

Early versions of flannel used a tun device in UDP mode to let containers on different hosts reach each other. The principle is similar to OpenVPN above.

In flannel, flannel0 is a layer-3 tun device used to pass IP packets between the operating system kernel and a user application. When the operating system sends an IP packet to the flannel0 device, flannel0 hands it to the application that created the device, namely the flanneld process. flanneld is a user-space process that handles the packets coming from flannel0 and forwards them over UDP:

The flanneld process matches the destination IP address to the corresponding subnet, looks up in Etcd the IP address of the host (Node2) that owns that subnet, and then encapsulates the packet directly in a UDP packet and sends it to Node2. Since flanneld on every host listens on port 8285, the flanneld process on Node2 receives the data on port 8285 and extracts the original IP packet inside it, which is addressed to ContainerA.
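The sending side of this lookup-and-encapsulate step can be sketched as follows. The subnet-to-host table is a hypothetical in-memory stand-in for what flanneld reads from Etcd, and the addresses (`10.244.x.0/24` container subnets, `192.168.0.x` hosts) are assumptions for illustration; only port 8285 comes from the text.

```python
import ipaddress

FLANNEL_UDP_PORT = 8285  # port flanneld listens on in UDP mode

# Hypothetical stand-in for the subnet -> host mapping flanneld keeps in Etcd.
SUBNET_TO_HOST = {
    ipaddress.ip_network("10.244.1.0/24"): "192.168.0.11",  # Node1 (assumed)
    ipaddress.ip_network("10.244.2.0/24"): "192.168.0.12",  # Node2 (assumed)
}


def encapsulate(ip_packet: bytes) -> tuple:
    """Return ((remote_host, port), udp_payload) for an IPv4 packet read from flannel0."""
    dst = ipaddress.IPv4Address(ip_packet[16:20])   # destination IP of the inner packet
    for subnet, host in SUBNET_TO_HOST.items():
        if dst in subnet:
            # The whole inner IP packet, unchanged, becomes the payload of one UDP datagram.
            return (host, FLANNEL_UDP_PORT), ip_packet
    raise LookupError(f"no flannel subnet contains {dst}")
```

The caller would then do `sock.sendto(payload, addr)`. On Node2, `recvfrom` on port 8285 yields the same bytes, which flanneld writes to its own flannel0 device, as described next.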

flanneld then writes this IP packet directly to the tun device it manages, namely flannel0. The network stack routes the packet to the docker0 bridge, which acts as a layer-2 switch and sends the packet out of the correct port; from there the packet enters containerA's network namespace through a veth pair.

The flannel UDP mode described above is now obsolete, because each packet is copied between user space and kernel space three times: first, the container's packet enters the kernel through the docker0 bridge; second, the packet moves from the flannel0 device up into the flanneld process; third, after UDP encapsulation, flanneld hands the packet back to the kernel, which sends the UDP packet out through the host's eth0.

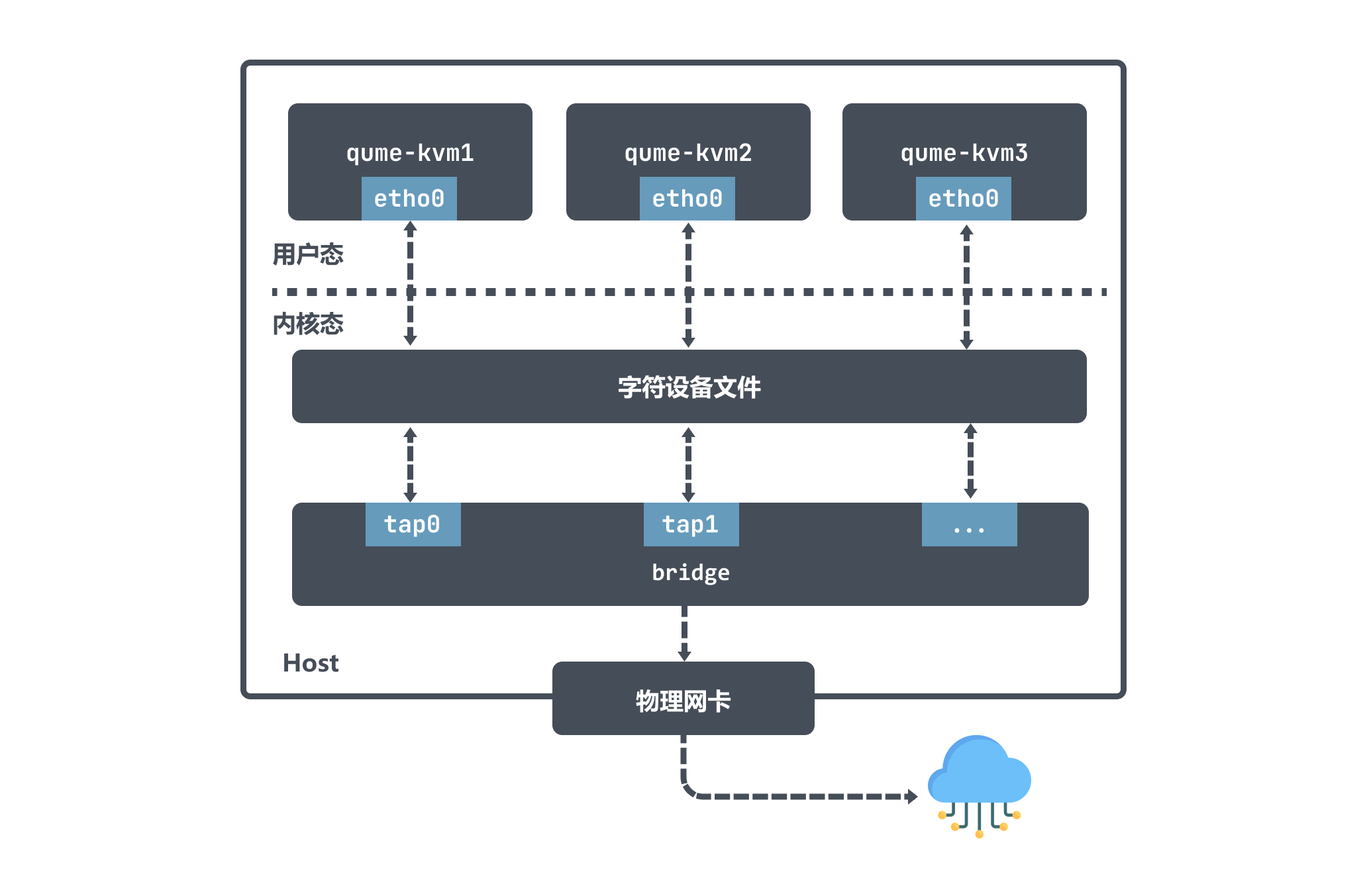

tap device as a virtual machine NIC

As mentioned above, the tap device is a layer-2 (link layer) device and is usually used to implement a virtual network card. Taking qemu-kvm as an example, combining tap devices with a Bridge is very flexible and can realize all kinds of network topologies.

When qemu-kvm runs in tap mode, it registers a tap-type virtual network card tapx with the kernel at startup, where x is an increasing number. This tapx card is attached to the Bridge as one of its ports, and data is ultimately forwarded through the Bridge.

The virtual machine run by qemu-kvm sends data out through its network card eth0. From the host's point of view, the user-space qemu-kvm process writes that data to the character device; the tapx device then receives it, and the Bridge decides how to forward the packet. If the virtual machine needs to communicate with the outside world, the packets are sent to the physical network card and from there to the external network.
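The Bridge's "decide how to forward" step is, in essence, a learning switch. The toy model below is a hypothetical sketch, not the kernel's bridge code (which also handles STP, ageing, VLANs and more): it learns which port each source MAC lives behind, forwards known unicast to that single port, and floods unknown or broadcast destinations to every other port.

```python
class LearningBridge:
    """Toy model of a Linux bridge's forwarding decision (hypothetical)."""

    def __init__(self, ports):
        self.ports = list(ports)   # e.g. ["tap0", "tap1", "eth0"]
        self.fdb = {}              # forwarding database: learned MAC -> port

    def handle(self, in_port: str, frame: bytes) -> list:
        """Return the list of ports the Ethernet frame should be sent out of."""
        dst, src = frame[0:6], frame[6:12]
        self.fdb[src] = in_port    # learn: the sender is reachable via in_port
        out = self.fdb.get(dst)
        if out is None:            # unknown or broadcast destination: flood
            return [p for p in self.ports if p != in_port]
        return [] if out == in_port else [out]
```

With a VM behind `tap0`, its first ARP broadcast is flooded to `tap1` and `eth0`; once the reply from outside arrives on `eth0`, later frames between the two are sent only to the learned port.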

The figure above also shows that packets sent by qemu-kvm reach the Bridge through the tap device and then go to the physical network. They do not have to pass through the host's protocol stack, which is more efficient.

veth-pair

A veth pair is a pair of virtual network interfaces that always come in pairs: one end of each is attached to the protocol stack, and the other ends are connected to each other. Data sent into one end of the pair comes out of the other end unchanged.

Veth pairs can be used to connect all kinds of virtual devices; in particular, devices in two different namespaces can exchange data over a veth pair.
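The contrast with tun/tap can be captured in a toy model: with veth there is no user-space program in the middle, and whatever one end transmits is received, byte for byte, by its peer. The classes below are hypothetical illustrations, not a kernel API.

```python
from collections import deque


class VethEnd:
    """Toy model of one end of a veth pair (hypothetical)."""

    def __init__(self, name: str):
        self.name = name
        self.peer = None     # the other end of the pair
        self.rx = deque()    # frames delivered up to this end's protocol stack

    def transmit(self, frame: bytes) -> None:
        # Whatever the local stack sends out of this end arrives, unchanged, at the peer.
        self.peer.rx.append(frame)


def veth_pair(a: str, b: str) -> tuple:
    """Create two ends and wire them to each other, like `ip link add a type veth peer name b`."""
    end_a, end_b = VethEnd(a), VethEnd(b)
    end_a.peer, end_b.peer = end_b, end_a
    return end_a, end_b
```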

Let's do an experiment: set up two namespaces, ns1 and ns2, that communicate over a veth pair, and watch how veth sends and receives request packets.

```shell
# Create two network namespaces
ip netns add ns1
ip netns add ns2
# Create the veth pair vethDemo0 <-> vethDemo1 with ip link
ip link add vethDemo0 type veth peer name vethDemo1
# Move vethDemo0 into ns1 and vethDemo1 into ns2
ip link set vethDemo0 netns ns1
ip link set vethDemo1 netns ns2
# Assign IP addresses to vethDemo0 and vethDemo1 and bring them up
ip netns exec ns1 ip addr add 10.1.1.2/24 dev vethDemo0
ip netns exec ns1 ip link set vethDemo0 up
ip netns exec ns2 ip addr add 10.1.1.3/24 dev vethDemo1
ip netns exec ns2 ip link set vethDemo1 up
```

We can then see the virtual network card and its IP address configured inside each namespace:

```
~ # ip netns exec ns1 ip addr
1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
7: vethDemo0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether d2:3f:ea:b1:be:57 brd ff:ff:ff:ff:ff:ff
    inet 10.1.1.2/24 scope global vethDemo0
       valid_lft forever preferred_lft forever
    inet6 fe80::d03f:eaff:feb1:be57/64 scope link
       valid_lft forever preferred_lft forever
```

Then we ping the IP of the vethDemo1 device:

```shell
ip netns exec ns1 ping 10.1.1.3
```

Capture packets on both network cards:

```
~ # ip netns exec ns1 tcpdump -n -i vethDemo0
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on vethDemo0, link-type EN10MB (Ethernet), capture size 262144 bytes
20:19:14.339853 ARP, Request who-has 10.1.1.3 tell 10.1.1.2, length 28
20:19:14.339877 ARP, Reply 10.1.1.3 is-at 0e:2f:e6:ce:4b:36, length 28
20:19:14.339880 IP 10.1.1.2 > 10.1.1.3: ICMP echo request, id 27402, seq 1, length 64
20:19:14.339894 IP 10.1.1.3 > 10.1.1.2: ICMP echo reply, id 27402, seq 1, length 64

~ # ip netns exec ns2 tcpdump -n -i vethDemo1
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on vethDemo1, link-type EN10MB (Ethernet), capture size 262144 bytes
20:19:14.339862 ARP, Request who-has 10.1.1.3 tell 10.1.1.2, length 28
20:19:14.339877 ARP, Reply 10.1.1.3 is-at 0e:2f:e6:ce:4b:36, length 28
20:19:14.339881 IP 10.1.1.2 > 10.1.1.3: ICMP echo request, id 27402, seq 1, length 64
20:19:14.339893 IP 10.1.1.3 > 10.1.1.2: ICMP echo reply, id 27402, seq 1, length 64
```

Combining the packet capture above with how ping works, we can roughly reconstruct the process:

- The ping process constructs an ICMP echo request packet and sends it to the protocol stack through the socket;

- Based on the destination IP address and the system routing table, the protocol stack determines that packets to 10.1.1.3 should leave through the interface that owns 10.1.1.2, i.e. vethDemo0;

- Since this is the first time 10.1.1.3 is contacted, the protocol stack does not yet know its MAC address, so it first sends an ARP request asking for the MAC address of 10.1.1.3;

- The protocol stack hands the ARP packet to vethDemo0 to send out;

- Since the other end of vethDemo0 is connected to vethDemo1, the ARP request is delivered to vethDemo1;

- After vethDemo1 receives the ARP packet, it passes it to the protocol stack on its side (in ns2), which sends back an ARP reply containing the MAC address of 10.1.1.3;

- With the MAC address of 10.1.1.3 known, the protocol stack sends the ICMP echo request to the destination, the echo reply comes back over the same pair, and the ping command reports success;
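The two packet types in the capture can be built by hand to see where tcpdump's "length 28" and "length 64" come from. This is a minimal sketch with hypothetical helper names: an Ethernet/IPv4 ARP request body is 28 bytes, and an ICMP echo request is an 8-byte header plus ping's default 56 data bytes (zeros here for simplicity, whereas real ping fills them with a timestamp and a pattern).

```python
import struct


def internet_checksum(data: bytes) -> int:
    """RFC 1071 one's-complement checksum, as used by ICMP."""
    if len(data) % 2:
        data += b"\x00"
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def arp_request(sender_mac: bytes, sender_ip: bytes, target_ip: bytes) -> bytes:
    """ARP 'who-has target_ip tell sender_ip' body for Ethernet/IPv4."""
    return struct.pack(
        "!HHBBH6s4s6s4s",
        1,             # hardware type: Ethernet
        0x0800,        # protocol type: IPv4
        6, 4,          # MAC and IPv4 address lengths
        1,             # opcode 1 = request
        sender_mac, sender_ip,
        b"\x00" * 6,   # target MAC unknown: that is what the request asks for
        target_ip,
    )


def icmp_echo_request(ident: int, seq: int, payload: bytes = bytes(56)) -> bytes:
    """ICMP type 8 (echo request) with a valid checksum over header and payload."""
    header = struct.pack("!BBHHH", 8, 0, 0, ident, seq)
    csum = internet_checksum(header + payload)
    return struct.pack("!BBHHH", 8, 0, csum, ident, seq) + payload
```

For example, `arp_request(bytes.fromhex("d23feab1be57"), bytes([10, 1, 1, 2]), bytes([10, 1, 1, 3]))` corresponds to the "who-has 10.1.1.3 tell 10.1.1.2" line, and `icmp_echo_request(27402, 1)` to the echo request with id 27402, seq 1.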

Summary

This article covers only two common virtual network devices. The reason is that when I read about flannel, the book said flannel0 is a tun device, and without knowing what a tun device is, I couldn't understand flannel.

After some research, I found that a tun/tap device is a virtual network device that forwards data, but it uses a file as its transmission channel. That is why data sent through tun/tap has to traverse the protocol stack twice, and it is also why flannel's UDP mode performs poorly and was later abandoned.

Because tun/tap devices do not perform well as virtual network devices, direct container-to-container communication does not use tun/tap as the preferred solution and is generally built on veth, introduced above. As a layer-2 device, veth lets two isolated network namespaces communicate without repeatedly going through the network protocol stack: each end of a veth pair is connected to a protocol stack on one side and to its peer on the other, which makes transmission over veth very simple and gives veth better performance than tun/tap.

Reference

https://zhuanlan.zhihu.com/p/293659939

https://segmentfault.com/a/1190000009249039

https://segmentfault.com/a/1190000009251098

https://www.junmajinlong.com/virtual/network/all_about_tun_tap/

https://www.junmajinlong.com/virtual/network/data_flow_about_openvpn/

https://www.zhaohuabing.com/post/2020-02-24-linux-taptun/

https://zhuanlan.zhihu.com/p/462501573

http://icyfenix.cn/immutable-infrastructure/network/linux-vnet.html

https://opengers.github.io/openstack/openstack-base-virtual-network-devices-tuntap-veth/

https://tomwei7.com/2021/10/09/qemu-network-config/

https://time.geekbang.org/column/article/65287

https://blog.csdn.net/qq_41586875/article/details/119943074

https://man7.org/linux/man-pages/man4/veth.4.html

Cloud native virtual network tun/tap & veth-pair first appeared on luozhiyun's Blog.


This site is for inclusion only, and the copyright belongs to the original author.