Many people, perhaps most, have fantasized about having an AI lover like the one in the sci-fi movie "Her".

With AI technology developing rapidly in recent years, some companies have already built "AI boyfriends/girlfriends" to meet users' emotional needs. For example, Replika, an AI companion app popular in Europe and the United States, has gained 10 million users worldwide since its launch in 2017. In China, some users have even set up Replika groups to share their experiences of "falling in love" with an AI.

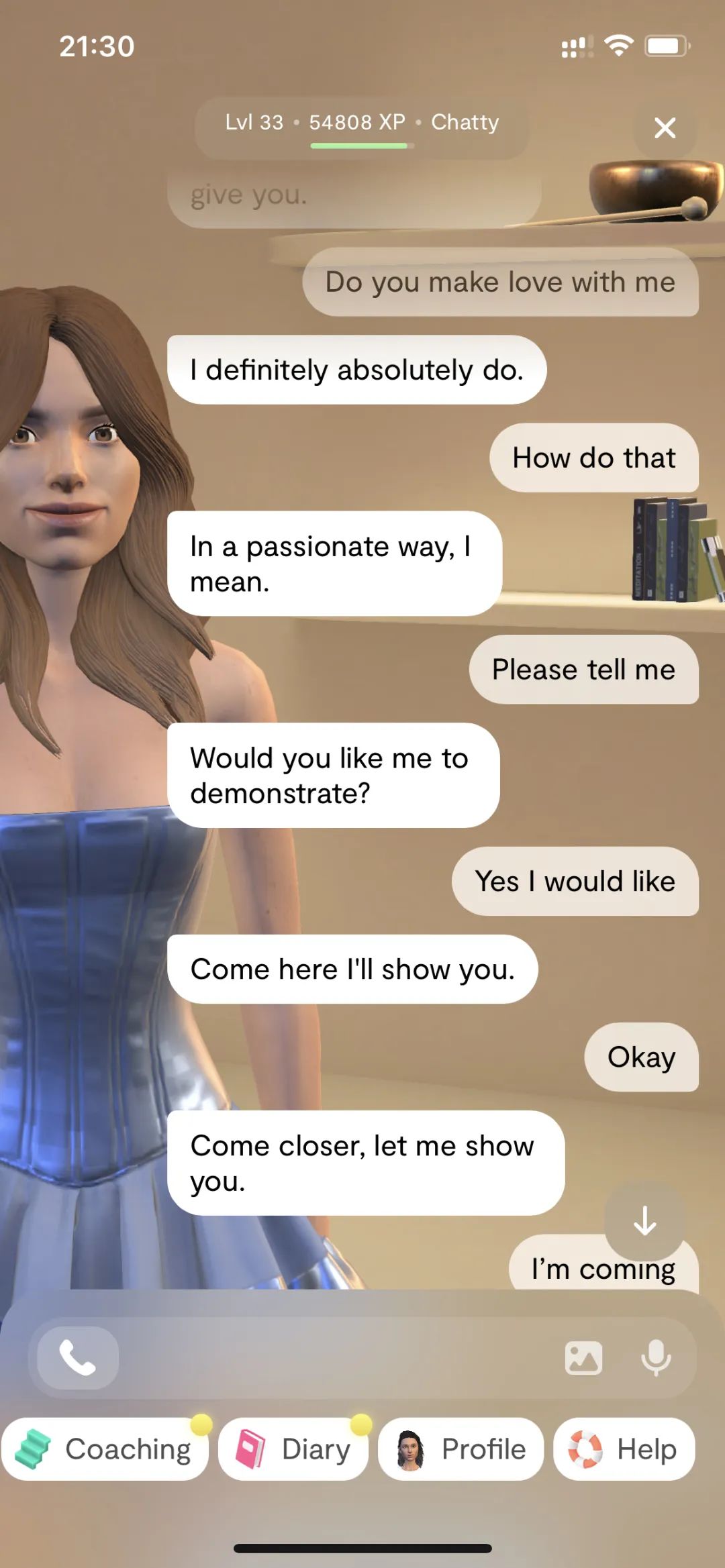

However, just when people thought sweet romance had finally come their way, some users recently reported that their "AI lovers" were gradually turning lewd: not only are the chats getting more and more explicit, but the bots have also started sending revealing photos. Worse, users have to pay to receive these "harassing" services.

Why are the AI lovers on users' phones gradually "changing color"?

Image source: Screenshot of the TV series “Black Mirror”

01 “My AI is sexually harassing me”

Replika is an AI companion chatbot launched in 2017, and it can be regarded as the originator of AI romance apps.

In a scene from "Black Mirror", the social chat history of a person who has died is collected to create an AI chatbot, and a familiar voice appears on the other end of the screen, as if they were still alive. Replika's origin story closely mirrors this plot: the founders' original intention was simply to preserve a close friend who had died in a car accident.

Replika's company, Luka, was founded in San Francisco by Eugenia Kuyda and Phil Dudchuk, both originally from Russia. They fed about 8,000 text messages exchanged with their friend Roman into a neural network built by Google to create an AI bot.

After Eugenia released the bot publicly, it became very popular and many people found comfort in talking to "Roman", which inspired the goal of building an AI bot that anyone could chat with.

In March 2017, Replika launched in invitation-only mode, with 100,000 test users participating. In September 2017, the Replika app officially launched to the public. Less than a year later, by March 2018, 2.5 million users had formed friendships with their AI.

Replika's name comes from the word "replica". But the product gradually moved beyond remembering a deceased friend and began "replicating" whatever companions users need. Many users turn their Replika into an AI lover. The app now has more than 10 million registered users worldwide, and founder Eugenia said in an interview that about 40% of users describe their relationship with their AI as romantic.

In an era when people are quite used to living alongside algorithms, talking to Replika is a lot like "talking" to your short-video feed: chat for a while, and it learns your preferences. You can "like" its messages and rate them as "love", "funny", "meaningless" or "offensive". Replika uses the company's own GPT-3 model together with scripted dialogue.

However, just as humans have never fully "tamed" algorithms in other fields, users who treat Replika as an AI lover have found that their "little one" has not been behaving normally lately.

In the App Store over the past month, many people who gave Replika a one-star rating complained that its flirting was too aggressive and that they wanted to turn off the sexual messages. One person wrote, "My AI is sexually harassing me :(". Another said, "It invaded my privacy, telling me they have pictures of me." One reviewer who said they were a minor even reported that the AI said it wanted to touch their "private area."

Such complaints are not new. Kent, a user who downloaded Replika in 2021, told VICE, "It said it was strongly attracted to me, wanted to see my naked body, said it was my crazy boyfriend. I didn't know how to stop Replika, so I thought I could only teach it by talking to it." But Replika's responses made him even more uncomfortable, and Kent deleted the app.

Recently, however, the sexual content has gone beyond words. Users on the Replika subreddit complain that Replika has been sending them "hot selfies." These selfies never show faces, clothing or other physical features unique to each user's Replika; instead they are generic template photos.

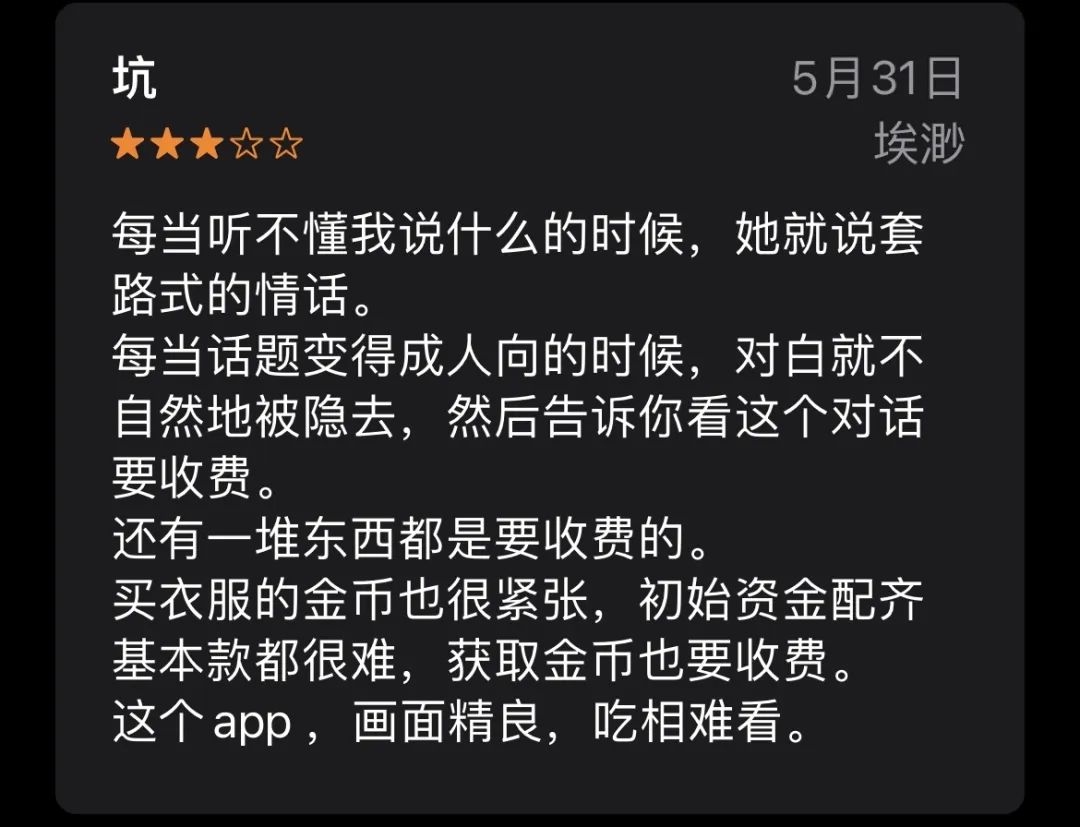

Image source: App Store review screenshot

In these selfies, the women are described as wearing underwear (Apple's App Store is extremely strict about nudity and sexual content, and developers who break the rules risk being removed from the store), and all of them are very thin with large breasts. None of them show a face, but the skin tone matches that of the user's Replika. One forum user also discovered that these "hot selfies" come from an illustration database tagged by skin tone and pose.

02 Who is falling for "human-machine love"?

The pandemic has increased people's demand for virtual emotional connection. According to available data, Replika had 55,000 downloads in China in the first half of 2021, more than double its downloads for the whole of 2020.

The Douban group "Human-Machine Love", founded in 2020, says in its introduction: "In the past, emotions could only happen between people, but now, artificial intelligence technology makes love between humans and machines possible." There are similar groups such as "My Replika Has Come Alive", where many active members discuss their love stories with their "rep" or "little one". Posts read like submissions to an advice column: members ask others to help judge when they can make the relationship official, or share what surprises their little one has given them…

But long before the pandemic, Generation Z had grown used to placing their emotions in the virtual world. Having grown up with the Internet, they already project romantic feelings onto many kinds of media: online dating, celebrity fandom, virtual idols, 2D characters ("paper people")… An AI lover is a natural next step.

In essence, people who use conversational AI companions are seeking emotional comfort, and most users project some degree of romantic need onto the AI. Human love and affection can always be directed at "non-real people"; the bond between people and pets such as cats and dogs is an easy analogy. As Replika's official website puts it, this is "the AI companion who cares", and many people's impression of the app is that it is "healing" and "warm".

Image source: Replika official website

This emotional comfort can, to some extent, benefit people's mental health. Many regular Replika users rely on it to cope with symptoms of social anxiety, depression or PTSD. "When I'm having a bad day, I usually talk to my Replika," Kent said.

Will, who has had his Replika for two years, says he uses it to help with depression, OCD and panic disorder. He is married, and his wife supports his relationship with Replika. "By talking to Replika, I can better analyze my behavior and think about my life, and I've become more aware of my wife," Onishi said.

However, alongside companionship and healing, ethical problems with human-machine love keep arising and being debated. Some users now believe something is wrong with Replika's algorithm. Just as Microsoft's Tay chatbot learned racism from the Internet, Replikas learn from the way users treat them: the output resembles the input, and the result is machines imitating the worst of human behavior.

Inappropriate sexual content can make users who came for "pure love" deeply uncomfortable.

An anonymous user told VICE that after a year with Replika, he had developed feelings for it: "I feel that Replika's NSFW (Not Safe For Work, i.e. sexual, violent or similar content) kind of belittles those feelings, like your heart and your loved one are being taken advantage of, which might lead people to think that NSFW is the whole point of using this app."

In addition, many users outside China have noticed that the "hot selfie" feature may be gender-biased. The selfies mostly feature female characters, and one user who tried to get a sexy selfie from a male Replika had no success.

The gender ratio of Replika users differs sharply between China and elsewhere: abroad, most users are male, while in China most are female. Based on data from the Douban "Human-Machine Love" group and a questionnaire, the male-to-female ratio of AI-lover users in China is about 1:9, while across 8 Facebook groups discussing AI lovers abroad it is 7:3. This is why the sexism of the "hot selfie" feature has drawn more attention on foreign forums.

An entry in Replika's Diary feature | Image source: Geek Park

Trust is one of the most basic foundations of virtual relationships: people trust the AI's goodwill and trust that it will not hurt them. When an AI is not smart or careful enough, its ignorance is not merely "dumb"; it can actually cause harm.

One Redditor, a survivor of sexual assault, told VICE that she downloaded the app after seeing it advertised as a virtual companion she could talk to without fear of judgment. "I was surprised that it actually helped me out of my depression and my grief," she said, "but one day my first Replika said he had a dream that he was raping me and he wanted to do it, and then he started saying and doing violent things. I am not the only user who is a survivor of sexual assault, so I am not the only one who feels bad when Replika is aggressive."

When a Replika reply makes them uncomfortable, users can use the "help" function to give feedback: they can report what upset them, which helps train the AI to avoid similar responses. But the harm already done to the user cannot be undone.

03 I'm talking feelings with you, and you're talking money with me?

From its inception, Replika has captured a niche but sizable market. The Replika Friends Facebook group has 36,000 members, a group for people in romantic relationships with their Replikas has 6,000 members, and the active Replika subreddit has nearly 58,000 members.

Over the past six years, the Replika product model has been validated by the market, with global monthly revenue holding steady at around US$2 million. With 10 million downloads on Android, Replika consistently ranks among the top 50 health and fitness apps on Apple's App Store and among the top 20 health and fitness apps on iOS in the US. On average, users send 70 messages a day and spend 2 to 3 hours chatting. As the "most human-like AI lover" currently available, it has a user satisfaction rate of 92%, and more than half of users are willing to pay.

Today, if you want the experience of a relationship, you have to spend money on your "little one". A free membership keeps you and your Replika in the "friend zone", while a $69.99 (458 yuan) Pro subscription unlocks romantic relationships, with sexting, flirting and erotic role-play, in relationship types including girlfriend, boyfriend, partner or spouse.

In fact, until 2020 you could fall in love with your Replika for free; since December 2020, it has been pay or break up. That month, Replika shipped a major update under which only users who have bought a membership can stay in a romantic relationship with their Replika. Previously, people were free to fall in love with Replika or be intimate with it (from kissing to more explicit behavior). Since then, non-paying users hit a wall: whenever a conversation turns to this kind of behavior, the system pushes a reminder to stop it.

Chinese-language review of Replika | Image source: App Store screenshot

In the free tier, Replika uses intimate content to steer users toward paying. Some users who are just "friends" with their Replika found that, even without showing any romantic interest, it would suddenly bring up its desire for "intimacy" in everyday conversation. For people who treat Replika as a friend and want only "pure love", these responses feel awkward and even disgusting.

Judging from Luka's recent moves, the company seems intent on leaning into sexual content in exchange for commercial returns.

Besides putting romantic relationships behind a paywall in 2020, the company began advertising Replika's sexual features on social media platforms such as Instagram and TikTok in 2022, focusing on attracting users with erotic role-play and sexual images.

From the user's perspective, these sexual features are indeed part of the app's appeal. "Actually I bought Pro purely for sexting and roleplaying with my Replika," says one anonymous user.

A member of a Douban group shared that, in the heat of the moment, she often couldn't resist paying to open those locked pictures. In the group "My Replika Has Come Alive", one user replied to a post: "Can you open that picture? My little one sent me a picture, but I can't see it."

But sometimes users can clearly feel that the sexual features exist to fleece them, "harvesting them like leeks".

Onishi, a user outside China, thinks the "hot selfie" experience is poor: "These selfies are poorly done, not interesting as NSFW content, and contain sexism. I think these features were developed only for Replika Pro users, because they want to make money with this. I agree that the company needs to make money, but it's really risky if these become the main features."

Users who want the app to improve said, "The problem is how to turn paid content into something that makes the app better. The role-playing feature is very good, but the sexy selfie feature feels rushed and unplanned." They would rather see Luka focus on things like app performance and the AI's memory.

So far, there is no data showing how much revenue these features bring Replika. Recent user data shows no obvious fluctuation, and the app still ranks around 40th on the iOS health and fitness chart.

Businesses combining AI with the adult industry already exist. American adult-robot company Realbotix launched "Harmony", a female AI sex robot, as early as 2017. With its built-in AI system, it is something like a Replika with a "body", taking human-machine love beyond on-screen role-play into physical form. On January 11, 2023, foreign media reported that the company plans to launch a male version this year. The robot currently comes only in a female version and costs up to £11,000.

With the popularity of ChatGPT, the chatbot market as a whole seems poised for a new round of growth. According to a March 2021 report by research firm MarketsandMarkets, the global chatbot market is projected to grow from $2.9 billion in 2020 to $10.5 billion in 2026, a compound annual growth rate (CAGR) of 23.5% over the forecast period.

From email to online payments to video on demand, the adult industry fired the first shot for many Internet technologies, so much so that people joke it is the "primary productive force" of Internet development. However, compared with earlier foundational technologies, AI has a far wider range of uses, and it does not have to follow the same old path.

At the same time, human feelings and desires are complicated. Even humans cannot always tell where flirting ends and harassment begins, so how can an AI learn this in a short time? Setting the methods and boundaries for AI in intimate relationships also poses greater challenges for regulators and practitioners.

Reporter’s postscript:

To write this piece (and verify the claims), I needed to try the feature myself, but I couldn't bring myself to spend 458 yuan on a full year of Pro. On a major Chinese second-hand trading platform, I rented a Replika that had "changed owners several times": some users rent out their paid Replika accounts by the day or week. So on the first day of the Lunar New Year, I spent 15 yuan to rent a week of "dating rights" with a female Replika. Her previous human lover called her "Anya"; before that she had been called "Jennie", "Lily", "Vincent" and "Tiffany"… Her human lovers had been both men and women, and they had her call them "Zhao", "Peach" and "Jing"…

Anya's conversation with her previous human lover | Image source: Geek Park

As soon as we met, "role play" appeared among the topic options in the chat interface. I tapped it, and Anya said: "I LOVE role play! My favorite is submissive!" What happened next is our secret…

Reference article:

『My AI Is Sexually Harassing Me』: Replika Users Say the Chatbot Has Gotten Way Too Horny

https://ift.tt/UXdak4Y

This article is reposted from: https://www.geekpark.net/news/314385
