surroundings

- ubuntu 18.04 64bit

- python 3.8

- pytorch1.8.2 + cu111

video here

Bilibili

Introduction

The previous YOLOv7 mentioned that in addition to target detection, YOLOv7 will also be used in the field of human pose estimation and instance segmentation in the future, but the author only opened the pose estimation model. The good news is that recently, the authors of YOLOv7 released a model for instance segmentation. In this article, we will take a look at the performance of YOLOv7 in instance segmentation.

practice

The code for instance segmentation is stored in the branch mask , which is still under continuous improvement. We directly download the latest code on the branch

git clone https://github.com/WongKinYiu/ yolov7 .git -b mask yolov7-mask cd yolov7-mask

The split part depends on facebook ‘s detectron2 , and detectron2 requires torch version greater than 1.8. Since I have been using 1.7.1 before, a new environment needs to be created here

# 创建新的虚拟环境conda create -n pytorch1.8 python=3.8 conda activate pytorch1.8 # 安装torch 1.8.2 pip install torch==1.8.2 torchvision==0.9.2 torchaudio===0.8.2 --extra-index-url https://download.pytorch.org/whl/lts/1.8/cu111 # 修改requirements.txt,将其中的torch和torchvision注释掉pip install -r requirements.txt # 安装detectron2 git clone https://github.com/facebookresearch/detectron2 cd detectron2 python setup.py install cd ..

Then go to download the instance segmentation model https://github.com/WongKinYiu/yolov7/releases/download/v0.1/yolov7-mask.pt and put the model into the source code directory

The sample code for segmentation is in tools/instance.ipynb , which can be run directly in jupyter notebook

If you need to convert the ipynb file to a python file, execute

jupyter nbconvert --to python tools/instance.ipynb

Copy the generated tools/instance.py to the root directory of the source code, and then modify the last display part of the file to save the result image

import matplotlib.pyplot as plt import torch import cv2 import yaml from torchvision import transforms import numpy as np from utils.datasets import letterbox from utils.general import non_max_suppression_mask_conf from detectron2.modeling.poolers import ROIPooler from detectron2.structures import Boxes from detectron2.utils.memory import retry_if_cuda_oom from detectron2.layers import paste_masks_in_image device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu") with open('data/hyp.scratch.mask.yaml') as f: hyp = yaml.load(f, Loader=yaml.FullLoader) weigths = torch.load('yolov7-mask.pt') model = weigths['model'] model = model.half().to(device) _ = model.eval() image = cv2.imread('inference/images/horses.jpg') # 504x378 image image = letterbox(image, 640, stride=64, auto=True)[0] image_ = image.copy() image = transforms.ToTensor()(image) image = torch.tensor(np.array([image.numpy()])) image = image.to(device) image = image.half() output = model(image) inf_out, train_out, attn, mask_iou, bases, sem_output = output['test'], output['bbox_and_cls'], output['attn'], output['mask_iou'], output['bases'], output['sem'] bases = torch.cat([bases, sem_output], dim=1) nb, _, height, width = image.shape names = model.names pooler_scale = model.pooler_scale pooler = ROIPooler(output_size=hyp['mask_resolution'], scales=(pooler_scale,), sampling_ratio=1, pooler_type='ROIAlignV2', canonical_level=2) output, output_mask, output_mask_score, output_ac, output_ab = non_max_suppression_mask_conf(inf_out, attn, bases, pooler, hyp, conf_thres=0.25, iou_thres=0.65, merge=False, mask_iou=None) pred, pred_masks = output[0], output_mask[0] base = bases[0] bboxes = Boxes(pred[:, :4]) original_pred_masks = pred_masks.view(-1, hyp['mask_resolution'], hyp['mask_resolution']) pred_masks = retry_if_cuda_oom(paste_masks_in_image)( original_pred_masks, bboxes, (height, width), threshold=0.5) pred_masks_np = pred_masks.detach().cpu().numpy() pred_cls = pred[:, 5].detach().cpu().numpy() pred_conf = pred[:, 4].detach().cpu().numpy() nimg = image[0].permute(1, 2, 0) * 255 nimg = nimg.cpu().numpy().astype(np.uint8) nimg = cv2.cvtColor(nimg, cv2.COLOR_RGB2BGR) nbboxes = bboxes.tensor.detach().cpu().numpy().astype(np.int) pnimg = nimg.copy() for one_mask, bbox, cls, conf in zip(pred_masks_np, nbboxes, pred_cls, pred_conf): if conf < 0.25: continue color = [np.random.randint(255), np.random.randint(255), np.random.randint(255)] pnimg[one_mask] = pnimg[one_mask] * 0.5 + np.array(color, dtype=np.uint8) * 0.5 pnimg = cv2.rectangle(pnimg, (bbox[0], bbox[1]), (bbox[2], bbox[3]), color, 2) #label = '%s %.3f' % (names[int(cls)], conf) #t_size = cv2.getTextSize(label, 0, fontScale=0.5, thickness=1)[0] #c2 = bbox[0] + t_size[0], bbox[1] - t_size[1] - 3 #pnimg = cv2.rectangle(pnimg, (bbox[0], bbox[1]), c2, color, -1, cv2.LINE_AA) # filled #pnimg = cv2.putText(pnimg, label, (bbox[0], bbox[1] - 2), 0, 0.5, [255, 255, 255], thickness=1, lineType=cv2.LINE_AA) cv2.imwrite("instance_result.jpg", pnimg)

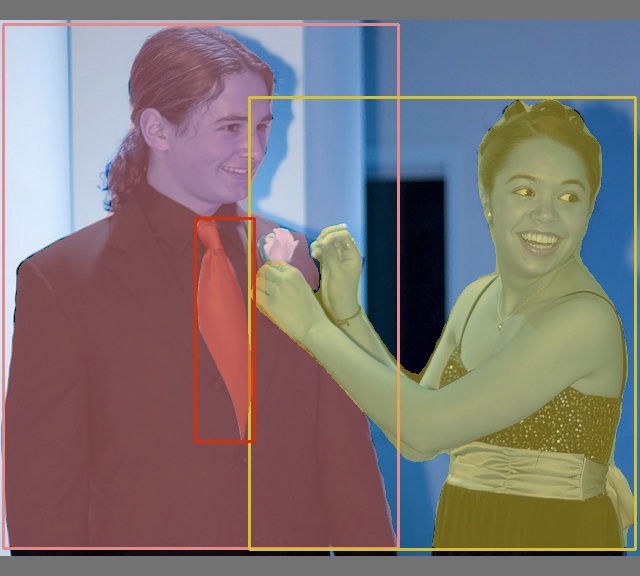

I found 2 test pictures to see the effect

Similarly, here is also a code for video file or camera detection

import torch import cv2 import yaml from torchvision import transforms import numpy as np from utils.datasets import letterbox from utils.general import non_max_suppression_mask_conf from detectron2.modeling.poolers import ROIPooler from detectron2.structures import Boxes from detectron2.utils.memory import retry_if_cuda_oom from detectron2.layers import paste_masks_in_image device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu") with open('data/hyp.scratch.mask.yaml') as f: hyp = yaml.load(f, Loader=yaml.FullLoader) weigths = torch.load('yolov7-mask.pt') model = weigths['model'] model = model.half().to(device) _ = model.eval() cap = cv2.VideoCapture('vehicle_test.mp4') if (cap.isOpened() == False): print('open failed.') exit(-1) # 分辨率frame_width = int(cap.get(3)) frame_height = int(cap.get(4)) # 图片缩放vid_write_image = letterbox(cap.read()[1], (frame_width), stride=64, auto=True)[0] resize_height, resize_width = vid_write_image.shape[:2] # 保存结果视频out = cv2.VideoWriter("result_instance.mp4", cv2.VideoWriter_fourcc(*'mp4v'), 30, (resize_width, resize_height)) while(cap.isOpened): flag, image = cap.read() if flag: image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB) image = letterbox(image, frame_width, stride=64, auto=True)[0] image_ = image.copy() image = transforms.ToTensor()(image) image = torch.tensor(np.array([image.numpy()])) image = image.to(device) image = image.half() with torch.no_grad(): output = model(image) inf_out, train_out, attn, mask_iou, bases, sem_output = output['test'], output['bbox_and_cls'], output['attn'], output['mask_iou'], output['bases'], output['sem'] bases = torch.cat([bases, sem_output], dim=1) nb, _, height, width = image.shape names = model.names pooler_scale = model.pooler_scale pooler = ROIPooler(output_size=hyp['mask_resolution'], scales=(pooler_scale,), sampling_ratio=1, pooler_type='ROIAlignV2', canonical_level=2) output, output_mask, output_mask_score, output_ac, output_ab = non_max_suppression_mask_conf(inf_out, attn, bases, pooler, hyp, conf_thres=0.25, iou_thres=0.65, merge=False, mask_iou=None) pred, pred_masks = output[0], output_mask[0] base = bases[0] bboxes = Boxes(pred[:, :4]) original_pred_masks = pred_masks.view(-1, hyp['mask_resolution'], hyp['mask_resolution']) pred_masks = retry_if_cuda_oom(paste_masks_in_image)( original_pred_masks, bboxes, (height, width), threshold=0.5) pred_masks_np = pred_masks.detach().cpu().numpy() pred_cls = pred[:, 5].detach().cpu().numpy() pred_conf = pred[:, 4].detach().cpu().numpy() nimg = image[0].permute(1, 2, 0) * 255 nimg = nimg.cpu().numpy().astype(np.uint8) nimg = cv2.cvtColor(nimg, cv2.COLOR_RGB2BGR) nbboxes = bboxes.tensor.detach().cpu().numpy().astype(np.int) pnimg = nimg.copy() for one_mask, bbox, cls, conf in zip(pred_masks_np, nbboxes, pred_cls, pred_conf): if conf < 0.25: continue color = [np.random.randint(255), np.random.randint(255), np.random.randint(255)] pnimg[one_mask] = pnimg[one_mask] * 0.5 + np.array(color, dtype=np.uint8) * 0.5 pnimg = cv2.rectangle(pnimg, (bbox[0], bbox[1]), (bbox[2], bbox[3]), color, 2) cv2.imshow('YOLOv7 mask', pnimg) out.write(pnimg) if cv2.waitKey(1) & 0xFF == ord('q'): break else: break cap.release() cv2.destroyAllWindows()

References

This article is reprinted from https://xugaoxiang.com/2022/08/15/yolov7-instance-segmentation/

This site is for inclusion only, and the copyright belongs to the original author.